Project: GPT2 Model trained on reddit titles – Andrew Huang

Motivation

After working in primarily image data for the semester, I thought it would be interesting to start working on text data for my final project. Initially I wanted to present my final thesis about vocal sentiment analysis, but I was not sure if IMA policy would allow me to do so, and also the contents of that may be outside the scope of this course, and not really in the spirit of IMA and more in the spirit of CS courses. After seeing a model on Hackernoon about GPT2 and how good it is at generating coherent titles from it’s own website, I thought about creating and training my own model so that I can generate my own titles. Because it is near graduation time, I have been spending a lot of my time on r/cscareerquestions looking at my (not) so hopeful future. I realized most of the threads have a common theme and I realized, what if a machine learning system can generalize and help me make my own titles? I also have tried a model called char-rnn in the past, and I have seen language models work decently with machine learning at text generation. So I decided after looking online about how great the GPT-2 Model made by OpenAI was at generating text with good context awareness, I decided to train my own model which was good at generating computer science oriented titles.

Process

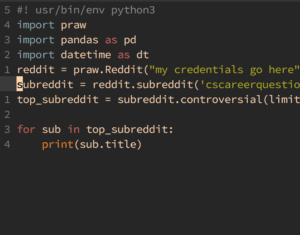

To start I extracted several thousand subreddit titles using a tool called praw. I did not have much trouble getting this code to work online for me, and all of the APIs were very clear to use.

With this I made a user application, and was able to get to get the top 1000 posts from all the respective categories, (top, controversial, etc). Once I had sufficient model, I loaded the code onto jupyter notebook from the local model initially. I discovered from the original author (Max Praw) that he had his model code in a Google Notebook called Colab. I found out that the compute performance on these notebook environments are very powerful and additionally I did not have to deal with awful dependency issues across different systems (NYUSH HPC vs INTEL Cluster) etc, so I decided to start training on there and the speed on those servers would be much faster than on both HPC and the intel cluster. I trained my dataset on the 117M parameter version of the model, as I thought that would not take too much time as the larger version of the model.

After 1000 epochs and an hour and a half of training, the model was trained. The notebooks that google offers have a Nvidia Tesla T4 GPU built for machine learning, so the models that were trained there trained very quickly.

Results

The results from my experiments were decent. I think I may have needed to get my titles from a more diverse pool of subreddit content, but because I wanted all of the generated titles to be from one central theme, I did not explore other options. As with training on all machine learning tasks, there is always that issue of “overfitting”. I believe I ran into this issue. I google’d a lot of the titles generated by the model and lot of them were direct copy of the titles that I had in the original source training set, so this is an indication of overfitting. However, it did a good job in creating coherent generation because none of the samples had cut off words or any malformed words.

Conclusion

The GPT2 Python Apis provide us users with a high level way to train an advanced language text model on any dataset with pretty good results. I would like to understand for myself in the future how GPT2 works on a deeper level, while working on my capstone project I did look into attention models and transformer, and I know that GPT2 is a variant of those models with a large number of model parameters, and the implications of this model are pretty good. I see a use of this as a good start for building a chatbot when you have a large corpus of human – human interactions for example in call centers when humans commonly resolve tasks that employees find extremely repetitive. These tasks can be automated away in favor of more productive tasks. Perhaps my GPT2 models can also be trained on the body of the posts, and I know that GPT2 models can be “seeded” so I feel a lazy user could input their own title and have a relevant user generated from it from key advice that it may wish to know, instead of using the search bar which may just use keyword matching and link it to irrelevant information. If I had more time, I would definitely make a frontend/backend using these weights and allow the user to use these prompts (consider it future work) In this use case, text generation could also be a kind of a search engine. Overall, this project was a success for me and taught me a lot about IML and how helpful interactive text generation can be for all sorts of different use cases, and how adaptive and robust these models can be.

For those who are interested the code is attached below :

https://colab.research.google.com/drive/1SvnOatUCJAhVU1OcUB1N3su3CViL0iam