Inspiration

Since the midterm project, I have been interested in how computers process human language and in the creative and practical applications of that processing. I have found many art projects that use machine learning to process language, as well as many articles describing how machine learning is used for language translation (I will include references at the end of this documentation). Because I had already worked with ML5.JS’s Word2Vec model, I wanted to work with text generation this time. My biggest goal for this project was to successfully train a model on my own.

Original Plan

I originally planned to train a text generation model on my own and run inference with it in JavaScript. I first tested Keras’ example text generation model, but the results it gave were nonsensical. Looking back, this may have been because my dataset was relatively small.

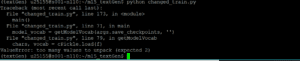

After this, I looked into many different models, but chose to go with ML5.JS because the model would automatically be converted to JavaScript. As I was still very unfamiliar with training models on Intel’s DevCloud, I spent about two weeks trying to get the model to train successfully. The first errors I received were in my bash script, and they occurred because I did not reference my data directory correctly. Once this was solved, there was another error involving a .pkl file that was created during the training phase. Aven helped me debug this: we reorganized my directories and modified the Python training script. Even after that, I was still receiving the same error (pictured below). Eventually, I found that the .pkl file was being created under a different name from the one referenced in the script. I changed every instance of the file name in the training script, and was finally able to train my model.

PKL File Error:

However, once the model was trained, I found that it could not be loaded for inference in JavaScript. Even though the model was saved in the correct folder, whenever I ran my JavaScript I got an “unexpected token < in JSON” error. I had Aven look at this error as well, and instead of using JavaScript to access the model, we tried running it with Python. This also gave us an error. Aven and I then did some research to see whether ML5 had any pre-trained models that could be used. Once I got access to the pre-trained models, I found that they returned the same error. After talking it over with Aven, we concluded that the error was somewhere in the backend of the ML5 code. Unfortunately, we only found this on Saturday, leaving me with two days to put together something else for the final.
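For context, “unexpected token <” is what JavaScript's JSON parser reports when the text it is given begins with `<`. That often means an HTML page (such as an error page) arrived where a JSON file was expected. The sketch below only reproduces that general situation. `parseModelFile` and the sample strings are hypothetical and are not part of ML5 or of this project's code, and the sketch does not establish what caused the error described above.

```javascript
// Hypothetical helper: tries to parse text as JSON and, if that fails,
// notes whether the text looks like HTML instead of JSON.
function parseModelFile(text) {
  try {
    return { ok: true, data: JSON.parse(text) };
  } catch (err) {
    // A leading "<" usually means markup (for example an HTML error page).
    const looksLikeHtml = text.trimStart().startsWith("<");
    return { ok: false, looksLikeHtml, message: err.message };
  }
}

// Made-up sample inputs for illustration only.
const htmlResponse = "<!DOCTYPE html><html><body>Not Found</body></html>";
const jsonResponse = '{"layers": 2}';

console.log(parseModelFile(htmlResponse)); // ok: false, looksLikeHtml: true
console.log(parseModelFile(jsonResponse)); // ok: true, data: { layers: 2 }
```

A check like this can help separate "the file is not JSON at all" from "the JSON is there but malformed" when a model file fails to load.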

Backup Plan

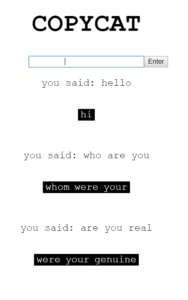

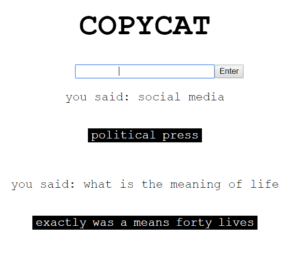

With the shortage of time in mind, I chose to work with ML5’s Word2Vec model once again. I chose it because I knew that it would function properly, and I believed I could get it to produce an outcome similar to what I had originally pictured when planning my project.

My new idea was to use Word2Vec to take each word of a user's input and find the closest word to it, then output the new words in place of the originals. The effect is similar to text generation, but I would say it is more akin to something I would call “machine poetry.” Overall, I am satisfied with the outcome given that I had to put it together in a day and a half, although for the same reason the user interface design is not exactly where I would like it to be. All other aspects aside, I accomplished my original goal: I trained a model (even though it could not be used for inference), and I did something with text generation.
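The word-by-word replacement described above can be sketched in plain JavaScript. This is a minimal illustration, not the project's actual code: the tiny `vectors` table, its numbers, and the function names `cosineSimilarity`, `nearestWord`, and `machinePoetry` are all made up for the example, and cosine similarity is assumed as the measure of closeness. In the real project the lookup comes from ML5's Word2Vec model rather than a hand-written table.

```javascript
// Toy 3-dimensional word vectors, invented for illustration only.
// A real Word2Vec model has many more words and dimensions.
const vectors = {
  sea:   [0.9, 0.1, 0.0],
  ocean: [0.8, 0.2, 0.1],
  sun:   [0.1, 0.9, 0.1],
  moon:  [0.2, 0.8, 0.3],
  sings: [0.1, 0.2, 0.9],
  hums:  [0.0, 0.3, 0.8],
};

// Cosine similarity: 1 means same direction, 0 means unrelated.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Find the closest *other* word in the table; unknown words pass through.
function nearestWord(word) {
  const target = vectors[word];
  if (!target) return word;
  let best = word;
  let bestScore = -Infinity;
  for (const [candidate, vec] of Object.entries(vectors)) {
    if (candidate === word) continue;
    const score = cosineSimilarity(target, vec);
    if (score > bestScore) {
      best = candidate;
      bestScore = score;
    }
  }
  return best;
}

// Replace every word of the input with its nearest neighbour.
function machinePoetry(input) {
  return input
    .toLowerCase()
    .split(/\s+/)
    .filter(Boolean)
    .map(nearestWord)
    .join(" ");
}

console.log(machinePoetry("the sea sings")); // prints "the ocean hums"
```

Words missing from the table (here, “the”) are left unchanged, which is one simple way to handle input the model does not know.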

Conclusion

Though I was not able to carry out my original plan for the final project, I learned many useful things along the way. Through the stages of this project, I learned how to use and customize bash scripts and how to set up datasets for training, and I gained more familiarity with Python (a language in which I have only three months’ worth of experience). I also found that my project can be used to demonstrate how machines process our language and the relationships within it. My end result may appear basic, and it does not fully show all the work I did along the way. That being said, this class has fostered my interest in machine learning and I am eager to learn more.

Interesting Projects & Articles: