Brief

For this assignment, I trained a set of CNN-based models on CIFAR-10. Throughout training, I tested the effect of different network architectures, dropout layers, batch sizes, and numbers of epochs. I also experimented with data augmentation.

Hardware

I used Google Cloud Platform, on an instance with a Tesla V4 GPU and a 4-core CPU.

Architecture

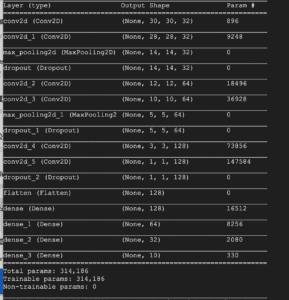

Above is a VGG-like architecture, which contains two successive conv layers, interleaved with dropout layers and followed by fully-connected layers. The table below shows how it performed.

| Epochs | Batch Size | Time | Accuracy | Test Accuracy |
| --- | --- | --- | --- | --- |
| 20 | 64 | 40s | 69% | 68% |
| 100 | 64 | 109s | 75% | 72% |
| 20 | 5 | 2200s | 80% | 75% |
| 20 (Augmented) | 64 | 40s | 70% | 71% |

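As we can see, the run with the smaller batch size (5) reached higher accuracy than the runs with batch size 64, although it took far longer to train (2200s versus 40s). More epochs also helped: going from 20 to 100 epochs raised accuracy from 69% to 75%. However, once training reaches a stable point (a plateau), adding more epochs does not always increase accuracy.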
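One likely reason the batch-size-5 run in the table is so much slower is the number of weight updates per epoch. The sketch below works through that arithmetic. It assumes the full 50,000-image CIFAR-10 training split is used every epoch, which the write-up does not state; `steps_per_epoch` is a hypothetical helper name.

```python
import math

# Assumption: every epoch iterates over the full CIFAR-10 training split.
TRAIN_IMAGES = 50_000


def steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of weight updates in one epoch, counting a final partial batch."""
    return math.ceil(num_images / batch_size)


for batch_size in (64, 5):
    steps = steps_per_epoch(TRAIN_IMAGES, batch_size)
    print(f"batch size {batch_size:>2}: {steps} updates per epoch")
```

Under that assumption, batch size 5 needs roughly 13 times as many updates per epoch as batch size 64. The measured slowdown is larger than that, which would be consistent with small batches also using the GPU less efficiently.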

I also tried other architectures, such as a network with only two conv layers, which gave results similar to the VGG-like one. This is likely related to the relatively small set of features.
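To get a rough sense of how the two kinds of network compare in size, the sketch below counts trainable parameters for a VGG-like stack and for a two-conv network. The layer widths (32 and 64 channels, one 512-unit dense layer), the 3x3 "same" convolutions, and the 2x2 pooling after each block are all assumptions made for illustration, not the configurations used in the experiments.

```python
def conv_params(in_ch: int, out_ch: int, kernel: int = 3) -> int:
    """Weights plus biases of one 2D convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of one fully-connected layer."""
    return in_features * out_features + out_features


def count_params(conv_blocks, dense_units, image_size=32, in_ch=3, num_classes=10):
    """Count parameters of a CNN built from conv blocks and dense layers.

    conv_blocks: list of blocks, each a list of output channel counts.
    Assumes 'same' padding, so only the 2x2 pooling after each block
    halves the spatial size. Dropout layers add no parameters.
    """
    total, size, channels = 0, image_size, in_ch
    for block in conv_blocks:
        for out_ch in block:
            total += conv_params(channels, out_ch)
            channels = out_ch
        size //= 2  # 2x2 max pooling after each block
    features = size * size * channels  # flattened feature vector
    for units in dense_units:
        total += dense_params(features, units)
        features = units
    total += dense_params(features, num_classes)  # final classifier layer
    return total


# Hypothetical widths, chosen only to illustrate the comparison.
vgg_like = count_params([[32, 32], [64, 64]], dense_units=[512])
two_conv = count_params([[32, 32]], dense_units=[512])
print(f"VGG-like: {vgg_like:,} parameters")
print(f"two-conv: {two_conv:,} parameters")
```

With these particular widths, most parameters sit in the first dense layer, so the shallower network is not necessarily the smaller one.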

In addition, the large drop from training accuracy to test accuracy in the third run (80% to 75%) is mainly due to the small batch size, which makes it harder for the model to generalize.
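The drop can be read directly off the results table as the difference between the two accuracy columns. The short sketch below computes it for each run; it assumes the "Accuracy" column is training accuracy, and the run labels are made up for readability.

```python
# (label, accuracy %, test accuracy %) copied from the results table.
# Assumption: the "Accuracy" column is training accuracy.
runs = [
    ("20 epochs, batch 64", 69, 68),
    ("100 epochs, batch 64", 75, 72),
    ("20 epochs, batch 5", 80, 75),
    ("20 epochs, batch 64, augmented", 70, 71),
]


def generalization_gap(train_acc: int, test_acc: int) -> int:
    """Training accuracy minus test accuracy, in percentage points."""
    return train_acc - test_acc


gaps = {label: generalization_gap(train, test) for label, train, test in runs}
for label, gap in gaps.items():
    print(f"{label}: gap = {gap:+d} points")

# The run with the largest gap is the one that generalizes worst.
largest = max(gaps, key=gaps.get)
print("largest gap:", largest)
```

The augmented run is the only one where test accuracy is at or above the other accuracy column, giving a negative gap.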