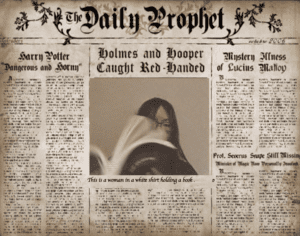

Background

When I was young, I was fascinated by the magical world J.K. Rowling created in Harry Potter. She invented so many bizarre objects for that world, and I still find many of them remarkable today. “The Daily Prophet”, the newspaper of the Harry Potter world, is the main inspiration for my final project. The Daily Prophet is a printed newspaper enchanted so that the pictures on its pages appear to move. It inspired me to create an interactive newspaper with an “AI editor”. Not only does the image on the newspaper update every second according to the video captured by the webcam, but the passage on the page also changes according to that image. As a result, the newspaper appears to be writing its reports on its own. In short, for my final project I created a “magic” newspaper with the help of an “AI editor”.

Motivation

I’ve always thought of the newspaper as a form of artwork. Even though fewer and fewer people today take the effort to read the news in print, the design of such an elegant means of spreading news and information still fascinates me. This is the first reason I wanted to develop a newspaper-related project. The second reason is that even today, when social media lets new information spread almost every second, it still takes people behind the screen to type, collect and post the news. It occurred to me that if an AI editor could document, write and edit the news for us, a newspaper could report information even closer to real time. So I wanted to create an interactive, self-editing newspaper in which an AI writes news about the actions of the people it sees, generating the sentences on its own. A newspaper that writes and edits itself seems a little magical, so it fits well with the Harry Potter Daily Prophet theme I wanted to pursue.

Methodology

The machine learning techniques behind this project are style transfer and image captioning. To make the webcam video blend naturally into the newspaper, I trained several style transfer models on Colfax that restyle the webcam video to match the color and theme of the Daily Prophet background image I chose. At first I thought I could only train the style transfer models one at a time, but I later found that I could train several models simultaneously by creating new checkpoints and models for each one, which saved a lot of time. Then, to make the newspaper generate words on its own based on the webcam image, I used the im2txt model from Runway, which Aven recommended to me. The sample code Aven shared lets p5 send the canvas data to Runway. Runway then captions the image, generates sentences as results and sends them back to p5. Even though the model doesn’t always give the most accurate result, I still find both the im2txt model and Runway amazing and helpful. Technically, this project is the combination of the style transfer model and the im2txt model.
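
The p5-to-Runway round trip described above is essentially a request loop. A frame is captured, sent off, a caption comes back and is drawn. Below is a minimal sketch of such a loop, not the project's actual code. It assumes the captioner is any async function from an image data URL to a sentence. The real request to Runway's im2txt model is deliberately left out, and `Captioner`, `CaptionLoop` and `submitFrame` are hypothetical names. The design choice shown keeps at most one request in flight and remembers only the newest waiting frame, so a slow model never builds up a backlog of stale frames.

```typescript
// Hypothetical signature: any async function that turns an image (as a data URL)
// into a caption. In the project this role is played by Runway's im2txt model;
// the actual network request is not shown here.
type Captioner = (imageDataUrl: string) => Promise<string>;

class CaptionLoop {
  private readonly captioner: Captioner;
  private busy = false;                  // true while a caption request is in flight
  private pending: string | null = null; // newest frame that arrived while busy
  latestCaption = "";                    // text the newspaper should currently show

  constructor(captioner: Captioner) {
    this.captioner = captioner;
  }

  // Call once per captured frame (for example from p5's draw loop).
  submitFrame(imageDataUrl: string): Promise<void> {
    if (this.busy) {
      // Keep only the most recent frame; older unsent frames are dropped.
      this.pending = imageDataUrl;
      return Promise.resolve();
    }
    return this.run(imageDataUrl);
  }

  private async run(imageDataUrl: string): Promise<void> {
    this.busy = true;
    try {
      this.latestCaption = await this.captioner(imageDataUrl);
    } finally {
      this.busy = false;
    }
    // If frames arrived while waiting, caption the newest one next.
    if (this.pending !== null) {
      const next = this.pending;
      this.pending = null;
      await this.run(next);
    }
  }
}
```

In a p5 sketch, the frames passed to `submitFrame` would be data URLs taken from a canvas element, and the captioner would wrap whatever request the Runway sample code makes.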

Experiment

When I first connected the style transfer model to the im2txt model, the outcome wasn’t promising at all. Even though Runway did generate new sentences and send them to p5, the words weren’t very relevant to the webcam image. When I looked into the reason, I found that Runway was receiving the data of the whole canvas. I tried to send only the webcam video data to Runway, but the video element doesn’t support the “.toDataURL” function, which is a method of canvas elements. So I moved the newspaper background outside the canvas and left only the webcam image on it, so that the only data Runway processed was the webcam image. This greatly improved the captions, and the words became much more relevant to the users’ webcam image. However, the results still weren’t perfect. The webcam data Runway received was the style-transferred image, and the model’s captioning ability is still somewhat limited, so it often makes mistakes when describing the image.
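
Moving the background out of the canvas is one fix. Another option, not the one used in this project, would be to keep everything on one canvas and crop out just the webcam rectangle before it is sent. In a browser, `CanvasRenderingContext2D.getImageData(x, y, width, height)` already does this crop on the canvas side. The sketch below only makes the indexing explicit, assuming the canvas pixels are available as a flat, row-major RGBA array (four bytes per pixel, the layout of `ImageData.data`). `cropRGBA` and `Rect` are hypothetical names, and the rectangle would be wherever the webcam image happens to sit.

```typescript
// A rectangle in canvas pixel coordinates (hypothetical: where the webcam
// image sits inside the larger newspaper canvas).
interface Rect { x: number; y: number; width: number; height: number; }

// Copy the pixels inside `rect` out of a row-major RGBA buffer that is
// `canvasWidth` pixels wide. Each pixel occupies 4 bytes: R, G, B, A.
function cropRGBA(
  pixels: Uint8ClampedArray,
  canvasWidth: number,
  rect: Rect,
): Uint8ClampedArray {
  const canvasHeight = pixels.length / (4 * canvasWidth);
  if (rect.x < 0 || rect.y < 0 ||
      rect.x + rect.width > canvasWidth || rect.y + rect.height > canvasHeight) {
    throw new RangeError("crop rectangle lies outside the canvas");
  }
  const out = new Uint8ClampedArray(rect.width * rect.height * 4);
  for (let row = 0; row < rect.height; row++) {
    // Byte offset of the rectangle's first pixel in this source row.
    const srcStart = ((rect.y + row) * canvasWidth + rect.x) * 4;
    const srcEnd = srcStart + rect.width * 4;
    // Copy the whole row segment at once into its place in the output.
    out.set(pixels.subarray(srcStart, srcEnd), row * rect.width * 4);
  }
  return out;
}
```

The cropped pixels would still need to be drawn onto a small canvas before they could be encoded with `toDataURL`, since that method belongs to canvas elements.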

Social Impact

This project is designed to be an interactive and fun way to show people how AI technology can make “magic” come true. When I presented this project at the IMA show, many people excitedly asked me about the techniques behind it after interacting with it. As I introduced the models I used, they all showed great interest in trying them themselves and in learning to apply AI techniques to design artistic or entertaining products. I hope this project can show people the potential of AI in various fields and encourage them to learn more about machine learning techniques and their applications. In addition, I think this is a nice attempt at using AI as the self-editor of a newspaper.

Demo

https://drive.google.com/file/d/1P2aXo6lBLXIUWQU_GJb2ahmrlTw9xVG6/view?usp=sharing

Further Development

One piece of feedback I got from both the critics during the presentation and guests at the IMA show is that I could make the output more obvious. During the IMA show, I sometimes had to point out to people what was changing besides the image on the newspaper. So there are two things I can try to improve this project. First, I can try Moon’s idea of making the caption the headline of the newspaper and adjusting the results to something like “WOW!!! THIS IS A WOMAN SITTING ON A CHAIR!!!” to make the change more obvious. Second, I can try live-writing a long paragraph, where each new sentence is appended after the previous ones so that the newspaper looks like it is writing a paragraph one sentence at a time. I think both changes would look pretty cool and could help users interact with this project better.
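
Both ideas mostly come down to post-processing the caption text. Here is a minimal sketch of each, assuming captions arrive as plain lowercase sentences such as “a woman sitting on a chair”. `toHeadline`, `LiveParagraph` and its `maxSentences` limit are hypothetical, not part of the project. The paragraph skips a caption that repeats the previous sentence, and it drops the oldest sentences once the limit is reached so the column doesn’t overflow.

```typescript
// Turn a raw caption into the shouted headline style from the feedback,
// e.g. "a woman sitting on a chair" -> "WOW!!! THIS IS A WOMAN SITTING ON A CHAIR!!!".
function toHeadline(caption: string): string {
  // Remove surrounding whitespace and any trailing period(s).
  const core = caption.trim().replace(/\.+$/, "");
  return `WOW!!! THIS IS ${core.toUpperCase()}!!!`;
}

// Build up a running paragraph from successive captions, one sentence at a time.
class LiveParagraph {
  private sentences: string[] = [];
  private readonly maxSentences: number;

  constructor(maxSentences: number) {
    this.maxSentences = maxSentences;
  }

  // Append a caption as a capitalized sentence unless it repeats the last one.
  add(caption: string): void {
    const core = caption.trim().replace(/\.+$/, "");
    if (core.length === 0) return;
    const sentence = core.charAt(0).toUpperCase() + core.slice(1) + ".";
    if (this.sentences[this.sentences.length - 1] === sentence) return;
    this.sentences.push(sentence);
    // Hypothetical length limit: drop the oldest sentence first.
    if (this.sentences.length > this.maxSentences) {
      this.sentences.shift();
    }
  }

  text(): string {
    return this.sentences.join(" ");
  }
}
```

For example, `toHeadline("a woman sitting on a chair")` returns `"WOW!!! THIS IS A WOMAN SITTING ON A CHAIR!!!"`. Each caption returned by the model could also be passed to `LiveParagraph.add` so the article grows sentence by sentence.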