Background

The direction of my project changed from the original concept I had in mind. Originally I wanted to do a project juxtaposing the lifespans of the user (human) and surrounding objects. Upon going through the ImageNet labels, though, I realized that there was no general label for a human, and that the model had not been trained to recognize people as such. There were a few human-related labels (scuba diver, bridegroom/groom, baseball player/ball player, nipple, harvester/reaper), but these rarely show up in the ml5.js image classifier results, even when it is given an image of a human. Because of this, it would be impossible to proceed with my original idea without drastically restructuring my plan.

I had seen another project called I Will Not Forget (https://aitold.me/portfolio/i-will-not-forget/) that first shows a neural network imagining a person, then shows what happens when its neurons are turned off one by one. I’m not sure exactly how this works, but I like the idea of using what is already happening in the neural network to make an art piece without manipulating it too heavily. In combination with my ImageNet issue, this made me wonder what a machine (specifically through ImageNet and the ml5.js models) thinks a human is. If it could deconstruct and reconstruct a human body, how would it do it? What would that look like? For my new project, which I would like to continue working on for my final as well, I want to create images of humans based on how different body parts are classified with ImageNet.

New Steps

- Use BodyPix with the Image Classifier on a live feed to isolate the entire body from the background, then classify it (done)

- Use BodyPix on a live feed to segment the human body into different parts (done)

- Use BodyPix with the Image Classifier on a live feed to isolate those segmented parts, then classify each one (in progress)

- Conduct testing and collect classifications from more people to build a larger pool of classified data for each body part (to do)

- Use this data to create images of reconstructed “humans” (the method is still vague; I am still looking into ways of doing this) (to do)
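
One way the data collection step could be organized is sketched below. This is only a rough idea, not code from the project: the record shape (`part`, `label`, `confidence`), the part names, the labels, and both helper functions are hypothetical. The only thing borrowed from ml5.js is that each classification result carries a label and a confidence.

```javascript
// Hypothetical helper: counts how often each label was assigned to each body part.
// The record shape { part, label, confidence } is an assumption for illustration.
function tallyLabelsByPart(records, minConfidence = 0) {
  const tally = {};
  for (const { part, label, confidence } of records) {
    if (confidence < minConfidence) continue; // skip low-certainty guesses
    if (!tally[part]) tally[part] = {};
    tally[part][label] = (tally[part][label] || 0) + 1;
  }
  return tally;
}

// Hypothetical helper: most frequent label for one part, or null if none was recorded.
function mostCommonLabel(tally, part) {
  const counts = tally[part];
  if (!counts) return null;
  let best = null;
  for (const label of Object.keys(counts)) {
    if (best === null || counts[label] > counts[best]) best = label;
  }
  return best;
}

// Made-up placeholder records, not real results from the classifier.
const sampleRecords = [
  { part: "torso", label: "labelA", confidence: 0.5 },
  { part: "torso", label: "labelB", confidence: 0.4 },
  { part: "torso", label: "labelA", confidence: 0.3 },
];
const sampleTally = tallyLabelsByPart(sampleRecords, 0.1);
console.log(mostCommonLabel(sampleTally, "torso")); // prints "labelA"
```

Keeping raw counts per part, rather than only the top label, would leave room to decide later how the reconstructed images should use the data.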

Research

I first experimented to get a clearer idea of what I, as a human, was being classified as.

Here I use my phone as well to show that the regular webcam/live feed image classifier is unfocused and uncertain. Not only was it classifying everything in the frame rather than just me, but its certainty was also relatively low (19% or 24%).

In the ml5.js reference page I found BodyPix and decided to try that to isolate the human body from the image.

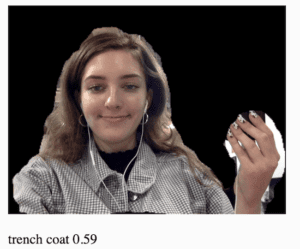

This not only isolated the body, but also more than doubled the certainty. To get more certain classifications for individual body parts, I think it would be necessary to at least separate the body from the background.

With BodyPix, you can also segment the body into 24 parts. This also works with live feed, though there’s a bit of a lag.

Again, to get readings for specific parts while cutting out background noise, BodyPix part segmentation would need to be used. The next step would be to show only one or two segments of the body at a time while blacking out the rest of the frame. This leads into my difficulties.

Difficulties

I’ve been stuck on the same problem for a few days now, trying to work out the code in different ways. Tristan was helping me with it last week, and since we have different areas of knowledge (he understands it at a lower level than I do), that was very helpful. Still, we couldn’t fully figure out how to isolate one or two parts and black out the rest. For now we know that the image is broken down into an array of pixels, each of which is assigned a number that corresponds to a specific body part (0-23).
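
Based on that description, one possible approach is sketched below. It is a sketch under assumptions, not a working solution for the project: `isolateParts` is a hypothetical function, it assumes the part IDs come as one number per pixel with -1 marking the background, and it assumes the image is a flat array with four values (R, G, B, A) per pixel, like a canvas `ImageData.data` array. How to get those two arrays out of the BodyPix result is not covered here.

```javascript
// Sketch: keep only the chosen body parts and black out everything else.
// partIds: one part ID per pixel (assumed 0-23, with -1 for background).
// rgba: flat pixel array with 4 values (R, G, B, A) per pixel.
// keepParts: array of part IDs that should stay visible.
function isolateParts(partIds, rgba, keepParts) {
  if (rgba.length !== partIds.length * 4) {
    throw new Error("rgba must hold 4 values for every part ID");
  }
  const keep = new Set(keepParts);
  const out = new Uint8ClampedArray(rgba); // copy, so the source frame is untouched
  for (let i = 0; i < partIds.length; i++) {
    if (!keep.has(partIds[i])) {
      const p = i * 4; // index of this pixel's red value
      out[p] = 0;       // R
      out[p + 1] = 0;   // G
      out[p + 2] = 0;   // B
      out[p + 3] = 255; // A: opaque black
    }
  }
  return out;
}
```

The returned array has the same layout as the input, so it could be written back to a canvas and then passed to the classifier. Because the selection is just a list of IDs, showing one part or two parts at a time is only a change to `keepParts`.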

Conclusion

I have a lot more work to do on this project, but I like this idea and am excited to see the results that come from it. I don’t have concrete expectations for what it will look like, but I think it will ultimately depend on what I use to create the final constructed images.