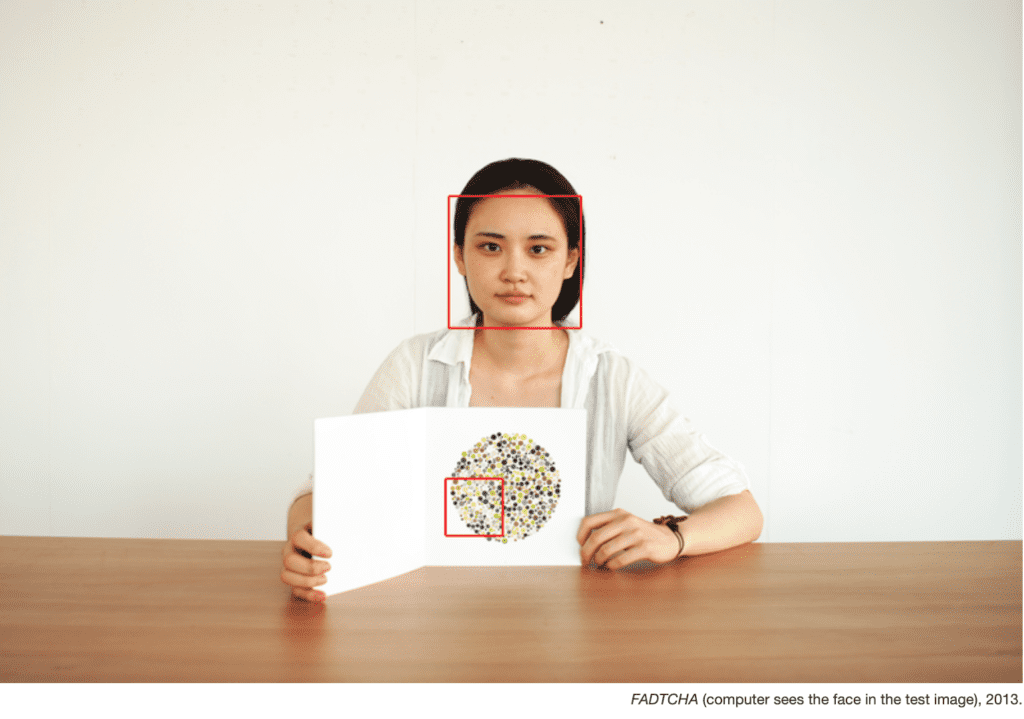

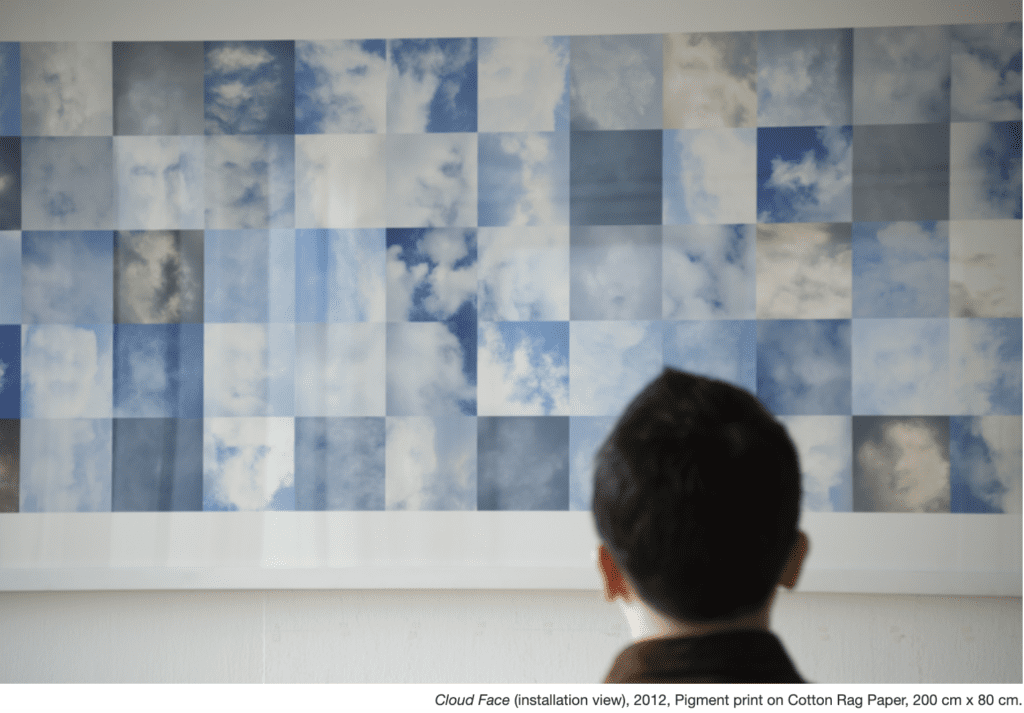

For my final project, I am focusing on the theme of human vs. computer perception. This is something I’ve tried to explore through my midterm concept and initial plan of reconstructing humans from image classification of parts. When I talked with Aven, I realized there were other, less convoluted ways of investigating this that would allow the work of the computer to stand out more. He showed me the examples from the duo Shinseungback Kimyonghun that also follow these ideas; specifically I was more inspired by the works FADTCHA (2013) and Cloud Face (2012), which both involve finding human forms in nonhuman objects.

These works both show the difference in which a face detection algorithm can detect human faces, but humans cannot. Whether or not it’s because CAPTCHA images are very abstract, and whether or not it’s because the clouds are fleeting doesn’t matter; this difference is still exposed.

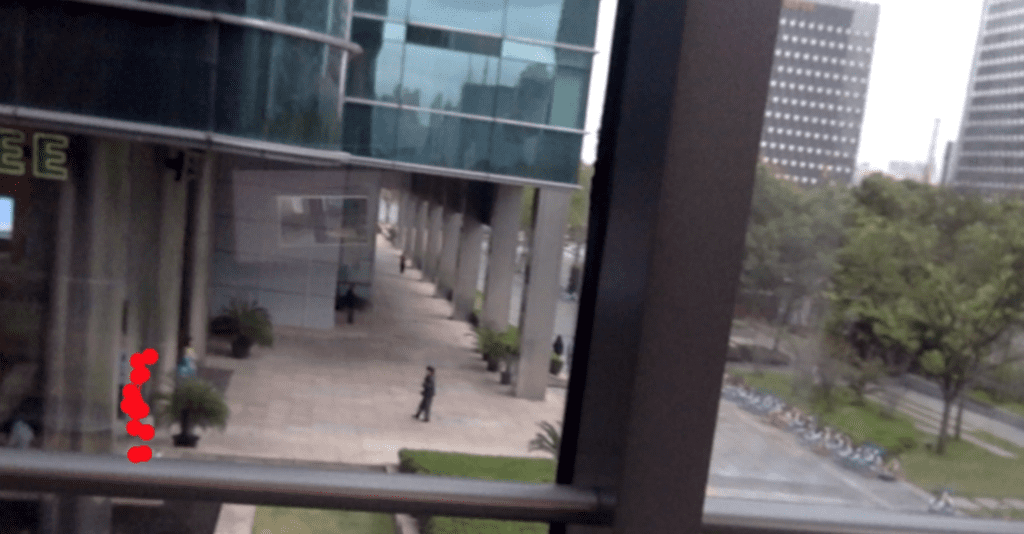

I wanted to continue with this concept by using a human body-detecting algorithm to find human forms in different spaces where we cannot see them. Because I’m most familiar/comfortable with the ml5.js example resources, I started by using BodyPix to do some initial tests, which was interesting as far as seeing what parts of buildings are seen as body segments, but it’s not a clear idea. Then I tried using PoseNet to see where points of the body could be detected.

This was a little more helpful, but still has a lot of flaws. These two images were the shots where the highest number of body points could be detected (other shots had anywhere from 1-4 points, but no similar shape to human body), but still this doesn’t seem concrete enough to use as data. I plan on using a different method for body detection—as well as a better quality camera—to continue working toward the final results.