GITHUB: https://github.com/NHibiki-NYU/AIArts-Mojisaic

INTRO

Mojisaic is a WeChat mini-app that can be launched directly in WeChat from a QR code or a link. A WeChat mini-app is essentially a hybrid app: it displays a webpage but has better performance and higher permissions than an ordinary web page. With the help of the mini-app framework, the ‘web’ page starts in a flash and acts like a normal app. With TensorFlow.js, a Keras model can be converted and loaded on the web client, so we keep the power of HTML/CSS/JS as well as the magic of machine learning.

PROCEDURES

1. Train the model

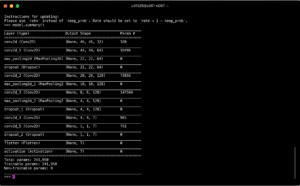

First, I used a VGG-like structure to build a model that detects the emotion on a person’s face.

Then, I found a dataset on Kaggle, a website for collecting and sharing data.

The dataset contains 28,709 samples in its training set and 3,589 in its validation set and testing set. Each sample consists of a 48x48x1 face image and its labelled emotion. There are 7 emotion labels in total: Angry, Disgust, Fear, Happy, Sad, Surprise, and Neutral. With the help of this dataset, I started the training on Intel DevCloud.

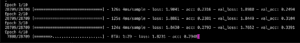

I ran the training for 100 epochs and reached 60% accuracy on emotion classification. According to the author of the previous model, the accuracy of the training result lies between 53% and 58%, so 60% is not a bad outcome.

2. Convert the model

Since I want my model to run in JavaScript, I cannot use the model trained with Python/Keras directly. There is a tutorial on the TensorFlow website about how to convert a Keras model to the TensorFlow.js format:

https://www.tensorflow.org/js/tutorials/conversion/import_keras

After I converted the Keras model to a TensorFlow.js model, I created a webpage and tried to run the model on the web first, before moving on to the WeChat mini-app.
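Running the converted model in the browser means feeding it the same 48x48x1 grayscale input it was trained on. The snippet below is a minimal sketch of that preprocessing step, not the project's actual code. The function name `toModelInput`, the nearest-neighbour resize, and the scaling of pixel values to [0, 1] are all assumptions made for illustration; the scaling in particular has to match whatever was used during training.

```javascript
// Assumption: `rgba` is RGBA pixel data laid out like the `data` field of a
// canvas ImageData object (4 bytes per pixel, row by row).
const INPUT_SIZE = 48; // the model expects 48x48x1 face images

function toModelInput(rgba, width, height) {
  const out = new Float32Array(INPUT_SIZE * INPUT_SIZE);
  for (let y = 0; y < INPUT_SIZE; y++) {
    // Nearest-neighbour resize: pick the source row closest to this output row.
    const srcY = Math.min(height - 1, Math.floor(((y + 0.5) * height) / INPUT_SIZE));
    for (let x = 0; x < INPUT_SIZE; x++) {
      const srcX = Math.min(width - 1, Math.floor(((x + 0.5) * width) / INPUT_SIZE));
      const i = (srcY * width + srcX) * 4;
      // Convert RGB to a single luminance channel; the alpha byte is ignored.
      const gray = 0.299 * rgba[i] + 0.587 * rgba[i + 1] + 0.114 * rgba[i + 2];
      // Assumed normalisation to [0, 1]; adjust to match the training pipeline.
      out[y * INPUT_SIZE + x] = gray / 255;
    }
  }
  return out;
}
```

With TensorFlow.js loaded, the resulting array could then be wrapped as a batch of one image, for example with `tf.tensor4d(input, [1, 48, 48, 1])`, before calling the model.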

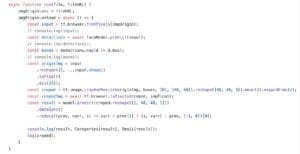

I wrote a script that detects the emotion of the people in an image the user uploads through the web interface and prints out the emoji for that emotion.
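The last step of such a script, turning the model's 7 output scores into an emoji, can be sketched as follows. This is an illustrative sketch only: it assumes the output indices follow the order in which the dataset's labels are listed above, and the specific emoji and the helper name `pickEmotion` are my own choices, not taken from the project.

```javascript
// Assumption: class index i corresponds to EMOTIONS[i] in this order.
const EMOTIONS = ['Angry', 'Disgust', 'Fear', 'Happy', 'Sad', 'Surprise', 'Neutral'];
// Illustrative emoji choices, one per emotion.
const EMOJIS = ['😠', '🤢', '😨', '😄', '😢', '😲', '😐'];

function pickEmotion(probabilities) {
  if (probabilities.length !== EMOTIONS.length) {
    throw new Error(`expected ${EMOTIONS.length} scores, got ${probabilities.length}`);
  }
  // Argmax: find the index of the highest score.
  let best = 0;
  for (let i = 1; i < probabilities.length; i++) {
    if (probabilities[i] > probabilities[best]) best = i;
  }
  return { label: EMOTIONS[best], emoji: EMOJIS[best], score: probabilities[best] };
}
```

The `probabilities` argument can be a plain array or a typed array, such as the values read back from a model prediction.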

3. Journey to WeChat mini-app

As I mentioned previously, a WeChat mini-app is built with JavaScript, so ideally TensorFlow.js can be used in the app. However, the JavaScript engine is specially customized by the WeChat developers, so the library cannot use fetch or createOffScreenCanvas inside a mini-app. Fortunately, a third-party library on GitHub helped in this case.

https://github.com/tensorflow/tfjs-wechat

It is a plugin for mini-apps that overrides some interfaces in TensorFlow.js so that the library behaves normally (although not perfectly). In the WeChat mini-app, I use the camera to detect the user’s face in real time and place an emoji directly on the face. By hitting the shot button, the user can save the image or send it to friends.

OBSTACLES

There were two main obstacles when I made this project.

First, the old model conversion problem. To extract faces from a large image, I use a library called faceapi and run its built-in model to locate faces. However, the WeChat TensorFlow.js plugin does not support faceapi, so I had to either convert its model to a normal TensorFlow.js model or modify the code directly to make it support the WeChat API. After countless failures converting the old TensorFlow layer models, I surrendered and chose to modify the faceapi source code. (Although it took me nearly 3 days to do that…)

Second, the TensorFlow.js plugin for WeChat mini-apps is not perfect: it does not support workers in its context, so the whole computation has to be done in the main thread. (That’s so exciting and scary…) A WeChat mini-app does run on 2 threads, one for rendering and the other for logic, but the rendering thread does almost nothing here, because there are no data-binding updates during prediction. As a result, all the computational work (at 30 fps, about 30 face detections and emotion classifications per second) makes WeChat crash (and the shot button stops responding). The temporary workaround is to reduce the number of predictions per second: I only run face detection every 3 frames and emotion classification every 15 frames, so that the app appears responsive.
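The frame-skipping workaround can be sketched roughly like this. It is a simplified illustration under stated assumptions, not the code from the repository: `createFrameScheduler`, `detectFaces`, and `classifyEmotions` are hypothetical names, and both callbacks are assumed to be synchronous.

```javascript
const DETECT_EVERY = 3;    // run face detection on every 3rd frame
const CLASSIFY_EVERY = 15; // run emotion classification on every 15th frame

// `detectFaces(frame)` and `classifyEmotions(frame, faces)` are hypothetical
// callbacks standing in for the real face detector and emotion model.
function createFrameScheduler(detectFaces, classifyEmotions) {
  let frameCount = 0;
  let faces = [];    // most recent detection result, reused between runs
  let emotions = []; // most recent classification result, reused between runs

  return function onFrame(frame) {
    if (frameCount % DETECT_EVERY === 0) {
      faces = detectFaces(frame);
    }
    if (frameCount % CLASSIFY_EVERY === 0) {
      emotions = classifyEmotions(frame, faces);
    }
    frameCount++;
    // Skipped frames return the cached results, so the overlay can still be
    // drawn on every frame without running the models.
    return { faces, emotions };
  };
}
```

Because 15 is a multiple of 3, every classification frame in this sketch is also a detection frame, so the classifier always receives face positions computed from the same frame. At 30 fps this comes to about 10 detections and 2 classifications per second.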