INTRODUCTION

This week’s assignment is to tr

ain a CIFAR-10 CNN ourself by using tensorflow(keras) with its built-in CIFAR-10 dataset downloader. In this experiment, I mainly tested the settings of batch size, as well as the optimizers to explore how these factors related to the changes in the training result.

MACHINE

The machine I used is Azure Cloud Computing Cluster (Ubuntu 18.04):

Intel(R) Xeon(R) CPU E5-2673 v4 @ 2.30GHzx28GB Memory4.15.0-1055 Kernel

NETWORK LAYOUTS

The network has the following architecture:

2DConv -> 2DConv -> 2DMaxPooling -> Dropout ->

2DConv -> 2DConv -> 2DMaxPooling -> Dropout ->

Flatten -> FullyConnected -> Dropout -> FullyConnected -> Dropout

The architecture looks good to me, it has 4 convolutional layers to extract features from the source images, plus using dropout to question layers in order to find the exact feature point of each type of picture.

EXPERIMENTS

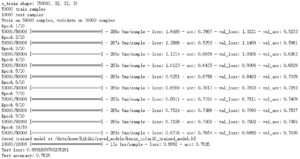

Firstly, I tried to modify the batch size to 64 (the default 1024 is so scary…), and I get the following training outcomes:

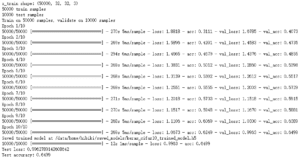

The loss is mostly above 1, and the final accuracy is not good enough, so I again narrowed down the scale of batch size to 32, the result as followed was better than the first trial:

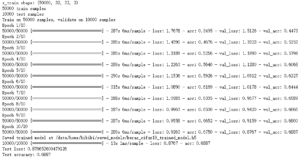

Now, the accuracy is 4% better than the previous one. So, it gives me a question: is 32 batch size small enough to get a good answer? Next, I repeatitive divided the batch size by 2 to 16 to test if it is true that small batch size does a better job in this scenario:

The outcome is positive, the accuracy is above 70% this time. So, we assume that smaller batch size can accordingly improve accuracy at this point. (But it cannot be always small. We can imagine that 0 batch size contributes nothing…)

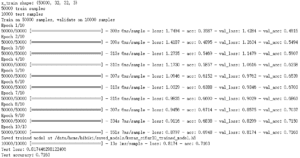

The second experiment is to explore whether RMSprop is a proper optimizer in this scenario. So I use a 32 batch size, 10 epoch of training with 2 different optimizers – RMSprop and Adam(lr=0.0002, beta_1=0.5).

During testing, we superisingly found that Adam optimizer is much better than the previous one in this network architecture. It reaches 70% accuracy only when it steps to the 5th epoch.

![]()

And the overall accuracy after 10 epoches of training gets 0.7626.