For this week’s assignment I trained the CIFAR-10 CNN while varying the number of epochs and the dropout rates. I trained on the CPU, as that worked best with my computer’s specs:

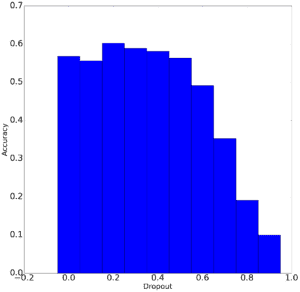

For the experiment I was interested in how dropout rates affect accuracy. The article linked in the class slides describes the relationship as a curve: accuracy is higher at first, while most of the neurons are still being used, but it drops off steeply as the ratio of neurons kept nears 0.2.

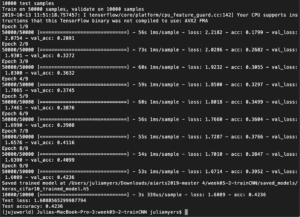
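To make the idea of a dropout rate concrete, here is a minimal sketch of "inverted" dropout in plain Python. It is an illustration only, not the code from the assignment: the function name `apply_dropout` and the toy activations are hypothetical, and a real framework applies the same idea to whole tensors.

```python
import random


def apply_dropout(activations, rate, rng):
    """Zero each activation with probability `rate` (hypothetical helper).

    Survivors are scaled by 1 / (1 - rate) so the expected sum stays the
    same, which is the usual "inverted dropout" convention.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 10_000  # toy layer output, all ones

for rate in (0.25, 0.5, 0.9):
    out = apply_dropout(activations, rate, rng)
    kept = sum(1 for v in out if v != 0.0) / len(out)
    print(f"rate={rate:.2f}  fraction of neurons kept={kept:.3f}")
```

The fraction kept comes out close to 1 minus the rate, so a rate of 0.9 leaves only about a tenth of the neurons active on any training step.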

To test this, I first reduced the number of epochs from 100 to 9 so the experiments would run faster. With 9 epochs and no other changes, training took 8 minutes 48 seconds and reached an accuracy of 0.4236. The original dropout rates of 0.25, 0.25 and 0.5 fall within the range that gives the highest accuracy.

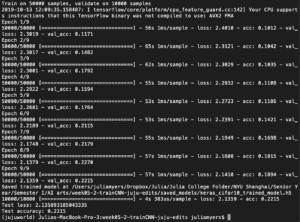

Next, I raised the dropout rates to test whether accuracy would fall. I changed them from 0.25 to 0.8, 0.25 to 0.7 and 0.5 to 0.9, which made the ratios of neurons kept much lower. Training took 8 minutes 33 seconds and the accuracy fell to 0.2215.
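The change in ratios can be worked out directly. This short sketch assumes that "ratio" means the fraction of neurons kept, that is, 1 minus the dropout rate; the helper name `keep_ratio` is made up for illustration.

```python
def keep_ratio(dropout_rate):
    """Fraction of neurons kept, assuming ratio = 1 - dropout rate."""
    return 1.0 - dropout_rate


original_rates = (0.25, 0.25, 0.5)  # rates before the change
modified_rates = (0.8, 0.7, 0.9)    # rates used in the second run

for old, new in zip(original_rates, modified_rates):
    print(
        f"dropout {old:.2f} -> {new:.2f}   "
        f"kept {keep_ratio(old):.2f} -> {keep_ratio(new):.2f}"
    )
```

Under that assumption, every layer in the second run keeps 0.3 or less of its neurons, compared with 0.5 or more in the original setup.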

This shows that, with the epoch count held at 9, higher dropout rates (which increase the number of neurons ignored during training) do indeed lower the accuracy. So although the original accuracy with 9 epochs wasn’t that high at 0.4236, it was cut roughly in half, to 0.2215, when the dropout rates were raised.
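As a quick check on the "cut in half" figure, the two runs can be compared with a few lines of arithmetic. The numbers are the ones reported above; the dictionary layout and the helper `to_seconds` are just one way to organise them.

```python
def to_seconds(minutes, seconds):
    """Convert a minutes/seconds duration to total seconds."""
    return minutes * 60 + seconds


# Results reported for the two 9-epoch runs.
baseline = {"accuracy": 0.4236, "seconds": to_seconds(8, 48)}
high_dropout = {"accuracy": 0.2215, "seconds": to_seconds(8, 33)}

ratio = high_dropout["accuracy"] / baseline["accuracy"]
relative_drop = 1.0 - ratio
time_saved = baseline["seconds"] - high_dropout["seconds"]

print(f"new accuracy as a share of the old: {ratio:.3f}")
print(f"relative drop in accuracy: {relative_drop:.1%}")
print(f"difference in training time: {time_saved} seconds")
```

The new accuracy is a little over half of the original, while the training time barely changes, so the higher dropout rates cost accuracy without making the run meaningfully faster.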