Skye Gao

Professor Rudi

Stupid pet trick project

February 23rd

PROJECT SUMMARY: For this project, we were required to use a digital or analog sketch to build a simple interactive device. It must respond to a physical action or series of actions a person takes, and it must be amusing, surprising, or otherwise engaging. For my project, I decided to choose my equipment first and then design a scenario for my device.

MATERIALS:

- 1 * Arduino Kit and its contents, including:

- 1 * Breadboard

- 1 * Arduino Uno

- 1 * Buzzer

- 1 * 1 MΩ Resistor

- 1 * Vibration Sensor

- 1 * USB A to B Cable

- Jumper Cables (Hook-up Wires)

BUILDING PROJECT: I planned to use a sensor as my interactive component, and the sensor I wanted to use was a vibration sensor. I tried to build a circuit in which the vibration sensor controls a buzzer. The circuit I drew looks like this:

I had two ideas for the scenario in which the device works:

The initial idea was to make a toy (I have a toy bunny) that makes different sounds when people spank it, shake it, or touch it gently. The idea came from my last recitation, where I also used the vibration sensor to control the buzzer: when I knocked on the sensor, the buzzer made noises, which was quite funny.

The circuit was easy to build; my problem was how to write the sketch so that the outcome would work as I expected. For the first trial I used an IF condition to set the level of vibration and its corresponding noise. My professor helped me try two kinds of code, shown below:

/* using map -> it doesn't change the frequency of the speaker but the delay */
// map it to the range of the analog out:
outputValue = map(sensorValue, 0, 1023, 0, 880);
// change the analog out value:
if (outputValue >= 20) {
  analogWrite(analogOutPin, outputValue);
  delay(outputValue);
} else {
  noTone(9);
}

/* using tone / noTone with IF */
if (sensorValue >= 50) {
  tone(9, 440);
  delay(100);
} else if (sensorValue > 50 && sensorValue <= 150) {
  // never reached as written: any value above 50 already matched the first test
  tone(9, 24.50);
} else {
  noTone(9);
}
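Since the first attempt changed the delay rather than the pitch, here is a minimal sketch of how a reading could instead be turned into a frequency. It is not the code we tried in class. The names `scaleLinear` and `readingToFrequency` and the 220 to 880 Hz range are my own assumptions; only the quiet threshold of 20 and the 0 to 1023 input range come from the code above.

```cpp
// Assumed values for illustration only.
const int kQuietThreshold = 20;   // below this reading the buzzer stays silent
const int kMinFrequencyHz = 220;  // hypothetical lowest pitch
const int kMaxFrequencyHz = 880;  // hypothetical highest pitch

// Same linear scaling idea as Arduino's map(), written out with long
// arithmetic so the multiplication cannot overflow a 16-bit int.
long scaleLinear(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Returns the frequency to play for a reading, or 0 to mean "stay silent".
int readingToFrequency(int reading) {
  if (reading < kQuietThreshold) return 0;
  if (reading > 1023) reading = 1023;  // clamp to the analog input range
  return (int)scaleLinear(reading, 0, 1023, kMinFrequencyHz, kMaxFrequencyHz);
}
```

In a sketch, the result would go to `tone(9, frequency)` when it is not 0 and to `noTone(9)` otherwise.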

The outcome was not ideal. When I shook the bunny at different frequencies, I could hear the tone change, but the sound was not consistent and did not correspond to how I was shaking. The different sounds were mixed together even when I shook it with quite different force. To figure out why, I checked the serial monitor. The sensor values were not stable: a gentle shake could produce a value over 100, while a quite strong shake could range from 20 to 150. Given this problem, my professor suggested that I take the instability of the vibration sensor into consideration. I also needed to consider how to let people using the device understand what I wanted them to do, such as how hard they were expected to touch the bunny and what response they should get for their action.

Considering the unstable result, I found it hard to let my audience understand my idea, and more seriously, I could not be sure what the outcome would be. So this idea was not reliable. I needed to come up with a better idea.

I did not want to throw away all my previous thoughts, so my second idea was based on my first one. At this step, my top priority was to find a stable way to use the vibration sensor, i.e. to get a consistent sensor reading for every action. The solution came to me by accident. As I was staring at the serial monitor, trying to think of a solution, I put the bunny down on the desk and pushed his belly without thinking. I suddenly noticed that when I did nothing to the bunny, the readings were around 0, while every time I pushed his belly they looked like this:

The incoming readings seemed to be more stable this time, which made me really excited. I no longer needed to worry that the bunny would make noise by himself, as the “mute” state and the “unmute” state were clearly separated. So I went on to think about a suitable and vivid context for this device.

As I saw my bunny lying on the ground while I was pushing his belly, I found that my action looked a lot like saving a person with CPR. So I thought it would be a great idea to name this project “saving this bunny with CPR”. People can push on the bunny’s belly to try to “save” him, and there will be a response each time they push, as well as one at the end to indicate whether they have saved him successfully or not.

To achieve this effect, I did not change my circuit but modified my sketch based on my previous one. As can be seen in the photo above, the sensor value will reach over 100, so I still used the IF condition and set the threshold to 80: when the sensor value is over 80 (i.e. you push really hard), the buzzer will make a sound. That is the response to each action. This part of the code is as below:
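One way to take the instability into account might be to judge a shake by the largest value in a short burst of readings instead of by a single reading. This is only a sketch of that idea and I did not test it on the bunny. The helper names `peakOf` and `classifyPeak` are hypothetical, and the thresholds 50 and 150 are simply borrowed from the first trial.

```cpp
// Hypothetical helper: returns the largest value among n samples.
// Readings from analogRead() are never negative, so 0 is a safe start.
int peakOf(const int samples[], int n) {
  int peak = 0;
  for (int i = 0; i < n; i++) {
    if (samples[i] > peak) peak = samples[i];
  }
  return peak;
}

enum ShakeLevel { SHAKE_NONE, SHAKE_GENTLE, SHAKE_STRONG };

// Thresholds borrowed from the first trial; they are assumptions,
// not values tuned for this sensor.
ShakeLevel classifyPeak(int peak) {
  if (peak > 150) return SHAKE_STRONG;
  if (peak > 50) return SHAKE_GENTLE;
  return SHAKE_NONE;
}
```

In a sketch, the samples would be filled by calling `analogRead(A0)` several times in a row before classifying the burst.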

if (sensorValue > 80 && sensorValue <= 150) {
  tone(9, 1500);
  count = count + 1;
} else {
  noTone(9);
}

As a response to the result of the whole procedure, I decided to use a counter to count the number of pushes, so that when people have pushed a certain number of times, the buzzer will play a short melody using the tone() function to indicate the result. This way people stay interested in continuing and fully experience the procedure of saving somebody (instead of just trying once or twice and losing interest), and they get a reward for it, which I think feels more real and inspiring.

This part of the code, as well as the whole sketch, is shown in the source code.

Initially I set the range of the sensor value to between 80 and 150, based on the readings shown in the serial monitor and my personal experience of pushing force (which is likely to be similar to the feeling of doing CPR). The readings I used as a reference are below:

I met one problem when I tested the range. At first it worked well several times, which was really exciting. However, at one point the readings became unstable again. When the device was at rest, the readings were around 10, while when I pushed it they were just around 20 no matter how much force I used. It looked like this:

Considering that it had worked several times, the code should not be the problem, so the problem might be the circuit. After checking the circuit again, I found that the sensor connection was loose, which may have happened when I moved the whole device, and that was the cause of the problem. After reconnecting the circuit, the outcome became normal and worked just as I expected!!
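One thing to keep in mind about counting: the loop may run more than once while a single push is still above the threshold, so adding 1 on every in-range reading can count readings rather than pushes. Below is a minimal sketch of counting once per push, which is not what my sketch does. The `PushCounter` struct and the release threshold of 40 are assumptions of mine; only the push threshold of 80 comes from my code, and the upper bound of 150 is left out for simplicity.

```cpp
const int kPushThreshold = 80;     // from the sketch: a push reads above 80
const int kReleaseThreshold = 40;  // assumption: below this the push has ended

// Hypothetical counter that registers a push only when the reading
// crosses the threshold, then waits for a release before counting again.
struct PushCounter {
  int count = 0;
  bool pressed = false;

  // Feed one sensor reading; returns true only at the start of a new push.
  bool update(int reading) {
    if (!pressed && reading > kPushThreshold) {
      pressed = true;
      count++;
      return true;
    }
    if (pressed && reading < kReleaseThreshold) {
      pressed = false;  // ready for the next push
    }
    return false;
  }
};
```

In `loop()`, each `analogRead(A0)` value would be passed to `update()`, and the buzzer would sound when it returns true.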

DECORATION: The next part was decoration (which is my favorite part). Since I had built a context for my device, “save the bunny with CPR”, I wanted people to know as soon as they looked at my project that they were supposed to push the bunny’s belly as in CPR. So I drew a display board with big letters saying “SOMEBODY SAVE THE BUNNY with CPR!!!”, and I drew a doctor and a nurse to further suggest that this bunny was having a medical emergency. When people see this board, they can relate it to emergencies that happen in daily life, and the words tell them what to do. To show my audience more specifically what they should do, I also drew a heart and attached it to the bunny’s belly (which also covers the sensor), so that people know where to put their hands. And just for fun, I put crosses on the bunny’s eyes to indicate he is dying. The finished decoration looks like this:

What’s more, I wanted to add more meaning to my project, so that it is not just an entertaining device. So I looked up the standard procedure for CPR and attached it to my display board, so that when people try my project, they can also learn how to use CPR to save people’s lives in real life. I found this educational and practical.

(Learn how to do CPR!):

SHOW TIME: With that, my project was complete, and what came next was show time. My project and its decoration did turn out to be attractive. Many people came to my table to give it a try and asked me questions about my design. Here is my project at the show:

FEEDBACK: I got feedback from my peers as well as professors.

- People said the project is really cute and interesting!! 🙂

- As for the response melody, some people understood immediately that they had successfully saved the bunny, but some people took the melody to mean “fail” (maybe because of the melody itself and the crosses on the bunny’s eyes), so I had to tell them they had succeeded every time the melody played. One suggestion I got was to change the melody and play a more cheerful one. That’s really reasonable.

- Some people started with a really soft touch because they were not sure what to do, and I had to tell them to push harder. I think I may need to add this to my display board so that people understand they need to push hard. (But I think that’s the point: people need to learn it through trial.) One related suggestion I got was to add feedback for each push, like an LED that lights up each time the bunny is pushed. (But there IS a sound each time the sensor vibrates past a certain level… maybe they did not hear it, or maybe a visual effect works better than sound?)

- Some people asked me how they would know whether they had succeeded or not. That was also a concern for me during my design. It would be better if there were another melody or some other signal to indicate failure as well as success, but that would be much more complicated and I do not know how to do it at this time. But I think I will find a way to figure it out in the future.

- One professor found my project interesting and suggested that I put it to practical use (he said I could put it in the health centre). It would be really nice if I could do that, but I think I first need to fix the drawbacks brought up in the feedback. Also, I think for practical use the device should be more accurate and closer to reality. It should give people an experience as similar as possible to the real CPR procedure, so that it can be truly educational and applicable. (But being a toy like that is fine… I think…)

CONCLUSION: This project was really interesting and inspiring. It was my first project and I really enjoyed the process of completing it. There are still many problems for me to figure out and improve, and I think that is my goal for the next stage of studying IMA!
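For the failure signal that came up in the feedback, one possible direction is a time limit: if the needed number of pushes is not reached in time, the rescue counts as failed. This is only a sketch of the decision, not something I have built. The name `judgeRescue` and the 30 second limit are hypothetical; the 50 comes from the `count == 50` check in my sketch.

```cpp
const int kPushesNeeded = 50;                // from the count == 50 check in the sketch
const unsigned long kTimeLimitMs = 30000UL;  // hypothetical 30 second limit

enum RescueOutcome { RESCUE_IN_PROGRESS, RESCUE_SAVED, RESCUE_FAILED };

// Decides the state of a rescue attempt from the number of pushes so far
// and the start and current times in milliseconds.
RescueOutcome judgeRescue(int pushes, unsigned long startMs, unsigned long nowMs) {
  if (pushes >= kPushesNeeded) return RESCUE_SAVED;
  if (nowMs - startMs >= kTimeLimitMs) return RESCUE_FAILED;
  return RESCUE_IN_PROGRESS;
}
```

In a sketch, `nowMs` would come from `millis()`, and a second, different melody could be played with `tone()` when the result is `RESCUE_FAILED`.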

Source Code:

/*
  Melody

  Plays a melody

  circuit:
  - buzzer on digital pin 9
  - vibration sensor on analog pin A0

  created 21 Jan 2010
  modified 30 Aug 2011
  by Tom Igoe

  This example code is in the public domain.

  http://www.arduino.cc/en/Tutorial/Tone
*/

#include "pitches.h"

// notes in the melody:
int melody[] = {
  NOTE_C4, NOTE_G3, NOTE_G3, NOTE_A3, NOTE_G3, 0, NOTE_B3, NOTE_C4
};

// note durations: 4 = quarter note, 8 = eighth note, etc.:
int noteDurations[] = {
  4, 8, 8, 4, 4, 4, 4, 4
};

// These constants won't change. They're used to give names to the pins used:
const int analogInPin = A0;  // Analog input pin that the vibration sensor is attached to
const int analogOutPin = 9;  // Output pin that the buzzer is attached to

int sensorValue = 0;  // value read from the vibration sensor
int outputValue = 0;  // unused since map() was commented out; always prints 0
int count = 0;        // number of in-range readings so far

void setup() {
  // initialize serial communications at 9600 bps:
  Serial.begin(9600);
  pinMode(9, OUTPUT);
}

void loop() {
  // read the analog in value:
  sensorValue = analogRead(analogInPin);

  // map it to the range of the analog out:
  //outputValue = map(sensorValue, 0, 1023, 0, 50);
  // change the analog out value:
  //analogWrite(analogOutPin, outputValue);

  // beep and count when the reading is in the "push" range
  if (sensorValue > 80 && sensorValue <= 150) {
    tone(9, 1500);
    count = count + 1;
  } else {
    noTone(9);
  }

  if (count == 50) {
    // iterate over the notes of the melody:
    for (int thisNote = 0; thisNote < 8; thisNote++) {
      // to calculate the note duration, take one second divided by the note type.
      // e.g. quarter note = 1000 / 4, eighth note = 1000/8, etc.
      int noteDuration = 1000 / noteDurations[thisNote];
      tone(9, melody[thisNote], noteDuration);

      // to distinguish the notes, set a minimum time between them.
      // the note's duration + 30% seems to work well:
      int pauseBetweenNotes = noteDuration * 1.30;
      delay(pauseBetweenNotes);

      // stop the tone playing:
      noTone(9);
    }

    // no need to repeat the melody: start counting again from zero
    count = 0;
    delay(100);
  }

  // print the results to the Serial Monitor:
  Serial.print("sensor = ");
  Serial.print(sensorValue);
  Serial.print("\t output = ");
  Serial.println(outputValue);

  // wait 2 milliseconds before the next loop for the analog-to-digital
  // converter to settle after the last reading:
  delay(2);
}

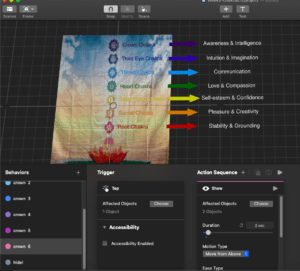

My view on computer

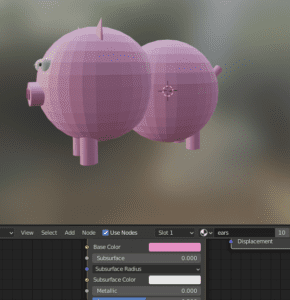

My view on phone