This week we were tasked with creating a functional prototype of our final project. My final project is an interactive AR storytelling experience about the endangered red panda.

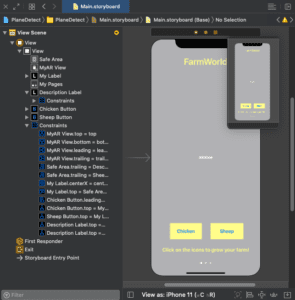

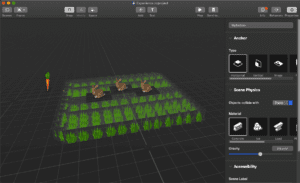

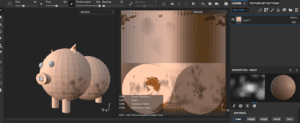

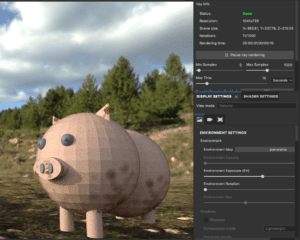

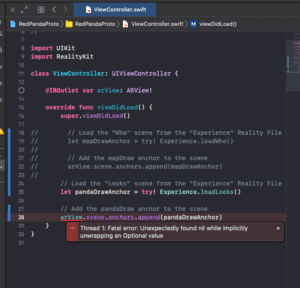

This week, I focused on importing all my assets into Reality Composer (RC) and building a very basic prototype of what I imagine my final project will be. I mapped out the storyboard and the interactions I hope to implement. At first I had trouble referencing multiple scenes (i.e., different pages) from RC in Xcode, and that caused a lot of problems. I also spent a lot of time 3D modeling in Blender and texturing in Substance Painter. Because I spent more time building and importing other assets, I haven't had a chance yet to refine my panda to match what I envision.

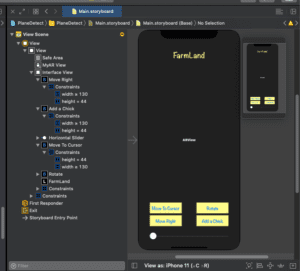

For the screen design, I wasn't sure how to approach it because, as of now, RC detects the image on each page and triggers actions, rather than relying on an on-screen interface. I will need to think more about how to boost the interactivity. In the future, I could add more on-screen interactivity with buttons, labels, etc. that trigger a popup notification, letting the user learn more about something or follow a link. It would also be cool to have the assets "pop out" of the book! I'm not sure yet.

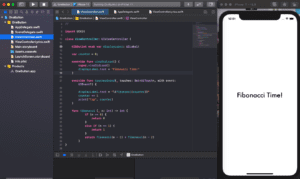

My end-to-end user journey is still in progress, but you can get a sense of what the story journey is like. Next, I would like to figure out how to create a start page and an end page so that the user journey is clearer. I'm just not sure what the code for that would look like, because we've mostly been building in-the-moment AR experiences. So far, I've been working on separate scenes rather than one coherent one. As a result, when an image anchor is detected, it triggers the interaction for that anchor, and those assets overlap with the ones from the previous page. I'm not sure how to make the previous page's assets disappear, or how to make certain assets (like the pandas) stay.

Here’s a link to this problem.

Here is a link to the pages I have put into RC so far.

I have 3 more pages I would like to add, but I wanted to figure out how to make the story/experience coherent before putting those into RC. I'm just not sure how to begin writing the code for that.
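One possible starting point is to treat the story as a single ordered list of pages and keep track of which assets should be visible, instead of letting each scene's anchor trigger on its own. The sketch below is plain Swift with no RealityKit dependency, so it only models the logic; `StoryPage`, `StoryState`, and all page and asset names are hypothetical. In an actual RealityKit app, `show(pageNamed:)` could be called from an `ARSessionDelegate` callback using a detected `ARImageAnchor`'s `referenceImage.name`, and each entity's `isEnabled` could then be set based on whether its name is in `visibleAssets`. Persistent assets (like the pandas) carry over from page to page, while everything else from the previous page is hidden.

```swift
// Hypothetical model of one physical storybook page.
struct StoryPage {
    let imageName: String     // name of the page's reference image (hypothetical)
    let assets: Set<String>   // names of the assets this page shows (hypothetical)
}

// Tracks which page is active and which assets should currently be visible.
struct StoryState {
    let pages: [StoryPage]             // ordered: start page first, end page last
    let persistentAssets: Set<String>  // assets that stay visible once shown (e.g. the pandas)
    private(set) var currentIndex: Int? = nil          // nil until a page is detected
    private(set) var visibleAssets: Set<String> = []

    init(pages: [StoryPage], persistentAssets: Set<String>) {
        self.pages = pages
        self.persistentAssets = persistentAssets
    }

    var isAtStart: Bool { currentIndex == 0 }
    var isAtEnd: Bool { currentIndex == pages.count - 1 }

    // Switches to the page whose reference image matches `imageName`.
    // Returns false and leaves the state unchanged if no page matches.
    @discardableResult
    mutating func show(pageNamed imageName: String) -> Bool {
        guard let index = pages.firstIndex(where: { $0.imageName == imageName }) else {
            return false
        }
        // Keep persistent assets that are already on screen; hide everything else
        // from the previous page, then add the new page's own assets.
        let carriedOver = visibleAssets.intersection(persistentAssets)
        visibleAssets = carriedOver.union(pages[index].assets)
        currentIndex = index
        return true
    }
}

// Usage with made-up page and asset names.
var story = StoryState(
    pages: [
        StoryPage(imageName: "start", assets: ["titleCard"]),
        StoryPage(imageName: "page1", assets: ["redPanda", "page1Scenery"]),
        StoryPage(imageName: "page2", assets: ["page2Scenery"]),
        StoryPage(imageName: "end", assets: ["endCard"]),
    ],
    persistentAssets: ["redPanda"]
)

story.show(pageNamed: "start")
print(story.isAtStart, story.visibleAssets.sorted())  // true ["titleCard"]
story.show(pageNamed: "page1")
print(story.visibleAssets.sorted())  // ["page1Scenery", "redPanda"]
story.show(pageNamed: "page2")
print(story.visibleAssets.sorted())  // ["page2Scenery", "redPanda"]
story.show(pageNamed: "end")
print(story.isAtEnd, story.visibleAssets.sorted())  // true ["endCard", "redPanda"]
```

Here the first entry doubles as a start page and the last as an end page, so `isAtStart` and `isAtEnd` could drive any start/end UI. Whether a persistent asset should appear on a page the user jumps to before it was ever introduced is a design choice; this sketch only carries over persistent assets that were already visible.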

Moving forward, here are some tasks I still need to work on or need help with:

- Creating a final physical storybook

- Editing and texturing my assets, mainly the red panda

- Creating + designing a start and end page

- Figuring out how to make the story one whole journey (as of now it’s just scene by scene)

- Integrating user interactions through UI?

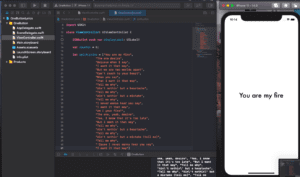

*old version