- A to P (Arduino to Processing)
  - A (Arduino)
    - input: distance from 3 ultrasonic distance sensors
    - output: LED brightness of the red, yellow, and blue LEDs
    - communication: sends the readings to P
  - P (Processing)
    - input: readings received over serial communication
    - output: videos and texts

Interactive Media Arts @ NYUSH

Project Title: Won Color el Stage

A description of the project can be found in my previous article; the final version does not deviate much from the initial design. https://wp.nyu.edu/shanghai-ima-documentation/foundations/interaction-lab/cx592/fnl-prjct-essay-by-changzhen-from-inmis-session/

1. The Strategy

To win the game, the three players must cooperate as much as they compete. After all, a mixed color requires at least two primary colors.

If a single player's input dominates, the score goes to one of her opponents. For example, if red is the only player giving input, the mixed color is judged to be purple instead of orange, and orange is the color that counts as red's score.

If all three players input too much at once, everyone's score decreases. The larger a player's input, the larger her penalty.
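The judging rule can be summarized as a small function. This C++ sketch is distilled from the Processing code further down and is not the code that ran in the installation. Here m1, m2, and m3 are the red, yellow, and blue inputs in [0, 1], and the struct and function names are hypothetical. It only reports which mixed colors score in a given frame and the per-color penalty. The original sketch also credits elapsed `millis()` to the scoring colors.

```cpp
#include <array>
#include <cassert>

// Which mixed colors score in one frame. m1, m2, m3 are the red, yellow,
// and blue inputs in [0, 1] (1 = hand right at the sensor).
struct Judgement {
    bool orange = false;    // counts as red's score
    bool green = false;     // counts as yellow's score
    bool purple = false;    // counts as blue's score
    bool overload = false;  // combined input too large: everyone is penalized
};

Judgement judge(float m1, float m2, float m3) {
    assert(m1 >= 0.0f && m2 >= 0.0f && m3 >= 0.0f);
    Judgement j;
    const float total = m1 + m2 + m3;
    if (total > 2.4f) {
        j.overload = true;
    } else if (total < 2.4f) {
        // Each color requires input from the partner who does not own it,
        // so a player acting alone feeds an opponent's color.
        j.orange = (m1 + m2 > 3 * m3) && m2 > 0.1f;
        j.green = (m2 + m3 > 3 * m1) && m3 > 0.1f;
        j.purple = (m3 + m1 > 3 * m2) && m1 > 0.1f;
    }
    return j;
}

// Penalty subtracted from orange, green, and purple when overloaded:
// proportional to each owner's share of the total input.
std::array<float, 3> penalties(float m1, float m2, float m3) {
    const float total = m1 + m2 + m3;
    assert(total > 0.0f);
    return {100 * m1 / total, 100 * m2 / total, 100 * m3 / total};
}
```

For example, `judge(0.8f, 0.0f, 0.0f)` (red alone) sets only `purple`, which matches the example in the text.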

2. Digital Design

The visuals are displayed on a 1920×1080 (16:9) TV screen. The screen shows the MikuMikuDance video in the center, the three primary colors with each party's score on the left, the mixed color on the right, and the idol currently on stage at the top.

3. Physical Design

The circuit is hidden in a triangular prism. Each side face has laser-cut holes that hold an ultrasonic distance sensor and an LED showing that player's input color. The sensor measures distance on its side within 0 to 30 cm, and the brightness of the LED changes in response. The prism is made of shiny, reflective material because it is designed to be a stage. Near each corner of its top is pasted a small paper with an anime idol printed on it.

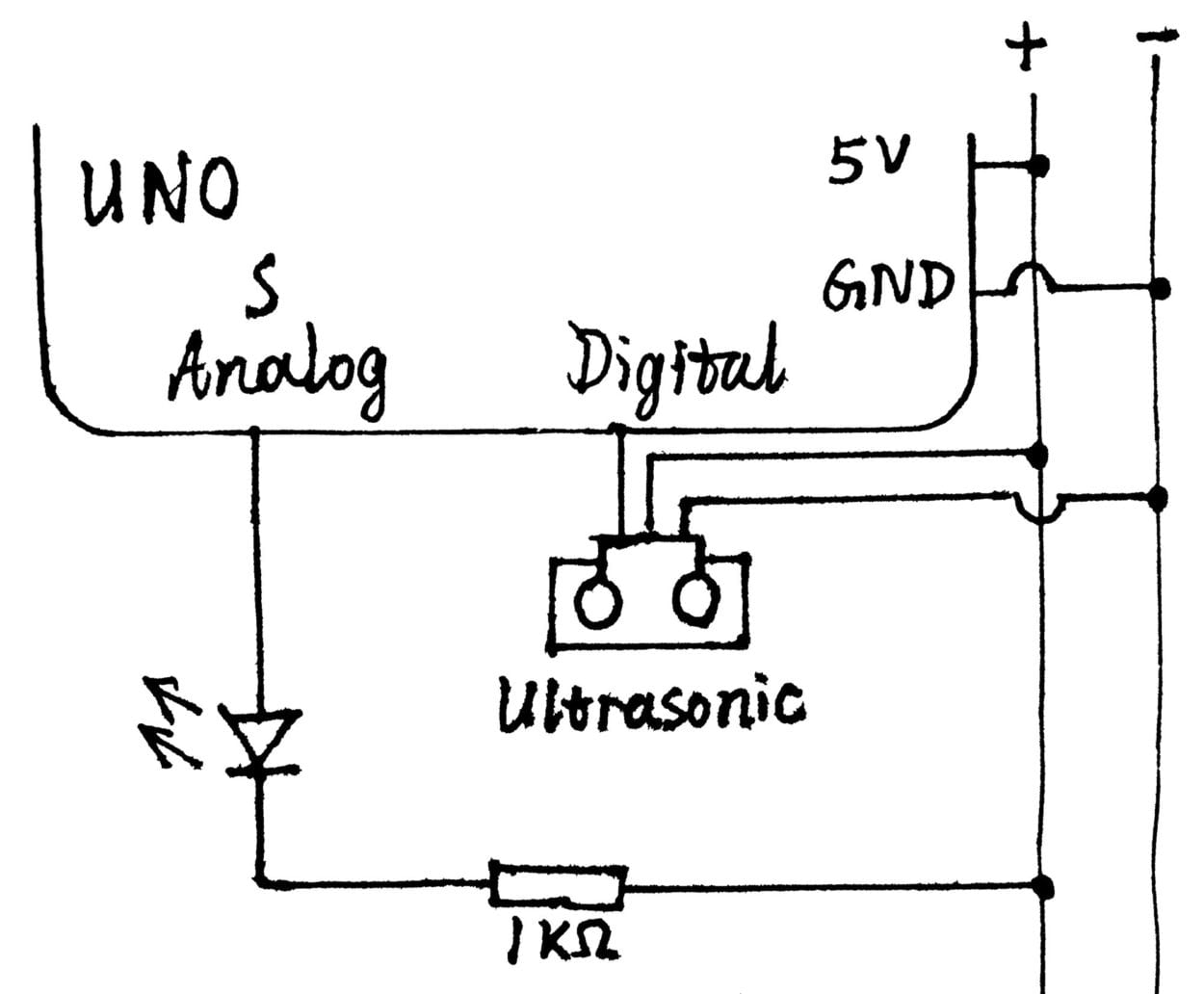

To my surprise, the ultrasonic distance sensor requires a library and connects to a digital pin on the Arduino. The sketch is the same for each of the three players.
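The distance-to-brightness rule in the Arduino sketch below reduces to one integer formula. The following host-side C++ sketch uses hypothetical function names. It clamps a reading to 30 cm and inverts it so that a closer hand gives a brighter LED. It also computes the matching 0-to-1 input amount that the Processing sketch derives from the same reading. The lower bound at 0 cm is an added safeguard that the original does not have.

```cpp
#include <cassert>

const int kMaxCm = 30;  // sensing range used in the project

// Clamp a reading to 0..30 cm (the original only clamps values above 30).
int clampDistance(int cm) {
    if (cm > kMaxCm) return kMaxCm;
    if (cm < 0) return 0;
    return cm;
}

// PWM value for analogWrite(): 0 cm -> 255 (brightest), 30 cm -> 0 (off).
// Integer arithmetic, matching 255 - d * 255 / 30 in the Arduino sketch.
int ledBrightness(int cm) {
    const int d = clampDistance(cm);
    return 255 - d * 255 / kMaxCm;
}

// Input amount used for scoring in Processing: 1 - d / 30, in [0, 1].
float inputAmount(int cm) {
    const int d = clampDistance(cm);
    assert(d >= 0 && d <= kMaxCm);
    return 1.0f - static_cast<float>(d) / kMaxCm;
}
```

For instance, a hand at 15 cm gives a PWM value of 128 and an input amount of 0.5.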

4. Objections and Suggestions to This Project

Marcella first suggested that I get rid of the video and let the event happen entirely on the physical stage, where the idol holding the stage would be indicated by colored stage lights. Second, she pointed out that if all three players watch the screen facing the same direction, the triangular prism design loses its meaning. Third, the input might work better if it sensed the movements of the players dancing. As for the first suggestion, I regret not taking this advice, but I had limited time to make adjustments. As for the second, I think VR glasses instead of a TV on one side would also let the players play face to face, forming a triangle. Her third idea is awesome.

Christina Bowllan and her friend objected to the video content. Through negligence, I had not taken feminist viewers like them into account. Had I known that people who know little about anime, comics, and games would not accept the look of such anime female characters dancing, I would have changed the content, just as I dropped the idea of mixing colors to draw flags because it was political. I might have chosen landscape videos or something similar.

The professors suggested that I display each party's score so that players understand it is a match and can see more clearly where they stand. I made that change on the day of the IMA festival. Following their suggestion, I also removed the paper tent covering the stage to make it easier on the eyes.

5. Conclusions

The suggestions from Marcella and the other professors remind me that each component of a project should have a focused purpose that serves what the project is about and how it is played.

The feminist objections remind me to consider when and where a project will be shown. In the context of this Interaction Lab class, the project is intended for everyone, so I should make it acceptable to everyone.

The core of this project is its strategy, which mirrors real-life situations: victory does not come to a single person but to groups that both cooperate and compete. The same strategy could be applied beyond this idol-dancing theme to create other colorful, intellectual projects.

Arduino Code

```cpp
// Include the distance sensor library; the three sensors are on digital pins 2, 4, and 7
#include "Ultrasonic.h"

Ultrasonic s1(2);  // player 1 (red)
Ultrasonic s2(4);  // player 2 (yellow)
Ultrasonic s3(7);  // player 3 (blue)

void setup() {
  Serial.begin(9600);
}

void loop() {
  // Read the distance in cm
  int d1 = s1.MeasureInCentimeters();
  int d2 = s2.MeasureInCentimeters();
  int d3 = s3.MeasureInCentimeters();

  // Restrict the range to 30 cm
  if (d1 > 30) d1 = 30;
  if (d2 > 30) d2 = 30;
  if (d3 > 30) d3 = 30;

  // Control the brightness of each color LED according to the distance:
  // 0 cm -> 255 (full brightness), 30 cm -> 0 (off)
  analogWrite(9, 255 - d1 * 255 / 30);
  analogWrite(10, 255 - d2 * 255 / 30);
  analogWrite(11, 255 - d3 * 255 / 30);

  // Send "d1,d2,d3" to Processing as one line
  Serial.print(d1);
  Serial.print(",");
  Serial.print(d2);
  Serial.print(",");
  Serial.print(d3);
  Serial.println();
}
```

Processing Code

```java
import processing.serial.*;
import processing.video.*;
import processing.sound.*;

// One movie per idol color; BGM is the background music
Movie orange;
Movie green;
Movie purple;
SoundFile BGM;

String myString = null;
Serial myPort;

// 3, because each serial line carries one value per player
int NUM_OF_VALUES = 3;
int[] sensorValues;

// Mixed color
color c;

// red, yel, blu are the incremental scores counted by millis();
// Ora, Gre, Pur are each player's accumulated score
long red = 0;
long yel = 0;
long blu = 0;
long Ora = 0;
long Gre = 0;
long Pur = 0;

// Play BGM only once
boolean play = true;

void setup() {
  // TV screen size
  size(1920, 1080);
  noStroke();
  background(0);
  setupSerial();

  // Load the media files
  orange = new Movie(this, "orange.mp4");
  green = new Movie(this, "green.mp4");
  purple = new Movie(this, "purple.mp4");
  BGM = new SoundFile(this, "BGM.mp3");

  textSize(128);
  fill(255);
  text("mix", 1655, 450);
}

void draw() {
  updateSerial();
  printArray(sensorValues);

  // m1, m2, m3 are multipliers: the input amount (0 to 1) derived from distance
  float m1 = 1 - float(sensorValues[0]) / 30;
  float m2 = 1 - float(sensorValues[1]) / 30;
  float m3 = 1 - float(sensorValues[2]) / 30;

  // Play BGM only once
  if (play) {
    BGM.play();
    play = false;
  }

  // Display each player's color and the mixed color as rectangles
  fill(255, 255 - 255 * m1, 255 - 245 * m1);
  rect(0, 0, 288, 360);
  fill(255, 255 - 10 * m2, 255 - 255 * m2);
  rect(0, 360, 288, 360);
  fill(255 - 255 * m3, 255 - 105 * m3, 255);
  rect(0, 720, 288, 360);

  c = color(255 - 255 * m3,
            255 - (255 * m1 + 10 * m2 + 105 * m3) * 255 / 370,
            255 - (245 * m1 + 255 * m2) * 255 / 500);
  fill(c);
  rect(1632, 540, 288, 360);

  // Judge the mixed color and who earns points at the moment;
  // the idol with the highest accumulated score has her video played
  if (m1 + m2 + m3 < 2.4) {
    if (m1 + m2 > 3 * m3 && m2 > 0.1) {
      red = millis() - Ora - Gre - Pur;
      Ora += red;
    }
    if (m2 + m3 > 3 * m1 && m3 > 0.1) {
      yel = millis() - Ora - Gre - Pur;
      Gre += yel;
    }
    if (m3 + m1 > 3 * m2 && m1 > 0.1) {
      blu = millis() - Ora - Gre - Pur;
      Pur += blu;
    }
  }

  // Too much combined input: everyone loses points in proportion to her share
  if (m1 + m2 + m3 > 2.4) {
    Ora -= 100 * m1 / (m1 + m2 + m3);
    Gre -= 100 * m2 / (m1 + m2 + m3);
    Pur -= 100 * m3 / (m1 + m2 + m3);
    orange.stop();
    green.stop();
    purple.stop();
  }

  println(Ora);
  println(Gre);
  println(Pur);

  textSize(128);

  if (Ora > Gre && Ora > Pur) {
    if (orange.available()) {
      orange.read();
    }
    fill(0);
    rect(288, 0, 1632, 324);
    fill(255);
    text("Orange Girl on Stage", 350, 200);
    orange.play();
    green.stop();
    purple.stop();
    image(orange, 288, 324, 1344, 756);
  }

  if (Gre > Ora && Gre > Pur) {
    if (green.available()) {
      green.read();
    }
    fill(0);
    rect(288, 0, 1632, 324);
    fill(255);
    text("Green Girl on Stage", 350, 200);
    green.play();
    orange.stop();
    purple.stop();
    image(green, 288, 324, 1344, 756);
  }

  if (Pur > Gre && Pur > Ora) {
    if (purple.available()) {
      purple.read();
    }
    fill(0);
    rect(288, 0, 1632, 324);
    fill(255);
    text("Purple Girl on Stage", 350, 200);
    purple.play();
    orange.stop();
    green.stop();
    image(purple, 288, 324, 1344, 756);
  }

  // End of game: show who wins
  if (millis() >= BGM.duration() * 1000) {
    background(0);
    fill(255);
    if (Ora > Gre && Ora > Pur) {
      text("Orange Girl Wins Stage!", 240, 500);
    }
    if (Gre > Ora && Gre > Pur) {
      text("Green Girl Wins Stage!", 240, 500);
    }
    if (Pur > Ora && Pur > Gre) {
      text("Purple Girl Wins Stage!", 240, 500);
    }
  }

  // Draw each player's score on her color panel
  fill(0);
  textSize(32);
  text(int(Ora), 20, 180);
  text(int(Gre), 20, 540);
  text(int(Pur), 20, 900);
}

void setupSerial() {
  printArray(Serial.list());
  // The index (3) must match the Arduino's port in the printed list; it differs between computers
  myPort = new Serial(this, Serial.list()[3], 9600);
  myPort.clear();
  // Discard the first reading, in case it starts in the middle of a line
  myString = myPort.readStringUntil(10);
  myString = null;
  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = linefeed ('\n')
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
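The two sketches share a simple protocol. Each reading is sent as one line of comma-separated values, ending with the "\r\n" that `Serial.println()` appends. The following host-side C++ sketch mirrors what `updateSerial()` checks, and its function name is hypothetical. Processing's `int()` quietly returns 0 for text it cannot parse, whereas this version rejects the whole line.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Parse one serial line such as "12,30,5\r\n" into exactly `expected` integers.
// On success, fills `out` and returns true; on a wrong field count or a
// non-numeric field, returns false and leaves `out` unchanged.
bool parseSensorLine(const std::string& line, std::size_t expected, std::vector<int>& out) {
    assert(expected > 0);

    // Strip the trailing "\r\n" (and spaces) sent by Serial.println().
    std::string s = line;
    while (!s.empty() && (s.back() == '\r' || s.back() == '\n' || s.back() == ' ')) {
        s.pop_back();
    }

    std::vector<int> values;
    std::stringstream ss(s);
    std::string field;
    while (std::getline(ss, field, ',')) {
        try {
            values.push_back(std::stoi(field));
        } catch (const std::exception&) {  // invalid_argument or out_of_range
            return false;
        }
    }

    if (values.size() != expected) {
        return false;
    }
    out = values;
    return true;
}
```

Keeping the previous values when a line is rejected matches the Processing sketch's behavior. There, `sensorValues` is only overwritten when the field count is correct.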


Forest Box: build your own forest–Katie–Inmi

CONCEPTION AND DESIGN:

In terms of interaction experience, our idea was to create something similar to VR: users interact physically in the real world, and their actions result in changes on the screen. We explored several options during the design process. Inspired by Zheng Bo's work 72 Relations with the Golden Rod, we first wanted to attach multiple sensors to a real plant to let users explore different ways of relating to plants. For example, we wanted to attach an air pressure sensor to the plant so that whenever someone blew on it, the video on the computer screen would change, and to add a pressure sensor that someone could step on.

But we ended up not choosing these options because the equipment room did not have most of the sensors we needed. We then selected a color sensor, a touch sensor, a distance sensor, and a motion sensor. However, we did not think carefully about the physical interactions before hooking them up. The first problem was that the motion sensor did not work the way we wanted: it only senses motion and cannot identify specific gestures. As a result, it sometimes conflicted with the distance sensor, so we gave it up. This left us at a very awkward stage: three sensors hooked up and different videos playing according to the sensor values, but no clear way to link them together.

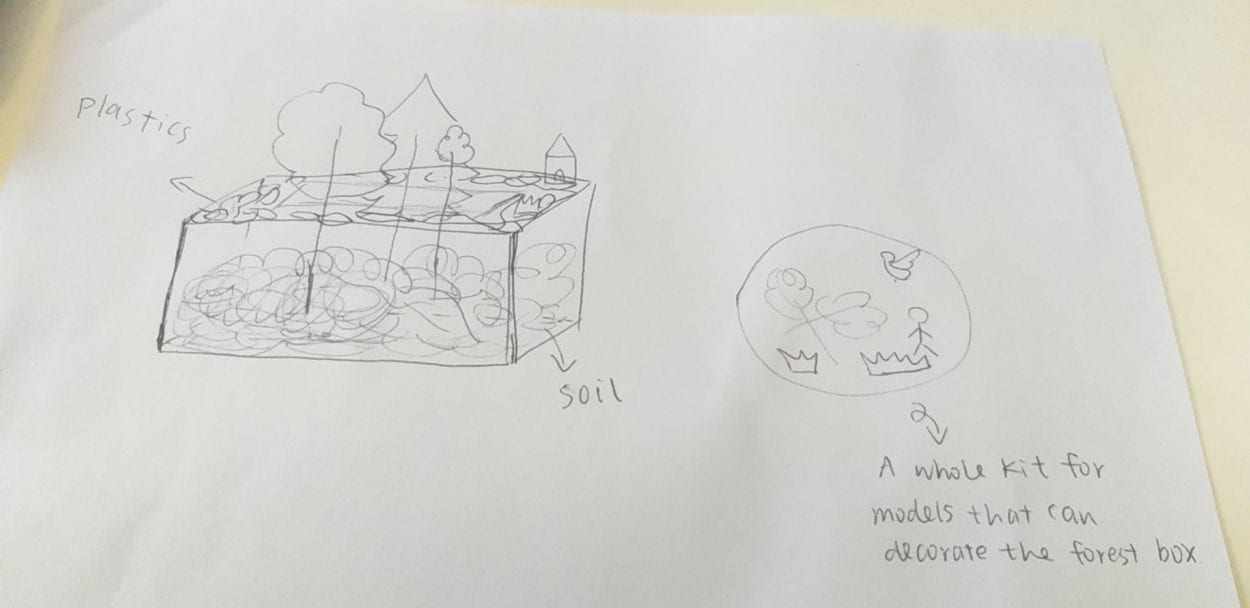

After asking Inmi for advice, the final solution we came up with was a forest box that users can interact with, where the visuals on the screen change according to the kind of interaction. If you place fire in the box, a forest fire video is shown on the screen. If you throw plastics into the box, a video of plastic waste is shown. If you pull out the trees, a video of a forest being cut down is shown. Through this kind of interaction, we want to convey the message that every individual's small harm to the earth can add up to huge damage. For the first scene, we use a camera feed with effects to attract the user's attention.

FABRICATION AND PRODUCTION:

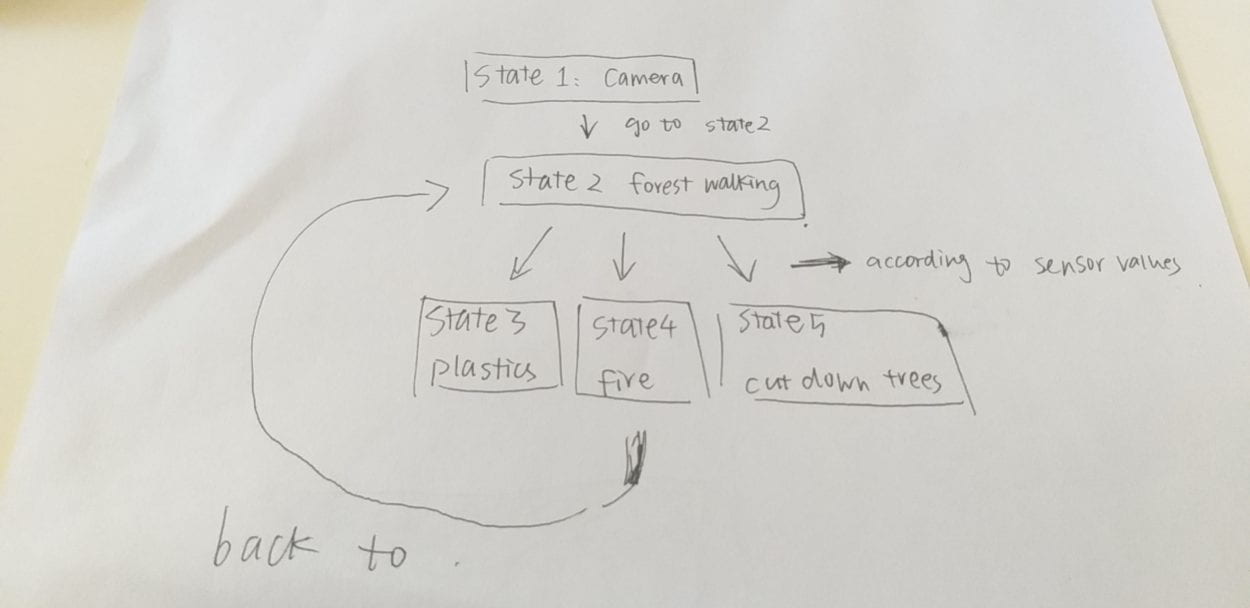

The most significant first step was to hook up the sensors and get the Arduino and Processing code communicating. For me, the most challenging part was figuring out the logic of my Processing code. At first I did not know how to start because there were so many conditions and outcomes, and I did not know how to arrange the if statements to achieve the output I wanted. What helped most was drawing a flow diagram of how each video would be played.

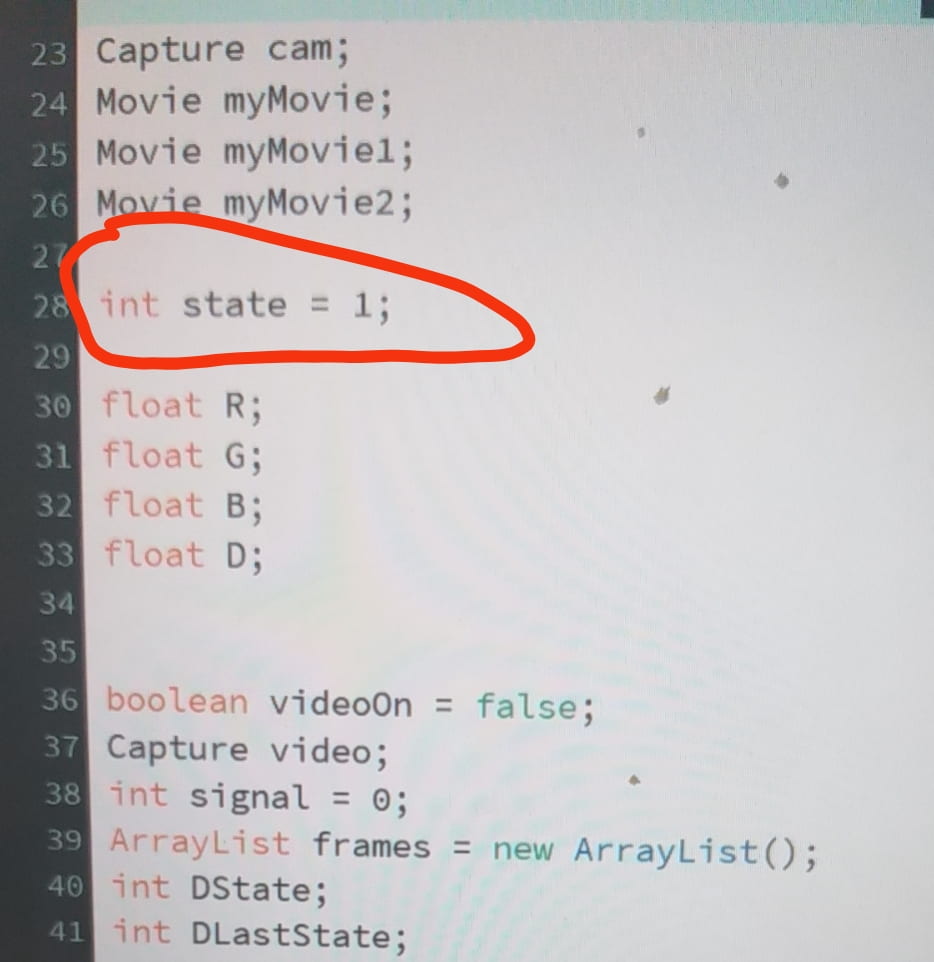

Then we defined a new variable called state, with an initial value of 1.

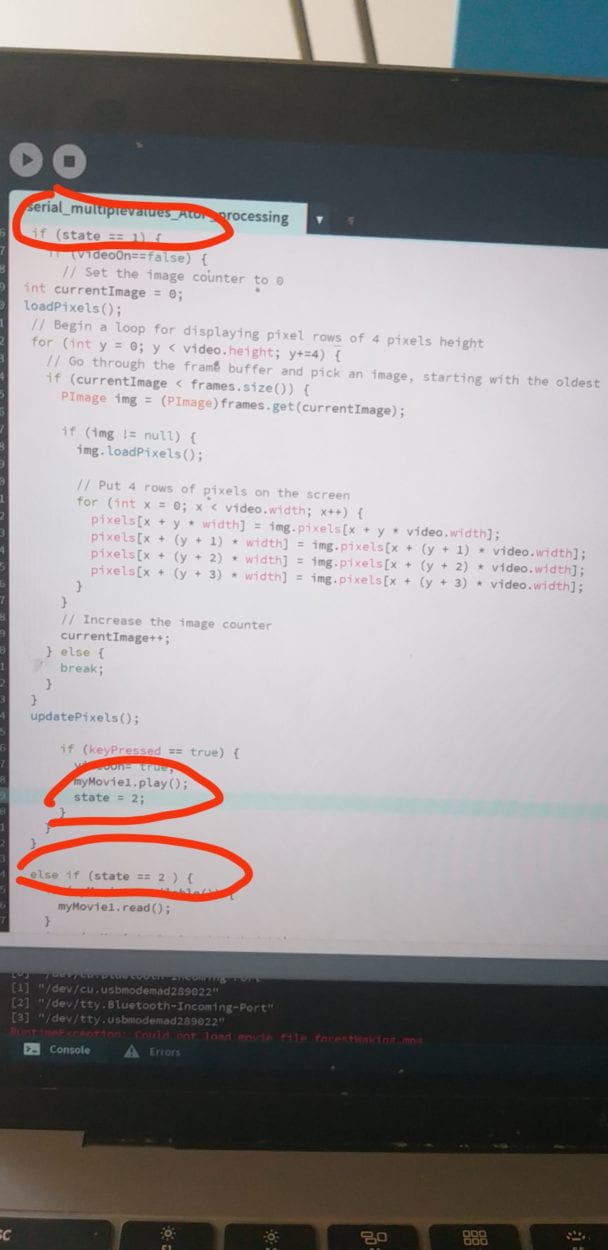

With that, the logic became clear and the work became simpler. I just needed to write down what each state does separately and then connect the states together. Although the code within one state can be very lengthy and difficult, the overall structure was simple and clear to me.

For example, from state 1 to state 2:

Another important point is that we wanted to switch from state 1 to state 2 with a keypress. However, the state 2 video only played while the key was held down; when the key was released, the state went back to 1. To solve this problem, I created a Boolean that switches the video on and off.
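The keypress problem comes from deriving the state from whether a key is currently held. In Processing, the `keyPressed` variable is true only while a key is down, whereas the `keyPressed()` function is called when a key goes down. The following minimal C++ sketch contrasts the two approaches. It assumes the Boolean is set on key-down and cleared when the state 2 video ends. The clearing condition is an assumption, and the names `SceneSwitch`, `videoOn`, `keyDown`, `videoEnded`, and `update` are hypothetical.

```cpp
#include <cassert>

// Naive version: the state follows the key directly, so releasing the key
// drops straight back to state 1.
int naiveState(bool keyHeld) {
    return keyHeld ? 2 : 1;
}

// Latched version: a Boolean remembers the key press after release.
struct SceneSwitch {
    int state = 1;         // initial state, as in the text
    bool videoOn = false;  // hypothetical name for the on/off Boolean

    // Call from the key-down event (like Processing's keyPressed() function).
    // Setting true is idempotent, so operating-system key repeat does no harm.
    void keyDown() { videoOn = true; }

    // Hypothetical: call when the state 2 video finishes.
    void videoEnded() { videoOn = false; }

    // Call once per frame (like draw()); the key no longer needs to be held.
    void update() {
        if (state == 1 && videoOn) {
            state = 2;
        } else if (state == 2 && !videoOn) {
            state = 1;
        }
    }
};
```

Each state keeps its own transition checks in `update()`, which fits the flow-diagram approach: every arrow in the diagram becomes one condition.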

At first, we wanted different users to run toward the screen from a distance, wearing different costumes representing plastics and plants; whichever costume reached the screen first would determine which video played. However, during user testing, our users said, first, that the costumes were of poor quality and, second, that running was not interactive enough. Our professor also said there was no link between what the users were physically doing (running) and what was happening on the screen (playing educational videos).

After thinking this problem through, we created a forest box to represent a forest, with different elements that users can interact with.

In this way, what the user does physically is connected to what is shown on the screen.

CONCLUSIONS:

The goal of our project is to raise people's awareness of climate issues and prompt them to reflect on their daily actions. The results align with my definition of interaction in that the output on the screen is determined by the input (the users' physical interaction). They do not align with my definition in that there is no “thinking” process between the first and the second input: we had already given users the options they could choose, so there was not much room for exploration. I think our audience interacted with our project the way we designed.

But there are many things we could improve. First, we could better design the physical interaction with the forest box and let users design the box the way they want. For example, we could fill the box with soil and provide different kinds of plants and other decorations, so that different users can experience planting a forest together. By placing multiple color sensors in different places on the box, the visuals would change according to the different trees being planted. Second, we could cover the surface of the box with plastics at the start to represent today's plastic waste; if the user removes the plastics, the visuals on the screen would change, too. Third, we could draw the videos shown on the screen ourselves.

The most important thing I've learned is about experience design. Reflecting on our design process, I realize that, for me, it is better to first think of an experience rather than the theme of the project. Starting from a very big and broad theme makes it difficult for me to design the experience. But if you first think of an experience, for example a game, it is easier to adapt that experience to your theme.

The second thing I've learned is coding skills. With more and more coding experience, I think my coding logic has improved. In this project, many conditions determined which video to play, so there were a lot of if statements. At first it felt like a mess and I did not know how to start; then Tristan told me to step away from the coding for a moment and think through the logic by drawing a flow diagram. After doing this, I was much clearer about what I was going to do.

I think the climate issue is certainly a very serious one that everybody should care about, because climate change affects our daily lives. “Nature does not need humans. Humans need nature.”

Strike! – Zhao Yang (Joseph) – Inmi

For the final project, my partner Barry and I decided to make an entertaining game, modeled on an existing aircraft shooting game. Since that game is classic and interesting, we did not need to spend much time introducing its mechanics to users and could focus instead on making a better game. We kept the original mechanics but also made some changes. In the original game, the user controls the aircraft to attack enemies and avoids crashing into them, so that the aircraft survives longer and earns a higher score; as long as the aircraft avoids or destroys the enemies, it does not lose health points. We changed this mechanic: in our game, if you let an enemy escape, your health points also decrease, which encourages the user to try their best to attack every enemy. We made this change to ensure that the game ends at some point and to increase its difficulty. It is one of the creative parts of our final project.

In addition, the way of interacting with the original aircraft game is too limited. In the past, the only way to play was to press buttons and use a joystick, so users could interact only with their fingers and hands, and their sense of engagement was not strong. To improve the game, we changed the way of interaction. In our preparatory research, we found a project that uses Arduino and Processing. That project “tried to mimic the Virtual reality stuffs that happen in the movie, like we can simply wave our hand in front of the computer and move the pointer to the desired location and perform some tasks”. Here is the link to that project.

https://circuitdigest.com/microcontroller-projects/virtual-reality-using-arduino
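The modified health rule described above can be written as a small update function. This C++ sketch is illustrative only. The struct, the enum, and the damage and point values are hypothetical, because the write-up does not give the project's actual numbers.

```cpp
#include <cassert>

struct Player {
    int health;
    int score;
};

// What happened to one enemy this frame.
enum class EnemyOutcome { Destroyed, Crashed, Escaped };

// Original rule: only a crash costs health. Modified rule: an escaped enemy
// costs health too, so passive play cannot last forever.
void resolve(Player& p, EnemyOutcome outcome, int damage, int points) {
    assert(damage > 0 && points >= 0);
    switch (outcome) {
        case EnemyOutcome::Destroyed:
            p.score += points;
            break;
        case EnemyOutcome::Crashed:
        case EnemyOutcome::Escaped:
            p.health -= damage;
            break;
    }
}

bool gameOver(const Player& p) { return p.health <= 0; }
```

Treating `Escaped` like `Crashed` is what guarantees the game ends: every enemy either raises the score or lowers health.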

In that project, the user wears a device on his hand so that the computer can detect the hand's motion, and he can then execute commands on the computer by moving and manipulating his hand. In my opinion, this kind of interaction gives people a stronger sense of engagement. Hence, I thought we could design a way of interacting with our project that engages the user's whole body. We came up with the idea that people can open their arms, imagine they are the aircraft, and tilt their bodies to control its movement. This is how we expect people to interact with our project. Therefore, we chose an accelerometer as the input. This sensor detects acceleration along three axes, and we chose the Y-axis acceleration in particular. If the user wears the device on their wrist and waves their arm up and down, the Y-axis acceleration changes, and we map this value in Processing to control the movement of the aircraft. This gives users the sense that they are really flying and reinforces their engagement. Moreover, the accelerometer is quite small, so we could easily build a wearable device around it, and users can put it on and take it off quickly and start enjoying the game sooner. These are the reasons why we chose this sensor and why it best suited our project's purpose. Honestly speaking, a Kinect might have been another option. It fits our idea of engaging the whole body, and it was actually our first choice of input medium. However, the Kinect was not allowed for the final project, so we had to reject this option.
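The mapping from arm tilt to aircraft position can be sketched with the same linear formula as Processing's `map()`. This C++ version assumes a Y-axis reading already converted to g that spans roughly -1 to 1 between the two extreme tilts. The range, the screen width, and the function names are assumptions, not values from the project.

```cpp
#include <algorithm>
#include <cassert>

// Linear rescale, the same formula as Processing's map().
float mapRange(float v, float inLo, float inHi, float outLo, float outHi) {
    assert(inHi != inLo);
    return outLo + (outHi - outLo) * ((v - inLo) / (inHi - inLo));
}

// Assumed: accelY is in g and spans about -1 (arm tilted fully one way)
// to 1 (fully the other way). Readings outside that range are clamped so
// the aircraft stays on screen.
float aircraftX(float accelY, float screenWidth) {
    const float clamped = std::max(-1.0f, std::min(1.0f, accelY));
    return mapRange(clamped, -1.0f, 1.0f, 0.0f, screenWidth);
}
```

On an 800-pixel-wide screen, a level arm (0 g) puts the aircraft at x = 400. Clamping matters because a quick wave can briefly exceed the assumed range.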

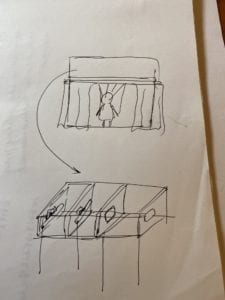

Since we modeled our project on an arcade game, we decided to laser cut an arcade game case. Covering my computer with the case gives users the sense of playing an arcade game instead of a computer game, and it improves the overall look of our project. Another significant step in our production process was turning the accelerometer into a wearable device, because it is more sensitive when the user wears it than when they just hold it. Here are images of our design for the arcade game case.

During the user test, most of the feedback was positive. A few users suggested that we add a function to let the aircraft move forward so that the game would be more interesting. Furthermore, at that time we had only one sensor, so the user could control the aircraft only with their right arm, and it was a little confusing for users to open their arms and tilt their bodies to control the aircraft. Even after reading the instructions, some of them were still confused about how to interact, and without our explanation, most of them could not interact with our project properly. Here are several videos from the user testing process.

As a result, after the user test, we decided to add another sensor to let the aircraft move forward. We also changed the one-player and two-player modes to an easy mode and an expert mode, which still aligns with our original idea of two players. Because the additional sensor controls forward movement, the game becomes more difficult: it is really hard for one user to move their right arm to steer the aircraft left and right while flipping their left hand to move it forward and backward at the same time. If you don't want to challenge the expert mode alone, you can ask a friend to help control the aircraft. From my perspective, adding another sensor was an effective and successful adaptation. During the IMA End of Semester Show, users wore two sensors, and even when they chose the easy mode, which only moves the aircraft left and right, opening their arms and tilting their bodies to control the aircraft made more sense to them. Users could also easily follow our instructions without being confused. I think our production choices were quite successful. They align with our original idea that users can use their whole body to interact with our project, and the way the sensors are used makes the game more interesting and more engaging.
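The two modes differ only in which sensors move the aircraft. The following C++ sketch assumes both tilts are already normalized to -1..1. It also assumes a vertical shooter, where "forward" means up the screen. The enum, struct, and function names are hypothetical. In expert mode, the function does not care whether one player wears both sensors or two players share them.

```cpp
#include <cassert>

enum class Mode { Easy, Expert };

struct Velocity {
    float dx;  // left/right, pixels per frame
    float dy;  // forward/backward, pixels per frame (negative = up the screen)
};

// rightTilt and leftTilt are assumed to be normalized to -1..1.
// Easy mode uses only the right-arm sensor; expert mode also lets the
// left-hand sensor move the aircraft forward and backward.
Velocity aircraftVelocity(Mode mode, float rightTilt, float leftTilt, float speed) {
    assert(speed >= 0.0f);
    Velocity v{rightTilt * speed, 0.0f};
    if (mode == Mode::Expert) {
        v.dy = -leftTilt * speed;  // screen y grows downward, so forward is negative
    }
    return v;
}
```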

CONCLUSIONS

In conclusion, the goal of our project is to make an entertaining and interesting game that users can have fun with and play to relax in their spare time. My definition of interaction is a cyclic process that requires at least two objects that affect each other. In my opinion, our project aligns well with this definition. The motion of the user's body controls the movement of the aircraft in the game, while the score and the game image are immediately shown to the user, which matches the part about objects affecting each other. Moreover, the user has to focus on the game and keep interacting with it to get a higher score, which matches the part about a cyclic process. Almost everyone who played our game interacted with it as we expected; only some users who did not read the instructions we provided were confused about how to interact. If we had more time, we could come up with more innovative game mechanics. Since our mechanics are quite similar to the original game, users might not find much novelty in it. If it were allowed, we would like to try using a Kinect for interaction, because directly detecting the user's motion would make the connection between the interaction and the game itself clearer. After all, our idea of engaging the whole body fits a Kinect better than an accelerometer. I learned a lot from our final project. For instance, we had to test the game ourselves again and again to ensure that users experience the best version of it, and we spent a lot of time debugging. Besides, I learned how to use Illustrator in order to laser cut the arcade game case.

What's more, the most important thing when creating an interactive project is that the user always comes first.

https://github.com/JooooosephY/Interaction-Leb-Final-Project

Project name: Puppet

Conception and Design

We decided on the goal of our project at the very beginning: to show the theme of “social expectation”. The original idea was to let the user play the role of the various forces in society that push us to behave in certain ways, while the puppet represents ourselves, the ones being controlled. Therefore, the original plan was to let the user set a pose for the puppet in Processing, and the pose data would then be sent to the Arduino. The real puppet on the miniature stage would first cycle through several random poses and finally stay in the pose that the user had set. In the first week, we started preparing the materials we needed with this original plan in mind. The most important part of our project is the puppet. We searched for one that was not too funny or childish, to make our theme more distinctive, and finally decided to buy a 30-year-old vintage puppet. We expected the final stage to be quite large, and if we laser cut it, the materials might be insufficient, so we decided to use cardboard cartons instead. To add a dramatic atmosphere to the stage, we bought some velvet to stick onto the stage surface. We also bought a mini tracker lamp to attach to the top of the stage. For the Arduino part, we decided to use four servos connected with strings to rotate the arms and legs of the puppet. To make the mechanism more stable, we 3D printed some components and attached them to the servos with hot glue. In addition, we used red velvet to make the stage curtain. Since sewing it required professional skills, we sent the velvet to a tailor shop and got a satisfying result.

Fabrication and Production

To create the image of the puppet in Processing, I first tried to draw a cartoon version of the puppet in code. I eventually gave up because it was too complicated, and the result might not have been satisfying given the limits of drawing with code. Instead, I drew the image in a digital painting app called Procreate. I drew the different parts of the puppet's body on different layers, so that we could load each part into Processing directly and rotate it. We first used keyboard and mouse interaction to let the user control the movement of the digital puppet, and we finished that code. However, when we shared our ideas with the IMA fellows, they pointed out that such a simple process would make it hard for users to see our theme of social expectation. Besides, it might not make sense to control the puppet through Processing instead of controlling it directly. With the digital puppet and the physical puppet presented to the user at the same time, the two seemed to compete with each other. From our own perspective, we also felt that the interaction in our project was a bit weak and the theme seemed vague. Therefore, we modified the plan. We planned to make our stage curtain automatic: a motor would wind up a string tied to the front of the curtain and thus open it. I also changed the image in Processing to black and white, so that we could project it onto the wall, where it would look like a huge shadow hanging over the real puppet.
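Rotating a separately drawn limb around its joint is the core of this layered approach. In Processing, this is usually done with `pushMatrix()`, `translate()` to the joint, `rotate()`, `image()`, and `popMatrix()`. The C++ sketch below shows the underlying math for a single point, such as the hand at the end of an arm image. The coordinates are hypothetical. The y axis grows downward, as on screen, so a positive angle turns clockwise, just as with Processing's `rotate()`.

```cpp
#include <cassert>
#include <cmath>

struct Point {
    double x;
    double y;
};

// Rotate point p about pivot by `radians`. In screen coordinates (y down),
// a positive angle appears clockwise.
Point rotateAbout(Point p, Point pivot, double radians) {
    assert(std::isfinite(radians));
    const double c = std::cos(radians);
    const double s = std::sin(radians);
    const double dx = p.x - pivot.x;
    const double dy = p.y - pivot.y;
    return {pivot.x + dx * c - dy * s, pivot.y + dx * s + dy * c};
}
```

A hand 10 pixels to the right of a shoulder at (100, 50) ends up 10 pixels below it after a 90-degree turn. This is why each limb layer should be drawn with its joint at a known offset.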

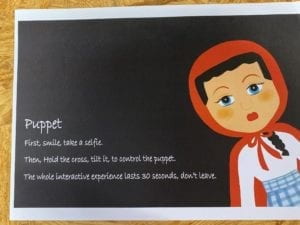

However, our plan changed again after user testing. Professor Marcela also pointed out that our theme seemed very vague to her, and we shared our worries with her. She gave us several valuable suggestions. She suggested that we use the cross, which is part of the real puppet, to let users control the puppet's movement directly. She also suggested that we use a webcam to capture the user's face and finally put it on the head of the digital puppet, so that the logic would be clear: the user is actually being controlled as well. In addition, we received a suggestion to give the puppet a voice and let it say something. These suggestions were extremely precious to us, and after user testing we started to change almost everything about our project.

First of all, we asked the fellows which sensor we could use to control the puppet's movement directly. They suggested an accelerometer: the angle at which the puppet's arms and legs rise and fall would follow the angle at which the user tilts the cross. In addition, since it is hard to capture users' faces while they are moving, Professor Eric suggested that we take a picture of the user at the beginning. He helped us with the code, and we ended up framing it as asking users to take a selfie. I wrote a script and recorded my voice as the puppet's voice. The lines include “What should I do?”, “What if they will be disappointed at me?”, and “What if I cannot fit in?”. The last line is “Hey friend, do you know, you are just like me.” After this last line, the image with the user's face on the head of the digital puppet is shown, expressing the logic that while we are controlling others, we are also being controlled. However, there were some problems with the Arduino part. The night before the presentation, we were testing the accelerometer, hoping that everything would work, but we could not even find the port connected to the computer. Besides, in our earlier testing we had found that the accelerometer was quite unstable and sensitive, making it hard to control the movement of the real puppet. Professor Marcela suggested replacing the accelerometer with tilt sensors, which are more stable. We took this advice and changed the code again. A tilt sensor works like a button: tilting it triggers a certain behavior. In our case, we used two tilt sensors, one for the arms and one for the legs. The logic is that when the left arm rises, the right arm falls, and vice versa. Since a tilt sensor is simply on or off, it was also easier for us to send its data to Processing.
The digital image in Processing changes along with the real puppet, following its poses. After we got everything done, I made a poster with the instructions and an explanation of our project's theme.

Conclusions

Our project aligns well with my definition of interaction. In my preparatory research and analysis, I wrote my personal definition of a successful interaction experience. In my opinion, the process of interaction should be clear to the users, so that they can get a basic sense of what they should do to interact with the device. Various means of expression can be involved, such as visuals and audios. The experience could be thought-provoking, which may reflect the facts in the real life. My partner and I have created a small device as a game in our midterm project, so this time we decided to create an artistic one as a challenge. Our project aims at those who intentionally or compulsively cater to the social roles imposed on them by the forces in the society. We showed the logic that while we are controlling others while also being controlled by the others. In fact, it is hard to show a theme in an interactive artistic installation, and it was hard for us to find the delicate balance, the balance that we can trigger the thoughts of the user without making everything too heavy. The visual effect of our project is satisfying, and we also use music and voices to add more means of expression. The user’s interaction with our project is direct and clear. Instead of touching the cold buttons on the keyboard, they can hold the cross, listen to the monologue of the puppet, and thus build an invisible relation of empathy with the real puppet. After the final presentation, we have also received several precious suggestions. If we have more time, we would probably try to make the whole interactive process longer with more means of interaction, so that the user can be given more time to think more deeply about the theme. There are many ways to show our theme, but the results could be entirely different. We are given possibilities but may also get lost. 
The most important thing I learned from this experience is to be clear, from the very beginning, about what I am trying to convey and what my goals are. Without a clear theme in mind, we are likely to lose direction, and the final work could end up as a mixture of disordered ideas.

Video of the whole interactive experience:

Arduino Code:

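#include <Servo.h>

// Four servos move the puppet: one pair for the arms, one pair for the legs
Servo myservo1;  // arm servo
Servo myservo2;  // arm servo, driven with the mirrored angle
Servo myservo3;  // leg servo
Servo myservo4;  // leg servo, driven with the mirrored angle

int angleArm = 0;  // arm offset from the 90-degree centre position
int angleLeg = 0;  // leg offset from the 90-degree centre position

const int tiltPin1 = 2;  // tilt sensor that drives the arms
const int tiltPin2 = 4;  // tilt sensor that drives the legs

int tiltState1 = 0;
int tiltState2 = 0;

void setup() {
  Serial.begin(9600);
  myservo1.attach(3);
  myservo2.attach(5);
  myservo3.attach(6);
  myservo4.attach(9);
  pinMode(tiltPin1, INPUT);
  pinMode(tiltPin2, INPUT);
}

void loop() {
  // reasonable delay
  delay(250);

  tiltState1 = digitalRead(tiltPin1);
  tiltState2 = digitalRead(tiltPin2);

  // Each tilt sensor switches its pair of limbs between two fixed poses
  if (tiltState1 == HIGH) {
    angleArm = 90;
  } else {
    angleArm = -90;
  }

  if (tiltState2 == HIGH) {
    angleLeg = 30;
  } else {
    angleLeg = -30;
  }

  // Serial.println(angleArm);
  // Serial.println(angleLeg);

  // The two servos of each pair receive mirrored angles around 90 degrees
  myservo1.write(90 + angleArm);
  myservo2.write(90 - angleArm);
  myservo3.write(90 + angleLeg);
  myservo4.write(90 - angleLeg);

  // Send "angleArm,angleLeg" plus a line break to Processing
  Serial.print(angleArm);
  Serial.print(",");  // put comma between sensor values
  Serial.print(angleLeg);
  Serial.println();   // add linefeed after sending the last sensor value

  delay(100);
}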
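The loop above boils down to a fixed mapping from the two tilt readings to four servo angles. As a hedged illustration, the sketch below pulls that mapping out into a hypothetical pure function, `servoAngles` (not part of the original code), written in plain C++ so it can be checked on a desktop without an Arduino or the Servo library. It assumes the same constants as the sketch: a 90-degree centre, ±90 degrees for the arms and ±30 degrees for the legs.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper (not in the original sketch): reproduces the mapping in
// loop() from the two tilt readings to the four servo.write() angles, in the
// order {myservo1 (pin 3), myservo2 (pin 5), myservo3 (pin 6), myservo4 (pin 9)}.
std::array<int, 4> servoAngles(bool tilt1High, bool tilt2High) {
  const int angleArm = tilt1High ? 90 : -90;  // arm offset from the 90-degree centre
  const int angleLeg = tilt2High ? 30 : -30;  // leg offset from the 90-degree centre

  // Each pair gets mirrored angles: 90 + offset and 90 - offset
  std::array<int, 4> angles = {90 + angleArm, 90 - angleArm,
                               90 + angleLeg, 90 - angleLeg};

  // Every result stays inside the 0-180 degree range a standard servo accepts
  for (int a : angles) {
    assert(a >= 0 && a <= 180);
  }
  return angles;
}
```

With both sensors HIGH, for example, the arm servos are sent 180 and 0 degrees and the leg servos 120 and 60 degrees. Since each pair of limbs has only two possible poses, the physical puppet switches between them, while the on-screen puppet is eased toward its pose on the Processing side.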

Processing Code:

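import processing.sound.*;
import processing.video.*;
import processing.serial.*;

SoundFile sound;   // the puppet's voice (voice.mp3)
SoundFile sound1;  // background music (bgm.mp3)

Capture cam;
PImage cutout = new PImage(160, 190);  // the user's face, cropped from the webcam

String myString = null;
Serial myPort;

int NUM_OF_VALUES = 2; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int[] sensorValues;    /** this array stores values from Arduino **/

// Images of the puppet, its strings and the scene
PImage background;
PImage body;
PImage body2;      // body variant drawn over the user's face
PImage arml;
PImage armr;
PImage legl;
PImage legr;
PImage stringal;   // string of the left arm
PImage stringar;   // string of the right arm
PImage stringll;   // string of the left leg
PImage stringlr;   // string of the right leg

// Vertical positions of the four strings
float yal = 100;
float yll = 0;
float yar = 0;
float ylr = 0;

// Current arm rotations (radians) and leg positions (pixels)
float leftangle = PI/4;
float rightangle = -PI/4;
float leftleg = 570;
float rightleg = 570;

// Fraction of the remaining distance to the target covered each frame
float armLerp = 0.22;
float legLerp = 0.22;

// Not used below
float pointleftx = -110;
float pointlefty = 148;
boolean playSound = true;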

void setup() {
  size(850, 920);
  setupSerial();

  // Webcam used to capture the user's face during the first 15 seconds
  cam = new Capture(this, 640, 480);
  cam.start();

  background = loadImage("background.png");
  body = loadImage("body.png");
  arml = loadImage("arml.png");
  stringal = loadImage("stringal.png");
  armr = loadImage("armr.png");
  legl = loadImage("legl.png");
  stringll = loadImage("stringll.png");
  legr = loadImage("legr.png");
  stringar = loadImage("stringar.png");
  stringlr = loadImage("stringlr.png");
  body2 = loadImage("body2.png");

  sound = new SoundFile(this, "voice.mp3");
  sound1 = new SoundFile(this, "bgm.mp3");
  sound1.play();
  sound1.amp(0.3);  // background music at 30% volume
}

void draw() {
  updateSerial();
  printArray(sensorValues);  // debug output of the latest Arduino values

  if (millis() < 15000) {
    // First 15 seconds: show the webcam crop so the user can position their face
    if (cam.available()) {
      cam.read();
    }
    imageMode(CENTER);
    int xOffset = 220;
    int yOffset = 40;
    // Copy a 160x190 region of the camera frame into cutout
    for (int x = 0; x < cutout.width; x++) {
      for (int y = 0; y < cutout.height; y++) {
        color c = cam.get(x + xOffset, y + yOffset);
        cutout.set(x, y, c);
      }
    }
    background(0);
    image(cutout, width/2, height/2);
    fill(255);
    textSize(30);
    textAlign(CENTER);
    text("Place your face in the square", width/2, height-100);
    text(15 - (millis()/1000), width/2, height-50);  // countdown in seconds
  } else {
    // After 15 seconds: play the monologue and draw the animated puppet
    if (!sound.isPlaying()) {
      // play the sound
      sound.play();
    }
    imageMode(CORNER);
    image(background, 0, 0, width, height);
    image(legl, 325, leftleg, 140, 280);
    image(legr, 435, rightleg, 85, 270);
    image(body, 0, 0, width, height);
    if (millis() < 43000) {
      image(body, 0, 0, width, height);
    } else {
      // After 43 seconds: show the last captured face on the puppet and mute the voice
      image(cutout, 355, 95);
      image(body2, 0, 0, width, height);
      sound.amp(0);
    }
    arml();
    armr();
    //stringarmleft();
    image(stringal, 255, yal, 30, 470);
    image(stringll, 350, yll, 40, 600);
    image(stringar, 605, yar, 30, 475);
    image(stringlr, 475, ylr, 40, 600);

    int a = sensorValues[0];  // angleArm from Arduino (-90 or 90)
    int b = sensorValues[1];  // angleLeg from Arduino (-30 or 30)

    // Targets for the arm rotations and leg positions
    float targetleftangle = PI/4 + radians(a/2);
    float targetrightangle = -PI/4 + radians(a/2);
    float targetleftleg = 570 + b*1.6;
    float targetrightleg = 570 - b*1.6;

    // Ease each value part of the way toward its target every frame
    leftangle = lerp(leftangle, targetleftangle, armLerp);
    rightangle = lerp(rightangle, targetrightangle, armLerp);
    leftleg = lerp(leftleg, targetleftleg, legLerp);
    rightleg = lerp(rightleg, targetrightleg, legLerp);

    // Targets for the string positions, eased the same way
    float targetpointr = -100 - a*1.1;
    float targetpointl = -120 + a*1.1;
    float targetpointr1 = -50 + b*1.3;
    float targetpointr2 = -50 - b*1.3;
    yal = lerp(yal, targetpointr, armLerp);
    yar = lerp(yar, targetpointl, armLerp);
    yll = lerp(yll, targetpointr1, legLerp);
    ylr = lerp(ylr, targetpointr2, legLerp);
  }
}

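// Draw the left arm rotated by leftangle around the pivot (375, 342)
void arml() {
  pushMatrix();
  translate(375, 342);
  rotate(leftangle);
  image(arml, -145, -42, 190, 230);
  fill(255, 0, 0);
  noStroke();
  popMatrix();
}

// Draw the right arm rotated by rightangle around the pivot (490, 345)
void armr() {
  pushMatrix();
  translate(490, 345);
  rotate(rightangle);
  image(armr, -18, -30, 190, 200);
  popMatrix();
}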

void setupSerial() {
  printArray(Serial.list());
  // The port index (11) depends on the computer: pick the Arduino's port from the printed list
  myPort = new Serial(this, Serial.list()[ 11 ], 9600);
  myPort.clear();
  // Discard the first (possibly partial) line
  myString = myPort.readStringUntil( 10 );  // 10 = '\n' Linefeed in ASCII
  myString = null;
  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 );  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      // Split "angleArm,angleLeg" into integers
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
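`updateSerial()` and the `lerp()` calls in `draw()` form a small pipeline: parse one `angleArm,angleLeg` line, then ease the on-screen values toward targets derived from it. The C++ sketch below restates those two steps outside Processing as an illustration only. `parseLine`, `lerpf` and `easeOverFrames` are hypothetical names, and `parseLine` is stricter than the original in one respect: it drops a line if any field is not a number, whereas the original passes every field to `int()`.

```cpp
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: splits one line such as "90,-30" on commas and returns
// the integers only if there are exactly `expected` of them. Otherwise it
// returns an empty vector so the caller keeps its previous values, as
// updateSerial() does for a line with the wrong number of fields.
std::vector<int> parseLine(const std::string& line, std::size_t expected) {
  std::vector<int> values;
  std::stringstream ss(line);
  std::string field;
  while (std::getline(ss, field, ',')) {
    try {
      values.push_back(std::stoi(field));  // skips leading spaces, stops at '\r'
    } catch (const std::exception&) {      // std::invalid_argument or std::out_of_range
      return {};
    }
  }
  if (values.size() != expected) {
    return {};
  }
  return values;
}

// Same formula as Processing's lerp(): move `amt` of the way from start to stop.
float lerpf(float start, float stop, float amt) {
  return start + (stop - start) * amt;
}

// Applies lerpf() once per frame for `frames` frames with a fixed target,
// the way draw() updates leftangle, leftleg, yal and the other values.
float easeOverFrames(float value, float target, float amt, int frames) {
  for (int i = 0; i < frames; ++i) {
    value = lerpf(value, target, amt);
  }
  return value;
}
```

Because `armLerp` and `legLerp` are both 0.22, each frame closes 22% of the remaining gap, so after 10 frames a value has covered about 91.7% of the way to its target (100 × (1 − 0.78¹⁰) ≈ 91.7). If the sketch runs at Processing's default 60 frames per second, that is roughly a sixth of a second, which is why the drawing glides rather than jumps when the Arduino switches between its two fixed angles.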