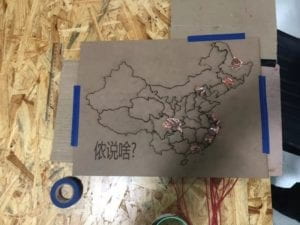

侬说啥? (What Are You Saying?)

CONCEPTION AND DESIGN:

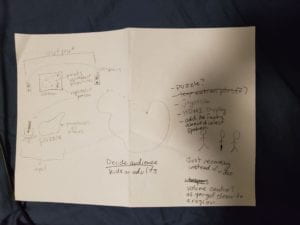

The original plan did not include a puzzle. When the class discussion raised the idea of designing the project for kids, I think that became the key turning point in how this project ended up being designed.

Going into the class discussion, I wasn’t sure how I was going to make this project interactive, but the idea of kids playing with it made total sense to me. When I was a kid, I went to my local Boys & Girls Club, and I eventually started working there in the summers and after school. With this background in making programs that kids enjoy, I knew exactly what to design to catch kids' eyes. I wanted all of the pictures to be bright and colorful, and I wanted the on-screen character that kids move around themselves to be someone they know (it’s Russell from Up).

The puzzle was another really good idea from the class discussion because it was a good way to keep kids engaged. I think that if we had just used a screen, kids would not have wanted to play with the project as much as they did at the final IMA show.

FABRICATION AND PRODUCTION:

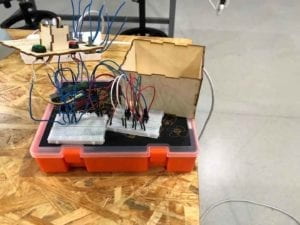

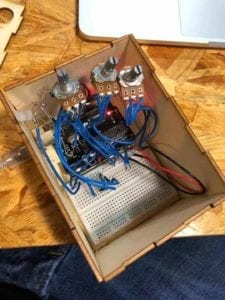

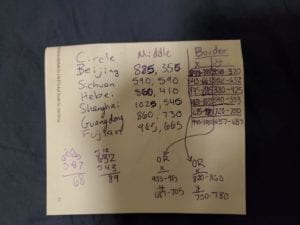

I think the most significant steps in the production process were making sure that people couldn’t move away once they started listening to one province’s dialect, and creating our own “buttons” with the capacitive sensor. Locking the movement contributed to the significance of this project: these dialects are often forgotten or overlooked, so forcing people to stop and pay attention is the opposite of what normally happens. Creating our own buttons was also an important step because they used the natural electrical capacitance of the human body to trigger the translation on screen.
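The two steps above could be sketched roughly as follows. This is a minimal illustration written in plain Python for brevity, not the Arduino and Processing code used in the project. It assumes the lock applies to the on-screen character, and every name in it (`Character`, `start_listening`, `is_touched`, `TOUCH_THRESHOLD`) is hypothetical. The threshold value is a placeholder, and the sketch assumes the capacitive reading rises when a finger touches the sensor.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold; a real value would come from calibrating the sensor.
TOUCH_THRESHOLD = 1000


def is_touched(reading: int, threshold: int = TOUCH_THRESHOLD) -> bool:
    """Treat a capacitive reading above the threshold as a button press."""
    return reading > threshold


@dataclass
class Character:
    """On-screen character position plus the province being listened to."""
    x: float
    y: float
    listening_to: Optional[str] = None  # None means no clip is playing

    def start_listening(self, province: str) -> None:
        # Entering a province starts its clip and freezes the character.
        self.listening_to = province

    def finish_listening(self) -> None:
        # Called when the clip ends; movement is allowed again.
        self.listening_to = None

    def move(self, dx: float, dy: float) -> bool:
        # Ignore joystick input while a clip is playing.
        if self.listening_to is not None:
            return False
        self.x += dx
        self.y += dy
        return True
```

Keeping the lock as a single field on the character means the drawing code only has to check one value to know whether joystick input should be ignored.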

From user testing, we learned to use headphones instead of speakers because the environment was too noisy for anyone to actually listen. We also learned that diagonal joystick motion had not been programmed in, so the character could only move side to side or up and down. Finally, we learned that Shanghai is too small on the map for Russell to fit inside, so there needs to be some way to trigger the sound as soon as Russell crosses over into it.
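One possible way to address the last two findings is sketched below, again in plain Python rather than the project's own code. It assumes the joystick reports each axis as an analog value from 0 to 1023 with a resting point near 512, and that each province can be approximated by a rectangle. The function names, the dead zone, the speed, and the padding are all hypothetical values chosen for illustration.

```python
from typing import Tuple


def axis_step(value: int, center: int = 512, dead_zone: int = 100) -> int:
    """Turn one analog axis reading into -1, 0, or 1."""
    if value > center + dead_zone:
        return 1
    if value < center - dead_zone:
        return -1
    return 0  # inside the dead zone: treat the stick as centered


def joystick_step(x_reading: int, y_reading: int,
                  speed: float = 3.0) -> Tuple[float, float]:
    """Read both axes independently so corner pushes give diagonal steps."""
    return axis_step(x_reading) * speed, axis_step(y_reading) * speed


def in_trigger_zone(px: float, py: float,
                    left: float, top: float,
                    width: float, height: float,
                    padding: float = 0.0) -> bool:
    """Check whether a point is inside a rectangle grown by `padding`.

    A positive padding lets a region smaller than the character still
    trigger its sound as soon as the character crosses over it.
    """
    return (left - padding <= px <= left + width + padding
            and top - padding <= py <= top + height + padding)
```

Because each axis is evaluated on its own, no separate diagonal case is needed. The padding only needs to be set for small regions such as Shanghai; larger provinces can leave it at zero.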

CONCLUSIONS:

I think this project fit my definition of interaction because it involved not only a human user but also communication between Arduino and Processing, and both were needed for it to succeed. In one respect, though, it doesn’t align with my definition: most of the time the human user is not interacting with the Processing part directly. They use the Arduino to interact with Processing, so there is no direct contact. Ultimately, my audience definitely interacted with my project, as it caught their eye and kept them engaged.

If I had more time, I would make the translations trigger when a puzzle piece is either put in or picked up and taken out. I couldn’t get that working the way I wanted, so I ultimately had to use the capacitive sensor. I really enjoyed having the freedom to work with my hands and to do my project on anything I wanted. So many people asked me if I was a Global China Studies major when they saw my project, but no, it was just something I was interested in. I don’t have to be in that major to have an interest in it.

I think this project was significant because a lot of the Chinese people who interacted with it thought deeply and reflected on their knowledge of their own dialect or, in the case of one parent, on why they felt they should not teach their children the dialect of their hometown. It was also interesting to hear my friends’ childhood stories, with the memories they cherished and the details that made it feel like home. For me, it was also very strange to hear such stark differences between the dialects, since most of the time I only compare Mandarin with one dialect rather than the dialects with each other. Through this comparison, I now understand why the Chinese government wanted to push the teaching of one unified language, since the dialects are so different.

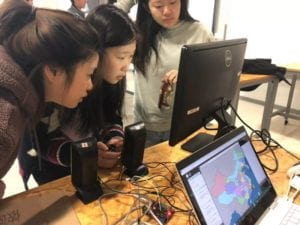

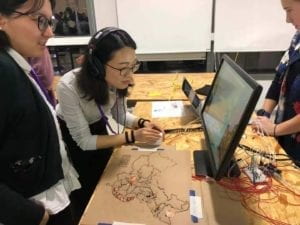

Photos from the IMA Show

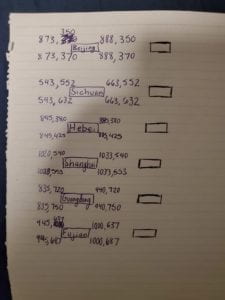

Translations and Recordings

Link to recordings because I couldn’t upload audio: Recordings