CREATIVE MOTION – LILLIE YAO – IMNI

CONCEPTION AND DESIGN:

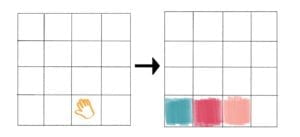

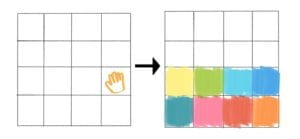

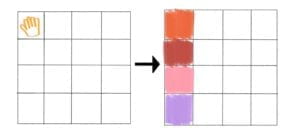

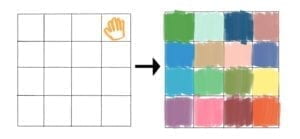

We wanted to create an interactive project where users would see reflections of themselves through a light matrix, instead of on something obvious like a camera or mirror. To make it clear what the users were doing, we decided to put the matrix (our output) right in front of the camera (our input). In the beginning we were going to place them side by side, but we realized that would take attention away from the matrix, since people tend to look at their own reflection more than at the light, no matter how obvious the light may be.

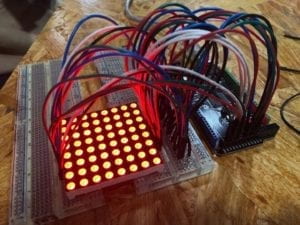

During our brainstorming period, we researched different light boards, since we knew we wanted a surface of lights rather than single LEDs. We also thought that programming and using 64 or more individual LEDs would be very complicated. We ended up using a Rainbowduino with an 8×8 LED Matrix Super Bright: the matrix serves as the light board, and the Rainbowduino takes the place of a separate Arduino and breadboard. Although we researched a bunch of different light sources, the only one available to us was the Rainbowduino and LED matrix. I’m sure there would have been better options, especially because we hoped to have a bigger LED board.

FABRICATION AND PRODUCTION:

During user testing, our classmates gave us feedback and suggestions that they thought would make our project better. Many of them wished the LED display were bigger, so that the interaction would feel like more of an experience rather than just a small board in front of their eyes. To address that, we tried to put multiple LED boards together to make a bigger screen. We soon realized that you can’t easily combine multiple LED boards and make them work together, because each board operates separately from the others. As stated in Conception and Design, we researched several types of LED boards, but many of the materials better suited to our project were not available to us in the short time after user testing.

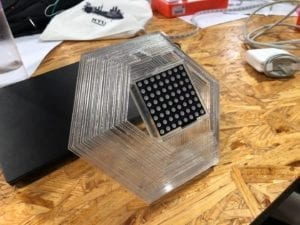

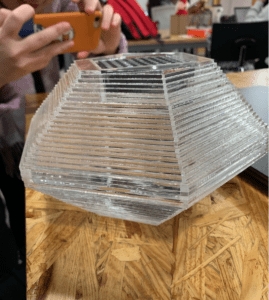

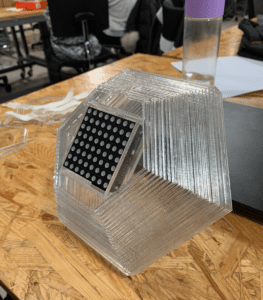

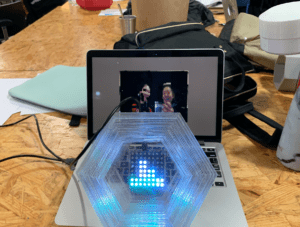

After realizing that we still had to use a single LED matrix board, we decided to design our fabricated piece so that it would magnify the LED board. We made a polygonal shape out of clear acrylic, with a box on one end that the LED matrix would fit snugly into. We chose clear acrylic for laser cutting because we thought a see-through look would suit our design better than any other material. In my mind, I pictured the LED lights reflecting off the different surfaces, which would look more interesting if the piece was see-through. We really didn’t think there was a better option, because the other laser-cutting materials were too dull and 3D printing wouldn’t have worked for a hollow design. After fabrication, a fellow (whose name I forgot) gave us the idea to put the matrix INSIDE our polygon so that the lights would reflect within it. This truly changed our project, because we were now able to use our fabrication design in a different and better way than before.

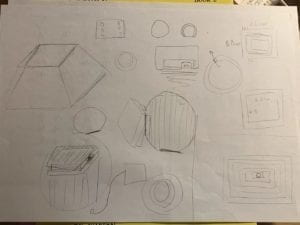

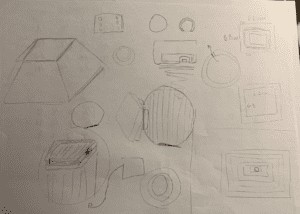

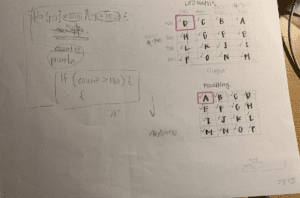

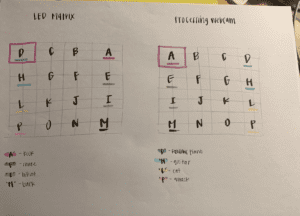

Sketching for fabrication:

Laser cutting:

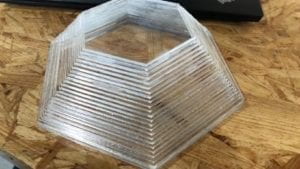

Original fabrication:

Changed fabrication so the light would shine through:

Another suggestion from user testing was to have the LED board face the user instead of facing up, because the board is hard to see when it is not facing the user. Therefore, we decided to make the fabricated piece a polygon, so that it would be easy to turn it on its side and angle it toward the user.

Lastly, we got a great suggestion to add sound to our project to make it more interesting: rather than just seeing light, users would also trigger different sounds as they move. After getting this feedback, we coded different sounds into our project that trigger when you move in different areas of the camera frame. This really changed our project, because we got to use both sound and light to create art, which in my opinion made our project more well rounded.
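As a rough illustration of how areas map to sounds, the lookup below restates the zone letters and sound file names that appear in the final Processing code at the end of this post. The class and method names (`ZoneSounds`, `soundFor`) are made up for this sketch and are not part of the project code.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical helper: the names ZoneSounds and soundFor are illustrative only.
final class ZoneSounds {
    // Zone letter -> sound file, as used in the Processing sketch in this post.
    // Zones missing from this table (G, F, K, J, O, N) only light up and stay silent.
    static final Map<Character, String> SOUNDS = Map.of(
        'D', "guitar.wav", 'C', "g4.wav", 'B', "f4.wav", 'A', "guitar.wav",
        'H', "a4.wav", 'E', "e4.wav",
        'L', "b4.wav", 'I', "d4.wav",
        'P', "c5.wav", 'M', "c4.wav");

    // Returns the sound file for a zone, or an empty Optional for a silent zone.
    static Optional<String> soundFor(char zone) {
        return Optional.ofNullable(SOUNDS.get(zone));
    }

    public static void main(String[] args) {
        for (char zone = 'A'; zone <= 'P'; zone++) {
            System.out.println(zone + " -> " + soundFor(zone).orElse("(silent)"));
        }
    }
}
```

In the actual sketch, each sound is also guarded with `isPlaying()` so that a note is not restarted on every frame while the user keeps moving in the same zone.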

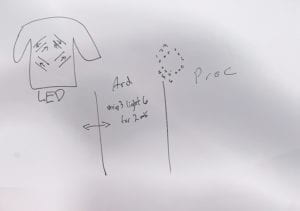

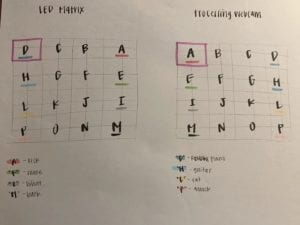

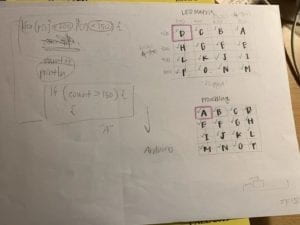

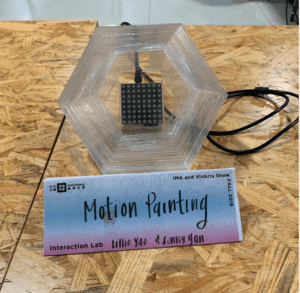

Sketches for LED board and pixels on camera:

After presenting our final project, we got feedback that some of the sounds were too much, and that it would be better to use only musical instruments instead of animal noises, snapshots, etc. Since we both really wanted to present our project at the IMA show, we changed all of the sounds to instrument sounds before the show, so that it would be easier on the ears and less confusing for users. I think this helped a lot, because many people really loved our project at the IMA show. Even Chancellor Yu got to see and interact with our project!

Chancellor Yu interacting with our project!!!!:

CONCLUSIONS:

The goal of my project was to create something that users could interact with and, at the same time, have fun with. We wanted it to have a direct input and output while still being something users could simply play with. As a creator, I felt it was really cool to make something that people can both interact with and enjoy.

My result aligns with my original definition of interaction because it has both an input and an output, and it keeps running whether or not it receives an input. The camera, which is the input, keeps checking for changes in motion whether or not something or someone is moving. My definition also stated that interaction is a continuous loop from input to output, so if there is an input, there will always be an output. In my project, enough motion in one area of the camera frame lights up the matching part of the matrix and, for most areas, triggers a sound at the same time.
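The input-to-output loop can be sketched in plain Java as follows. This is a simplified illustration, not the project code: it compares two frames stored as integer arrays, counts the pixels that changed, and turns the count into an "on" (capital) or "off" (lowercase) letter. The class name `MotionLoop`, its methods, and the tiny four-pixel frames are assumptions made for the example.

```java
// Hypothetical sketch of the loop: frame in, changed pixels counted, command letter out.
final class MotionLoop {
    // Marks every pixel whose colour differs from the previous frame.
    static boolean[] changed(int[] current, int[] previous) {
        boolean[] moved = new boolean[current.length];
        for (int i = 0; i < current.length; i++) {
            moved[i] = current[i] != previous[i];
        }
        return moved;
    }

    // Counts how many pixels were marked as changed.
    static int countTrue(boolean[] flags) {
        int count = 0;
        for (boolean flag : flags) {
            if (flag) count++;
        }
        return count;
    }

    // Capital letter means "light this zone", lowercase means "clear it".
    static char command(int movedCount, int threshold, char zone) {
        return movedCount > threshold
            ? Character.toUpperCase(zone)
            : Character.toLowerCase(zone);
    }

    public static void main(String[] args) {
        int[] previous = {1, 2, 3, 4};
        int[] current = {1, 9, 9, 4};   // two pixels changed
        int moved = countTrue(changed(current, previous));
        System.out.println(command(moved, 1, 'd'));  // prints D, since 2 > 1
    }
}
```

Because a letter is produced on every pass, the output side always receives something, even when nothing moved; that matches the "continuous loop" idea described above.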

The audience’s response was pretty close to what I expected. The only thing my partner and I didn’t really think about was that once users see their own reflection, they tend to focus on it instead of on the lights changing. I often found myself having to explain the project instead of letting users figure it out, and when they did figure it out, it took them a bit of time. Other than that, the reactions matched my expectations.

User Reactions:

If I had more time to improve my project, I would definitely work on the “experience” aspect that I wanted to implement. During our final project, Eric said that if we really wanted to make it an experience, we needed to factor in a lot of different things. To make it more of an experience, I would place speakers around to amplify the sound, project the camera input onto a bigger screen, and make the LED light board bigger.

From the setbacks and failures of my project, I learned that there’s always room for improvement, even if you think there isn’t enough time. I learned that there are always going to be projects and parts of other people’s work that are better than yours, but you should never compare other people’s capabilities to your own. After taking this class and seeing all of the work that I have done, I am very happy with all of my accomplishments. During our brainstorming period, I would never have thought that this project would come to life, but I’m really glad we could make it work! I’m really glad that we were able to create a fun and interactive work of art where users could see themselves and make art with light as well as music and sound!

Arduino/Rainbowduino Code:

#include <Rainbowduino.h>

// Last command byte received from Processing.
char valueFromProcessing;

void setup() {
  Rb.init();
  Serial.begin(9600);
}

unsigned char x, y, z;  // not used below

// Each capital letter lights one 2x2 block of the 8x8 matrix in a random colour;
// the matching lowercase letter turns that block off.
void loop() {
  while (Serial.available()) {
    valueFromProcessing = Serial.read();

    // Row 0 of blocks (y = 0)
    if (valueFromProcessing == 'D') {
      Rb.fillRectangle(0, 0, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'd') {
      Rb.fillRectangle(0, 0, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'C') {
      Rb.fillRectangle(2, 0, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'c') {
      Rb.fillRectangle(2, 0, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'B') {
      Rb.fillRectangle(4, 0, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'b') {
      Rb.fillRectangle(4, 0, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'A') {
      Rb.fillRectangle(6, 0, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'a') {
      Rb.fillRectangle(6, 0, 2, 2, 0x000000);
    }

    // Row 1 of blocks (y = 2)
    if (valueFromProcessing == 'H') {
      Rb.fillRectangle(0, 2, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'h') {
      Rb.fillRectangle(0, 2, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'G') {
      Rb.fillRectangle(2, 2, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'g') {
      Rb.fillRectangle(2, 2, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'F') {
      Rb.fillRectangle(4, 2, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'f') {
      Rb.fillRectangle(4, 2, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'E') {
      Rb.fillRectangle(6, 2, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'e') {
      Rb.fillRectangle(6, 2, 2, 2, 0x000000);
    }

    // Row 2 of blocks (y = 4)
    if (valueFromProcessing == 'L') {
      Rb.fillRectangle(0, 4, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'l') {
      Rb.fillRectangle(0, 4, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'K') {
      Rb.fillRectangle(2, 4, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'k') {
      Rb.fillRectangle(2, 4, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'J') {
      Rb.fillRectangle(4, 4, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'j') {
      Rb.fillRectangle(4, 4, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'I') {
      Rb.fillRectangle(6, 4, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'i') {
      Rb.fillRectangle(6, 4, 2, 2, 0x000000);
    }

    // Row 3 of blocks (y = 6)
    if (valueFromProcessing == 'P') {
      Rb.fillRectangle(0, 6, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'p') {
      Rb.fillRectangle(0, 6, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'O') {
      Rb.fillRectangle(2, 6, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'o') {
      Rb.fillRectangle(2, 6, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'N') {
      Rb.fillRectangle(4, 6, 2, 2, random(0xFFFFFF));
    }
    if (valueFromProcessing == 'n') {
      Rb.fillRectangle(4, 6, 2, 2, 0x000000);
    }
    if (valueFromProcessing == 'M') {
      Rb.fillRectangle(6, 6, 2, 2, random(0xFFFFFF));
      delay(1);
    }
    if (valueFromProcessing == 'm') {
      Rb.fillRectangle(6, 6, 2, 2, 0x000000);
    }
  }
}
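The letter protocol in the Arduino code above follows a regular pattern: the letters A to P number the sixteen 2×2 blocks row by row, with each row running right to left. The Java sketch below restates that pattern as arithmetic so it can be checked against the `fillRectangle` coordinates. It is only an illustration; the class `MatrixProtocol` and its `decode` method are hypothetical names and do not exist in the project.

```java
// Hypothetical decoder for the one-letter commands sent from Processing to the Rainbowduino.
final class MatrixProtocol {
    static final int CELL = 2;           // each block is 2x2 LEDs
    static final int CELLS_PER_ROW = 4;  // 8 LEDs across, 2 per block

    // Returns {x, y, on} for a command letter, or null for anything outside A-P / a-p.
    // x and y are the top-left LED of the block; on is 1 for capitals, 0 for lowercase.
    static int[] decode(char command) {
        boolean on = command >= 'A' && command <= 'P';
        boolean off = command >= 'a' && command <= 'p';
        if (!on && !off) {
            return null;
        }
        int index = Character.toUpperCase(command) - 'A';  // 0..15
        // Within a row the letters run right to left: D, C, B, A sit at x = 0, 2, 4, 6.
        int x = CELL * (CELLS_PER_ROW - 1 - index % CELLS_PER_ROW);
        int y = CELL * (index / CELLS_PER_ROW);
        return new int[] {x, y, on ? 1 : 0};
    }

    public static void main(String[] args) {
        for (char c : new char[] {'D', 'A', 'h', 'M'}) {
            int[] cell = decode(c);
            System.out.println(c + " -> x=" + cell[0] + " y=" + cell[1] + " on=" + cell[2]);
        }
    }
}
```

For example, `decode('M')` gives x = 6, y = 6, which matches `Rb.fillRectangle(6, 6, 2, 2, ...)` in the code above.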

Processing Code:

import processing.video.*;
import processing.serial.*;
import processing.sound.*;

Serial myPort;
Capture cam;
PImage prev;          // previous camera frame, used for comparison
boolean p[];          // p[i] is true when pixel i changed since the previous frame
int circleSize = 10;  // spacing, in pixels, between sampled points

SoundFile a4;
SoundFile b4;
SoundFile c4;
SoundFile c5;
SoundFile cello_slide;   // declared but not loaded or played below
SoundFile d4;
SoundFile e4;
SoundFile f4;
SoundFile g4;
SoundFile violin_slide;  // declared but not loaded or played below
SoundFile guitar;

void setup() {
  size(800,600);
  cam = new Capture(this, 800,600);
  cam.start();
  prev = cam.get();
  p = new boolean[width * height];
  // Index 2 selects the third serial port listed; change it to whichever port the Rainbowduino is on.
  myPort = new Serial(this, Serial.list()[2], 9600);
  a4 = new SoundFile(this, "a4.wav");
  b4 = new SoundFile(this, "b4.wav");
  c4 = new SoundFile(this, "c4.wav");
  c5 = new SoundFile(this, "c5.wav");
  d4 = new SoundFile(this, "d4.wav");
  e4 = new SoundFile(this, "e4.wav");
  f4 = new SoundFile(this, "f4.wav");
  g4 = new SoundFile(this, "g4.wav");
  guitar = new SoundFile(this, "guitar.wav");
}

void draw() {
  if (cam.available()) {
    cam.read();
    cam.loadPixels();
  }
  // Flip the image horizontally so the screen behaves like a mirror.
  translate(cam.width,0);
  scale(-1,1);
  image(cam,0,0);
  int w = cam.width;
  int h = cam.height;

  // Pass 1: mark every sampled point whose colour differs from the previous frame.
  for (int y = 0; y < h; y+=circleSize){
    for (int x = 0; x < w; x+=circleSize) {
      int i = x + y*w;
      if(cam.pixels[i] != prev.pixels[i]){
        p[i] = true;
      }
      else{
        p[i] = false;
      }
    }
  }

  // Pass 2: draw a square in the camera colour where a point and all eight
  // of its neighbours changed, and a black square everywhere else.
  for (int y = circleSize; y < h - circleSize; y+=circleSize){
    for (int x = circleSize; x < w - circleSize; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        fill(cam.pixels[i]);
      }
      else{
        fill( 0 );
      }
      rect(x,y,circleSize,circleSize);
    }
  }

  // Zone D: camera rows 0-150, columns 0-200; plays the guitar sound.
  int countD = 0;
  for (int y = circleSize; y < 150; y+=circleSize){
    for (int x = circleSize; x < 200; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countD++;
      }
    }
  }
  println(countD);
  if (countD > 100){
    myPort.write('D');
    if(!guitar.isPlaying()) {
      guitar.play();
    }
  }
  else {
    myPort.write('d');
  }

  // Zone C: camera rows 0-150, columns 200-400; plays g4.
  int countC = 0;
  for (int y = circleSize; y < 150; y+=circleSize){
    for (int x = 200+circleSize; x < 400; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countC++;
      }
    }
  }
  println(countC);
  if (countC > 100){
    myPort.write('C');
    if(!g4.isPlaying()) {
      g4.play();
    }
  }
  else {
    myPort.write('c');
  }

  // Zone B: camera rows 0-150, columns 400-600; plays f4.
  int countB = 0;
  for (int y = circleSize; y < 150; y+=circleSize){
    for (int x = 400+circleSize; x < 600; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countB++;
      }
    }
  }
  println("B: " + countB);
  if (countB > 100){
    myPort.write('B');
    if(!f4.isPlaying()) {
      f4.play();
    }
  }
  else {
    myPort.write('b');
  }

  // Zone A: camera rows 0-150, columns 600-800; plays the guitar sound.
  int countA = 0;
  for (int y = circleSize; y < 150; y+=circleSize){
    for (int x = 600+circleSize; x < 800; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countA++;
      }
    }
  }
  println(countA);
  if (countA > 100){
    myPort.write('A');
    if(!guitar.isPlaying()) {
      guitar.play();
    }
  }
  else {
    myPort.write('a');
  }

  // Zone H: camera rows 150-300, columns 0-200; plays a4.
  int countH = 0;
  for (int y = 150+circleSize; y < 300; y+=circleSize){
    for (int x = circleSize; x < 200; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countH++;
      }
    }
  }
  println(countH);
  if (countH > 100){
    myPort.write('H');
    if(!a4.isPlaying()) {
      a4.play();
    }
  }
  else {
    myPort.write('h');
  }

  // Zone G: camera rows 150-300, columns 200-400; light only, no sound.
  int countG = 0;
  for (int y = 150+circleSize; y < 300; y+=circleSize){
    for (int x = 200+circleSize; x < 400; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countG++;
      }
    }
  }
  println(countG);
  if (countG > 100){
    myPort.write('G');
  }
  else {
    myPort.write('g');
  }

  // Zone F: camera rows 150-300, columns 400-600; light only, no sound.
  int countF = 0;
  for (int y = 150+circleSize; y < 300; y+=circleSize){
    for (int x = 400+circleSize; x < 600; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countF++;
      }
    }
  }
  println(countF);
  if (countF > 100){
    myPort.write('F');
  } else {
    myPort.write('f');
  }

  // Zone E: camera rows 150-300, columns 600-800; plays e4.
  int countE = 0;
  for (int y = 150+circleSize; y < 300; y+=circleSize){
    for (int x = 600+circleSize; x < 800; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countE++;
      }
    }
  }
  println(countE);
  if (countE > 100){
    myPort.write('E');
    if(!e4.isPlaying()) {
      e4.play();
    }
  }
  else {
    myPort.write('e');
  }

  // Zone L: camera rows 300-450, columns 0-200; plays b4.
  int countL = 0;
  for (int y = 300+circleSize; y < 450; y+=circleSize){
    for (int x = circleSize; x < 200; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countL++;
      }
    }
  }
  println(countL);
  if (countL > 100){
    myPort.write('L');
    if(!b4.isPlaying()) {
      b4.play();
    }
  }
  else {
    myPort.write('l');
  }

  // Zone K: camera rows 300-450, columns 200-400; light only, no sound.
  int countK = 0;
  for (int y = 300+circleSize; y < 450; y+=circleSize){
    for (int x = 200+circleSize; x < 400; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countK++;
      }
    }
  }
  println(countK);
  if (countK > 100){
    myPort.write('K');
  }
  else {
    myPort.write('k');
  }

  // Zone J: camera rows 300-450, columns 400-600; light only, no sound.
  int countJ = 0;
  for (int y = 300+circleSize; y < 450; y+=circleSize){
    for (int x = 400+circleSize; x < 600; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countJ++;
      }
    }
  }
  println(countJ);
  if (countJ > 100){
    myPort.write('J');
  }
  else {
    myPort.write('j');
  }

  // Zone I: camera rows 300-450, columns 600-800; plays d4.
  int countI = 0;
  for (int y = 300+circleSize; y < 450; y+=circleSize){
    for (int x = 600+circleSize; x < 800; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int down = x + (y + circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      int downLt = (x - circleSize) + (y + circleSize) * w;
      int downRt = (x + circleSize) + (y + circleSize) * w;
      if(p[i] && p[up] && p[down] && p[left] && p[right] && p[upRt] && p[upLt] && p[downRt] && p[downLt]){
        countI++;
      }
    }
  }
  println(countI);
  if (countI > 100){
    myPort.write('I');
    if(!d4.isPlaying()) {
      d4.play();
    }
  }
  else {
    myPort.write('i');
  }

  // Zone P: camera rows 450-600, columns 0-200; plays c5.
  // Bottom row: the "down" neighbours are skipped because y + circleSize would fall outside the frame.
  int countP = 0;
  for (int y = 450+circleSize; y < 600; y+=circleSize){
    for (int x = circleSize; x < 200; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      if(p[i] && p[up] && p[left] && p[right] && p[upRt] && p[upLt]){
        countP++;
      }
    }
  }
  println(countP);
  if (countP > 100){
    myPort.write('P');
    if(!c5.isPlaying()) {
      c5.play();
    }
  }
  else {
    myPort.write('p');
  }

  // Zone O: camera rows 450-600, columns 200-400; light only, no sound.
  // Bottom row: the "down" neighbours are skipped because y + circleSize would fall outside the frame.
  int countO = 0;
  for (int y = 450+circleSize; y < 600; y+=circleSize){
    for (int x = 200+circleSize; x < 400; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      if(p[i] && p[up] && p[left] && p[right] && p[upRt] && p[upLt]){
        countO++;
      }
    }
  }
  println(countO);
  if (countO > 100){
    myPort.write('O');
  }
  else {
    myPort.write('o');
  }

  // Zone N: camera rows 450-600, columns 400-600; light only, no sound.
  // Bottom row: the "down" neighbours are skipped because y + circleSize would fall outside the frame.
  int countN = 0;
  for (int y = 450+circleSize; y < 600; y+=circleSize){
    for (int x = 400+circleSize; x < 600; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      if(p[i] && p[up] && p[left] && p[right] && p[upRt] && p[upLt]){
        countN++;
      }
    }
  }
  println(countN);
  if (countN > 100){
    myPort.write('N');
  }
  else {
    myPort.write('n');
  }

  // Zone M: camera rows 450-600, columns 600-800; plays c4.
  // Bottom row: the "down" neighbours are skipped because y + circleSize would fall outside the frame.
  int countM = 0;
  for (int y = 450+circleSize; y < 600; y+=circleSize){
    for (int x = 600+circleSize; x < 800; x+=circleSize) {
      int i = x + y*w;
      int up = x + (y - circleSize) * w;
      int left = (x - circleSize) + y*w;
      int right = (x + circleSize) + y*w;
      int upLt = (x - circleSize) + (y - circleSize) * w;
      int upRt = (x + circleSize) + (y - circleSize) * w;
      if(p[i] && p[up] && p[left] && p[right] && p[upRt] && p[upLt]){
        countM++;
      }
    }
  }
  println(countM);
  if (countM > 100){
    myPort.write('M');
    if(!c4.isPlaying()) {
      c4.play();
    }
  }
  else {
    myPort.write('m');
  }

  // Remember this frame so the next draw() call can compare against it.
  prev = cam.get();

  cam.updatePixels();

}
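The sixteen zone blocks in the Processing code differ only in their bounds, their letter, and whether the row below is checked. As a possible way to condense them, the plain-Java sketch below puts the neighbour test and the per-zone count into two helper methods. This is not the code we ran: `ZoneCounter`, `surrounded`, and `countZone` are hypothetical names, and the 40×40 grid in `main` is a toy stand-in for the 800×600 camera frame.

```java
import java.util.Arrays;

// Hypothetical helper class; none of these names come from the original sketch.
final class ZoneCounter {
    // True when the sample at (x, y) and its neighbours one step away all changed.
    // With checkBelow == false the three neighbours below are skipped (bottom row of zones).
    static boolean surrounded(boolean[] p, int w, int x, int y, int step, boolean checkBelow) {
        int i = x + y * w;
        int above = i - step * w;
        boolean sameRowAndAbove = p[i]
            && p[i - step] && p[i + step]                      // left, right
            && p[above] && p[above - step] && p[above + step]; // up, upLt, upRt
        if (!checkBelow) {
            return sameRowAndAbove;
        }
        int below = i + step * w;
        return sameRowAndAbove && p[below] && p[below - step] && p[below + step];
    }

    // Counts the qualifying samples in one zone. As in the sketch, the loops start
    // one step inside the zone's left and top edges.
    static int countZone(boolean[] p, int w, int x0, int x1, int y0, int y1,
                         int step, boolean checkBelow) {
        int count = 0;
        for (int y = y0 + step; y < y1; y += step) {
            for (int x = x0 + step; x < x1; x += step) {
                if (surrounded(p, w, x, y, step, checkBelow)) {
                    count++;
                }
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int w = 40, h = 40, step = 10;
        boolean[] p = new boolean[w * h];
        Arrays.fill(p, true);  // pretend every pixel changed
        System.out.println(countZone(p, w, 0, 40, 0, 30, step, true));   // prints 6
        System.out.println(countZone(p, w, 0, 40, 20, 40, step, false)); // prints 3
    }
}
```

Under these assumptions, zone D would correspond to `countZone(p, w, 0, 200, 0, 150, circleSize, true)` and zone M to `countZone(p, w, 600, 800, 450, 600, circleSize, false)`, with the result compared against the threshold of 100 as before.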