For this final project, I teamed up with Tya to build “The epic of us”. I learned a lot while making it, and I want to thank my professor, my wonderful partner Tya, and all the assistants who gave me so much help.

CONCEPTION AND DESIGN:

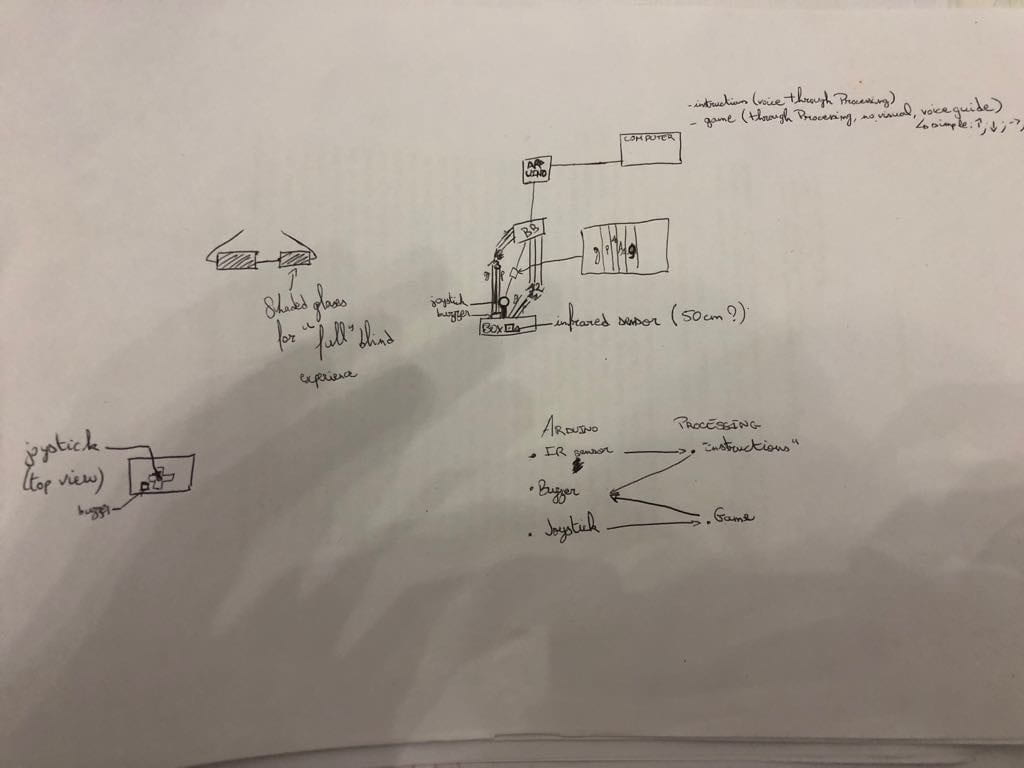

Our project is a board game in which two players role-play the leaders of two civilizations, and we wanted them to be tempted to attack each other in order to develop their own country. We first thought about using an FSR (force-sensitive resistor) to build a device that the user hits, so that the force applied determines the damage done to the other civilization. To use the FSR this way, we needed to figure out how to store the maximum value it senses during a certain period of time. However, we found the sensor hard to control (the same problem we met during the midterm project). Since we already had two buttons for the players to start the game, we decided to use the buttons for all interactions: the number of times a button is pressed decides the degree of damage.

To make the game more engaging, we put 48 LEDs on our board, which nearly drove us crazy. We first tried to use a chip called the 74HC595 to control 8 LEDs with 3 pins from the Arduino (we needed 48 LEDs and there were not enough pins), but after several days of struggling, it still did not work. Though really discouraged, we still wanted LEDs on our board to show the players' steps. Finally, we used an Arduino Mega, which has 54 digital pins in total, to connect the LEDs. When we first connected the LEDs, the Mega did not work. After asking a fellow, I learned that I should not connect pin 0 and pin 1 to LEDs, since the Mega uses them to talk with the computer. The LEDs were also not stable and the wires kept falling off, so we had to check and fix them frequently.

As for the material of the board, we first used cardboard, but we were not satisfied with it because it was a little soft and did not look delicate enough. In the end we used wood, and to make the board larger, we stuck two wooden boards together.
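The three small pieces of logic described above (holding the peak FSR value, turning button presses into damage, and keeping the LEDs off pins 0 and 1) can be sketched roughly as follows. This is not our actual sketch code: the function names, the damage cap, and the idea of wiring the 48 LEDs to consecutive pins starting at pin 2 are all assumptions made for illustration. It is written as plain C++ so the logic can be checked without a board; on the Arduino, the samples would come from `analogRead()` collected over a time window.

```cpp
#include <cassert>
#include <cstddef>

// Assumed layout: 48 step LEDs on consecutive Mega pins starting at pin 2,
// because pins 0 and 1 carry the serial link to the computer.
constexpr int kLedCount = 48;
constexpr int kFirstLedPin = 2;

// Peak hold: returns the largest reading seen in one window of samples.
// Analog readings are never negative, so 0 is a safe starting value.
int peakReading(const int* samples, std::size_t count) {
    int peak = 0;
    for (std::size_t i = 0; i < count; ++i) {
        if (samples[i] > peak) {
            peak = samples[i];
        }
    }
    return peak;
}

// Hypothetical rule: each button press adds one point of damage,
// clamped to the range 0..maxDamage.
int damageFromPresses(int presses, int maxDamage) {
    if (presses < 0) {
        return 0;
    }
    return presses < maxDamage ? presses : maxDamage;
}

// Maps a board step (0..47) to a Mega pin, skipping pins 0 and 1.
int ledPinForStep(int step) {
    assert(step >= 0 && step < kLedCount);
    return kFirstLedPin + step;
}
```

With this assumed wiring, step 0 lights the LED on pin 2 and the last step uses pin 49, which is still within the Mega's digital pins.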

FABRICATION AND PRODUCTION:

We laser cut many pictures onto our board to decorate it and to show the development of human societies. Turning all the pictures into a form that the machine can recognize was a very tedious job. I asked a fellow for help, and the link here was very useful. Because of the different versions of Illustrator and Photoshop, I first had difficulty deleting the white color in the pictures, but I later figured it out. At the time of user testing, we had failed to figure out how to use the 74HC595 chip, so we had no LEDs to show the players' steps. Instead, we used two physical characters and let the users move them by themselves. This exposed a problem: the game board was separate from the computer screen, so when users moved their characters they had to turn away to look at the board, which caused them to miss some instructions on the screen. We were therefore determined to add the LEDs and combine the screen and the board to create a better game, so we later laser cut a bigger board with holes in it for the LEDs. While watching the users play, we also found that the instructions changed too quickly for someone playing the game for the first time, so we later adjusted the timing to make them more readable.

CONCLUSIONS:

Earlier, I defined interaction as a cyclic process that requires at least two objects (animate or inanimate), each with its own input-analyze-output, where the whole process should be meaningful. Our project aims to make people aware that a better way to develop is to collaborate rather than fight with each other. If people fight each other for the resources they want, they will go astray together. Since it is a board game, it has a cyclic process and also a meaning behind it, which aligns with my definition of interaction. When people interacted with our project, they did not hesitate to attack each other, and they were surprised to see both civilizations destroyed in the end. Later, however, someone said that we did not give the players a clear instruction that they could choose not to attack. We wanted to let people choose between attacking and not attacking, but it turned out that they did not realize they had a choice. That is the point we need to think about further and improve. People usually do not do what you expect them to do, and that is why there is always room for improvement. If we had more time, we would make it clear to people that they can choose not to attack the other civilization. From my perspective, I am happy that we conveyed our idea to people. I hope our project brought both fun and deep thoughts.

The picture for our project: