My project: Cooperate! Partner!

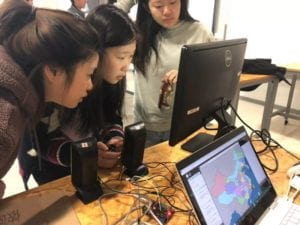

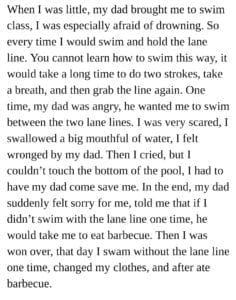

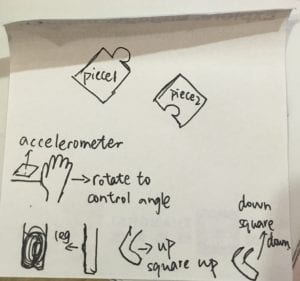

Concept: I got the idea for this project from a conversation with my friend A, who is also an IMA student and was working on another project. She looked pale and complained about her partner. This was not the first time I had heard complaints about partners. I had the same feeling during my first project in Communication Lab: it was supposed to be done by two people, but only one person did the whole thing. It made me feel worse to see other teams working happily and enjoying the process of teamwork. They split the work, and both people took part. Friend A and I are not the only ones who feel this way. When I researched further, I found that many IMA students had bad experiences with group projects. During the midterm and the final, there are always quarrels between partners and complaints about them. This made me wonder whether I could use a game to improve the group work experience and help people enjoy working with their teammates.

Then I began researching what leads to a bad experience. I interviewed some students in the studio. Friend B: "My partner never listened to me. This makes me really angry. We could have done better if he had listened and adopted some of my suggestions." Friend C: "I always do most of the work, and my partner did nothing. I feel it's unfair." Friend D: "I didn't participate as much as my partner expected because I really didn't know she needed me. I thought she was doing quite well on her own."

To sum up, I found the following causes of a bad teamwork experience. The first is the free-rider problem: one person does all the work and the other puts in no effort, which makes the person doing everything feel it is unfair. The second is a communication problem: partners lack communication and cooperation, and each sticks to their own ideas. Lack of communication has three bad results. First, the final project is often unsatisfying. Second, people feel their partners don't understand them, which often leads to quarrels and emotional breakdowns. Third, if you don't tell your partner you need help, he or she may not know it and cannot help you.

So I planned to design a game that forces people to engage and to cooperate with their teammates while still enjoying the game. Idea 1 was to use accelerometers so that user 1 rotates one piece of a puzzle and user 2 rotates another; by finding the right angles, the two pieces fit together to finish the puzzle. I planned to use a distance sensor to let each user move a piece up and down. By having each player rotate a piece to find the right angle and control its height, I wanted both of them to engage in the game, which corresponds to participating and doing your own part of the job in teamwork. By having them adjust the height and angle to form the puzzle, I wanted them to adapt to their teammate's changes and cooperate, which corresponds to communication and cooperation in teamwork. I also had the players use both their hands and legs so that they would lose balance and need to reach out to their partner for help, which also represents the communication part of a project.
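Idea 1 depends on two pieces of logic. The first turns each accelerometer reading into a rotation angle. The second decides when both pieces are at the right angle. The leftover `checkangle()` function in the code at the end of this post compares angles with `==` against 20 and 30, and a noisy sensor will rarely hit those values exactly. Below is a minimal sketch in plain Java (Processing sketches are Java underneath). It assumes each accelerometer reports two axis values in the same units. The class and method names and the 5-degree tolerance are hypothetical.

```java
final class PuzzleAlignment {
    // Hypothetical tolerance: how close (in degrees) a piece must be to its target angle.
    static final double TOLERANCE_DEG = 5.0;

    // Tilt angle in degrees from two accelerometer axes (assumed same units),
    // normalised to the range [0, 360).
    static double tiltDegrees(double ax, double ay) {
        double deg = Math.toDegrees(Math.atan2(ay, ax));
        return deg < 0 ? deg + 360.0 : deg;
    }

    // Smallest absolute difference between two angles, handling wrap-around at 360.
    static double angleDiff(double a, double b) {
        double d = Math.abs(a - b) % 360.0;
        return d > 180.0 ? 360.0 - d : d;
    }

    // Both pieces must be near their own target angle at the same time.
    static boolean piecesAligned(double angle1, double target1,
                                 double angle2, double target2) {
        return angleDiff(angle1, target1) <= TOLERANCE_DEG
            && angleDiff(angle2, target2) <= TOLERANCE_DEG;
    }

    public static void main(String[] args) {
        double a1 = tiltDegrees(1.0, 0.36);   // about 19.8 degrees
        double a2 = tiltDegrees(1.0, 0.58);   // about 30.1 degrees
        System.out.println(piecesAligned(a1, 20, a2, 30)); // true
    }
}
```

The check uses a tolerance and measures the difference around the circle, so 359° and 1° count as 2° apart. Both choices are what make it usable with real sensor noise.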

(idea 1)

(idea 2)

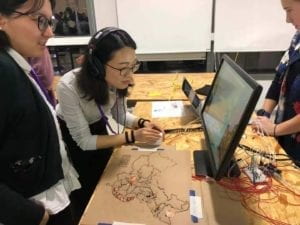

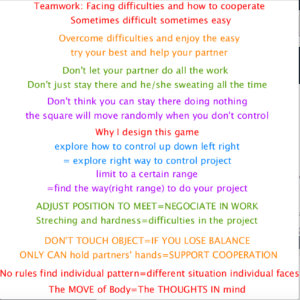

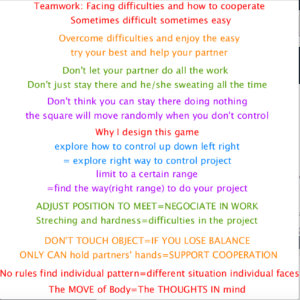

Then I did a user test. I found that users could still keep their balance quite well while rotating with their hands and raising their legs at the same time. They also said the game was too easy and should be more difficult. To make them lose balance, and so strengthen cooperation and communication, I switched to idea 2. This time, users bend or straighten their knee to move the square left and right, using a flex sensor. I kept the distance sensor, so each user also has to change the height of the square at the same time. To reinforce the idea that both players need to engage and take control of their own part of the work, I made the square harder to control. By adding different step sizes to x and y (for example x1 -= 30; x1 += 10; y2 += 23; y2 -= 10;), I make the user hold a certain position for longer, which is harder on the body. This links to the point that you need to control your own part well when working on a project. The added difficulty in keeping balance also pushes players to reach out to their partner for help, strengthening communication. I also set a threshold on the sensor value: the square only goes higher above that level, so if you don't reach that height, the square will not go up even if you raise your knee. I did this because to make a good project, you need to put in effort and reach a certain level. I am transforming mental work and mental stress into physical movement and physical stress. I hope that by doing this, players can move their bodies and exercise to relieve mental stress. At the same time, each player can feel more strongly that their partner is stretching and really needs their help and participation, so that both players feel the importance of teamwork and cooperation. If you don't cooperate, participate, and move up toward the top of the canvas, your partner has to put in a lot of effort to reach you and complete the game (project).
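The control scheme above is threshold-based rather than proportional. Each frame, a reading past a threshold nudges the square by a fixed step, and the two directions use different step sizes. Below is a minimal sketch of one axis in plain Java. It is modelled on the `if (sensorValues[1] < 5) x1 -= 30;` rules and the clamp to `width-100` in the Processing code at the end of this post. The `StepAxis` class is hypothetical and covers only the single-threshold case, not the 90-to-120 band used for the blue square's height.

```java
final class StepAxis {
    final int threshold;     // sensor value that separates the two directions
    final float stepAbove;   // added when reading > threshold
    final float stepBelow;   // added when reading < threshold (negative moves back)
    final float min, max;    // canvas limits for this axis
    float pos;

    StepAxis(int threshold, float stepAbove, float stepBelow,
             float min, float max, float start) {
        this.threshold = threshold;
        this.stepAbove = stepAbove;
        this.stepBelow = stepBelow;
        this.min = min;
        this.max = max;
        this.pos = clamp(start);
    }

    // Same idea as Processing's constrain(): keep v inside [min, max].
    float clamp(float v) {
        return Math.max(min, Math.min(max, v));
    }

    // One frame. A reading exactly equal to the threshold leaves the square
    // where it is, matching the strict < and > comparisons in the sketch.
    float update(int reading) {
        if (reading > threshold) pos += stepAbove;
        else if (reading < threshold) pos += stepBelow;
        pos = clamp(pos);
        return pos;
    }

    public static void main(String[] args) {
        // Red square's x-axis as in the sketch: value > 5 moves right by 10,
        // value < 5 moves left by 30, kept inside 0..550 on a 650-pixel canvas.
        StepAxis x1 = new StepAxis(5, 10f, -30f, 0f, 550f, 0f);
        for (int i = 0; i < 3; i++) x1.update(8);  // reading above 5: 10, 20, 30
        System.out.println(x1.update(2));           // reading below 5: 30 - 30 = 0.0
    }
}
```

The square loses 30 px per frame when the reading falls below the threshold but gains only 10 px per frame above it. Holding a position far from the start therefore means holding the pose, which is the physical effort the design asks for.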
Mental stress may be hard to see, but body sweat is very obvious. I hope players sense that if they don't participate and cooperate, their partners have to keep raising their legs, which is really painful, and that this makes them feel the importance of participation and cooperation. I also don't want to give only one absolute solution to this game. Each person is different, each team is different, and so are their ways of doing a project and their solutions. Different teams can find their own suitable, easy approach to come together. During the user test, however, there turned out to be an easiest, most energy-saving solution: gathering together at the bottom of the canvas, with no need to raise a leg that high.

(the easiest solution)

This also links back to the range setting above. If you find the easiest way of doing the project, you may not need to spend as much energy to reach that level, yet you can still achieve the same goal of coming together. But players need to explore to find this solution. It is just like doing a project: there are many ways to complete the work, but you need to explore to find the easiest one, or you may spend much more effort to reach the same goal.
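In code, the easiest solution comes from two details of the Processing sketch at the end of this post. First, `checkcollision()` requires `x1-x2==100 && y2-y1==0` exactly, and its `d` parameter is never used. Second, every position is clamped to `height-100`. Squares that move in different step sizes are unlikely to land on exactly the same row in mid-canvas, but pushing both against the bottom edge clamps both to 550. Below is a minimal sketch in plain Java that reproduces this and shows a hypothetical tolerance-based alternative. The class name and the 30 px tolerance in the usage are assumptions.

```java
final class Meeting {
    // Mirrors Processing's constrain(): keep v inside [lo, hi].
    static float constrain(float v, float lo, float hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    // The sketch's test: red (x1, y1) exactly 100 px right of blue (x2, y2), same row.
    static boolean exactMeet(float x1, float y1, float x2, float y2) {
        return x1 - x2 == 100 && y2 - y1 == 0;
    }

    // Hypothetical tolerant version that uses the otherwise unused d parameter.
    static boolean nearMeet(float x1, float y1, float x2, float y2, float d) {
        return Math.abs((x1 - x2) - 100) <= d && Math.abs(y2 - y1) <= d;
    }

    public static void main(String[] args) {
        float maxY = 650 - 100;          // height - 100 on a 650 x 650 canvas
        float y1 = 540, y2 = 323;        // rows that are not yet equal
        for (int frame = 0; frame < 20; frame++) {
            y1 = constrain(y1 + 10, 0, maxY);   // red sinks 10 px per frame
            y2 = constrain(y2 + 23, 0, maxY);   // blue sinks 23 px per frame
        }
        System.out.println(y1 + " " + y2);                     // 550.0 550.0
        System.out.println(exactMeet(550, y1, 450, y2));       // true
        System.out.println(exactMeet(550, 300, 450, 303));     // false: 3 px apart
        System.out.println(nearMeet(550, 300, 450, 303, 30));  // true
    }
}
```

A tolerance would make meeting anywhere on the canvas about equally easy. That would remove the hidden shortcut described above, so using it is a design choice rather than a pure fix.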

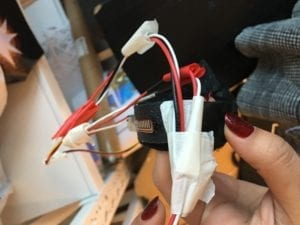

The sensors I used and the code

(the flex sensor on the knee controls the left and right movement of the square)

(the distance sensor measures the distance to the ground)

(taped up to get accurate readings)

User test: there were too many cables, which made it easy to trip and looked ugly. So I taped the cables together to keep users from tripping and falling, which also makes the setup look better.

User test: users said the Arduino and the cables looked ugly, so I later cut a box to hide the Arduino and breadboard and make the setup look nicer.

(this equipment makes it easier for users to put the sensor on their knee)

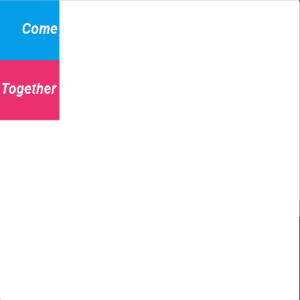

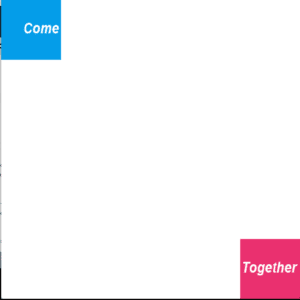

The layout: I made the background completely white with no other design. I had prototyped some backgrounds with cool effects, but users said the background confused them and made it hard to focus on the movement of the squares. So I made the background completely white to let users focus on the squares. I also made the squares red and blue, which are easy to recognize. The sentence "come together" works like a word puzzle, letting users know they need to come together to form the phrase. I placed the blue square, controlled by the left player, in the left corner so that player clearly understands which square is theirs. The red square starts from the bottom-right corner so the player on the right knows they are playing the red one. Based on user test feedback, some teams said the earlier version, in which both squares started from the top-left corner, was too easy. So I increased the distance between them to make players move more to come together.

(before start here)

(now start here)

Following user test feedback, users suggested I set up rules so they would know that the knee controls left and right, which square belongs to whom, and that they may only touch their partner to keep balance. So I added instructions before the game. They also asked about the meaning of the game and suggested I add an explanation at the end. I made both of them colorful to add visual effect.

I also added a piece of relaxing music that plays when the game finishes because: 1. Some users said they needed music to relax after the body stretching, which is difficult and tiring. 2. They needed a clear signal that they had finished the game, to cheer them up.
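The code at the end of this post tracks these screens with separate booleans: `stage3` for the introduction, `stage1` for play, and `stage2` for the ending (`stage4` is declared but unused). A `soundIsPlaying` flag makes the music start only once. Below is a minimal sketch of the same flow in plain Java using a single enum. The class and field names are hypothetical, and `musicStarts` is a counter standing in for `sound.play()`. The 15-second minimum play time comes from the sketch.

```java
final class GameStages {
    enum Stage { INTRO, PLAYING, ENDING }

    static final long MIN_PLAY_MS = 15_000;  // the sketch ignores meetings in the first 15 s

    Stage stage = Stage.INTRO;
    long startMs = 0;
    int musicStarts = 0;   // stands in for sound.play(), so the "play once" rule is visible

    // Called once per frame with the current time, whether the mouse is pressed,
    // and whether the squares currently meet.
    void step(long nowMs, boolean mousePressed, boolean squaresMeet) {
        switch (stage) {
            case INTRO:
                if (mousePressed) {
                    stage = Stage.PLAYING;
                    startMs = nowMs;
                }
                break;
            case PLAYING:
                if (squaresMeet && nowMs - startMs > MIN_PLAY_MS) {
                    stage = Stage.ENDING;
                    musicStarts++;   // entering ENDING is the only place the music starts
                }
                break;
            case ENDING:
                break;               // stay on the ending screen
        }
    }

    public static void main(String[] args) {
        GameStages g = new GameStages();
        g.step(1_000, true, false);    // click: INTRO -> PLAYING at t = 1 s
        g.step(5_000, false, true);    // meet too early: still PLAYING
        g.step(20_000, false, true);   // meet after 19 s: ENDING, music starts
        g.step(21_000, false, true);   // already ENDING: music is not restarted
        System.out.println(g.stage + " " + g.musicStarts);  // ENDING 1
    }
}
```

With one enum, the game can never be in two stages at once. Starting the music in the transition, rather than checking a flag every frame, also guarantees it plays only once.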

(so I added this cheerful, happy music at the end along with the explanation).

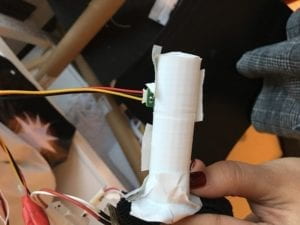

User test: some users wore thick trousers that interfered with the distance sensor. I 3D-printed this pole and added it to the leg equipment so the distance sensor measures the distance accurately.

Also, after the user test in class, I replaced the text with videos to make it more fun and more connected with the game.

The introduction video:

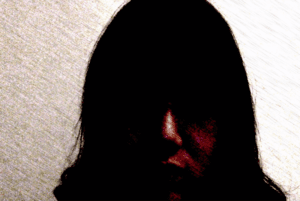

At the beginning, I used black and white to make the game look mysterious. I also hid my face to raise players' interest in playing and to carry the interaction into the game (made with a photo booth app, cited in the references).

The link for the video: https://drive.google.com/open?id=18HRZsgneyYCVyAYbskO4H3m__SwozWQc

(now)

(before)

The ending video:

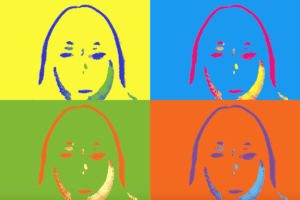

At the end, I made the video colorful to cheer players up and help them relax. I hope players feel happy when the world suddenly becomes colorful as they finish the game, and feel satisfied with completing it.

The link to the video: https://drive.google.com/open?id=1P5Yadwg5hcV5hcGjtE5GGbe-GT3kq4PJ

(now)

(before)

During the user tests, as shown in the pictures, I changed some of the design for three reasons: to make the sensors work better, to let users know how to play without my explanation, and to convey the meaning behind the project. But there are also problems I didn't solve. Take the users' own words as an example. Leah and Eric (together): "I like this game because the physical interaction is funny and the concept is good. But I wonder whether the game could be more difficult and have more levels?" Ryan: "It is a fun game, but it's really difficult to play." The first failure is that I couldn't find a difficulty level at which every user finds the game fun. During the user tests, teams that had worked together before found it easier to complete the game, while players who had just teamed up found it difficult and tiring. Given the concept, I want everyone to notice the importance of group work without spending too much energy. However, if I lower the difficulty, the game becomes less playful for other teams. Balancing the concept with keeping the game fun and playful is something I didn't do well. Second, my project can't really improve or change the teamwork situation. By playing this game, players have fun and may become aware of the problems through the concept, but the game may not help much when they are actually working on a project. The project raises awareness but doesn't solve the problem.

I hope that by playing this game, players become better teammates and have a better experience in group work. I hope I can show those who have no group work experience what group work is and how to do it well, and I hope to achieve this through a fun game. Even though the game can't solve the problem, I still hope students become aware that group work is important and that being a good partner is important.

My definition of interaction is letting players willingly and enjoyably keep responding to the different stages of my project, so that the process is continuous. The interaction in my project consists of two parts: the interaction between players, and the interaction between the players and the project. Players interact with each other to finish the game. In version 1 of my project, however, the interaction between the players and the project was weak because I used text for the instructions and the ending. The process was not continuous: the boring text was not connected with the players, and they were unwilling to interact further with the project. To strengthen this interaction, I made the videos to create a mysterious atmosphere so that players would be willing to participate and respond back and forth.

If I had more time, I would break the project into different levels. For example, level 1 would let each user learn how to control their own square. This not only makes the game easier for some players but also reinforces the concept that you need to control and do your own part of the project. Level 2 would then have them come together, reinforcing the concept of cooperation and communication. I could also add harder levels, changing the shape of the squares or adding accelerometers, to meet the demands of other players so that all players can enjoy the game. I still hope that by playing this game, there will be fewer quarrels between partners and every student will enjoy teamwork.
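The level plan above could be expressed as a small table of settings plus a rule for when each level is done. Below is a minimal sketch in plain Java. The level names, step sizes and tolerances are made-up illustrative values, not numbers from the project. `isDone()` is a hypothetical rule: level 1 is cleared when each player has steered their own square to its target, and later levels are cleared when the squares meet.

```java
final class Levels {
    // Illustrative settings for one level (all values are made up).
    static final class Level {
        final String goal;
        final float stepPush;        // px per frame while the sensor is past its threshold
        final float stepRelax;       // px per frame back the other way
        final float meetTolerance;   // px; how close the squares must be to count as together
        final boolean partnerNeeded; // false: practise controlling your own square

        Level(String goal, float stepPush, float stepRelax,
              float meetTolerance, boolean partnerNeeded) {
            this.goal = goal;
            this.stepPush = stepPush;
            this.stepRelax = stepRelax;
            this.meetTolerance = meetTolerance;
            this.partnerNeeded = partnerNeeded;
        }
    }

    static final Level[] LEVELS = {
        new Level("control your own square", 10, 10, 40, false),
        new Level("come together",           10, 30, 30, true),
        new Level("come together, harder",   15, 45, 10, true),
    };

    // Level 1 ends when both own targets are reached; later levels when the squares meet.
    static boolean isDone(Level level, boolean bothReachedOwnTarget, boolean squaresMeet) {
        return level.partnerNeeded ? squaresMeet : bothReachedOwnTarget;
    }

    // Move to the next level, staying on the last one once it is reached.
    static int next(int current) {
        return Math.min(current + 1, LEVELS.length - 1);
    }

    public static void main(String[] args) {
        int current = 0;
        if (isDone(LEVELS[current], true, false)) current = next(current);
        System.out.println(LEVELS[current].goal);  // come together
    }
}
```

Keeping the difficulty in data, rather than in hard-coded `if` blocks, would let harder levels be tuned without touching the game logic.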
I also learned that it is difficult to make a fun game and convey a serious concept at the same time. The next time I do a project, I need to think about how to make it respond to players' different interactions. That means not only making the game enjoyable, but also designing good responses to how players react to the serious concept.

References:

Ben Fry and Casey Reas (2001). Processing example: Bounce [Source code]. https://processing.org/examples/bounce.html

Ben Fry and Casey Reas (2001). Processing example: Collision [Source code]. https://processing.org/examples/bounce.html

Ben Fry and Casey Reas (2001). Processing example: Constrain [Source code]. https://processing.org/examples/bounce.html

Simple Booth (2019). Photo Booth Apps. www.simplebooth.com/products/apps.

Processing code:

```processing
// IMA NYU Shanghai
// Interaction Lab
// For receiving multiple values from Arduino to Processing

/*
 * Based on the readStringUntil() example by Tom Igoe
 * https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html
 */

import processing.serial.*;
import processing.sound.*;
import processing.video.*;

Movie myMovie1;  // introduction video
Movie myMovie2;  // ending video

String myString = null;
Serial myPort;

int NUM_OF_VALUES = 4; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
// sensorValues[0]: flex sensor, blue square left/right (x2)
// sensorValues[1]: flex sensor, red square left/right (x1)
// sensorValues[2]: distance sensor, blue square up/down (y2)
// sensorValues[3]: distance sensor, red square up/down (y1)
int[] sensorValues;

int x = 0;
int y = 0;

PImage img1;  // red square, drawn at (x1, y1)
PImage img2;  // blue square, drawn at (x2, y2)

float x1 = 0;
float y1 = 650;
float x2 = 650;
float y2 = 300;

SoundFile sound;

// Effectively zero: the easing lines in draw() barely move the squares,
// so the motion comes from the fixed steps at the end of draw().
float easing = 0.0000000000001;

// stage3 = introduction, stage1 = game, stage2 = ending (stage4 is unused)
boolean stage1 = false;
boolean stage2 = false;
boolean stage3 = true;
boolean stage4 = false;

boolean soundIsPlaying = false;
long gameStartTime = 0;

void setup() {
  size(650, 650);
  setupSerial();
  img1 = loadImage("red.png");
  img2 = loadImage("blue.png");
  background(255);
  sound = new SoundFile(this, "cello-f1.aif");
  myMovie1 = new Movie(this, "introduction.mov");
  myMovie1.play();
  // The ending video is loaded here but only started when the game ends.
  myMovie2 = new Movie(this, "ending.mov");
}

void draw() {
  updateSerial();
  printArray(sensorValues);  // debug: print the raw sensor readings
  background(255);

  //rect(x, y, 25,25);
  //ellipse(25,80,50,50);
  //rect(100, 100, 100, 100);
  //x1= map(sensorValues[1],74,102,0,width);

  // Ease each square toward a position mapped from its sensor, then keep it on the canvas.
  float targetX2 = constrain(map(sensorValues[0], 97, 110, 0, width), 0, width-100);
  float dX2 = targetX2 - x2;
  x2 += dX2 * easing;
  x2 = constrain(x2, 0, width-100);

  float targetX1 = constrain(map(sensorValues[1], 0, 50, 0, width), 0, width-100);
  float dX1 = targetX1 - x1;
  x1 += dX1 * easing;
  x1 = constrain(x1, 0, width-100);

  float targetY2 = constrain(map(sensorValues[2], 40, 216, 0, height), 0, height-100);
  float dY2 = targetY2 - y2;
  y2 += dY2 * easing;
  y2 = constrain(y2, 0, height-100);

  //println(sensorValues[2]);
  //println(x2);
  //println(sensorValues[0]);

  // The red square's height follows its own distance sensor (index 3).
  float targetY1 = constrain(map(sensorValues[3], 40, 216, 0, height), 0, height-100);
  float dY1 = targetY1 - y1;
  y1 += dY1 * easing;
  y1 = constrain(y1, 0, height-100);

  if (stage3 == true) {
    // Version 1 showed written rules here; the introduction video replaced them.
    //background(255);
    //textSize(20);
    //textAlign(CENTER, CENTER);
    //fill(255, 0, 0);
    //fill(255, 128, 0);
    //text("Square Blue for left player, Square Red for Right Player",325,25);
    //text("Rule1:MOVE YOUR KNEE!\nTO FIGHURE OUT HOW TO MOVE",325,75);
    //text("TRY STRANGE MOVEMENT Using Your KNEE!",325,125);
    //fill(76, 153, 0);
    //text("Rule2:RAISE OR BEND THE LEG !",325,160);
    //text("TRY!",325,195);
    //fill(172, 0, 255);
    //text("Rule3:THESE ARE MAGIC SQUARES ONLY WORK IN MAGIC RANGE!",325,240);
    //text("FIND THAT RANGE TO CONTROL THEM!",325,280);
    //text("OR THEY WILL JOKE YOU WITH WIERD MOVEMENTS",325,320);
    //textSize(30);
    //fill(255, 0, 0);
    //text("Rule4:DON'T TOUCH ANY OBJECT!!!",325,380);
    //text("TIPS:FIND YOUR OWN PATTERN TO",325,443);
    //text("CONTROL THE SQUARES!!!",325,500);
    //text("MAKE IT MOVE THE WAY YOU WANT IT TO!",325,550);
    //text("DON'T LET IT'S MOVEMENT CONFUSE YOU",325,590);
    //text("CONTROL IT!",325,630);
    //fill(255, 128, 0);
    if (myMovie1.available()) {
      myMovie1.read();
    }
    image(myMovie1, 0, 0, width, height);  // show the introduction video

    // Click to start the game.
    if (mousePressed) {
      stage1 = true;
      stage3 = false;
      gameStartTime = millis();
      myMovie1.stop();
    }
  }

  if (stage1 == true) {
    image(img1, x1, y1);
    image(img2, x2, y2);
    // A meeting only ends the game after at least 15 seconds of play.
    if (checkcollision(x1, y1, x2, y2, 30) && millis() - gameStartTime > 15*1000) {
      stage1 = false;
      stage2 = true;
      myMovie2.play();  // start the ending video when it is about to be shown
    }
  }

  if (stage2 == true) {
    // Play the ending music once.
    if (soundIsPlaying == false) {
      sound.play();
      soundIsPlaying = true;
    }
    background(255);
    // Version 1 showed a written explanation here; the ending video replaced it.
    //textSize(20);
    //textAlign(CENTER, CENTER);
    //fill(255, 0, 0);
    //text("Teamwork: Facing difficulties and how to cooperate\n Sometimes difficult sometimes easy",325,25);
    //fill(255, 128, 0);
    //text("Overcome difficulties and enjoy the easy\ntry your //best and help your partner",325,95);
    //fill(76, 153, 0);
    //text("Don't let your partner do all the work \nDon't just stay there and he/she sweating all the time",320,165);
    //fill(172, 0, 255);
    //text("Don't think you can stay there doing nothing\nthe square will move randomly when you don't control",320,232);
    //fill(255, 0, 0);
    //text("Why I design this game",320,283);
    //fill(0, 128, 255);
    //text("explore how to control up down left right\n= explore right way to control project",320,330);
    //fill(172, 0, 255);
    //text("limit to a certain range\n=find the way(right range) to do your project",320,390);
    //fill(153, 0, 153);
    //fill(76, 153, 0);
    //text("ADJUST POSITION TO MEET=NEGOCIATE IN WORK\nStreching and hardness=difficulties in the project",320,460);
    //fill(255, 128, 0);
    //text("DON'T TOUCH OBJECT=IF YOU LOSE BALANCE\n ONLY CAN hold partners'hands=SUPPORT COOPERATION",320,535);
    //fill(255, 0, 0);
    //text("No rules find individual pattern=different situation individual faces",325,590);
    //text("The stretch of Body=The strech of mind",320,625);
    if (myMovie2.available()) {
      myMovie2.read();
    }
    image(myMovie2, 0, 0, width, height);  // show the ending video
  }

  // Fixed-step controls with unequal step sizes, so a position is hard to hold.
  // These rules run every frame in every stage, not only during the game.
  // A reading exactly equal to a threshold leaves the square where it is.
  if (sensorValues[0] > 40) {
    x2 += 10;
  }
  if (sensorValues[0] < 40) {
    x2 -= 30;
  }
  if (sensorValues[1] < 5) {
    x1 -= 30;
  }
  if (sensorValues[1] > 5) {
    x1 += 10;
  }
  // Readings strictly between 90 and 120 move the blue square down, readings
  // below 90 move it up, and anything else leaves it in place.
  if (sensorValues[2] < 120 && sensorValues[2] > 90) {
    y2 += 23;
  }
  if (sensorValues[2] < 90) {
    y2 -= 10;
  }
  if (sensorValues[3] < 150) {
    y1 -= 10;
  }
  if (sensorValues[3] > 150) {
    y1 += 10;
  }
  //println("y2",y2);
}

//println(x2, y2);
//} if (sensor2>100) {
//  y2 += 10;
//}

// True only when the red square (x1, y1) is exactly 100 px to the right of the
// blue square (x2, y2) on the same row. The tolerance d is currently unused, so
// positions must match exactly; clamping at the canvas edges makes this easiest
// when both squares are pushed against the same edge.
boolean checkcollision(float x1, float y1, float x2, float y2, float d) {
  //return x2-x1 <=30 && y2-y1 <=30;
  return x1-x2 == 100 && y2-y1 == 0;
}

// Left over from idea 1 (accelerometer puzzle); not called anywhere.
boolean checkangle(float angle1, float angle2) {
  return angle1 == 20 && angle2 == 30;
}

void setupSerial() {
  //printArray(Serial.list());
  int PORT_INDEX = 1;  // index of the Arduino's port in Serial.list()
  myPort = new Serial(this, Serial.list()[PORT_INDEX], 9600);
  // WARNING!
  // If you get an error here, set PORT_INDEX to 0 and run again.
  // Then check the list of ports (uncomment printArray above),
  // find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and set PORT_INDEX to the index number of that port.
  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII
  myString = null;
  sensorValues = new int[NUM_OF_VALUES];
}

// Reads every complete line waiting on the port; each line should be
// NUM_OF_VALUES comma-separated integers. Lines with a different count are skipped.
void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
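`updateSerial()` expects the Arduino to send one line per reading: four comma-separated integers ending in a newline, for example `101,7,95,160`. It skips lines with the wrong number of fields. However, it converts each field with `int()` without checking it, so a line with the right number of fields but a garbled value is not rejected. Below is a minimal sketch in plain Java of a stricter parser. It rejects the whole line if any field is not an integer, so the previous frame's values stay in use. `SensorLine` and `parseInto` are hypothetical names.

```java
import java.util.Arrays;

final class SensorLine {
    static final int NUM_OF_VALUES = 4;

    // Parses one line such as "101,7,95,160\n" into `out`.
    // Returns false and leaves `out` untouched if the field count is wrong
    // or any field is not an integer.
    static boolean parseInto(String line, int[] out) {
        if (line == null) return false;
        String[] parts = line.trim().split(",");
        if (parts.length != NUM_OF_VALUES) return false;
        int[] parsed = new int[NUM_OF_VALUES];
        for (int i = 0; i < parts.length; i++) {
            try {
                parsed[i] = Integer.parseInt(parts[i].trim());
            } catch (NumberFormatException e) {
                return false;
            }
        }
        // Copy only after every field parsed, so a bad line never half-updates `out`.
        System.arraycopy(parsed, 0, out, 0, NUM_OF_VALUES);
        return true;
    }

    public static void main(String[] args) {
        int[] sensorValues = new int[NUM_OF_VALUES];
        parseInto("101,7,95,160\n", sensorValues);
        parseInto("10,7,9", sensorValues);        // half a line: ignored
        parseInto("101,x7,95,160", sensorValues); // corrupted field: ignored
        System.out.println(Arrays.toString(sensorValues)); // [101, 7, 95, 160]
    }
}
```

Because a bad line leaves the previous values in place, a corrupted reading from a loose cable cannot make a square jump for one frame.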