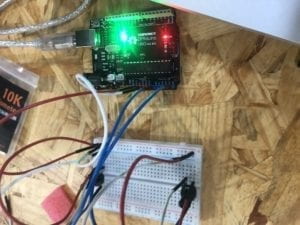

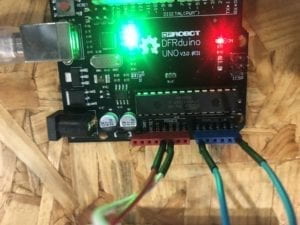

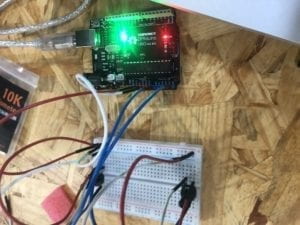

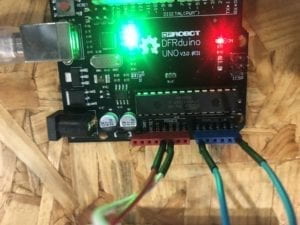

Project 1

For this project we re-created an “Etch-a-sketch” using two potentiometers (one controlling the Y axis and the other the X) to control the drawing. Starting from an outline of the code given in class, I changed the analogRead() calls so that I could read and map both sensors, and I adjusted the pin numbers. I did the same in Processing: I set the canvas size with size() in setup() and defined the x and y positions accordingly. I also added the draw() function to my Processing sketch. One challenge I encountered was keeping the previously drawn line on screen as the drawing continued. I was able to fix this and successfully complete the project.
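The earlier strokes stay visible because background() is called only once, in setup(), and each frame draws a segment from the previous pen position to the new one. The code below is a plain C++ model of that bookkeeping so it can be checked without a board; `Trail` and `Segment` are hypothetical names, not part of Processing. It also skips the very first reading: starting the previous position at (0, 0), as the sketch does with posx2/posy2, will likely draw one stray line from the top-left corner to wherever the pen actually starts.

```cpp
// One straight stroke from (x1, y1) to (x2, y2).
struct Segment {
    int x1, y1, x2, y2;
};

// Hypothetical helper modelling posx2/posy2: it remembers the last pen
// position and turns each new reading into a stroke from there.
class Trail {
public:
    // Returns true (and fills `out`) when a stroke should be drawn.
    // The first reading only sets the start point, so no stroke comes
    // from an uninitialised (0, 0) position.
    bool addPoint(int x, int y, Segment& out) {
        bool shouldDraw = hasPrevious;
        if (shouldDraw) {
            out = Segment{prevX, prevY, x, y};
        }
        prevX = x;
        prevY = y;
        hasPrevious = true;
        return shouldDraw;
    }

private:
    int prevX = 0;
    int prevY = 0;
    bool hasPrevious = false;
};
```

In Processing, the equivalent would be to call line() with the returned segment whenever addPoint() reports true, and never to clear the canvas inside draw().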

My code for Arduino

```cpp
/*
  Based on the AnalogReadSerial example (public domain):
  http://www.arduino.cc/en/Tutorial/AnalogReadSerial

  Reads two potentiometers (A0 = X axis, A5 = Y axis), scales each reading
  to 0-500 to match the 500x500 Processing canvas, and prints them as "x,y".
  Attach the center pin of each potentiometer to its analog pin and the
  outside pins to +5V and ground.
*/

// the setup routine runs once when you press reset:
void setup() {
  // initialize serial communication at 9600 bits per second:
  Serial.begin(9600);
}

// the loop routine runs over and over again forever:
void loop() {
  // read both potentiometers (raw range 0-1023)
  int sensorValue1 = analogRead(A0);  // X axis
  int sensorValue2 = analogRead(A5);  // Y axis

  // scale to the 0-500 range of the Processing canvas
  sensorValue1 = map(sensorValue1, 0, 1023, 0, 500);
  sensorValue2 = map(sensorValue2, 0, 1023, 0, 500);

  // send one line per reading: "x,y" followed by a line ending
  Serial.print(sensorValue1);
  Serial.print(",");
  Serial.print(sensorValue2);
  Serial.println();

  delay(1); // delay in between reads for stability
}
```
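Because map() works on whole numbers, it helps to see what the scaling actually does to the raw 0-1023 readings. The function below reproduces, in plain C++, the formula that the Arduino reference gives for map(); the name `arduinoMap` is hypothetical, chosen so it does not clash with anything else.

```cpp
// Same formula the Arduino reference documents for map():
// integer math, so any fractional part is truncated, not rounded.
long arduinoMap(long x, long inMin, long inMax, long outMin, long outMax) {
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}
```

For example, a mid-range reading of 512 becomes 250, and only a full-scale reading of 1023 reaches 500. Since a 500x500 canvas has pixels 0 to 499, mapping to 0-499 instead would keep the pen fully on screen; this is a suggestion, not something the original project did.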

My code for Processing

```java
// IMA NYU Shanghai
// Interaction Lab
// For receiving multiple values from Arduino to Processing

/*
 * Based on the readStringUntil() example by Tom Igoe
 * https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html
 */

import processing.serial.*;

String myString = null;
Serial myPort;

int posx2;  // previous x position of the pen
int posy2;  // previous y position of the pen

int NUM_OF_VALUES = 2;  /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int[] sensorValues;     /** this array stores values from Arduino **/

void setup() {
  size(500, 500);
  background(0);  // cleared only once, so earlier lines stay on screen
  setupSerial();
}

void draw() {
  updateSerial();
  printArray(sensorValues);

  // connect the current pen position to the previous one
  stroke(255);
  line(sensorValues[0], sensorValues[1], posx2, posy2);

  // remember the current position for the next frame
  posx2 = sensorValues[0];
  posy2 = sensorValues[1];
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[ 3 ], 9600);
  // WARNING!
  // If you get an error here, change the index 3 above to 0 and run again.
  // Then check the printed list of ports, find
  // "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and replace the index above with that port's index number.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil( 10 );  // 10 = '\n' Linefeed in ASCII
  myString = null;

  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 );  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      // only accept complete lines with one value per sensor
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
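The Arduino sends each reading as text such as `250,130` followed by a line ending (Serial.println() appends a carriage return and a newline), and updateSerial() trims it, splits it on the comma and only accepts lines with NUM_OF_VALUES fields. The C++ below models that check so it can be tested without a board; `parseLine` is a hypothetical name, and rejecting non-numeric fields outright is an assumption about how one might handle a line that was cut in half.

```cpp
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Rough model of updateSerial(): trim the line, split on commas, and accept
// it only if it has exactly `expected` numeric fields. On failure `values`
// is left untouched, so the last good reading is kept.
bool parseLine(const std::string& line, std::size_t expected,
               std::vector<int>& values) {
    // Trim surrounding whitespace, including the trailing "\r\n".
    const std::string ws = " \t\r\n";
    std::size_t start = line.find_first_not_of(ws);
    if (start == std::string::npos) return false;  // blank line
    std::size_t end = line.find_last_not_of(ws);
    std::string trimmed = line.substr(start, end - start + 1);

    std::vector<int> fields;
    std::stringstream ss(trimmed);
    std::string field;
    while (std::getline(ss, field, ',')) {
        try {
            fields.push_back(std::stoi(field));
        } catch (const std::exception&) {
            return false;  // empty or non-numeric field
        }
    }
    if (fields.size() != expected) return false;  // incomplete line
    values = fields;
    return true;
}
```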

Etch-a-sketch video

Project 2

For this project we used a speaker to create a musical instrument that makes a sound when the mouse is pressed. This one was more challenging for me because many different elements had to come together: we combined tones with mouse presses. I ran into several problems. First, my Arduino sketch would not upload to my board; a quick reset solved this. In the end, however, I was unable to make different parts of the screen reliably produce distinct sounds.
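The way the window is split into three bands can be written as a small pure function, which makes the band edges easy to check. This is a C++ sketch of the same idea as the Processing draw() function, assuming the top-to-bottom order 'L', 'M', 'H' used in the project code; `commandFor` is a hypothetical name. Each band is tested only against its upper edge, so the boundary rows at height/3 and 2*height/3 always belong to a band; strict `>` tests on the lower edges would make those rows send 'N' and briefly silence the speaker.

```cpp
// Which letter to send for the current mouse state:
// top third -> 'L', middle third -> 'M', bottom third -> 'H',
// and 'N' (silence) when the mouse is not pressed or outside the window.
char commandFor(bool mousePressed, int mouseY, int height) {
    if (!mousePressed || mouseY < 0 || mouseY >= height) return 'N';
    if (mouseY < height / 3) return 'L';
    if (mouseY < 2 * height / 3) return 'M';
    return 'H';
}
```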

My code for Arduino

```cpp
// IMA NYU Shanghai
// Interaction Lab
// This code receives one value from Processing to Arduino

int valueFromProcessing;

void setup() {
  Serial.begin(9600);
  pinMode(9, OUTPUT);  // speaker on pin 9
}

void loop() {
  // to receive a value from Processing: keep only the most recent byte
  while (Serial.available()) {
    valueFromProcessing = Serial.read();
  }

  // Not used: analogWrite(9, valueFromProcessing) would also put a PWM
  // signal on pin 9, the same pin tone() drives, and can interfere with it.

  if (valueFromProcessing == 'H') {
    tone(9, 3000);   // bottom third of the window: high pitch
  } else if (valueFromProcessing == 'M') {
    tone(9, 2000);   // middle third: medium pitch
  } else if (valueFromProcessing == 'L') {
    tone(9, 1000);   // top third: low pitch
  } else if (valueFromProcessing == 'N') {
    noTone(9);       // mouse not pressed: silence
  }

  // too fast communication might cause some latency in Processing;
  // this delay resolves the issue.
  delay(100);
}
```
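On the Arduino side, the same letters can be turned into frequencies with a lookup, and remembering the last letter lets the sketch call tone() or noTone() only when the requested pitch changes, instead of on every pass through loop(). The C++ below is a testable sketch of that optional refinement; `frequencyFor` and `ToneState` are hypothetical names, and treating unknown bytes as silence is an assumption (the if/else chain in the project code simply ignores them and keeps the previous tone).

```cpp
// Letter -> pitch table matching the sketch:
// 'L' = 1000 Hz, 'M' = 2000 Hz, 'H' = 3000 Hz, anything else = silence (0).
unsigned int frequencyFor(int command) {
    switch (command) {
        case 'L': return 1000;
        case 'M': return 2000;
        case 'H': return 3000;
        default:  return 0;  // 'N' or an unexpected byte
    }
}

// Remembers the last command so the speaker is only updated on a change.
struct ToneState {
    int lastCommand = -1;  // -1: nothing received yet

    // Returns true when `command` differs from the previous one.
    bool changed(int command) {
        if (command == lastCommand) {
            return false;
        }
        lastCommand = command;
        return true;
    }
};

// Hypothetical use inside loop() on the board (Arduino-only calls):
//   if (state.changed(valueFromProcessing)) {
//     unsigned int f = frequencyFor(valueFromProcessing);
//     if (f > 0) tone(9, f); else noTone(9);
//   }
```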

My code for Processing

```java
// IMA NYU Shanghai
// Interaction Lab
// This code sends one value from Processing to Arduino

import processing.serial.*;

Serial myPort;
int valueFromArduino;  // not used in this sketch
int High;
int Med;
int Low;

void setup() {
  size(500, 500);
  background(0);

  printArray(Serial.list());
  // this prints out the list of all available serial ports on your computer.

  myPort = new Serial(this, Serial.list()[3], 9600);
  // WARNING!
  // If you get an error here, change the index 3 above to 0 and run again.
  // Then check the printed list of ports, find
  // "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and replace the index above with that port's index number.
}

void draw() {
  // split the window into three horizontal bands
  High = height;
  Med = 2*height/3;
  Low = height/3;

  // to send a value to the Arduino: one letter per frame
  if (mousePressed && mouseY >= 0 && mouseY < Low) {
    myPort.write('L');  // top third
  } else if (mousePressed && mouseY >= Low && mouseY < Med) {
    myPort.write('M');  // middle third
  } else if (mousePressed && mouseY >= Med && mouseY < High) {
    myPort.write('H');  // bottom third
  } else {
    myPort.write('N');  // mouse not pressed: silence
  }
}
```

Video for project 2

Reflection

Although this recitation was particularly difficult, I found it interesting to use these two programs together to make fun and random projects. Both projects required a lot of help from friends and fellows, but in the end I was able to get both of them to (kind of) work.