Fragmented Faces

Our project is titled “Fragmented Faces” and aims to tackle the complex issue of identity and people’s connections with others.

PROJECT STATEMENT OF PURPOSE

Our project aims to demonstrate the complexity of human identity and how identity can be represented through faces, emotions, and expressions. It also aims to show how complex and disorienting it becomes to understand identity as a person interacts with more and more people. The project takes different images of people’s faces, cuts each image into three horizontal sections, and then randomizes the pieces to create new faces out of many mouths, eyes, and noses.
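
The slicing and randomizing described above can be sketched in plain Java, which is the language Processing is built on. This is only a minimal illustration, not our actual sketch: the class name `FaceMixer`, the helper names, the count of 8 source portraits, and the 600-pixel image height are all assumptions made for the example. In a real Processing sketch, each band could probably be cut out with `PImage.get(x, y, w, h)` and drawn with `image()`.

```java
import java.util.Random;

/** Minimal sketch: choose one source face for each horizontal band. */
class FaceMixer {
    static final int BANDS = 3; // top = eyes, middle = nose, bottom = mouth

    /** Returns {y, height} of a band for an image of the given height. */
    static int[] bandBounds(int imageHeight, int band) {
        int top = imageHeight * band / BANDS;
        int bottom = imageHeight * (band + 1) / BANDS;
        return new int[] { top, bottom - top };
    }

    /** Picks, independently for each band, which source face supplies it. */
    static int[] randomFace(int faceCount, Random rng) {
        int[] choice = new int[BANDS];
        for (int band = 0; band < BANDS; band++) {
            choice[band] = rng.nextInt(faceCount);
        }
        return choice;
    }

    public static void main(String[] args) {
        Random rng = new Random();
        int faceCount = 8;     // assumed number of source portraits
        int imageHeight = 600; // assumed portrait height in pixels
        int[] face = randomFace(faceCount, rng);
        for (int band = 0; band < BANDS; band++) {
            int[] bounds = bandBounds(imageHeight, band);
            System.out.println("band " + band + ": face " + face[band]
                + ", y=" + bounds[0] + ", height=" + bounds[1]);
        }
    }
}
```

Because each band is chosen independently, the same person can occasionally supply all three bands, which would show an unmixed original face. Whether to allow that is a design choice we have not settled.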

Our audience can be generalized to include everyone, as the project is focused on inclusion and understanding the identities around us. It can also be a more focused group: people who are trying to understand the identities of those around them and who are putting effort into understanding the complexity of identity. This project was mostly inspired by a few art pieces, two in particular: a video of an interactive simulation about identity crises and the complexity of self-identity, and a piece focusing on the fragmentation and complexity of identity.

Inspirational identity artwork

PROJECT PLAN

This project aims to create dialogue and raise questions about identity and the connections people make based on identity. Each connection is represented by a light-up pressure pad with a hand silhouette. In the simulation, or game, the user stands in front of the Processing screen and 5-6 pressure pads, each with a handprint that lights up. The handprints light up in a sequence that gets faster and faster, and people try to keep up by pressing their hand against the lit handprint. Each time a button is pressed, the faces randomize. At first, when the sequence is easy to follow, it will be easy to connect with each hand and watch each identity change into a new face. As the sequence gets faster and more complex, people will be unable to keep up with each individual interaction and the identities appearing on the screen. Once the sequence becomes impossibly complex, all the hands will blink in unison and then turn off, except the center hand, which will remain lit. When the user presses it, all the original images of the faces will appear together on the screen.
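
One way to think about the accelerating light sequence is as a list of steps, each naming a pad and how long it stays lit, where the duration shrinks by a fixed factor until it drops below a minimum. The plain Java sketch below is only an assumption about how this could work, not our final Arduino code: the class name `PadSequence`, the 1000 ms starting interval, the 200 ms minimum, and the 0.8 speed-up factor are all placeholder values chosen for illustration. The blinking finale and the center hand are not modeled here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Minimal sketch: plan a pad sequence whose steps get shorter and shorter. */
class PadSequence {
    /** One step: which pad lights up and for how many milliseconds. */
    static class Step {
        final int pad;
        final int intervalMs;

        Step(int pad, int intervalMs) {
            this.pad = pad;
            this.intervalMs = intervalMs;
        }
    }

    /** Builds steps until the interval would fall below minMs. */
    static List<Step> build(int padCount, int startMs, int minMs,
                            double speedUp, Random rng) {
        if (padCount <= 0 || minMs <= 0 || speedUp <= 0 || speedUp >= 1) {
            throw new IllegalArgumentException(
                "need padCount > 0, minMs > 0, and 0 < speedUp < 1");
        }
        List<Step> steps = new ArrayList<>();
        double interval = startMs;
        while (interval >= minMs) {
            steps.add(new Step(rng.nextInt(padCount), (int) Math.round(interval)));
            interval *= speedUp; // each following step is shorter
        }
        return steps;
    }

    public static void main(String[] args) {
        // All values here are assumed placeholders, not measured settings.
        List<Step> steps = build(5, 1000, 200, 0.8, new Random());
        for (Step step : steps) {
            System.out.println("light pad " + step.pad
                + " for " + step.intervalMs + " ms");
        }
    }
}
```

When the list runs out, the sequence has become too fast to follow, which is the point where the hands would blink in unison and leave only the center hand lit.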

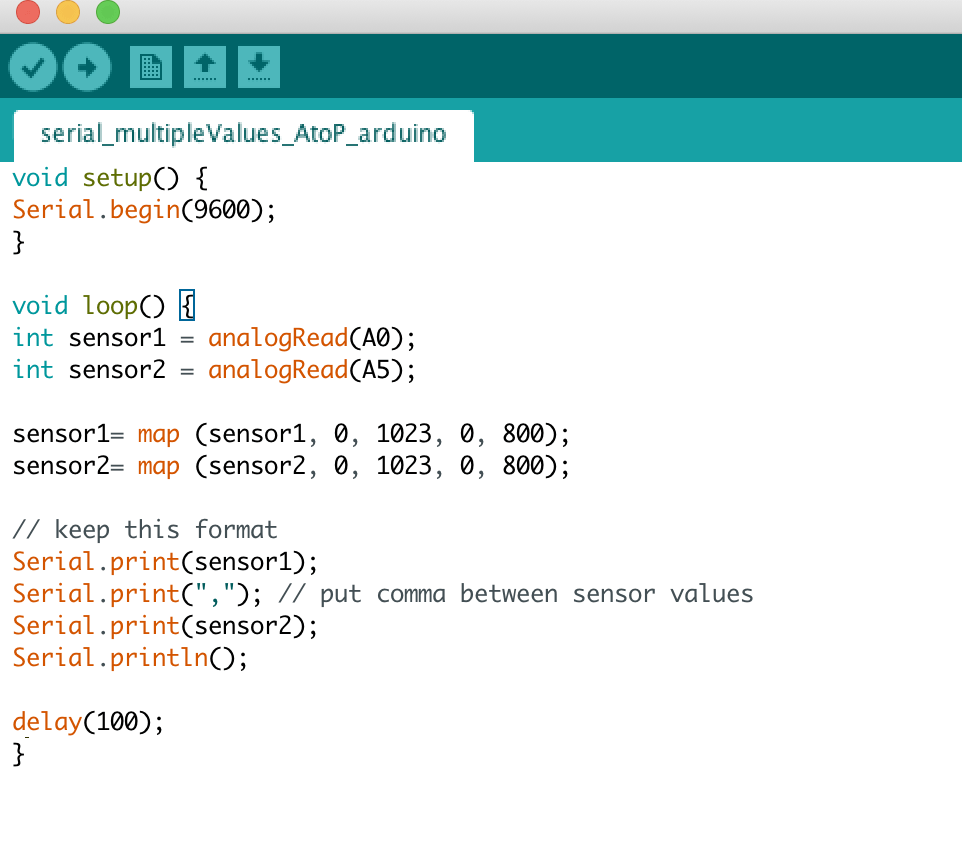

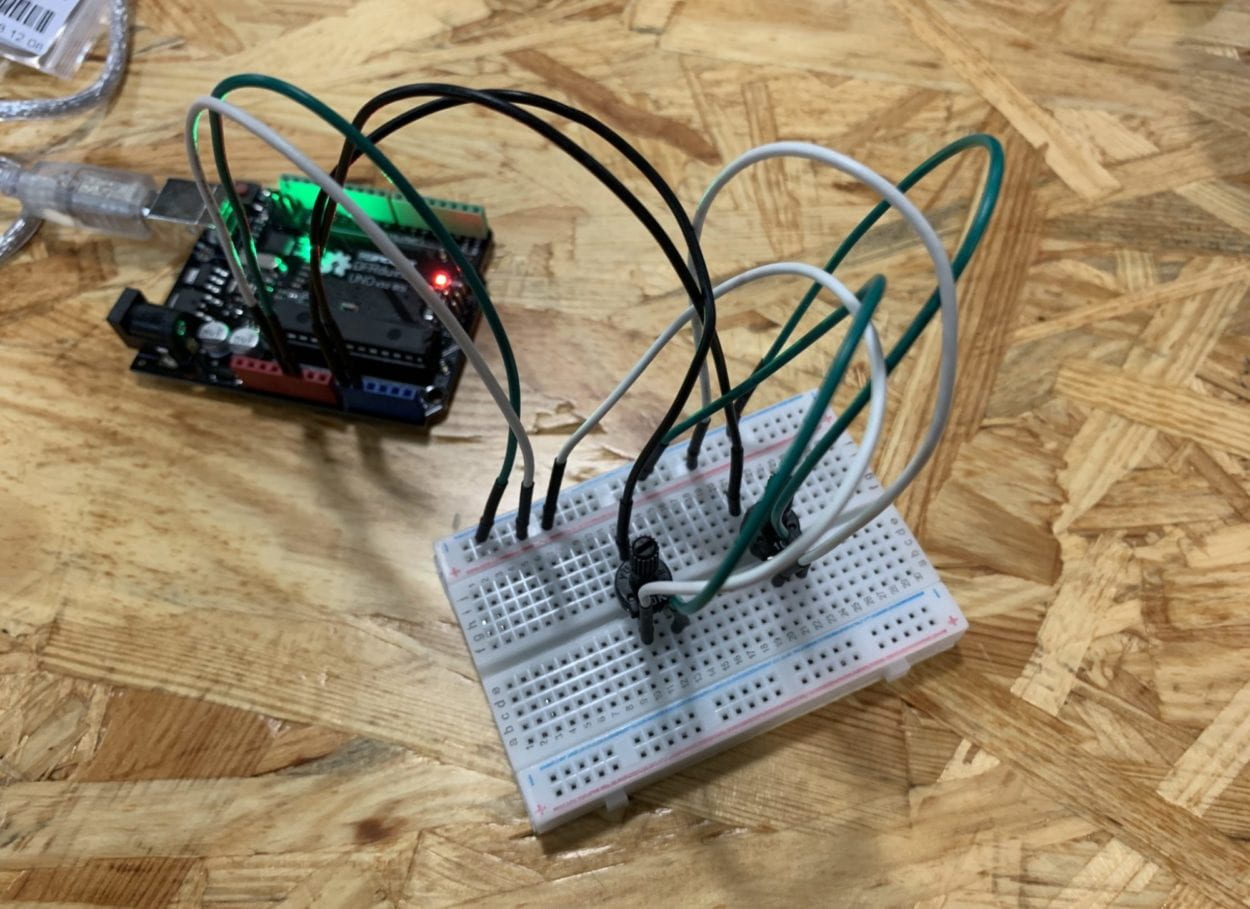

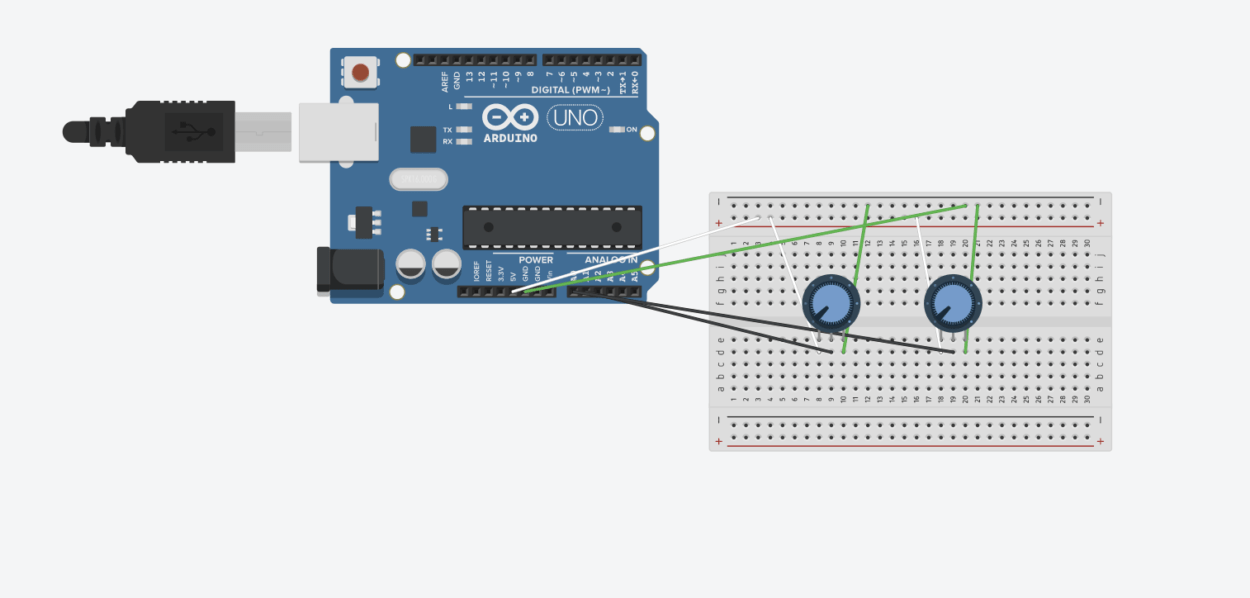

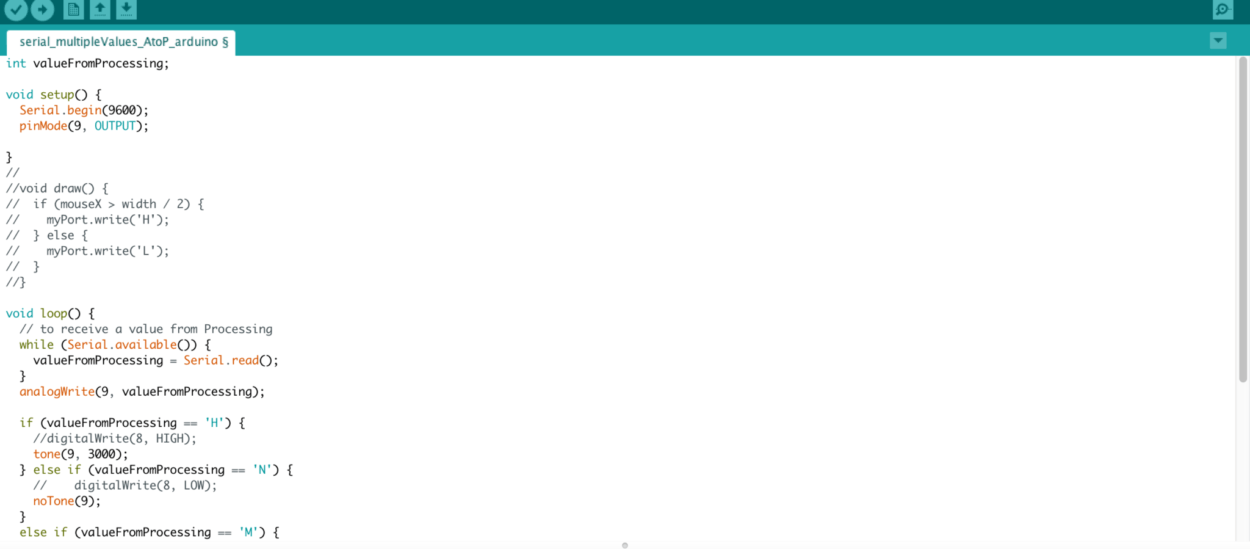

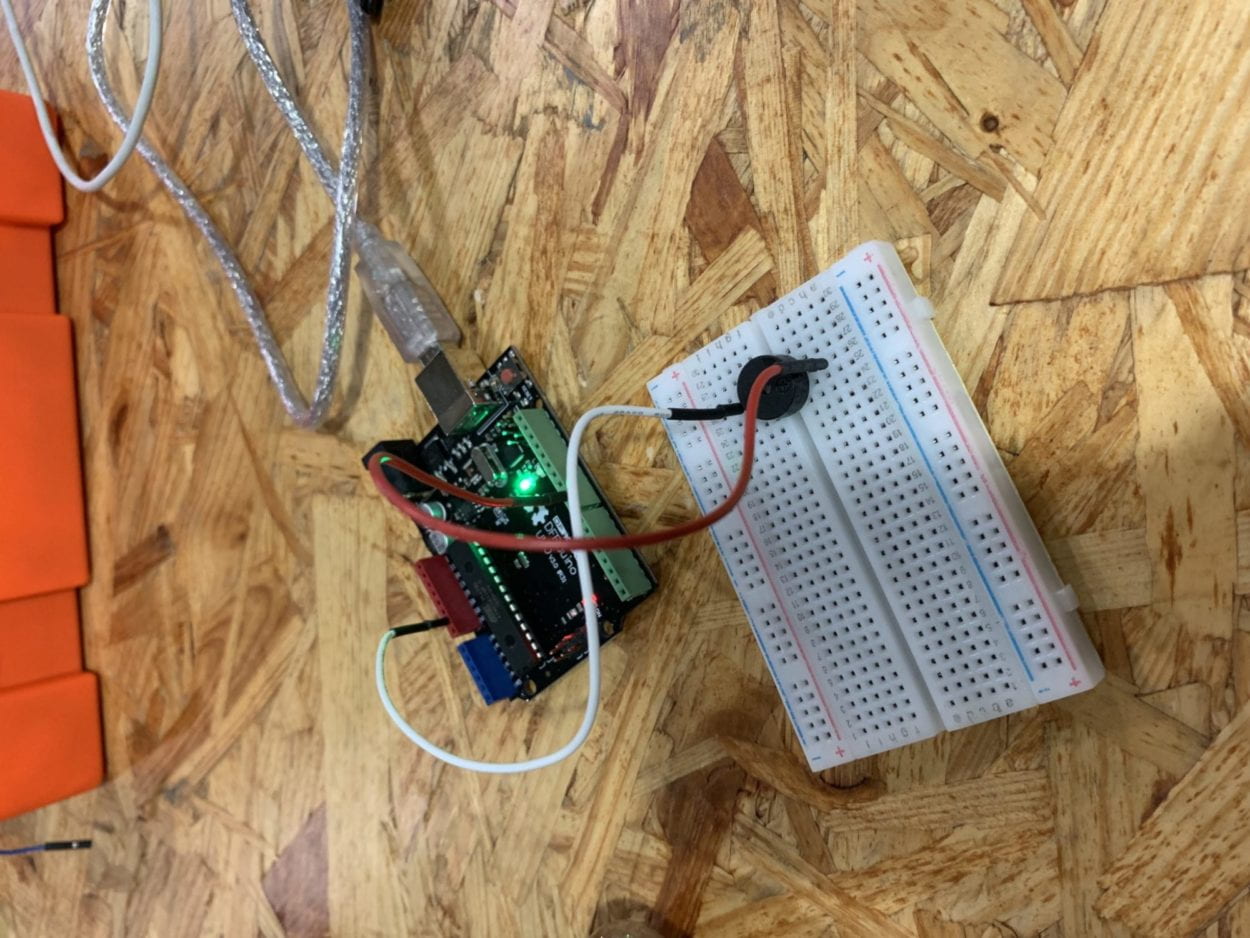

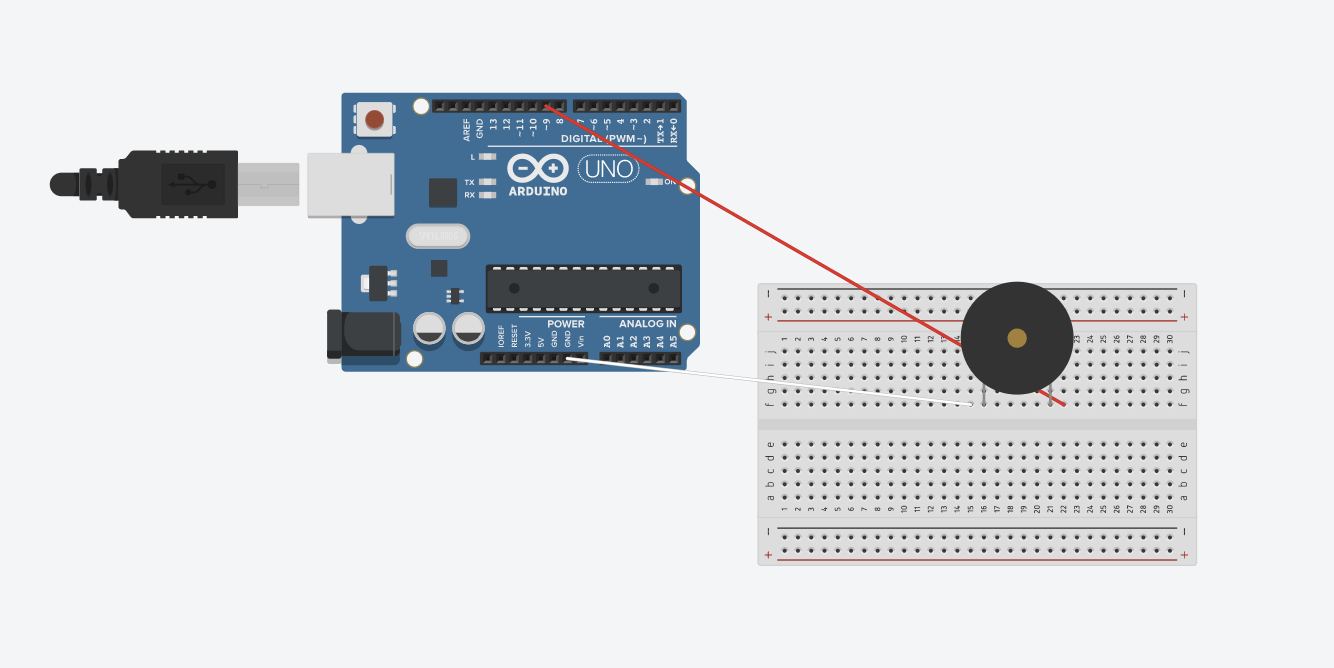

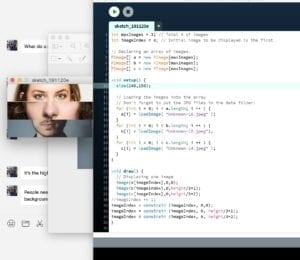

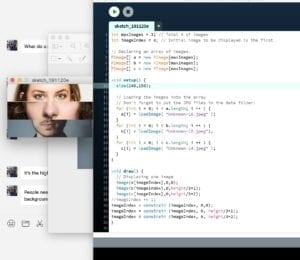

To complete this project, we will start with Processing and create a simple random face generator with one button and different images. I have already created the base code for that, which shows three images of faces correctly proportioned but does not yet randomize among multiple options. After we finish the basic Processing code for random images, we will add multiple buttons to control the randomization of the faces. This will be the prototype for the simulation. Next, we will program the LEDs to flash separately and simultaneously in Arduino. After figuring out the two pieces separately, we will combine the code and add LEDs to the buttons, which we will build out of cardboard or wood and plastic so the LEDs can show the hands lighting up. We would like to finish the basic Processing code for the random faces this weekend and add the signal communication and LED sequence by the end of next week. Once the LEDs and the button-controlled Processing animation are combined, the work will mostly focus on creating the environment and setup of the game, including the hand buttons.

The work-in-progress code for assembling random faces in Processing.

Examples of the light-up handprints that will also serve as buttons/pressure plates to cause the faces to change.

CONTEXT AND SIGNIFICANCE

Originally, I researched projects on light and sound design, including an animated storytelling wall. That project led me to think about the animation in Processing, which has gone through different variations but ultimately involves fragmented pieces coming together to create a whole. At first, I wanted to animate a process that I had seen artists render in still images: people’s faces breaking into small pixel-like units, with the piece becoming more and more abstract. After talking it over, we decided that an easier and more straightforward way to represent fragments forming a whole would be to use three horizontal panels to create a single image. Our project was influenced more by non-interactive art pieces that represent identity, as it was harder to find interactive art exhibits that focus on issues of identity or human connection. It was also influenced in part by the Piano Dancer project proposal I suggested, as our user interface will be quite similar, with light-up handprints acting as buttons much like the light-up piano keys. This project follows our expanded definition of interaction, which goes beyond the simple understanding of two actors communicating through input, processing, and output discussed in “What Exactly is Interactivity?” Interaction involves the entire experience, including people thinking about a project and possibly creating a dialogue about it. As I found in my research, including ideas from TATE, interactive art is also a method for artists to make connections with the environment and their audience, enhancing the interactive elements coming both from the audience and from the machine or art piece. Each art piece is created by an artist who has an intention for the piece and its audience, whether or not that intention is completely understood and achieved.
Combining all the definitions of interaction I researched, a successful interactive experience consists of two actors who communicate through a series of inputs, processing, and outputs; beyond that, the overall experience enhances the interaction, including by pushing the audience to think differently and more specifically about an issue or concept. Every interactive experience exists at a different level of interaction. Some are simple exchanges between two parties that meet the basic requirements of input, processing, and output, while others are closer to human interactions, which are always changing and evolving in response to each actor’s last action and involve complex mental as well as physical interaction. With our midterm project, and now with our final project “Fragmented Faces,” we hope to push our audience toward a more complex level of interaction that includes contemplating the work and its meaning, ideally creating a dialogue or thought process about what identity means and how easy it is to stop understanding others’ identities the more interactions and connections you have with people. The confusion of the hands and the light/button sequence represents the chaos of understanding identity. We hope this project’s uniqueness will come from creating a new dialogue or prompting people to think about identity and the connections they make with people. Although face swapping and randomizing have been done before in other projects, we have focused ours specifically on what identity is and what it means to connect with people. We added the simulation/game of light-up hands to create a more complex and ever-changing interaction between the audience and the project. Many of the projects we looked at while researching identity artwork showed the fragmentation and confusion of self-identity and of others’ identities, and much identity artwork is left largely open to interpretation.
Our project creates an interactive and changing interface for people to engage with a changing and confusing identity, building on those artists’ work around identity. I especially appreciated the chaos and complexity of self-identity represented in this simulation (mentioned and linked above too). Our main goal with the completed project is to spark either spoken conversation and dialogue or internal thought and contemplation about what identity means to different people. With how chaotic life is and how many people one meets during it, we don’t truly understand the identity of most people, because we don’t take the time to think about each individual. In the future, this project could be built upon to explore and complicate the concept of self-identity by capturing participants’ faces and including them in the random face generator. In particular, our message fits an audience of people who are interested in exploring the concept of identity and understanding the people around them, so that they can better talk and interact with others in the future. This goal especially fits the mindset of many NYUSH students, who come to this school to make connections and meet people from all over the world with very different backgrounds. But the longer these students remain complacent, talking to the same people from the same backgrounds, the less they think about people from different backgrounds with different identities.