Snack Facts – Eleanor Wade – Marcela Godoy

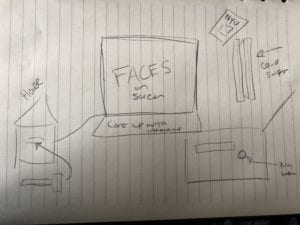

CONCEPTION AND DESIGN:

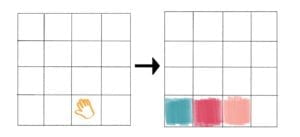

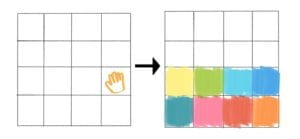

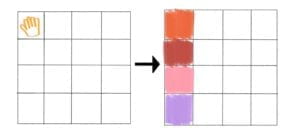

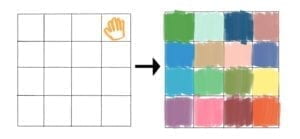

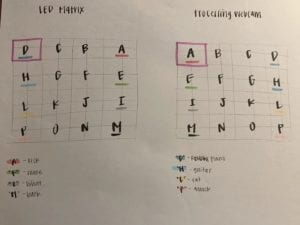

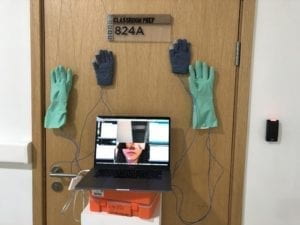

When considering how my users would interact with this project, I kept in mind my research on the consumption of animals and animal products, as well as the typical experience of shopping at a grocery store. To recreate the feeling of the vast array of options that consumers face at a supermarket, I used a color sensor as a scanner and labeled foods with colored tags, so that users could experience something like checking out at a typical store. After making their decisions and selecting products from the shelves (meats with red tags, other animal products with blue tags, and plant-based foods with green tags), users would scan them to see an overwhelming assortment of pictures and quick facts about the journey from industrialized factory farm to table, and about the differing environmental impacts of each choice. The color sensor was critical to the design and conception of this experience because scanning is not only a hands-on and interesting action, but also one clearly linked to the feeling of checking out at a grocery store. I hope that many users will associate this feeling of blindly making decisions with the pictures that appear on the screen. While the shelves were made of cardboard, I also included many collected plastic packages of the kind commonly used in grocery stores. These helped to raise the question of how we process and package our foods for our own convenience without fully understanding the consequences of these choices. Other materials, such as real foods (a carton of milk, jam, bread, sausages, and cookies), were meant to make the experience feel more realistic. In addition, the few edible foods I provided helped complete the experience by adding taste and smell as further interactive elements.
These materials, particularly the real, edible foods, were clearly central to the interactive side of my project: in addition to using the color sensor, being presented with both plant-based and animal-based products led customers to question the choices they make every day. By associating these tastes with the exposed realities of the food system, the project used several layers of interactivity to educate people about the environmental impacts of their food choices.

FABRICATION AND PRODUCTION:

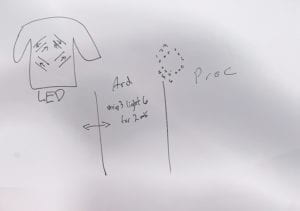

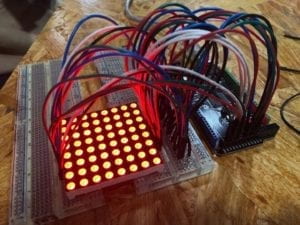

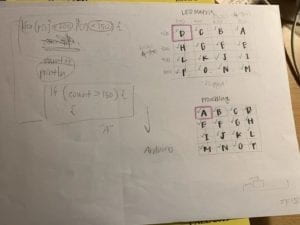

The most significant steps in my production process began with building on my previous research about animal products and discussing with Marcela how best to create an interactive and educational experience involving food. After deciding to use the color sensor, I drew on a previous recitation in which I had used this sensor to work through the communication between Arduino and Processing. Marcela was exceptionally helpful as I coded both sides and extended the project with a collage of photos from my research. I particularly struggled with translating the numerical values the sensor reports for each color and connecting them to groups of photos. User testing proved very beneficial because I was able to engage with users as they experienced my project and receive feedback, for example on the legibility of the text (which led me to show only pictures rather than written facts) and on the speed at which the pictures changed. Users ("customers") also commented on the act of selecting individual products to scan, and on the role that the edible foods played in making the project interactive. Because of this, I made an effort to select real foods relevant to the decisions we make about our everyday meals. Offering sample-sized portions also echoed the free samples commonly handed out at grocery stores. The changes I made after user testing were effective, but the project would have been even better with clearer images and without the distortion; even after many different alterations, fixing this proved especially difficult.
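The step described above as the hardest, turning numerical color readings into groups of photos, can be sketched roughly as follows. This is a minimal, language-neutral Python sketch, not the project's actual Arduino or Processing code. It assumes the Arduino sends one `R,G,B` line per scan with each channel already scaled to 0-255; the function names, photo file names, and the `margin` value are all hypothetical.

```python
# Hypothetical sketch: map one color-sensor reading to a tag category and
# pick the matching group of photos. Assumes each serial line looks like
# "R,G,B" with channels in 0-255; names and file names are placeholders.

# Photo groups keyed by tag color (placeholder file names).
PHOTO_GROUPS = {
    "red": ["meat_1.jpg", "meat_2.jpg"],        # meats
    "blue": ["dairy_1.jpg", "eggs_1.jpg"],      # other animal products
    "green": ["plants_1.jpg", "plants_2.jpg"],  # plant-based foods
}


def parse_reading(line):
    """Turn a serial line such as '200,40,35' into an (r, g, b) tuple of ints."""
    r, g, b = (int(part) for part in line.strip().split(","))
    return r, g, b


def classify(rgb, margin=30):
    """Return 'red', 'green' or 'blue' when one channel beats the next
    strongest by at least `margin`; otherwise return None (for example,
    nothing on the scanner or an ambiguous reading)."""
    ranked = sorted(zip(rgb, ("red", "green", "blue")), reverse=True)
    (top_value, top_name), (second_value, _) = ranked[0], ranked[1]
    if top_value - second_value < margin:
        return None
    return top_name


def photos_for(line):
    """Map one raw serial line to the list of photos to display."""
    color = classify(parse_reading(line))
    return PHOTO_GROUPS.get(color, [])  # empty list when no tag is recognized
```

For example, `photos_for("30,60,180")` returns the blue (animal products) group. Comparing the channels against each other with a margin, rather than against fixed per-channel thresholds, is one way to tolerate readings that shift with ambient light; in a Processing sketch, the same logic would run each time a new line arrives over serial.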

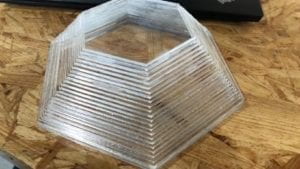

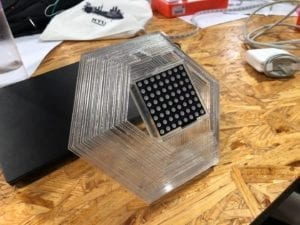

Digital Fabrication:

3D printing: https://www.thingiverse.com/thing:2304545

I decided to 3D print a mushroom because it represents the kind of produce commonly found at a grocery store or supermarket. I chose 3D printing over laser cutting because the shelves and the scanner housing (which holds the Arduino and breadboard for the color sensor) were relatively easy to build from cardboard, so a laser-cut piece was not needed.

CONCLUSIONS:

The primary focus of my project is to educate people about the larger consequences and implications of their food choices. Through the interactive concept of a scanner that triggers images of food production, I hope to demonstrate the consequences of dietary choices and the larger implications of industrialized agriculture and animal farming. The results of my project align with my definition of interaction because users not only engage with a supermarket-checkout-style scanner, but are also presented with real, edible foods that reinforce the idea that what you eat matters. Users' reactions to these unpleasant or informative images deepen the interaction: they learn something new and associate these facts with the foods they consume regularly. If I had more time, I would improve my project by fixing the distortion of the images and by adding sound (specifically, the screams of animals on factory farms, as well as a sound after each scan to confirm the action) to engage audiences more completely in the experience. This project taught me many valuable lessons, for example the potential that technology and design have to enhance our understanding of the world and to shift ideologies about even the most basic aspects of life, such as food. I also learned that a project becomes stronger when it appeals to more than one sense. Regarding my accomplishments, I am pleased to have used creative technology to introduce people to realities of the food system from which they may otherwise have been very disconnected. Ultimately, this project combines visual cues with taste and smell, both to demonstrate compelling methods of interaction and to help bridge the gap between us and how our food is produced.
Audiences and customers should care about this project because it demonstrates the exceptionally detrimental consequences of eating animals and animal products, and translates everyday interactions with food and grocery stores into tangible, straightforward information.

BIBLIOGRAPHY OF SOURCES: