Space Piglet off Balance—Cathy Wang—Marcela

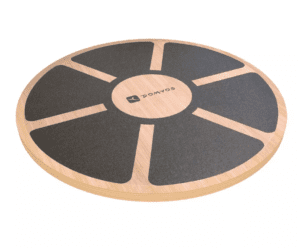

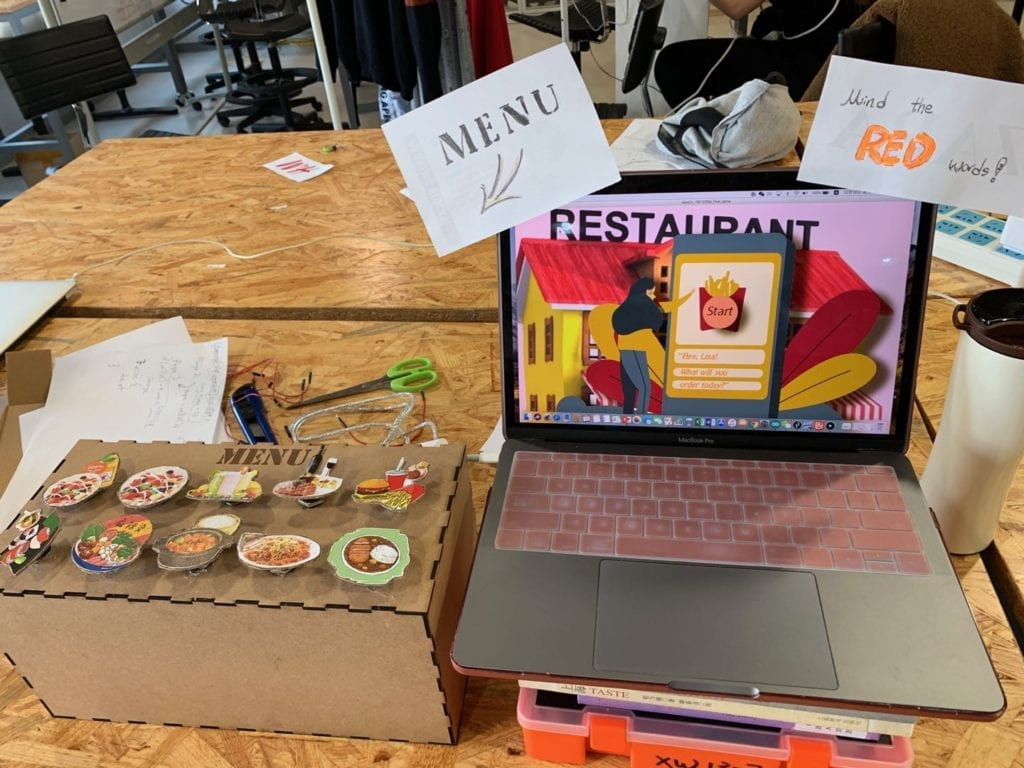

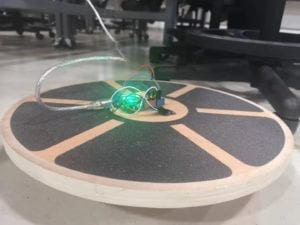

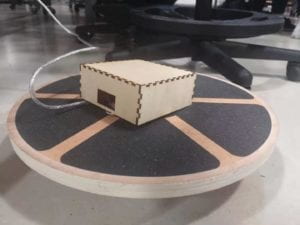

After several discussions with our professor, we decided to make a simple game that offers a different kind of play experience. We were inspired by somatosensory (motion-sensing) games, so we planned a game that players control with their whole body. We considered using a camera to capture players' movements, but felt this would be too similar to existing somatosensory games. Eventually, we chose a balance board, a piece of equipment usually used for workouts. Players have to use their whole body, especially the lower half, to control the board. Our project thus became a combination of game and exercise: working out while playing.
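Steering with the board comes down to turning a tilt reading into a velocity. The Processing sketch further down does this with `map()`, which rescales a reading in the range 100 to 400 to a speed between -75 and 75 pixels per frame. Below is a minimal plain-Java sketch of that linear rescaling. The class name `TiltMapping` and the helper `tiltToSpeed` are hypothetical. What the raw reading physically measures depends on the Arduino side, which is not shown here.

```java
public class TiltMapping {
    // Same linear formula as Processing's map():
    // rescale value from [inLow, inHigh] to [outLow, outHigh], without clamping
    static float map(float value, float inLow, float inHigh, float outLow, float outHigh) {
        return outLow + (outHigh - outLow) * ((value - inLow) / (inHigh - inLow));
    }

    // Hypothetical helper: board reading -> piglet speed in pixels per frame,
    // using the ranges from the game's sketch (100..400 -> -75..75)
    static float tiltToSpeed(int reading) {
        return map(reading, 100, 400, -75, 75);
    }

    public static void main(String[] args) {
        int[] readings = {100, 250, 400, 500};
        for (int r : readings) {
            System.out.println(r + " -> " + tiltToSpeed(r));
        }
    }
}
```

With these ranges, a reading of 250 (the midpoint) gives a speed of 0, so the piglet stays still when the board is level in that sense. Because `map()` does not clamp, a reading of 500 gives roughly 125. This is why the sketch bounds the piglet's position with `constrain()` afterwards.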

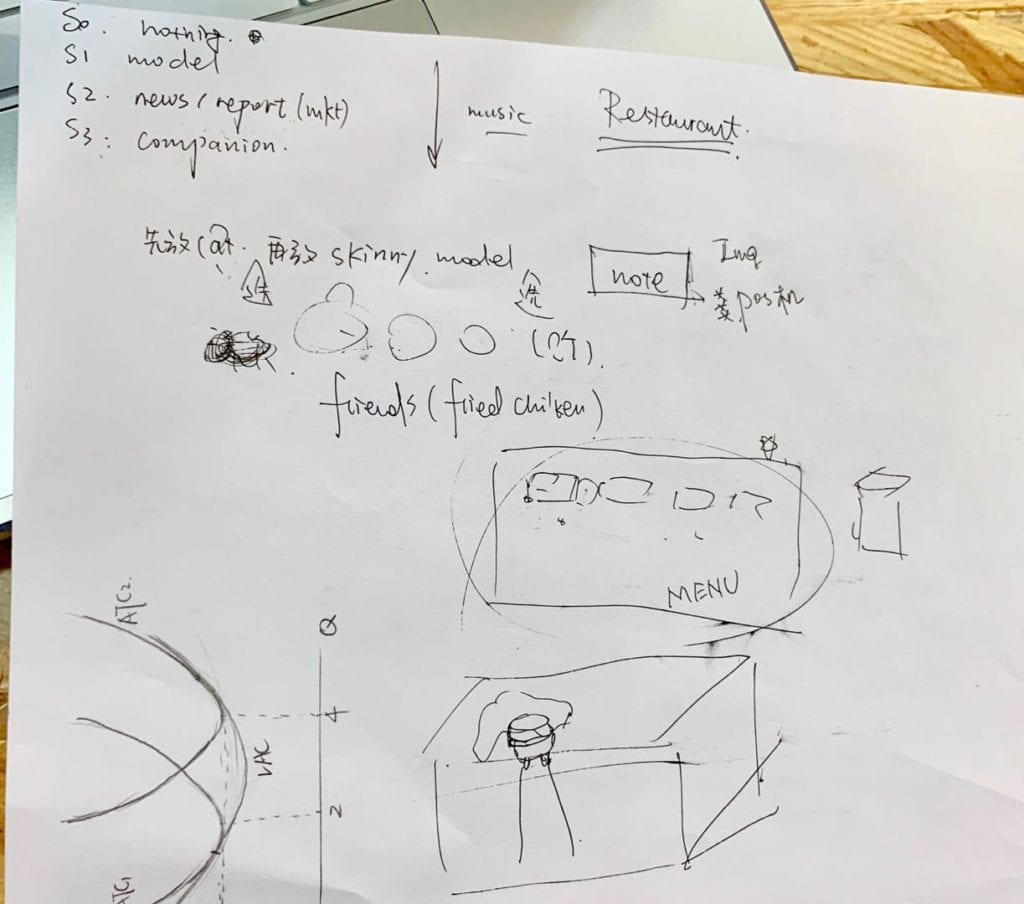

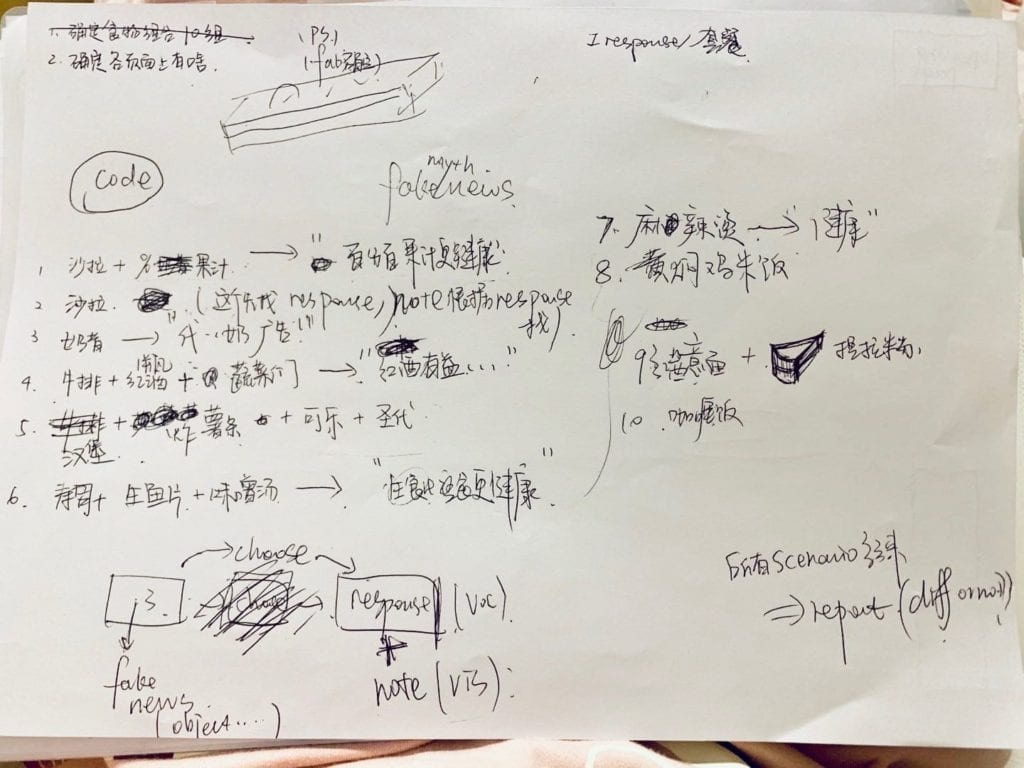

At the User Testing Session, our project was still at an early stage. All we had was a blue background, a moving brown rectangle, and a piglet controlled by the player. We received a lot of praise for our idea, especially for using a balance board. However, the game was too simple and gave few instructions: users were confused at first and quickly got bored once they had mastered it. There was also no sound feedback and no score or timer. We had the tools of a game, but we had not yet made it a real game.

Another, more conceptual problem was what the game was actually about. We could not pick a game's elements at random. Everything had to appear for a reason, and we also needed to connect the physical tool with the on-screen image. So we changed the brown rectangle into an ellipse that looks like our balance board, so that the two echo each other. We also built a scenario for the game: a piglet flying in space has to stay on his flying machine to keep safe and avoid aerolites. This gives the piglet a reason to move and explains the relationship between the balance board and the game. We also changed the background to outer space and added music. Originally, we wanted to use a picture as the background, but that made the game too slow to play, so we drew our own background instead.
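In the code below, the hand-drawn background is a set of rotating stars drawn with a `star()` function rather than a loaded picture. Each star is a polygon whose vertices alternate between an outer radius (`radius2`) and an inner radius (`radius1`), with each inner point placed half an angular step after the outer one. The following plain-Java sketch reproduces just that vertex math. The name `starVertices` is hypothetical. In Processing, the same points would be passed to `vertex()` between `beginShape()` and `endShape(CLOSE)`.

```java
import java.util.ArrayList;
import java.util.List;

public class StarShape {
    // Returns the star's vertices as {x, y} pairs in drawing order:
    // an outer point at radius2, then an inner point at radius1 half a step later.
    static List<float[]> starVertices(float x, float y, float radius1, float radius2, int npoints) {
        List<float[]> vertices = new ArrayList<>();
        double angle = 2 * Math.PI / npoints;
        double halfAngle = angle / 2.0;
        // An integer counter guarantees exactly npoints outer points
        for (int i = 0; i < npoints; i++) {
            double a = i * angle;
            vertices.add(new float[] {
                (float) (x + Math.cos(a) * radius2), (float) (y + Math.sin(a) * radius2)});
            vertices.add(new float[] {
                (float) (x + Math.cos(a + halfAngle) * radius1), (float) (y + Math.sin(a + halfAngle) * radius1)});
        }
        return vertices;
    }

    public static void main(String[] args) {
        // A 5-pointed star like the larger ones in the game: inner radius 5, outer radius 15
        for (float[] v : starVertices(0, 0, 5, 15, 5)) {
            System.out.printf("(%.2f, %.2f)%n", v[0], v[1]);
        }
    }
}
```

The game's `star()` loop instead accumulates a float angle (`a += angle`) until it reaches `TWO_PI`. Because of rounding, that style of loop can in principle run one extra iteration. Counting points with an integer avoids the question.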

In the final presentation, a classmate's comment gave us a new perspective on our project. He said that balance boards are used to help young children practice body coordination and to help their leg and knee joints develop. However, children may get bored and refuse to use them. Our project turns this "boring" equipment into a fun game, which may have real practical value. At the IMA Final Show, we found that our instructions were still not clear enough. Some users thought they were supposed to control the ellipse (which looks like the balance board) instead of the piglet. Therefore, we may need to explain more clearly how the game works, or rethink which on-screen object the board controls.

We believe a fun game needs to offer an interactive experience that differs from other games. By adding a workout element, we combined physical movement with a visual game. We believe interaction starts at a physical level: users are more likely to engage when they participate more physically. Although many details still need improvement, users' reactions suggest that we succeeded in most respects. Our project offers a different way to play a game while turning a workout tool into a fun game.

```processing
// IMA NYU Shanghai
// Interaction Lab
// For receiving multiple values from Arduino to Processing

/*
 * Based on the readStringUntil() example by Tom Igoe
 * https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html
 */

import processing.serial.*;
import processing.sound.*;

SoundFile sound;

String myString = null;
Serial myPort;

int NUM_OF_VALUES = 3;   /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int PORT_INDEX = 0;      // index of the Arduino in Serial.list(); see setupSerial()
int[] sensorValues;      /** this array stores values from Arduino **/

PImage pigImg;        // piglet.png
PImage bigRockImg;    // rockL.png
PImage smallRockImg;  // rockS.png

// Piglet: position and velocity (velocity comes from the balance board)
float pigX = 800;
float pigY = 300;
float speedX;
float speedY;

// Disc: the piglet's flying machine, drawn to look like the balance board
float discX = 800;
float discY = 300;
float discSpeedX = 2;
float discSpeedY = 1;

// Large rock
float bigRockX = 700;
float bigRockY = 700;
float bigRockSpeedX = 10;
float bigRockSpeedY = 6;

// Small rock
float smallRockX = 200;
float smallRockY = 700;
float smallRockSpeedX = 5;
float smallRockSpeedY = 9;

int score = 0;
long lastScoreTime = 0;   // when (in ms) points were last awarded
int round;                // frame counter; the disc speeds up at frame 500
long gameoverTime = 0;
long falloffTime = 0;
boolean isPigOnDisc = true;
boolean start = false;

void setup() {
  fullScreen();
  // size(1200, 800);   // windowed alternative
  pigImg = loadImage("piglet.png");
  bigRockImg = loadImage("rockL.png");
  smallRockImg = loadImage("rockS.png");
  setupSerial();
  sound = new SoundFile(this, "super-mario-bros-theme-song.mp3");
  sound.loop();
}

void draw() {
  updateSerial();
  round++;

  if (start) {
    drawStarfield();

    if (dist(pigX, pigY, discX, discY) < 300) {
      isPigOnDisc = true;
    }

    // Award 10 points for every second survived
    if (millis() - lastScoreTime >= 1000) {
      score += 10;
      lastScoreTime = millis();
    }
    textAlign(LEFT);
    textSize(30);
    fill(255, 0, 0);
    text("Score: " + score, 50, 50);

    // Disc: three concentric circles styled like the balance board
    noStroke();
    fill(#F2CE58);
    ellipse(discX, discY, 500, 500);
    fill(#4D4C4C);
    ellipse(discX, discY, 300, 300);
    fill(#F2CE58);
    ellipse(discX, discY, 100, 100);

    // Move the disc, speed it up after 500 frames, bounce 250 px from the edges
    discX += discSpeedX;
    discY += discSpeedY;
    if (round == 500) {
      discSpeedX = 4;
      discSpeedY = 2;
    }
    if (discX > width - 250 || discX < 250) {
      discSpeedX = -discSpeedX;
    }
    if (discY > height - 250 || discY < 250) {
      discSpeedY = -discSpeedY;
    }

    // Large rock: bounces off the screen edges and off the disc
    image(bigRockImg, bigRockX, bigRockY, 135, 100);
    bigRockX += 1.5 * bigRockSpeedX;
    bigRockY += bigRockSpeedY;
    if (bigRockX > width || bigRockX < 1) {
      bigRockSpeedX = -bigRockSpeedX;
    }
    if (bigRockY > height || bigRockY < 0) {
      bigRockSpeedY = -bigRockSpeedY;
    }
    if (dist(discX, discY, bigRockX, bigRockY) < 300) {
      bigRockSpeedX = -bigRockSpeedX;
      bigRockSpeedY = -bigRockSpeedY;
    }

    // Small rock: same behaviour at a different speed
    image(smallRockImg, smallRockX, smallRockY, 135, 100);
    smallRockX += smallRockSpeedX;
    smallRockY += smallRockSpeedY;
    if (smallRockX > width || smallRockX < 1) {
      smallRockSpeedX = -smallRockSpeedX;
    }
    if (smallRockY > height || smallRockY < 0) {
      smallRockSpeedY = -smallRockSpeedY;
    }
    if (dist(smallRockX, smallRockY, discX, discY) < 300) {
      smallRockSpeedX = -smallRockSpeedX;
      smallRockSpeedY = -smallRockSpeedY;
    }

    // Balance board -> piglet velocity. map() rescales readings of 100..400
    // to -75..75 px per frame (readings outside that range extrapolate).
    // Readings outside a plausible range are ignored.
    if (sensorValues[0] > -100 && sensorValues[0] < width + 100) {
      speedX = map(sensorValues[0], 100, 400, -75, 75);
      pigX = constrain(pigX + speedX, 0, width - 80);
      speedY = map(sensorValues[1], 100, 400, -75, 75);
      pigY = constrain(pigY - speedY, 0, height - 135);
    }
  }

  image(pigImg, pigX, pigY, 180, 200);

  if (!start) {
    fill(50);
    textSize(30);
    String s = "Stand on the balance board and press ENTER to start! "
      + "Try to keep Piglet on the board and stay away from rocks!";
    text(s, 500, 500, 1000, 100);
  }

  // Piglet touches the large rock. Both positions are image corners, so this
  // only fires when the corners come within 5 px of each other.
  if (dist(pigX, pigY, bigRockX, bigRockY) < 5) {
    background(0);
    fill(255);
    textSize(64);
    text("YOU DIED", width/2 - 100, height/2);
    score = 0;
  }

  // Piglet has drifted off the disc: game over after 3 s, restart 5 s later
  if (dist(pigX, pigY, discX, discY) > 350) {
    if (isPigOnDisc) {
      isPigOnDisc = false;
      falloffTime = millis();
    }
    if (millis() - falloffTime > 3000) {
      if (start) {
        gameoverTime = millis();
      }
      background(0);
      fill(255);
      textSize(64);
      text("YOU DIED", width/2 - 100, height/2);
      score = 0;
      start = false;
      if (millis() - gameoverTime > 5000) {
        // Reset positions and resume
        start = true;
        lastScoreTime = millis();
        pigX = 500;
        pigY = 500;
        discX = 500;
        discY = 500;
        bigRockX = 700;
        bigRockY = 700;
        smallRockX = 200;
        smallRockY = 700;
      }
    }
  }
}

// Hand-drawn starfield, used instead of a background picture
void drawStarfield() {
  background(0);
  fill(#F5DE2C);
  spinningStar(0.2, 0.5, 200, 5, 15, 3);
  spinningStar(0.4, 0.7, 200, 10, 15, 5);
  spinningStar(0.7, 0.4, 200, 10, 15, 5);
  spinningStar(0.5, 0.5, 200, 3, 9, 3);
  spinningStar(0.2, 0.2, 200, 3, 9, 3);
  spinningStar(0.15, 0.8, 200, 3, 9, 3);
  spinningStar(0.8, 0.5, -100, 5, 15, 5);   // negative divisor: spins the other way, faster
  spinningStar(0.5, 0.1, -100, 5, 15, 5);
  spinningStar(0.5, 0.3, -100, 5, 15, 5);
  spinningStar(0.1, 0.1, -100, 5, 15, 5);
  spinningStar(0.6, 0.5, 200, 3, 9, 3);
  spinningStar(0.8, 0.2, 200, 3, 9, 3);
}

// Draw one star at a fraction (fx, fy) of the screen, rotating with frameCount
void spinningStar(float fx, float fy, float spinDivisor, float radius1, float radius2, int npoints) {
  pushMatrix();
  translate(width * fx, height * fy);
  rotate(frameCount / spinDivisor);
  star(0, 0, radius1, radius2, npoints);
  popMatrix();
}

// Star polygon: vertices alternate between radius2 (at angle a)
// and radius1 (offset by half a step)
void star(float x, float y, float radius1, float radius2, int npoints) {
  float angle = TWO_PI / npoints;
  float halfAngle = angle / 2.0;
  beginShape();
  for (float a = 0; a < TWO_PI; a += angle) {
    float sx = x + cos(a) * radius2;
    float sy = y + sin(a) * radius2;
    vertex(sx, sy);
    sx = x + cos(a + halfAngle) * radius1;
    sy = y + sin(a + halfAngle) * radius1;
    vertex(sx, sy);
  }
  endShape(CLOSE);
}

void keyPressed() {
  // Check both ENTER and RETURN so the start key works on every platform
  if (key == ENTER || key == RETURN) {
    start = true;
    lastScoreTime = millis();
  } else {
    start = false;
  }
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[PORT_INDEX], 9600);
  // WARNING!
  // You will get an error here if PORT_INDEX does not point at the Arduino.
  // Check the list of ports printed above, find the port
  // "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and set PORT_INDEX to that port's index number.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
  myString = null;

  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      // Each line should hold NUM_OF_VALUES comma-separated integers
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
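`updateSerial()` expects each line from the Arduino to contain exactly `NUM_OF_VALUES` comma-separated integers. It ignores any line with a different number of fields, which filters out many malformed or partially received lines. Below, the same rule is written as a plain-Java function that can be tested without a board. The names `SerialParsing` and `parseReading` are hypothetical, and the sample lines in `main` are made up. This version is slightly stricter than the original: Processing's `int()` returns 0 for text it cannot parse, whereas this sketch rejects the whole line.

```java
import java.util.Arrays;
import java.util.Optional;

public class SerialParsing {
    static final int NUM_OF_VALUES = 3;

    // Parse one newline-terminated line into NUM_OF_VALUES ints.
    // Returns Optional.empty() when the field count is wrong or a field is not an integer.
    static Optional<int[]> parseReading(String line) {
        if (line == null) {
            return Optional.empty();
        }
        // limit -1 keeps trailing empty fields, so "1,2," counts as 3 fields and then fails parsing
        String[] fields = line.trim().split(",", -1);
        if (fields.length != NUM_OF_VALUES) {
            return Optional.empty();
        }
        int[] values = new int[NUM_OF_VALUES];
        try {
            for (int i = 0; i < fields.length; i++) {
                values[i] = Integer.parseInt(fields[i].trim());
            }
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
        return Optional.of(values);
    }

    public static void main(String[] args) {
        String[] lines = {"120,380,1\n", "380,1\n", "12a,380,1\n"};
        for (String line : lines) {
            System.out.println(parseReading(line).map(Arrays::toString).orElse("ignored"));
        }
    }
}
```

Running `main` prints `[120, 380, 1]` for the first sample and `ignored` for the other two. Returning an `Optional` instead of writing into a shared array means that a rejected line leaves the previous reading untouched. This matches what the sketch does when it skips a line.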