In today's recitation, we manipulated media in Processing, using an Arduino as the controller that drives the media output. This exercise felt simpler after last week's recitation, where we sent multiple values from Arduino to Processing (AtoP) and from Processing to Arduino (PtoA).

Steps

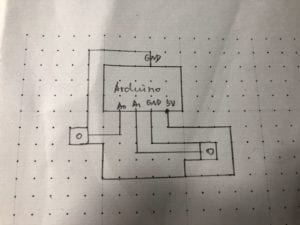

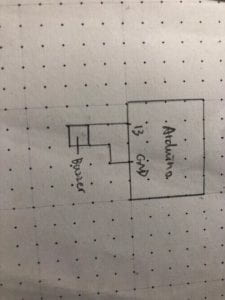

For my task, I used a potentiometer to control the translation and rotation of a sunset photo that I took. After placing the image in a new folder inside my sketch folder, I loaded it and then used the pushMatrix() and popMatrix() functions so that the image rotates as I twist the potentiometer. I looked at a few of the example sketches given to us in class earlier this week and adapted the movie-rotation example to rotate my image instead. I also set the relevant input pin (A0) in the Arduino code so that the two programs could communicate.
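As a rough illustration of the data path described above, the following plain-Java sketch (Processing is built on Java) mimics the two scaling steps: Arduino's integer map() from the 10-bit potentiometer reading to a single byte, and a Processing-style map() from that byte to a rotation angle. The class name PotMapping, the helper names, and the 0 to 360 degree output range are assumptions made for illustration; they are not part of my actual sketch.

```java
// Hypothetical helper class, for illustration only.
class PotMapping {

    // Mirrors Arduino's integer map(): rescales a 10-bit reading (0-1023) to one byte (0-255).
    static int toByte(int analogReading) {
        return (int) ((long) analogReading * 255 / 1023);
    }

    // Mirrors Processing's map(): linear rescale from one range to another.
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Assumed choice: the full byte range corresponds to one full turn (360 degrees).
    static float angleDegrees(int byteValue) {
        return map(byteValue, 0, 255, 0, 360);
    }

    public static void main(String[] args) {
        int[] readings = {0, 512, 1023};
        for (int reading : readings) {
            int b = toByte(reading);
            System.out.println(reading + " -> byte " + b + " -> " + angleDegrees(b) + " degrees");
        }
    }
}
```

In a Processing sketch, the resulting angle would still need to go through radians() before being passed to rotate(), since rotate() expects radians.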

Reading

Reading the article “Computer Vision for Artists and Designers” by Golan Levin made me reflect on technology and on Interaction Lab as a whole, since we combine technology with media throughout the class. As computer programming develops, the ways people use it evolve as well. Whereas people once coded mostly for hardware, websites, businesses, or other practical purposes, today code can also be used to create and display art and media. One quote in the article stood out to me: “many more software techniques exist, at every level of sophistication, for detecting, recognizing, and interacting with people and other objects of interest” (Levin). This is interesting because these advances in detection and interaction are what make the connection between technology and art possible: they allow the audience to communicate with the artwork itself. I was inspired by the many art projects the article describes, each of which uses technology to present art in a new way. In my own project, I was able to combine media with interaction through technology, which is innovative and interesting not only to look at but also to create. I was also inspired to use technology as a medium for communicating and interacting with an audience in an amusing and modern way.

Video

Final Code: Arduino

void setup() {

Serial.begin(9600);

}

void loop() {

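// read the potentiometer on analog pin A0 (0-1023)

int sensor1 = analogRead(A0);

// scale the reading to 0-255 so it fits in the single byte that Serial.write() sends

int sensorValue = map(sensor1, 0, 1023, 0, 255);

Serial.write(sensorValue);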

delay(10);

}

Final Code: Processing

// IMA NYU Shanghai

// Interaction Lab

// This code receives one value from Arduino to Processing

import processing.serial.*;

Serial myPort;

int valueFromArduino;

PImage img1;

color mouseColor;

float mouseR, mouseG, mouseB;

void setup() {

size(1086, 724);

img1 = loadImage("SUNSET.jpeg");

background(0);

printArray(Serial.list());

// The line above prints the list of all available serial ports on your computer.

// Change the index below (13 on my computer) to match the port your Arduino is on.

myPort = new Serial(this, Serial.list()[13], 9600);

}

void draw() {

// to read the value from the Arduino

while ( myPort.available() > 0) {

valueFromArduino = myPort.read();

}

println(valueFromArduino);//This prints out the values from Arduino

pushMatrix();

translate(100, 100);

// valueFromArduino is a single byte (0-255), so map from that range to one full turn in degrees

rotate(radians(map(valueFromArduino, 0, 255, 0, 360)));

image(img1,100,100,width/(valueFromArduino+1),height/(valueFromArduino+1));

popMatrix();

mouseColor = img1.get(mouseX, mouseY);

mouseR = red(mouseColor);

mouseG = green(mouseColor);

mouseB = blue(mouseColor);

println(mouseR+" "+mouseG+" "+mouseB);

set(width/2,height/2,mouseColor);

}