CONCEPTION AND DESIGN:

- Research on Social Awareness, Inclusiveness, and Interaction

For this project, we researched current issues in climate change and humanity’s massive impact on it. We referred to NASA’s climate website for the facts of climate change. NASA has collected significant evidence showing that humans are causing severe climate change, and that carbon dioxide is a fundamental cause of it. It also notes that de-desertification and forests are ways to ease climate change for a while. Therefore, Vivien and I think it is important to address this issue in an interactive way that could raise public awareness. As described in our earlier documentation, combining Vivien’s and my definitions of “interaction,” we believe that an interactive project should be openly inclusive and significant to society. The most significant projects we referred to in our research are bomb and teamLab.

- Core Theme

Therefore, in this project, we want our users to learn about the accelerating pace of climate change, and to guide them through a reflection on themselves: How have I contributed to climate change? What effort could I make to eliminate it?

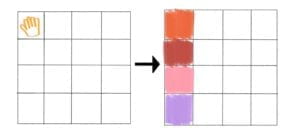

- Game-like Art Project – ending without winning

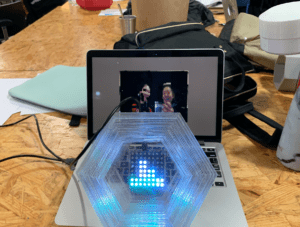

Our project is a game-like art project that asks users to plant trees. In return, they can virtually compensate for their impact on climate change. However, since humans cannot stop contributing to climate change unless we stop all our activities and movements, which means death, humans must keep making an effort to protect the environment. Once they stop, the climate situation starts to get worse again. To convey this to users and make them aware of it, we intentionally designed the ending as a tragedy – explosion only, meaning there is no “winning” situation. Again, our project is NOT a game in the conventional sense but an interactive art project that engages the public in the conversation about environmental preservation in the face of climate change.

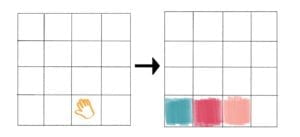

- Collaborative instead of Competitive

We humans, and indeed all creatures on the globe, are one entity. Facing climate change, we do not compete for resources among groups; instead, it is a process that requires the collaborative effort of all humans. So our project is also collaborative instead of competitive. Up to four users can shovel dirt to plant the same tree at the same time, accelerating the tree’s growth, which has a stronger effect in reducing the increasing carbon dioxide. As more users join in, the tree grows faster and the planet lasts longer.

- Materials

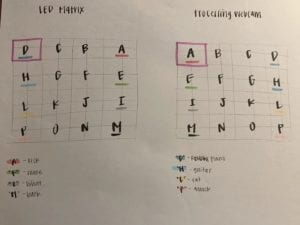

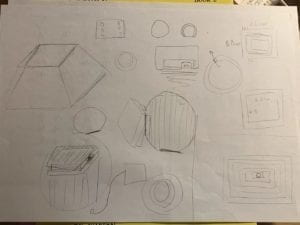

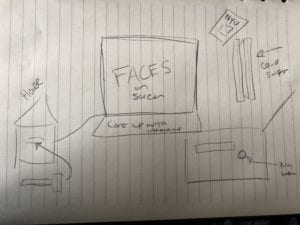

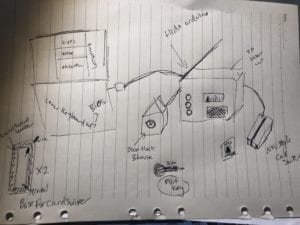

We initially intended to use four masks to detect (or pretend to detect) the users’ breath, four shovels with sensors to detect their movement, and the computer screen to show the virtual trees to the users. The images above are rough sketches of this setup.

- Abandon mask

However, after presenting our project to the class, we received feedback raising concerns about the masks. Even though masks sound reasonable for our concept, they may not make sense to users. Also, since many users will experience our project, replacing the masks for every user would be complicated and not environmentally friendly. Wearing masks may also make the experience less comfortable. Considering all these factors, we abandoned the masks and replaced them with a straightforward instruction on the screen: “human breath consumes O2 and produces CO2.”

- Authentic experience with dirt

We first tried shoveling with only the tree-growing animation. However, for two reasons, we decided to provide some solid material to shovel instead of only asking users to perform the shoveling motion. First, since users’ behaviors and movements are unpredictable, we could not find a way to use specific sensors to count a user’s shovel strokes accurately. Second, it is dull and awkward to shovel nothing. By using dirt, we can detect shoveling by measuring the changing weight of the soil, and users also get a more authentic experience of planting a tree.

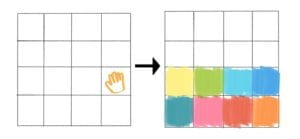

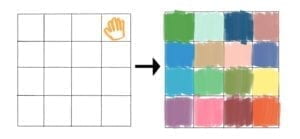

- GUI

To give users a sense of their progress in “planting trees,” we designed a GUI that lets them keep track of everything. We use multiple signals to alert users during their interaction: two bars showing the levels of oxygen and carbon dioxide, a changing background color, growing trees, simple texts, a notification sound, and an explosion animation. Each of these components is designed to orient users in the project as quickly as possible.
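The GUI logic described above can be summarized as a small state model. This is a minimal sketch, not our actual Processing code: the class name `ClimateModel`, the rates, and the assumption that the O2 and CO2 bars always add up to 100 are all hypothetical. It shows why the design has no winning state: breathing drains O2 every frame, while each detected shovel only delays the explosion.

```java
/**
 * Hypothetical sketch of the game state behind the GUI. The names, the rates,
 * and the rule that the two bars always sum to 100 are assumptions, not the project's code.
 */
class ClimateModel {
    static final double BREATH_O2_LOSS_PER_TICK = 0.5; // O2 lost every frame to "breathing"
    static final double O2_PER_SHOVEL = 3.0;           // O2 restored by one detected shovel
    static final double TREE_GROWTH_PER_SHOVEL = 1.0;  // tree height added per shovel

    private double o2 = 100.0;      // oxygen bar, 0..100
    private double treeHeight = 0.0;
    private boolean exploded = false;

    /** Advance one frame: breathing always consumes O2, so there is no winning state. */
    void tick() {
        if (exploded) return;
        o2 = Math.max(0.0, o2 - BREATH_O2_LOSS_PER_TICK);
        if (o2 == 0.0) exploded = true; // the only ending: trigger the explosion animation
    }

    /** Called once per detected shovel event, from any of the (up to four) shovels. */
    void onShovel() {
        if (exploded) return;
        treeHeight += TREE_GROWTH_PER_SHOVEL;
        o2 = Math.min(100.0, o2 + O2_PER_SHOVEL); // capped: planting delays the ending, never wins
    }

    double o2() { return o2; }
    double co2() { return 100.0 - o2; } // assumption: the two bars are complementary
    double treeHeight() { return treeHeight; }
    boolean exploded() { return exploded; }

    /** Background shifts from green (clean air) to red (high CO2); returns {r, g, b}. */
    int[] backgroundRgb() {
        double t = co2() / 100.0;
        return new int[] { (int) Math.round(255 * t), (int) Math.round(255 * (1 - t)), 0 };
    }

    public static void main(String[] args) {
        ClimateModel model = new ClimateModel();
        int frames = 0;
        while (!model.exploded()) {
            model.tick();
            if (frames % 10 == 0) model.onShovel(); // a single user shoveling every 10 frames
            frames++;
        }
        System.out.println("Exploded after " + frames + " frames");
    }
}
```

Because every shovel calls `onShovel()` on the same model, more users shoveling at once restore more O2 per frame. With these made-up rates, about one shovel every six frames is needed just to hold the O2 level steady, which mirrors the idea that the planet only survives while people keep working together.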

FABRICATION AND PRODUCTION:

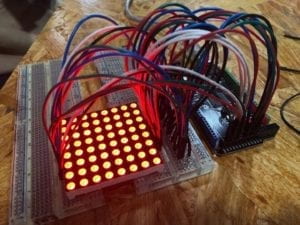

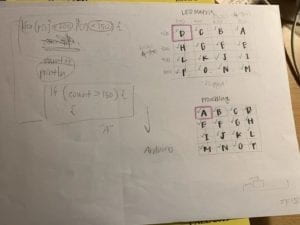

- Programming and Weight sensor

With the help of our course materials and online references, we did not run into any programming issues we could not solve. We applied object-oriented programming (OOP), functions, media, etc. in our program. The screenshots above show the first version of our program.

To detect the movement of “planting trees,” we decided to use a weight sensor. However, when I first got it from the equipment office, I had no idea how it worked.

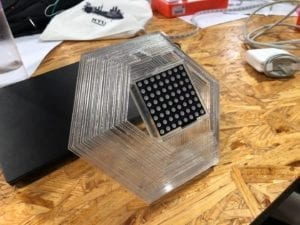

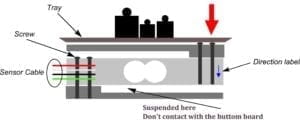

I then looked up the manufacturer’s official page for this sensor, which explains it in detail. The only problem I ran into was that the sensor I got was broken, but I managed to repair it myself. As instructed, it needs to work with a weight platform. The sketch is below.

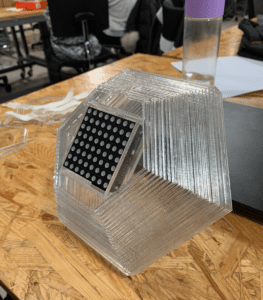

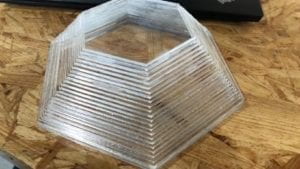

However, the web page describing the platform is no longer available. We first intended to design a platform ourselves and 3D print it, but without detailed information, it was too hard to design one within a few days. We then consulted the lab assistant, who suggested hanging the sensor in the air instead of putting it on the ground. Therefore, in the final presentation, we fixed the sensor to the table so that it hung from it.
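To see how a load cell reading becomes a weight, here is a minimal calibration sketch. It assumes the module behaves like a typical HX711-style load-cell amplifier, whose raw reading is linear in the load, so two measurements are enough: one with the empty box hanging (the tare offset) and one with a known reference weight added (the scale). The class `LoadCellCalibration` and the readings in `main` are hypothetical, not data from our sensor.

```java
import java.util.List;

/**
 * Hypothetical calibration helper for a load cell hung under the table.
 * Assumes raw readings are linear in load: raw = offset + scale * grams.
 */
class LoadCellCalibration {
    private final double offset; // raw reading with the empty box hanging (tare)
    private final double scale;  // raw counts per gram

    LoadCellCalibration(double offset, double scale) {
        if (scale == 0.0) throw new IllegalArgumentException("scale must be non-zero");
        this.offset = offset;
        this.scale = scale;
    }

    /** Averaging several readings smooths out sensor noise. */
    static double average(List<Double> readings) {
        double sum = 0.0;
        for (double r : readings) sum += r;
        return sum / readings.size();
    }

    /** Tare with the empty box, then hang a known reference weight to find the scale. */
    static LoadCellCalibration fromSamples(List<Double> emptyBox,
                                           List<Double> withReference,
                                           double referenceGrams) {
        double offset = average(emptyBox);
        double scale = (average(withReference) - offset) / referenceGrams;
        return new LoadCellCalibration(offset, scale);
    }

    /** Converts a raw reading into grams of dirt added on top of the empty box. */
    double toGrams(double raw) {
        return (raw - offset) / scale;
    }

    public static void main(String[] args) {
        // Made-up readings purely for illustration.
        LoadCellCalibration cal = LoadCellCalibration.fromSamples(
                List.of(8000.0, 8002.0, 7998.0),   // empty box
                List.of(10000.0, 10002.0, 9998.0), // box plus a 100 g reference
                100.0);
        System.out.println(cal.toGrams(9000.0)); // prints 50.0
    }
}
```

Taring with the empty box already hanging subtracts the box’s own weight, so later readings reflect only the dirt the users shovel in.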

- User testing: user-friendly redesign

During the user testing session, we received a lot of feedback about problems that made our project less user-friendly. First, the program was not stable. Sometimes, when the box was just swinging in the air, the program interpreted it as the user putting sand or dirt into the box. This false reading could mislead users into shoveling sand out of the box instead of into it. So we changed the algorithm to respond only to larger changes in weight and to let the tree grow only a little each time, regardless of how much weight was added to the box. We also planned to add a fake tree in the middle of the box to signal users to put dirt into the big box. Second, users did not get timely feedback after shoveling sand into the box, so we added a notification sound. We also mirrored our small computer screen to a bigger monitor and enlarged some graphics in the GUI for the later presentation so that users could track their progress easily. Third, since we were putting the sand into different containers, some users were misled into thinking it was a competition. Since we intended it as a cooperative task, not a competition, we carefully rearranged the containers and shovels to make it at least look collaborative. Last but not least, some users found it hard to connect sand with planting trees. To avoid confusion, we changed the sand to dirt so that the connection would be obvious. Through this process, I also learned how important it is to have users test our project and collect their feedback to get past my own fixed mindset, so that we can fix design flaws and make the project more accessible and friendly to users.
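The algorithm change described above can be sketched as a threshold detector. This is a hypothetical reconstruction, not our actual code: the class `ShovelDetector` and the 30 g threshold are assumed values. Readings within the threshold of the last accepted weight (for example, a swinging box) are ignored, and each accepted increase counts as exactly one growth step, no matter how much dirt was added. Following the weight down after a large removal is also an assumption, so that dirt taken out does not have to be re-added before the next shovel counts.

```java
/**
 * Hypothetical sketch of the user-testing fix: ignore small weight changes
 * (e.g. the hanging box swinging) and grow the tree by one fixed step per
 * accepted event, however much dirt was added. Threshold values are assumptions.
 */
class ShovelDetector {
    private final double thresholdGrams; // changes smaller than this are treated as noise
    private double baselineGrams;        // weight at the last accepted change

    ShovelDetector(double thresholdGrams, double initialGrams) {
        this.thresholdGrams = thresholdGrams;
        this.baselineGrams = initialGrams;
    }

    /** Returns true when a new reading counts as one shovel of dirt added. */
    boolean update(double grams) {
        if (grams - baselineGrams >= thresholdGrams) {
            baselineGrams = grams; // move the baseline so the same dirt is not counted twice
            return true;
        } else if (baselineGrams - grams >= thresholdGrams) {
            baselineGrams = grams; // dirt removed: follow the weight down, but do not grow
        }
        return false;
    }

    public static void main(String[] args) {
        ShovelDetector detector = new ShovelDetector(30.0, 0.0);
        // Small wobbles first, then two real shovels (the second adds much more dirt).
        double[] readings = {2.0, -3.0, 1.5, 45.0, 47.0, 130.0, 131.0};
        int growthSteps = 0;
        for (double r : readings) {
            if (detector.update(r)) growthSteps++; // one fixed growth step per event
        }
        System.out.println("growth steps: " + growthSteps); // prints "growth steps: 2"
    }
}
```

Counting events instead of grams is what keeps the tree’s growth steady: a huge shovelful and a small one each advance the tree by the same amount.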

- Fabrication

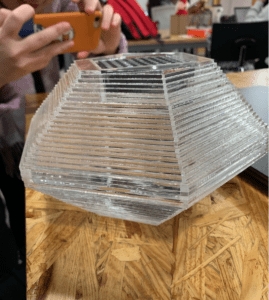

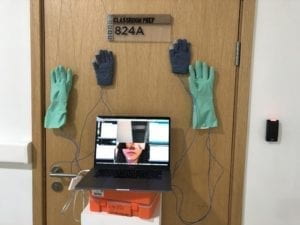

Our fabrication process included drilling holes in the box, 3D printing a tree model, and setting up the whole project at the venue in advance. Vivien devoted a lot of work to this process!

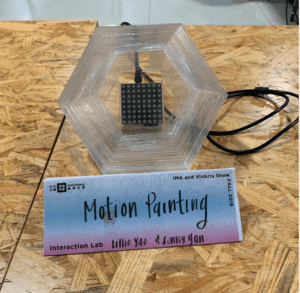

Final project presentation:

CONCLUSIONS:

The most important goal of our project is to raise public awareness of, and reflection on, the significant issue of climate change. We wanted it to be an interactive project that addresses social problems, is fun to interact with, and is inclusive of many users, both those directly interacting with it and those observing it. These criteria also correspond to our understanding and definition of “interaction.” First, I think it is indeed a fun and inclusive experience for the users who interact with it: they put effort into shoveling the dirt and get constant feedback from the program. For those observing the interaction, it is also fun to watch the whole process, which leaves a strong impression on them. The only pity is that the layout of the containers, dirt, shovels, and monitor may not have been ideal for giving everyone a comfortable place to watch and move. Still, in the final presentation, users interacted with our project in the way we designed. Regarding raising social awareness, we had some successes and some failures. According to our discussion after the demonstration, the audience successfully got the basic information about humans’ severe impact on climate change and the possible actions humans can take to address it. However, we had a debate about the ending of the project. There is only one ending: O2 drops to 0 and the planet explodes. Some argued that it sends a negative message that whatever you do, you will fail in the end unless four users keep shoveling without stopping. We fully understand this pessimistic reading. However, given the real-world situation, we still think this reflects how severe the climate change issue is: it requires continuous human effort, or else it will cause ecological disasters. I believe this is an issue worth a full seminar of discussion.
We are open to any interpretation of the “no winning, always failure” design, since it is not a flaw but an intentional part of the project. Beyond this question, we think there are some improvements we can make in the future based on other feedback from the presentation. First, since we kept running out of dirt, we could make the box work like an hourglass so that the soil can be recycled. Second, if we had a bigger venue, we could redesign the layout, use a larger projection, and put all the dirt directly on the ground so that all users and observers have a better experience. Third, if necessary, we could add a winning condition that is very hard to achieve (this still needs more discussion, as I stated before). I also learned a lot from designing and building this project, both technically and theoretically. I gained many skills in programming, problem-solving, fabrication, crafting, etc. I learned how to make a project fit its audience better by testing it and listening to their feedback. This experience also deepened my understanding of interaction: it is so flexible that it can involve many characteristics. By addressing the climate change issue, I also reflected on myself and my understanding of the issue, and it encouraged me to think further and explore it.

Climate change is happening. I believe our project addresses this issue through an innovative form of interaction. As an art project, it is open to the audience’s own interpretations. However, the presentation of the issue and the authentic experience of interacting with our project make our core theme memorable to them. Big or small, I believe we are making an impact.

Code: link to GitHub

Code: link to Google Drive

Works Cited for documentation:

“The Causes of Climate Change.” NASA. https://climate.nasa.gov/causes/

“Climate Change: How Do We Know?” NASA. https://climate.nasa.gov/evidence/

“Weight Sensor Module SKU SEN0160.” DFRobot. https://wiki.dfrobot.com/Weight_Sensor_Module_SKU_SEN0160

“Borderless World.” teamLab. https://borderless.team-lab.cn/shanghai/en/

Works Cited for programming:

KenStock. “Isolated Tree On White Background.” Pngtree. https://pngtree.com/freepng/isolated-tree-on-white-background_3584976.html

NicoSTAR8. “Explosion Inlay Explode Fire.” Pixabay. https://pixabay.com/videos/explosion-inlay-explode-fire-16640/

“success sounds (18).” Soundsnap. https://www.soundsnap.com/tags/success

“explosion.” Storyblocks. https://www.audioblocks.com/stock-audio/strong-explosion-blast-rg0bzhnhuphk0wxs3l0.html