Project Title: Won Color el Stage

A description of the project can be found in my previous article; the final build does not deviate much from that initial configuration. https://wp.nyu.edu/shanghai-ima-documentation/foundations/interaction-lab/cx592/fnl-prjct-essay-by-changzhen-from-inmis-session/

1. The Strategy

Three players must cooperate as much as they compete to win the game. After all, a mixed color requires at least two colors.

If one player alone inputs too much, the score goes to one of her opponents. For example, if only red inputs, the mixed color is judged purple rather than orange, which is the color that counts toward her score.

If all three input too much, the score decreases for everyone. The larger a player’s input, the larger her decrease.
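The three rules above can be sketched in plain Java. This is only an illustration, not the sketch that ran on the stage: the class and method names are hypothetical, while the thresholds (2.4 for the overload, the factor 3, and the 0.1 minimum) are copied from the Processing code at the end of this post. Each input is assumed to be a value between 0 (no input) and 1 (full input).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class; thresholds are taken from the Processing code in this post.
final class StrategySketch {
    static final double OVERLOAD = 2.4; // total input above which everyone loses points

    // Returns the mixed colors that may earn score for the given inputs (each 0..1).
    static List<String> scoringColors(double red, double yellow, double blue) {
        List<String> result = new ArrayList<>();
        if (!(red + yellow + blue < OVERLOAD)) {
            return result; // too much input in total: nobody scores
        }
        // A mixed color scores when its two primaries dominate the third
        // and its second primary contributes at least a little.
        if (red + yellow > 3 * blue && yellow > 0.1) result.add("orange");
        if (yellow + blue > 3 * red && blue > 0.1) result.add("green");
        if (blue + red > 3 * yellow && red > 0.1) result.add("purple");
        return result;
    }

    // Returns the per-frame score decrease for red, yellow, and blue when the stage is overloaded.
    static double[] penalties(double red, double yellow, double blue) {
        double total = red + yellow + blue;
        if (total <= OVERLOAD) {
            return new double[] {0, 0, 0};
        }
        // Each player loses a share of 100 points proportional to her input.
        return new double[] {100 * red / total, 100 * yellow / total, 100 * blue / total};
    }

    public static void main(String[] args) {
        System.out.println(scoringColors(1.0, 0.0, 0.0)); // red alone: prints [purple]
        System.out.println(scoringColors(0.8, 0.8, 0.0)); // red and yellow: prints [orange]
    }
}
```

Red playing alone hands the score to purple, while red and yellow playing together earn it for orange, which is why cooperation matters as much as competition.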

2. Digital Design

The video is displayed on a 1920×1080 (16:9) TV screen. The image consists of the MikuMikuDance video in the center, the three primary colors on the left with each party’s score, the mixed color on the right, and the current state of the match at the top.

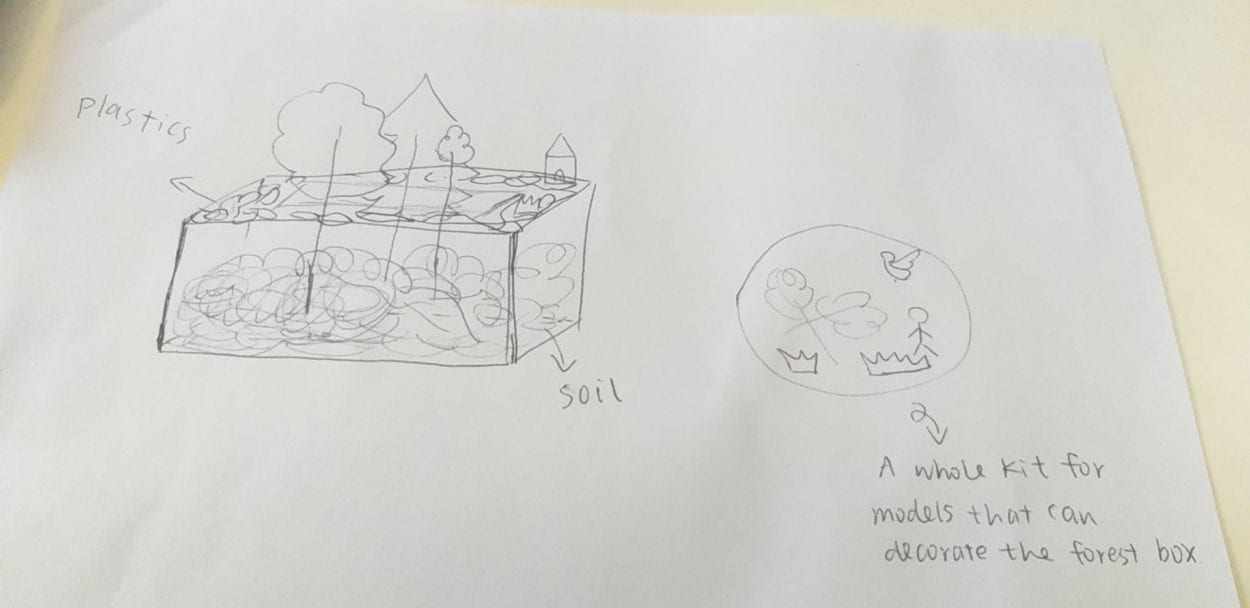
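The mixed color on the right of the screen is computed by subtracting each player’s contribution from white, one channel at a time. A minimal sketch of that calculation in plain Java follows. The class and method names are hypothetical, and the rounding and clamping are my own assumption about how the channel values end up as integers; the constants come from the Processing code at the end of this post, where full red is (255, 0, 10), full yellow is (255, 245, 0), and full blue is (0, 150, 255).

```java
import java.util.Arrays;

// Hypothetical helper class; the constants mirror the Processing code in this post.
final class MixColorSketch {
    // m1, m2, m3 are the red, yellow, and blue input amounts, each in 0..1.
    static int[] mix(double m1, double m2, double m3) {
        // Red channel: only blue removes red (255 at full input).
        double r = 255 - 255 * m3;
        // Green channel: red removes 255, yellow 10, blue 105; 370 in total, rescaled to 255.
        double g = 255 - (255 * m1 + 10 * m2 + 105 * m3) * 255 / 370;
        // Blue channel: red removes 245, yellow 255; 500 in total, rescaled to 255.
        double b = 255 - (245 * m1 + 255 * m2) * 255 / 500;
        return new int[] {toChannel(r), toChannel(g), toChannel(b)};
    }

    // Rounds to the nearest integer and keeps the value inside 0..255 (an assumption of this sketch).
    static int toChannel(double v) {
        return (int) Math.round(Math.max(0, Math.min(255, v)));
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(mix(1, 1, 0))); // red + yellow: prints [255, 72, 0]
    }
}
```

With no input the result is white, with all three at full input it is black, and full red plus full yellow gives an orange tone.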

3. Physical Design

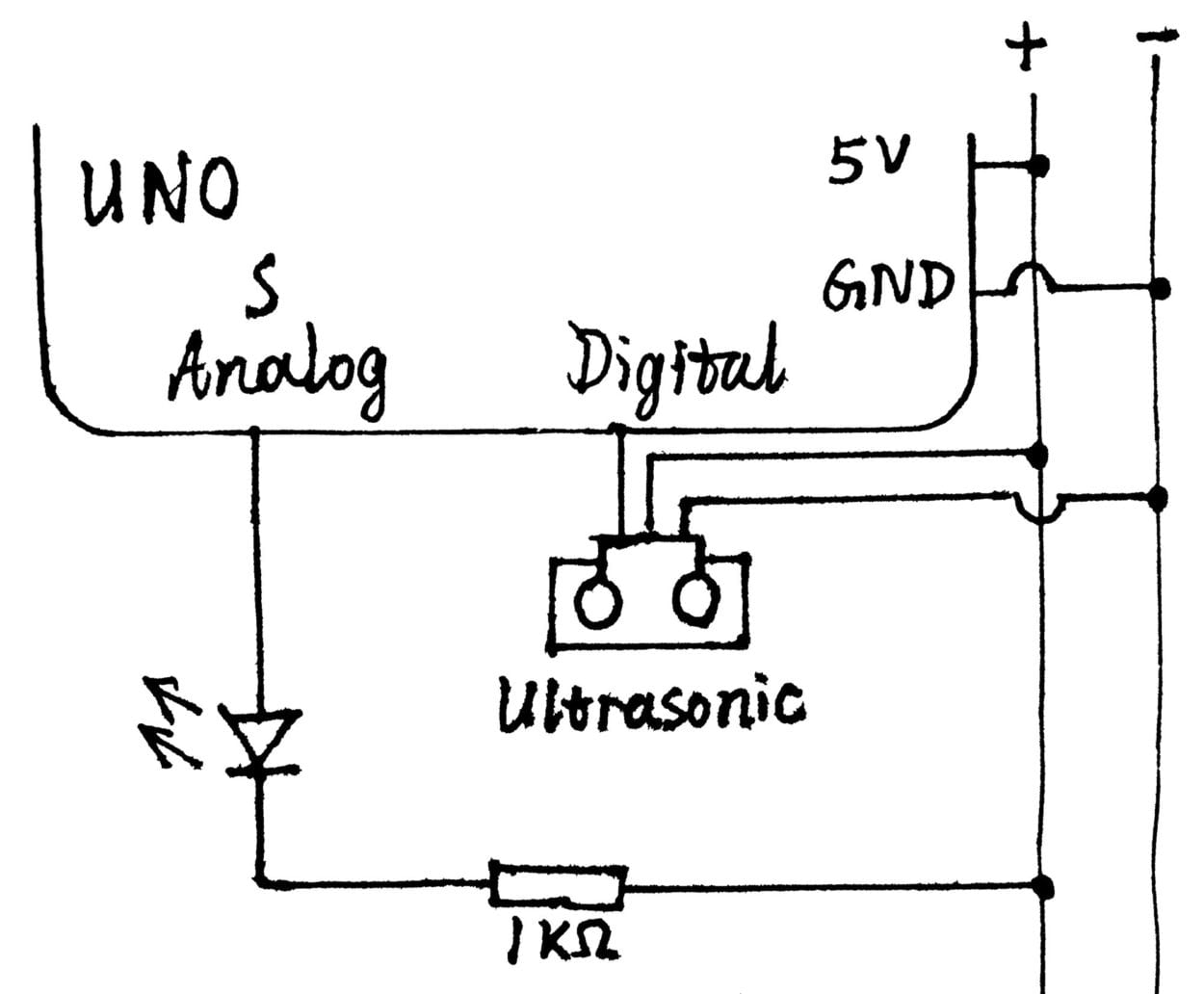

The circuit is hidden in a triangular prism. Holes are laser-cut in each side face to hold an ultrasonic distance sensor and an LED that indicates that player’s input color. Each sensor measures the distance on its side over a range of 0 to 30 cm, and the brightness of the LED changes in response. The prism is made of shiny, reflective material because it is designed to be a stage. A small piece of paper printed with an anime idol is pasted at each corner of its top.

To my surprise, the ultrasonic distance sensor requires a library and connects to a digital pin on the Arduino. The sketch is symmetrical for each player.
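How a distance reading turns into LED brightness and into a player’s input amount can be sketched as follows. This is an illustration in plain Java rather than the Arduino code itself: the class and method names are hypothetical, while the 30 cm limit and the arithmetic follow the Arduino and Processing code at the end of this post.

```java
// Hypothetical helper class; the 30 cm range and formulas follow the code in this post.
final class DistanceSketch {
    static final int MAX_CM = 30; // readings beyond this are treated as 30 cm

    // LED brightness (0..255): the closer the hand, the brighter the LED.
    static int brightness(int distanceCm) {
        int d = Math.min(distanceCm, MAX_CM);
        return 255 - d * 255 / MAX_CM; // integer arithmetic, as on the Arduino
    }

    // Input amount (0..1) used for color mixing and scoring: 1 at 0 cm, 0 at 30 cm or more.
    static double inputAmount(int distanceCm) {
        int d = Math.min(distanceCm, MAX_CM);
        return 1.0 - d / (double) MAX_CM;
    }

    public static void main(String[] args) {
        System.out.println(brightness(15));  // prints 128
        System.out.println(inputAmount(15)); // prints 0.5
    }
}
```

A hand held right at the sensor gives full brightness and full input, and anything at 30 cm or farther counts as no input at all.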

4. Objections and Suggestions to This Project

Marcella first suggested that I get rid of the video and let the event happen on the physical stage alone, with the idol who holds the stage identified by colored stage lights. Second, she argued that if the three players all watch the screen in the same direction, the triangular prism design loses its meaning. Third, she suggested that a better input method might be to sense the movements of the players dancing. As for the first, I regret not taking this advice; I had limited time to make adjustments. As for the second, I think VR glasses instead of a TV on one side would also let the players face each other in a triangle. Her third idea is awesome.

Christina Bowllan and her friend objected to the video content. Through negligence, I did not take feminists like them into account. Had I known that people who know little about anime, comics, and games might not accept the artistic appearance of such female anime characters dancing, I would have changed the content, just as I dropped the idea of mixing colors to draw flags because it was political. I might have chosen landscape videos or something similar.

Professors suggested that I reveal each party’s score so that players know it is a match and know more clearly where they stand. I made that adjustment on the day of the IMA festival. At their suggestion, I also dismantled the paper tent covering the stage to make the stage easier on the eyes.

5. Conclusions

The suggestions from Marcella and the other professors remind me that each component of the project should have a focused purpose that accounts for what the project is about and how it is played.

The feminists’ objections remind me that I should consider when and where a project is appropriate. This one was intended for everyone in the context of this Interaction Lab class, so I should make it acceptable to all.

The core of this project is its strategy, which reflects real-life situations. Victory does not come to a single person; it comes to groups that cooperate and compete. So the strategy can be applied beyond this idol dancing theme to create other colorful, intellectual projects.

Arduino Code

//include the ultrasonic distance sensor library; the three sensors are hooked up to digital pins 2, 4, 7

#include "Ultrasonic.h"

Ultrasonic s1(2);

Ultrasonic s2(4);

Ultrasonic s3(7);

void setup() {

Serial.begin(9600);

}

void loop() {

//read the distance in cm

int d1 = s1.MeasureInCentimeters();

int d2 = s2.MeasureInCentimeters();

int d3 = s3.MeasureInCentimeters();

//restrict the range within 30cm

if(d1 > 30) {

d1 = 30;

}

if(d2 > 30) {

d2 = 30;

}

if(d3 > 30) {

d3 = 30;

}

//control the brightness of color LED according to the distance

analogWrite(9,255-d1*255/30);

analogWrite(10,255-d2*255/30);

analogWrite(11,255-d3*255/30);

//serial communicate to processing

Serial.print(d1);

Serial.print(",");

Serial.print(d2);

Serial.print(",");

Serial.print(d3);

Serial.println();

}

Processing Code

import processing.serial.*;

import processing.video.*;

import processing.sound.*;

//create instance; movie is the video of the dancing idol of that color; BGM is background music

Movie orange;

Movie green;

Movie purple;

SoundFile BGM;

String myString = null;

Serial myPort;

//3, because each serial line carries three values, one per idol

int NUM_OF_VALUES = 3;

int[] sensorValues;

//mixed color

color c;

//red, yel, blu are the incremental scores counted by millis(); Ora, Gre, Pur are the accumulative scores of each player

long red = 0;

long yel = 0;

long blu = 0;

long Ora = 0;

long Gre = 0;

long Pur = 0;

// play BGM only once

boolean play = true;

void setup() {

//TV screen size

size(1920, 1080);

noStroke();

background(0);

setupSerial();

//get the files

orange = new Movie(this, "orange.mp4");

green = new Movie(this, "green.mp4");

purple = new Movie(this, "purple.mp4");

BGM = new SoundFile(this, "BGM.mp3");

textSize(128);

fill(255);

text("mix", 1655, 450);

}

void draw() {

updateSerial();

printArray(sensorValues);

//m1, 2, 3 are multipliers, the input amount, derived from distance

float m1 = 1-float(sensorValues[0])/30;

float m2 = 1-float(sensorValues[1])/30;

float m3 = 1-float(sensorValues[2])/30;

//play BGM only once

if (play == true) {

BGM.play();

play = false;

}

//display each’s color and the mixed on screen as rectangles

fill(255, 255-255*m1, 255-245*m1);

rect(0, 0, 288, 360);

fill(255, 255-10*m2, 255-255*m2);

rect(0, 360, 288, 360);

fill(255-255*m3, 255-105*m3, 255);

rect(0, 720, 288, 360);

c = color(255-255*m3, 255-(255*m1+10*m2+105*m3)*255/370, 255-(245*m1+255*m2)*255/500);

fill(c);

rect(1632, 540, 288, 360);

//judge the mixed color and who earns score at the moment; whose accumulative score is highest, whose idol’s video shall be played

if (m1+m2+m3 < 2.4) {

if (m1+m2 > 3*m3 && m2 > 0.1) {

red = millis()-Ora-Gre-Pur;

Ora += red;

}

if (m2+m3 > 3*m1 && m3 > 0.1) {

yel = millis()-Ora-Gre-Pur;

Gre += yel;

}

if (m3+m1 > 3*m2 && m1 > 0.1) {

blu = millis()-Ora-Gre-Pur;

Pur += blu;

}

}

if (m1+m2+m3 > 2.4) {

Ora -= 100*(m1)/(m1+m2+m3);

Gre -= 100*(m2)/(m1+m2+m3);

Pur -= 100*(m3)/(m1+m2+m3);

orange.stop();

green.stop();

purple.stop();

}

println(Ora);

println(Gre);

println(Pur);

textSize(128);

if (Ora > Gre && Ora > Pur) {

if (orange.available()) {

orange.read();

}

fill(0);

rect(288, 0, 1632, 324);

fill(255);

text("Orange Girl on Stage", 350, 200);

orange.play();

green.stop();

purple.stop();

image(orange, 288, 324, 1344, 756);

}

if (Gre > Ora && Gre > Pur) {

if (green.available()) {

green.read();

}

fill(0);

rect(288, 0, 1632, 324);

fill(255);

text("Green Girl on Stage", 350, 200);

green.play();

orange.stop();

purple.stop();

image(green, 288, 324, 1344, 756);

}

if (Pur > Gre && Pur > Ora) {

if (purple.available()) {

purple.read();

}

fill(0);

rect(288, 0, 1632, 324);

fill(255);

text("Purple Girl on Stage", 350, 200);

purple.play();

orange.stop();

green.stop();

image(purple, 288, 324, 1344, 756);

}

//end of game and show who wins

if(millis() >= BGM.duration()*1000) {

background(0);

fill(255);

if (Ora > Gre && Ora > Pur) {

text("Orange Girl Wins Stage!", 240, 500);

}

if (Gre > Ora && Gre > Pur) {

text("Green Girl Wins Stage!", 240, 500);

}

if (Pur > Ora && Pur > Gre) {

text("Purple Girl Wins Stage!", 240, 500);

}

}

fill(0);

textSize(32);

text(int(Ora), 20, 180);

text(int(Gre), 20, 540);

text(int(Pur), 20, 900);

}

void setupSerial() {

printArray(Serial.list());

myPort = new Serial(this, Serial.list()[3], 9600);

myPort.clear();

myString = myPort.readStringUntil(10);

myString = null;

sensorValues = new int[NUM_OF_VALUES];

}

void updateSerial() {

while (myPort.available() > 0) {

myString = myPort.readStringUntil(10);

if (myString != null) {

String[] serialInArray = split(trim(myString), ",");

if (serialInArray.length == NUM_OF_VALUES) {

for (int i=0; i<serialInArray.length; i++) {

sensorValues[i] = int(serialInArray[i]);

}

}

}

}

}