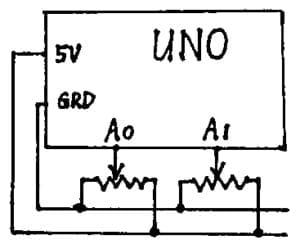

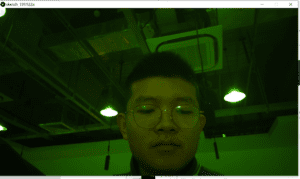

I recently went to my favorite singer's concert, and I thought its lighting would suit Processing's tint() function well. I set the green and blue values to random numbers and used one potentiometer to control the red value. One important step is mapping the potentiometer reading from its 0 to 1023 range to the 0 to 255 range of a color channel.
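Processing's map() does a linear rescaling: a reading's position within the input range becomes the same relative position within the output range. As a hedged illustration outside Processing, the plain-Java sketch below re-implements that arithmetic. The class name `TintMapping` and method `mapRange` are hypothetical, and like Processing's map() it does not clamp out-of-range inputs.

```java
class TintMapping {
    // Linear rescaling of value from [inLow, inHigh] to [outLow, outHigh],
    // following the same formula as Processing's map() (no clamping).
    static float mapRange(float value, float inLow, float inHigh,
                          float outLow, float outHigh) {
        return outLow + (outHigh - outLow) * ((value - inLow) / (inHigh - inLow));
    }

    public static void main(String[] args) {
        // Example analogRead() values from a potentiometer (0 to 1023)
        int[] readings = {0, 512, 1023};
        for (int r : readings) {
            float red = mapRange(r, 0, 1023, 0, 255);
            System.out.println(r + " -> red " + red);
        }
    }
}
```

A reading of 0 gives a red value of 0, 1023 gives 255, and a mid-range reading such as 512 lands at about 127.6, which tint() accepts as a float.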

This is the final result:

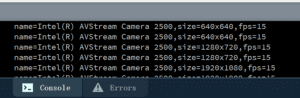

This is my Processing code:

```processing
import processing.serial.*;
import processing.video.*;

Movie myMovie;
String myString = null;
Serial myPort;

int NUM_OF_VALUES = 1;  /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int[] sensorValues;     /** this array stores values from Arduino **/

void setup() {
  size(1280, 1080);
  //myMovie = new Movie(this, "dancing.mp4");
  myMovie = new Movie(this, "hcy.MOV");
  myMovie.play();
  setupSerial();
}

void draw() {
  updateSerial();
  printArray(sensorValues);

  // map the potentiometer reading (0-1023) to a color channel value (0-255)
  float hcy = map(sensorValues[0], 0, 1023, 0, 255);

  // read the next movie frame when one is ready
  if (myMovie.available()) {
    myMovie.read();
  }

  // red follows the potentiometer; green and blue change randomly every frame
  tint(hcy, random(255), random(255));
  image(myMovie, 0, 0);
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[1], 9600);
  // WARNING!
  // You may get an error here if index 1 is not your Arduino's port.
  // Change the index to 0 and run the sketch again.
  // Then check the printed list of ports,
  // find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and replace the index above with that port's number.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
  myString = null;

  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      // only accept complete lines with the expected number of values
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```

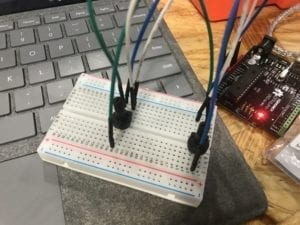

This is my Arduino code:

```arduino
// IMA NYU Shanghai
// Interaction Lab
// For sending multiple values from Arduino to Processing

void setup() {
  Serial.begin(9600);
}

void loop() {
  int sensor1 = analogRead(A0);
  //int sensor2 = analogRead(A1);
  //int sensor3 = analogRead(A2);

  // keep this format
  Serial.print(sensor1);
  //Serial.print(",");  // put comma between sensor values
  //Serial.print(sensor2);
  //Serial.print(",");
  //Serial.print(sensor3);
  Serial.println();  // add linefeed after sending the last sensor value

  // too fast communication might cause some latency in Processing
  // this delay resolves the issue.
  delay(100);
}
```
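The Arduino sketch sends each set of readings as one line of text: values separated by commas and ended by a linefeed from Serial.println(). On the Processing side, updateSerial() trims each line, splits it on commas, and only accepts it when the field count equals NUM_OF_VALUES. If more sensors are added (for example by uncommenting sensor2 and sensor3), the same check can be sketched in plain Java as below. The class `SerialLineParser` and method `parseLine` are hypothetical; it uses `Integer.parseInt` instead of Processing's int(), and, as an assumption of this sketch, rejects lines with non-numeric fields rather than storing 0.

```java
import java.util.Arrays;

class SerialLineParser {
    // Parses one line such as "512,300,40\r\n" into ints.
    // Returns null when the field count is wrong or a field is not a number,
    // so the caller can keep its previous values, as updateSerial() does.
    static int[] parseLine(String line, int expectedCount) {
        if (line == null) return null;
        // trim() removes the trailing "\r\n" that Serial.println() sends
        String[] fields = line.trim().split(",");
        if (fields.length != expectedCount) return null;
        int[] values = new int[expectedCount];
        for (int i = 0; i < fields.length; i++) {
            try {
                values[i] = Integer.parseInt(fields[i].trim());
            } catch (NumberFormatException e) {
                return null;
            }
        }
        return values;
    }

    public static void main(String[] args) {
        // A complete three-sensor line is accepted
        System.out.println(Arrays.toString(parseLine("512,300,40\r\n", 3)));
        // A line with a missing value is rejected
        System.out.println(Arrays.toString(parseLine("512,300\r\n", 3)));
    }
}
```

Rejecting incomplete lines matters because the first line read after opening the port may be cut off mid-value, which is also why setupSerial() throws away its first reading.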

Reflection about the ways technology was used in your project:

After reading the texts, I realized that technology is just one way to convey the meaning of a project and enhance the interactive experience. Technology itself is not the main part of the project; the most important things are, first, the message behind it and, second, the user's experience.

The text introduced Rafael Lozano-Hemmer's installation Standards and Double Standards (2004). I like this project for two reasons. First, it involves full-body movement. Second, it is thought-provoking: are those belts representations of humans? When I first encountered it, what struck me was not how fancy the technology was, but the deeper meaning behind the project.

In our final project, we also want users to have full-body interaction, so we plan to use multiple sensors. We also want users to think about the relationship between humans and nature while experiencing the project.