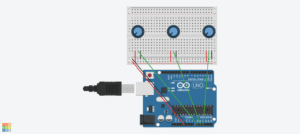

This recitation began with a quick workshop on the map() function, where I reviewed when to use it and what arguments it takes. Afterwards, we were asked to choose one of three workshops: media manipulation, serial communication, or object-oriented programming. Our final project will focus on sending data between Arduino and Processing and on drawing and controlling the image of a marionette in Processing. My partner therefore attended the serial communication workshop, and I chose the one on object-oriented programming.
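To make the format of map() concrete, here is a minimal sketch in plain Java (the language Processing is built on) that reimplements the same linear remapping. The class name `MapDemo` and the sample values are my own and only for illustration; inside a Processing sketch the built-in map() would be called directly.

```java
class MapDemo {
    // Linearly remaps value from the range [start1, stop1] to [start2, stop2],
    // following the formula documented for Processing's map().
    public static float map(float value, float start1, float stop1,
                            float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    public static void main(String[] args) {
        float width = 800; // assumed window width, matching size(800, 600)
        float[] samples = {0, 400, 800}; // hypothetical mouseX values
        for (float mouseX : samples) {
            // Squeeze the full window width into the range width/6 .. width - width/6.
            float x = map(mouseX, 0, width, width / 6, width - width / 6);
            System.out.println(mouseX + " -> " + x);
        }
    }
}
```

Note that map() does not clamp: an input outside the first range produces an output outside the second range.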

In the workshop, Tristan gave a detailed explanation of what an “object” is and of the two parts involved, the class and the instance. We then went through the process of writing object-oriented code, moving from the larger parts (class, instance) to the smaller ones (variables, constructor, functions). Using emoji faces as an example, we worked on the code together. Based on the code we wrote during recitation, I then created my own animation as an exercise.
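The relationship between these parts can be sketched roughly as follows. This is not the code from the workshop; the class name `EmojiFace` and its fields are hypothetical, and the example is written in plain Java so that it runs without Processing's drawing functions.

```java
// The class is the blueprint: it lists the variables every face has,
// a constructor that fills them in, and functions that act on them.
class EmojiFace {
    float x, y;      // variables (fields): position of this face
    float diameter;  // variable: size of this face

    // Constructor: runs once each time "new EmojiFace(...)" is called.
    EmojiFace(float tempX, float tempY, float tempDiameter) {
        x = tempX;
        y = tempY;
        diameter = tempDiameter;
    }

    // Function (method): changes only the instance it is called on.
    void move(float dx, float dy) {
        x += dx;
        y += dy;
    }

    public static void main(String[] args) {
        // Two instances built from the same class, each with its own data.
        EmojiFace a = new EmojiFace(100, 100, 50);
        EmojiFace b = new EmojiFace(300, 200, 80);
        a.move(10, 0); // moves a only; b stays where it is
        System.out.println("a.x = " + a.x + ", b.x = " + b.x);
    }
}
```

In a Processing sketch the class would usually also have a display() function that draws the face with ellipse() and similar calls.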

The exercise required us to use classes and objects, and the animation had to include some level of interactivity. We also had to use the map() function and an ArrayList. I decided to use the mousePressed variable as the means of interaction, so that shapes are created at the mouse’s position. After finding a basic star function online, I modified the code so that the stars are stored in an ArrayList. Drawing one star takes ten calls to vertex(). The stars fall toward the bottom of the screen because yspeed is set to random(3, 7). I also wrote a bounce function, so that a star that hits the left or right boundary reverses its horizontal direction. Finally, I used map() to limit the area in which stars can be created.
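As an alternative to typing the ten vertex() offsets by hand, the ten points of a star could be computed in a loop. The following is only a sketch under my own assumptions: the class `StarPoints`, the function `starVertices`, and the two radius parameters are hypothetical names, and the star is centred on (cx, cy) rather than anchored at its top tip. It is written in plain Java so that it runs outside Processing.

```java
class StarPoints {
    // Returns ten {x, y} points for a five-pointed star centred on (cx, cy).
    // Points alternate between the outer radius (tips) and the inner radius (notches).
    public static float[][] starVertices(float cx, float cy, float outer, float inner) {
        float[][] pts = new float[10][2];
        for (int i = 0; i < 10; i++) {
            float r = (i % 2 == 0) ? outer : inner;
            // Start at the top tip (-90 degrees) and advance 36 degrees per point.
            // Screen coordinates have y pointing down, so -90 degrees is "up".
            double angle = -Math.PI / 2 + i * Math.PI / 5;
            pts[i][0] = cx + (float) (r * Math.cos(angle));
            pts[i][1] = cy + (float) (r * Math.sin(angle));
        }
        return pts;
    }

    public static void main(String[] args) {
        float[][] pts = starVertices(400, 300, 50, 20);
        for (float[] p : pts) {
            System.out.println(p[0] + ", " + p[1]);
        }
    }
}
```

Inside a display() function, each pair would be passed to vertex() between beginShape() and endShape(CLOSE). Taking the outer radius from a per-star size value would let every star be drawn at a different scale.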

This is my Processing code (two parts):

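    ArrayList<Stars> stars = new ArrayList<Stars>();

    void setup() {
      size(800, 600);
      noStroke();
    }

    void draw() {
      background(130, 80, 180);

      // Update and draw every star created so far.
      for (int i = 0; i < stars.size(); i++) {
        Stars s = stars.get(i);
        s.move();
        s.bounce();
        s.display();
      }

      // Remap the mouse position so that new stars appear only
      // in the middle two thirds of the window.
      float x = map(mouseX, 0, width, width/6, width - width/6);
      float y = map(mouseY, 0, height, height/6, height - height/6);

      // While the mouse button is held down, add one star per frame.
      if (mousePressed == true) {
        stars.add(new Stars(x, y));
      }
    }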

    class Stars {
      float x, y, size;
      color clr;
      float xspeed, yspeed;

      Stars(float tempX, float tempY) {
        x = tempX;
        y = tempY;
        size = random(10, 100);  // assigned here but not yet used by display()
        clr = color(255, random(180, 255), random(50, 255));
        xspeed = random(-3, 3);
        yspeed = random(3, 7);   // always positive, so the star falls
      }

      // Draw the star as a closed shape of ten vertices; (x, y) is the top tip.
      void display() {
        fill(clr);
        beginShape();
        vertex(x, y);
        vertex(x+14, y+30);
        vertex(x+47, y+35);
        vertex(x+23, y+57);
        vertex(x+29, y+90);
        vertex(x, y+75);
        vertex(x-29, y+90);
        vertex(x-23, y+57);
        vertex(x-47, y+35);
        vertex(x-14, y+30);
        endShape(CLOSE);
      }

      void move() {
        x += xspeed;
        y += yspeed;
      }

      // Reverse the horizontal direction at the left and right edges.
      void bounce() {
        if (x < 0) {
          xspeed = -xspeed;
        } else if (x > width) {
          xspeed = -xspeed;
        }
      }
    }
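One possible refinement: the sketch adds a star on every frame while the mouse is held, and stars that fall past the bottom edge are never removed, so the ArrayList keeps growing. A minimal way to prune it is sketched below in plain Java. The class `PruneDemo` and the function `removeOffscreen` are hypothetical names, and the list holds bare y positions instead of Stars objects so that the example runs without Processing.

```java
import java.util.ArrayList;

class PruneDemo {
    // Removes every y position that has moved below the bottom edge.
    // Iterating backwards keeps the remaining indices valid after remove().
    public static void removeOffscreen(ArrayList<Float> ys, float height) {
        for (int i = ys.size() - 1; i >= 0; i--) {
            if (ys.get(i) > height) {
                ys.remove(i); // i is an int, so this removes by index
            }
        }
    }

    public static void main(String[] args) {
        ArrayList<Float> ys = new ArrayList<Float>();
        ys.add(100f);
        ys.add(650f);
        ys.add(700f);
        ys.add(599f);
        removeOffscreen(ys, 600f); // assumed window height, matching size(800, 600)
        System.out.println(ys);
    }
}
```

In the sketch itself, the same backwards loop could run over stars in draw() and test `s.y > height`; since y is the top tip of the star, a star that fails this test is entirely below the window.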