Partner: Jonathan Lin

Slides: https://docs.google.com/presentation/d/157TcDINGDDBCXFQA7j2hS8LGTQBwk2NKFEYD5TLOgdk/edit?usp=sharing

Concept and Design

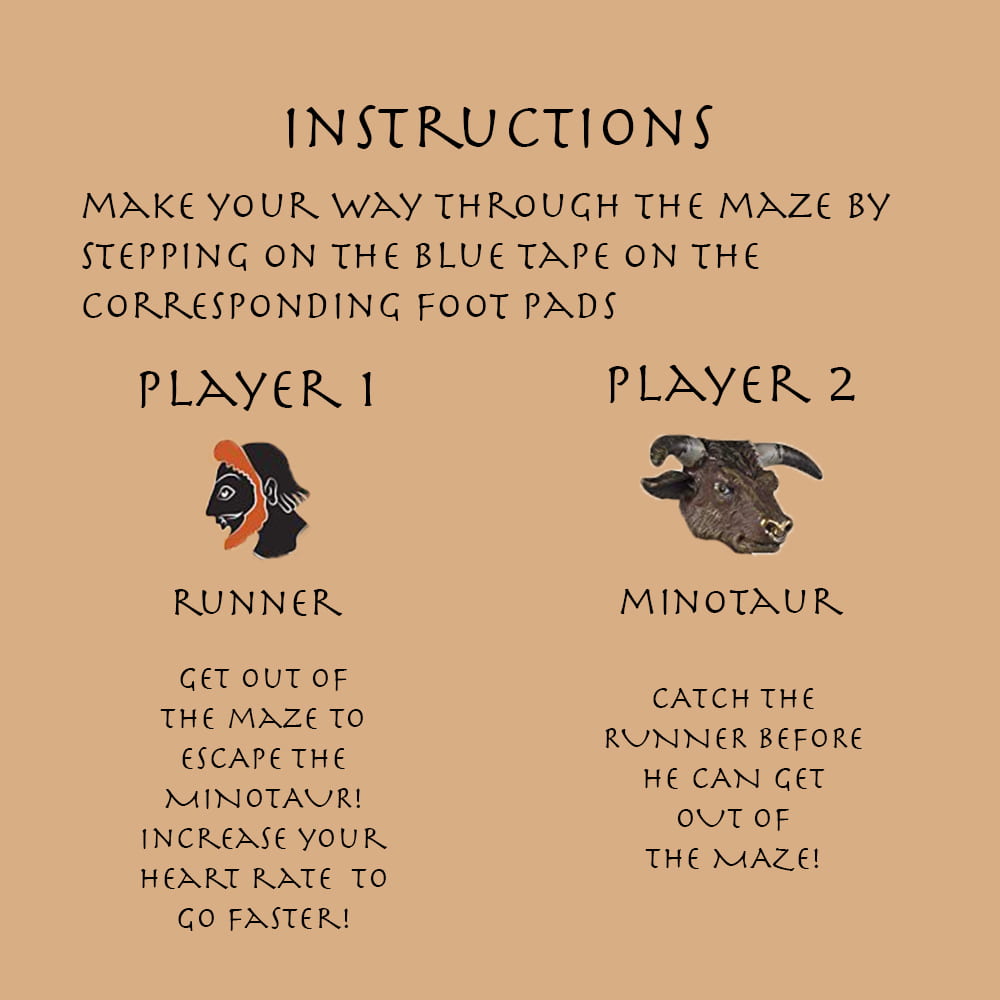

In the initial stages of designing our maze game, the interaction centered on heart rate sensors: a higher heart rate would make the player’s character move faster. To move the players within the maze, we first decided to simply use a joystick, since it maps easily onto the X and Y axes. However, after receiving class feedback, we determined that a joystick would not make the game challenging or unique enough, since people are already so accustomed to joysticks from many other games. We took Malika’s advice to use a Dance Dance Revolution (DDR) foot pad to control the players, since stepping on it would simulate walking within the maze.

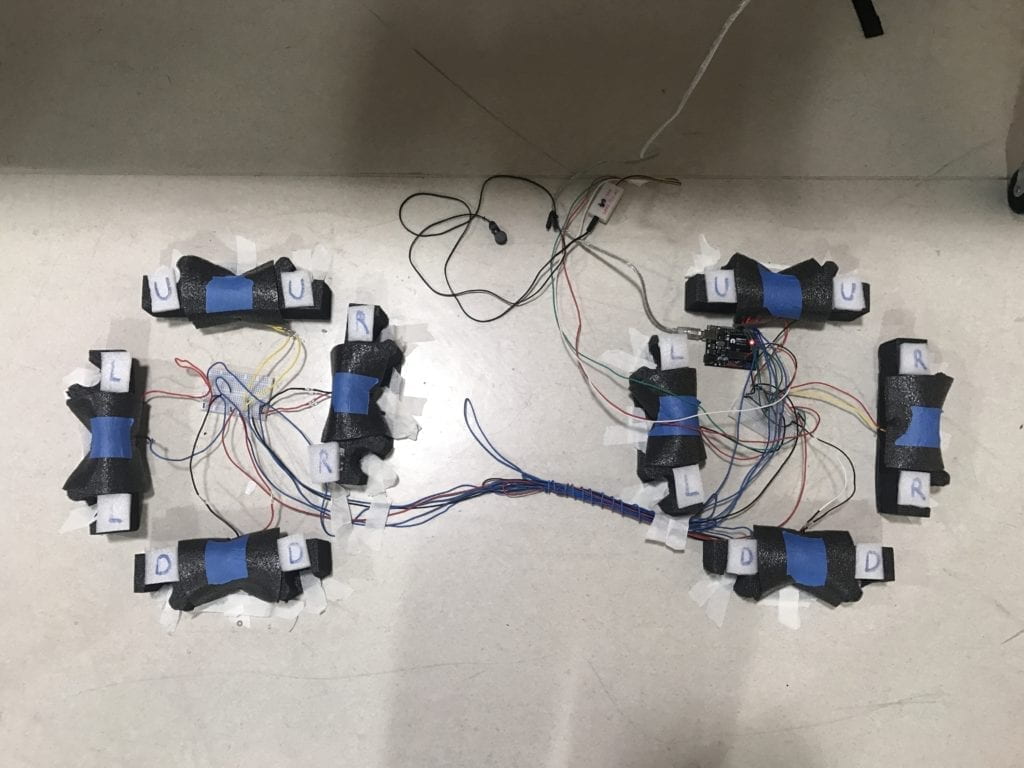

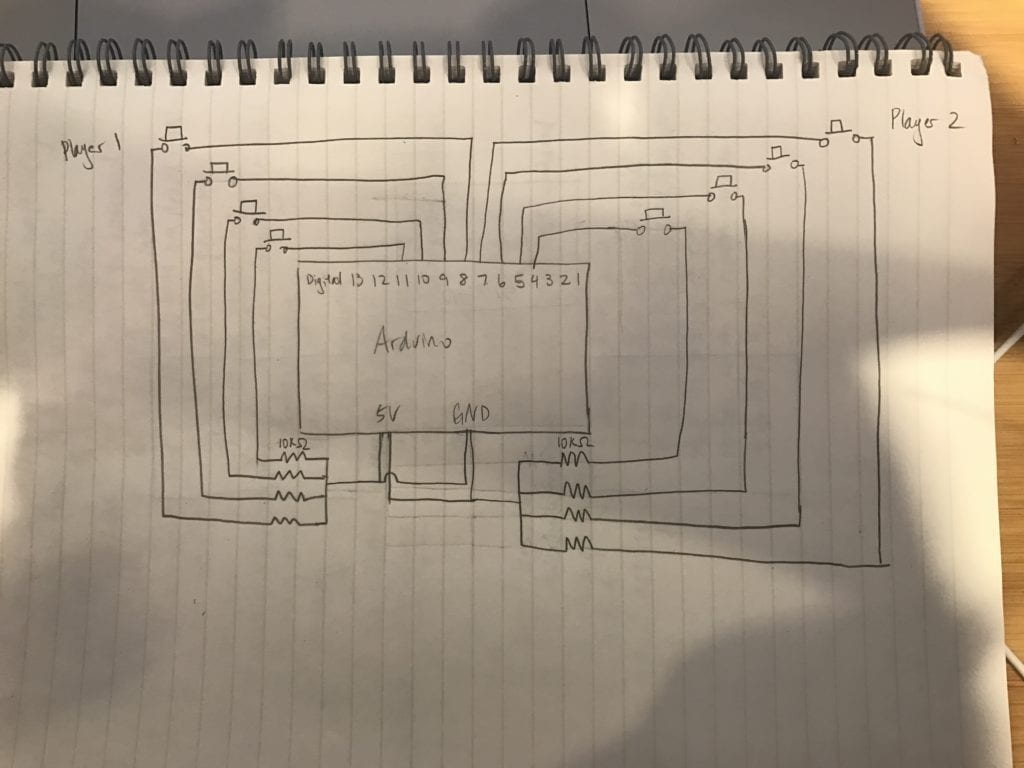

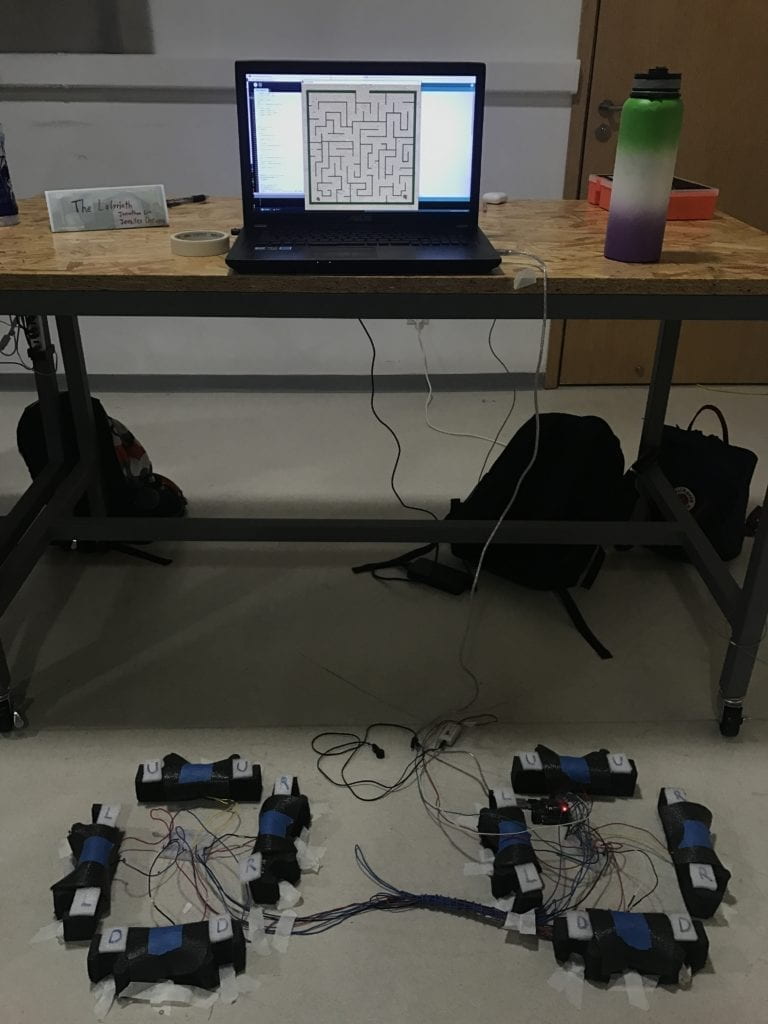

Since we did not have access to an actual DDR pad, I made a similar one out of arcade buttons encased in packaging foam, which I found in the cardboard room, and secured it with wire. I chose foam because it would safely encase the buttons and prevent them from breaking when people stepped on them. While it was not the most aesthetically pleasing construction, the material was easy to customize to fit the needs of the project. Cardboard, 3D prints, or laser-cut boxes would not have worked as well, because they are less forgiving of people’s forceful stomps.

Fabrication and Production

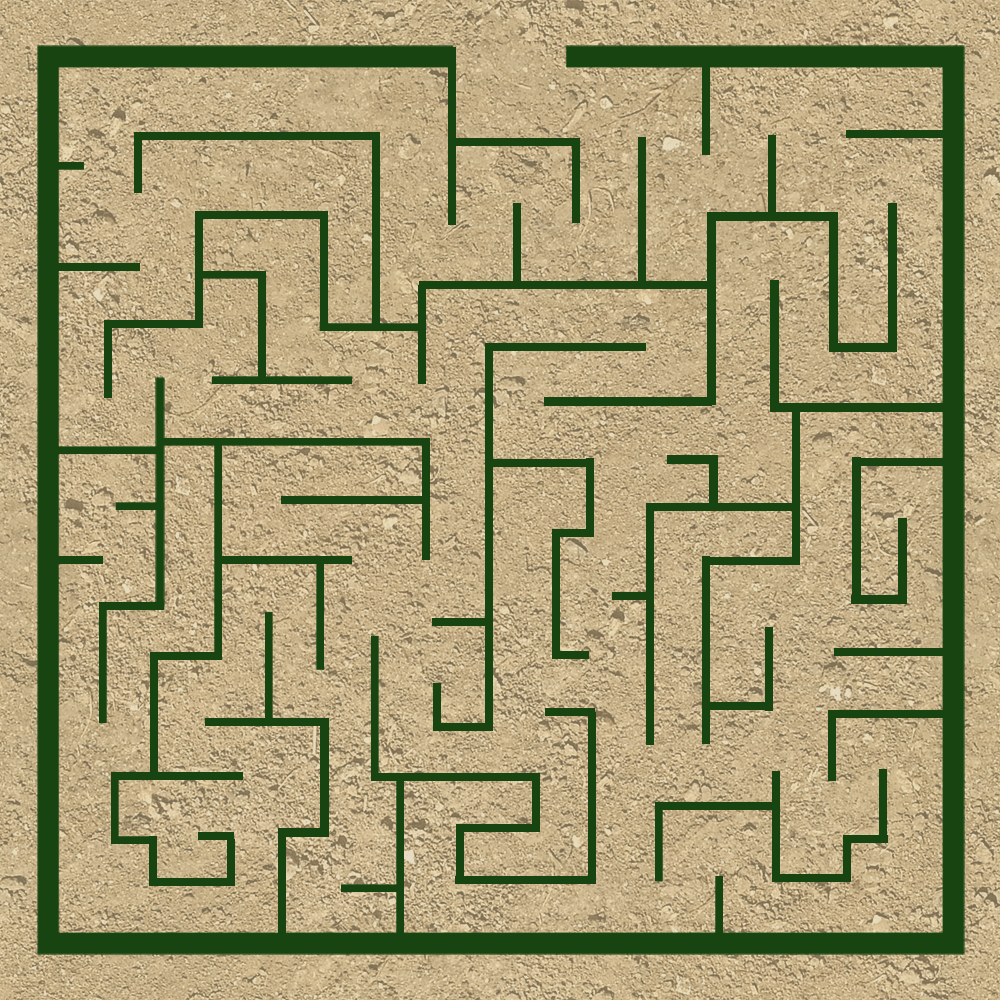

I took over design and production, while Jonathan covered the code. I began by designing a maze from scratch in Photoshop. I didn’t want to use a preexisting maze: because the two players compete against each other and cannot start in the same location, I needed a layout that was fair to both characters starting in different parts of the maze. Once the maze was done, we first tested playability with the computer keyboard. We added a video summarizing the myth of the Labyrinth, along with instructions, at the beginning of the game so that users would understand the context and how to play.

Then, we moved on to constructing the foot pads. Jonathan thought of adding a sprint function for the Minotaur using pressure sensors, so for user testing, we had Player 1 (the Runner) controlled with buttons and Player 2 (the Minotaur) controlled with pressure sensors. However, we discovered that pressure sensors were not as effective as buttons for moving the players, because the foam encasing the sensors dampened the force of the footsteps. Additionally, the code needed to get the same result would have been too much of a hassle, so we decided to scrap the pressure sensors and the sprint function and use buttons for both players. The foot pads were mostly effective, but when people stepped too aggressively, wires were likely to disconnect, so the game would sometimes stop working properly mid-play.

In addition to the interactive foot pads, we added a heart rate sensor for Player 1 (the Runner), who could run faster by getting their heart rate up. However, the long and unstable wire attachments made it difficult for the player to make effective use of this function. The wires would often disconnect, and the player would be too focused on moving within the maze to remember to get their heart rate up to go faster.
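To illustrate the heart rate idea, here is a minimal sketch of how a reading could be mapped to the Runner’s speed. This is not the code Jonathan wrote for the game. The function name `speed_from_bpm`, the resting and maximum heart rates, and the speed range are all hypothetical values chosen for illustration. The sketch also falls back to the base speed when no reading is available, since disconnected wires were a recurring problem.

```python
from typing import Optional


def speed_from_bpm(
    bpm: Optional[float],
    resting_bpm: float = 70.0,   # assumed resting heart rate (hypothetical)
    max_bpm: float = 150.0,      # assumed heart rate for top speed (hypothetical)
    base_speed: float = 1.0,     # speed with no heart rate bonus (hypothetical)
    max_speed: float = 2.0,      # speed at or above max_bpm (hypothetical)
) -> float:
    """Linearly map a heart rate reading to a movement speed."""
    if max_bpm <= resting_bpm:
        raise ValueError("max_bpm must be greater than resting_bpm")

    # No reading (for example, a disconnected wire): play at base speed.
    if bpm is None:
        return base_speed

    # Fraction of the way from resting to max heart rate, clamped to [0, 1].
    t = (bpm - resting_bpm) / (max_bpm - resting_bpm)
    t = max(0.0, min(1.0, t))

    return base_speed + t * (max_speed - base_speed)
```

With these assumed values, a reading halfway between resting and maximum gives a speed halfway between base and top speed, and readings outside the range are clamped so the Runner never moves slower than the base speed.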

Conclusions

Our goals in this project were to create a fun, engaging game that also educated users about the myth of the Labyrinth. Our project aligns with interaction because users control their players’ movements in a cyclical cause-and-effect relationship by stepping on the foot pads. It falls short of interaction in one respect: our attempt to make it more interactive by adding the heart rate sensor was not very effective, since people did not really make use of this function. Ultimately, players interacted with the game by engaging their bodies and minds to control their movements around the maze, making it a fun experience for both players.

If I had more time, I would have wanted to make the heart rate sensor a bigger part of the game. Instead of having only one player use it within the maze, both players would use it at the start of the game, so they could first get their heart rates up and then focus on playing. I’ve learned that it takes good communication and clear goal setting to reach a solid finished product, since both collaborators need to be on the same page to create good work together. Our project not only engages the body and mind at the same time, it also seeks to educate others about Greek mythology and inspire them to explore more of it. It was a valuable learning experience for me to combine visual design, in creating the maze, with functional design, in creating the foot pads.