Project Link:

http://imanas.shanghai.nyu.edu/~ac6596/Internet_Art_Project/

Project Proposal:

The project was conceived as an Internet-based audiovisual novel. The project attempts to answer the question: “What if someone were to narrate your life?” In order to answer this question, two perspectives are presented: the narrator, and the character. The narrator is unable to hear the character’s thoughts and vice versa. This creates an interesting parallel for the user to explore, and perhaps think about. The main ‘point’ of the project is to illustrate this odd phenomenon and how it is often explored in story writing for world-building. I try to illustrate this by allowing the user to choose whether or not to listen to either perspective, which presents a different side of the story.

However, this is not to say that the user will be isolated from the other perspective: the audio stops each time the perspective is swapped, but the other perspective’s side of the story is still presented in the text. Additional sound is added to help the user associate the images with the text.

Project Description:

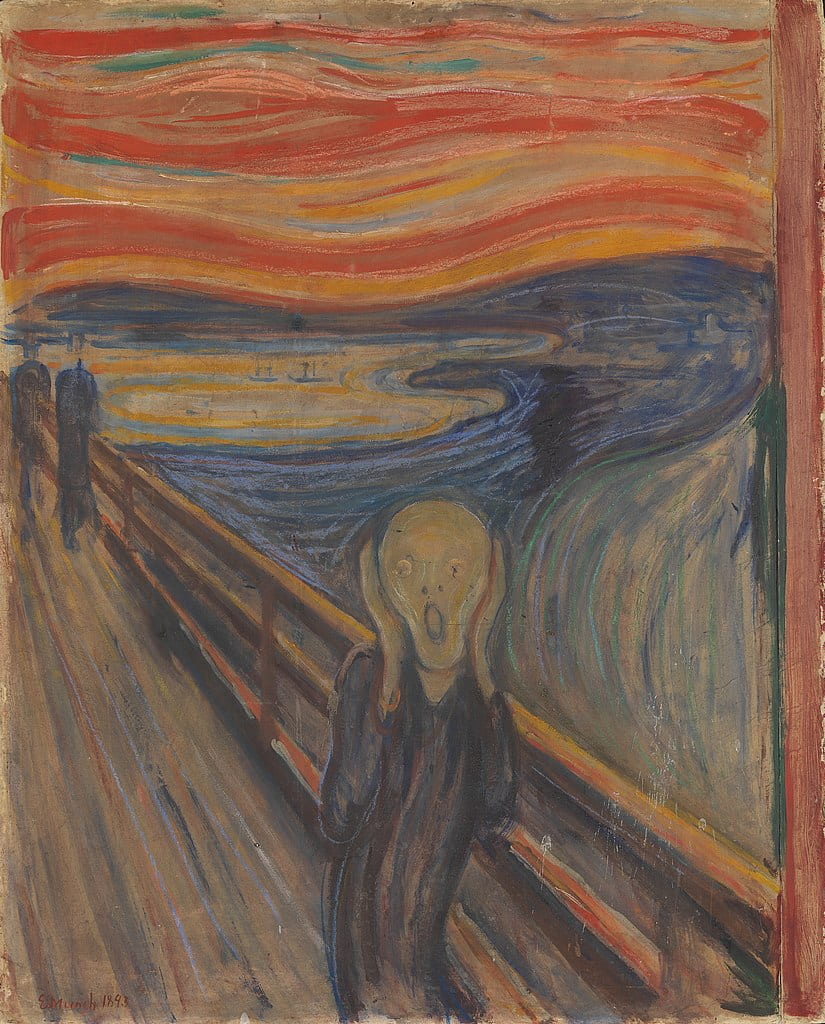

The work I have created is an audio-visual novel presenting the dichotomy of a third-person and first-person narrative. The idea is to represent how words can often disturb and create a different perception of reality. I have attempted to do this by presenting two different texts, with the ability to switch between them. They both tell the same story (i.e. a girl’s walk to her father’s grave), but with the inability to communicate with each other, except through what both can hear (the voice of the main character, Miki). The project is script-based, modeled on a side-by-side comparison of a movie script and a storyboard in black and white.

Process:

Despite the rather realistic advice of my peers, I decided to work on my project alone. I felt unsatisfied with the mediocre effort reflective of previous group members and so I thought it would be best to rest all of the responsibility on my shoulders. This, I felt, would push the boundaries of what I would be able to practice with, and make sure that I felt motivated to complete my final project. After all, if you work alone, who do you blame but yourself?

Initially, I was going to make an interactive music video, replicating an artist’s style that I loved. The artist I was going to replicate is named In Love With a Ghost. Essentially, the style was that a few chords were repeated, a relatively high voice was used, and the sounds of the environment were looped to create a beat. This, I felt, could be applied to the setting of the IMA Lab, which is often bustling with activity. It definitely would have been a project I would have liked to see come to life.

However, I had a change of heart. The process started around the time when my previous project was being done, where I was also tasked to write a 1000 word short-story for participation in a creative-writing program within NYU.

Due to the short word count, I decided to take inspiration from two writers that I know fairly well, Natsume Sōseki and Kazuo Ishiguro.

Natsume Sōseki was a Japanese writer who lived through the dawn of the 20th century, and some more. He was considered a prominent member of Japanese literary culture, and had a profound stylistic effect on aspiring Japanese writers. His work, Kokoro, chronicles one man’s regret, which ends with his suicide. The piece, in my understanding, emphasizes the association of memory with guilt, showcased by Sensei’s inability to forgive himself. In my piece, I attempt to mimic a light-hearted version of this sensation, making it more of a ‘slice of life’ style instead. Stylistically, I tried to keep his descriptive style while replacing the first-person narration with a third-person narrator.

Kazuo Ishiguro is a Japanese-born British writer who has also received critical acclaim. His book, Never Let Me Go, is a dystopian science fiction novel. It chronicles the first-person experience of a woman named Kathy, who sees the two people she knows and loves leave her. The piece plays with a bittersweet feeling of regret and loss, tying in the two for the fated plot-twist that initially shocked me. Foregoing the plot-twist due to time constraints, I chose instead to borrow the unusual inner peace that the narrator exudes in the end scene, despite the loss experienced.

Their writing style, as well as the gripping tales they told, served as inspiration for the story, which ended up being one girl’s journey to her father’s grave, where she laments her issues surrounding a boy named Jin. The father’s gravestone would be engraved with the name Jin, as a sort of ironic easter egg that I put in for readers as a subtle nod to the writers who inspired me.

Although I made it my sole purpose to work on the video project as much as I could, I began to wonder: what if I made a novel on a website? I therefore began to draw assets on my flight back to Shanghai (I had gone to Shenzhen for a weekend), as I thought about this idea.

For the art style that I settled on, I relied mostly on my own style, which was not based on anything in particular. In general, I am more used to drawing in an anime style, as I often try to replicate the artistry of some Japanese anime that I enjoy watching. Therefore, I tried to make the panels simple and hand-drawn, as the initial idea was that the story would be a voice-over of the script that was displayed, a sort of rough draft shown to the user. It was in no way an attempt to replicate or even imitate (visually) real life. This, I thought, was something that I wanted to convey through the story and the way that storytelling works to immerse someone in a world, even if they know that the world is not real. The goal, however, was for the world to be believable. Therefore, the drawings are made intentionally simple and plain, to emphasize the need to pay attention to the audio.

And so, when I finally finished my debacle of a video project, I began to expand on the story, writing additional dialogue to explain some of the earlier hints that I had made toward the main twist of the story: who is the main character ignoring, and why?

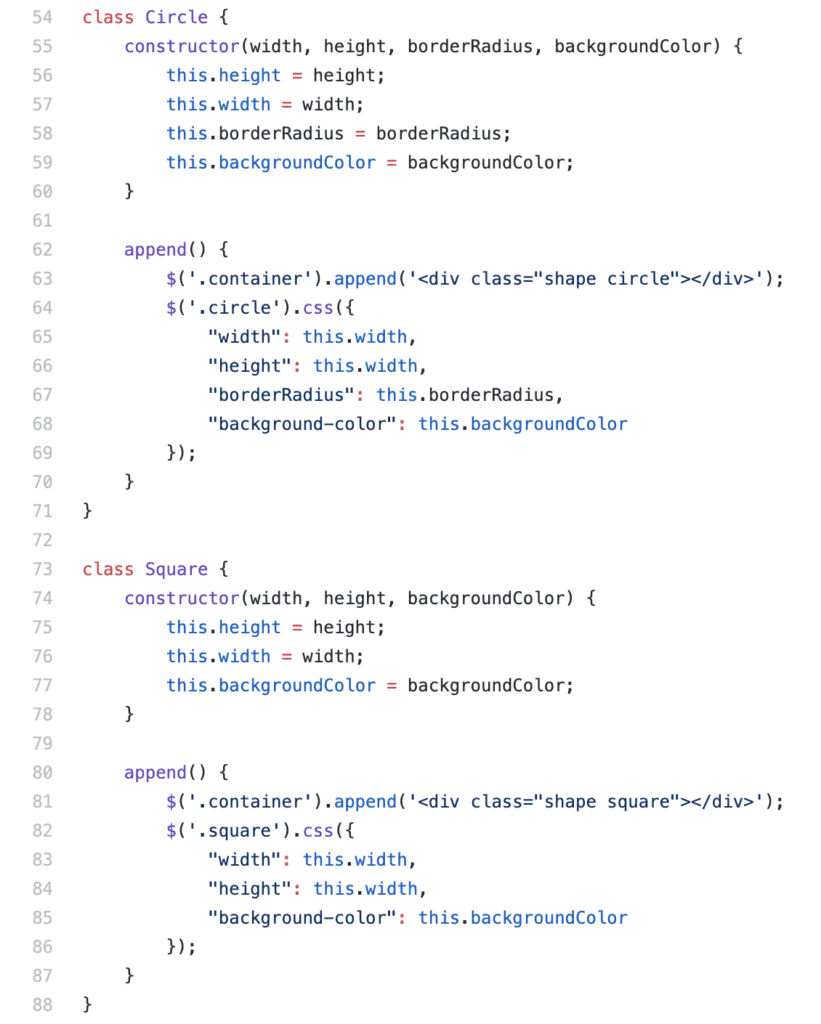

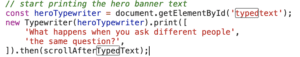

I remembered, initially, that Samantha Shi – an IMA senior and fellow classmate – had an interesting function that typed out script in HTML. I sought out how to do this.

However, when reading her code, I encountered a huge issue: I don’t understand jQuery.

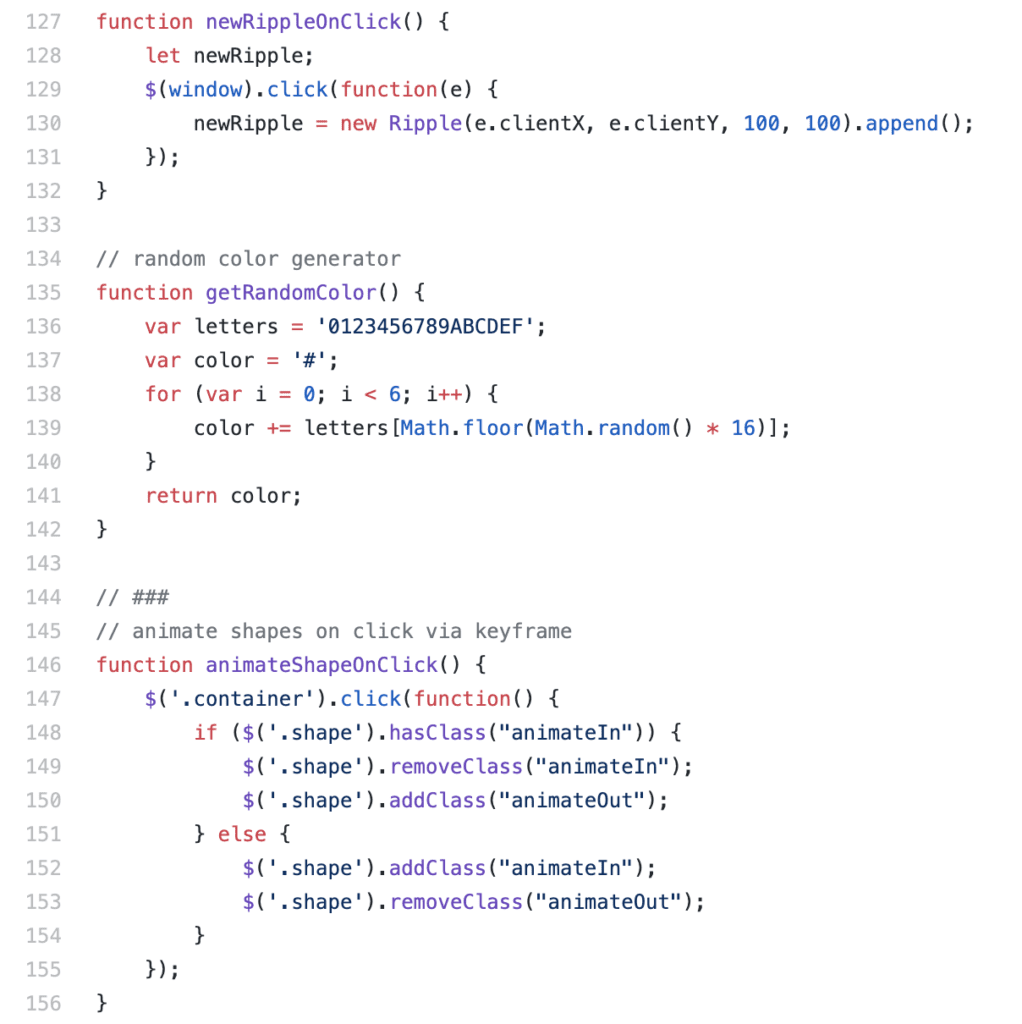

Her code is displayed below.

Here is the code that presents the inner-html, which I understood to be the thing that printed the script.

This script shows the typewriter function, which was used to print out.

The typewriter function is printed here, where a constructor was used in order to make a more efficient version of what I would eventually construct.

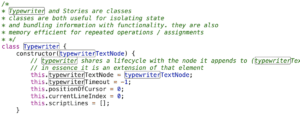

Not wanting to learn an entire library for the sole purpose of a solo project, I decided that my first task was to translate this into plain JavaScript.

Initially, I started with the basic website and CSS, and learned how strings and substrings worked.

This meant that I had to construct a run function that would print out a line. What I ended up getting looked something like this:

function runtext() {

var str = document.getElementById("scriptwriter").innerHTML;

//changes scriptwriter to equal the text string length minus one character

str = str.substr(0,str.length-1) ;

//gets the character of ss using charAt

console.log(scripttype);

str += scripttype.charAt(ss);

//sets scriptwriter to equal new string"str"

document.getElementById("scriptwriter").innerHTML = str;

}

After this, the purpose was to automate the function, and so it ended up transitioning into this.

function runtext() {

var str = document.getElementById("scriptwriter").innerHTML;

//changes scriptwriter to equal the text string length minus one character

str = str.substr(0,str.length-1) ;

//gets the character of ss using charAt

console.log(scripttype);

str += scripttype.charAt(ss);

//sets scriptwriter to equal new string"str"

document.getElementById("scriptwriter").innerHTML = str;

// adds to counter of var ss

//autoscrolling feature

var write = document.getElementById("script");

write.scrollTop += 100000;

//if (Boolean(stopplease = true)) {

console.log('whatthefuck')

//look at this

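//advance the counter once per tick (a while loop here would never exit, since nothing inside it changes stopplease)

if (stopplease == true){

ss++;

console.log('yeet')

}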

console.log('excuseme')

//}

// stops the text from running after narrator[0] reaches 0.

//else

if (ss > scripttype.length) {

clearInterval(gottagofast);

console.log('Ihopethisworks')

//idk why this works but it just does -> autoplay feature but delays the play after 500 miliseconds

setTimeout(play, 500);

document.getElementById("scriptwriter").innerHTML += "<br><br>";

}

document.getElementById("scriptwriter").innerHTML += "_";

}
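For readers who want the core idea without the debugging lines, the typewriter effect can be reduced to a small sketch. This is not the project's actual code: `typeStep`, `startTyping`, and the 50 ms delay are assumed names and values chosen for illustration. Only the trailing `_` cursor and the idea of stopping once the counter reaches the script length come from the function above.

```javascript
// Hypothetical sketch, not the project's code: names and delay are assumptions.
const CURSOR = "_";

// Returns the text to display once `count` characters of `script` are revealed.
// A cursor is appended until the whole script has been typed.
function typeStep(script, count) {
  const shown = script.slice(0, Math.min(count, script.length));
  const done = count >= script.length;
  return { text: done ? shown : shown + CURSOR, done: done };
}

// Drives typeStep on a timer. `render` is called with each new frame of text,
// and the interval is cleared once the script is fully typed.
function startTyping(script, render, delayMs = 50) {
  let count = 0;
  const timer = setInterval(() => {
    count += 1;
    const step = typeStep(script, count);
    render(step.text);
    if (step.done) {
      clearInterval(timer);
    }
  }, delayMs);
  return timer;
}
```

In a browser, `render` could be something like `(t) => { document.getElementById("scriptwriter").textContent = t; }`. Keeping the counter outside the displayed string avoids having to strip the cursor off the element's contents on every tick.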

This whole process took about a week of work, with me borrowing the help of Tristen and Dave for extensive periods of time. BIG SHOUT OUT TO THEM.

In the meantime, to make sure that I had enough time to do the rest of my work, I decided to finish the majority of the artwork that I would be using.

There were no switching perspectives yet; the idea was just to have one huge line of text print and have images displayed.

However, I went to Nimrah just to check in with my concept, where I realized that my concept was lacking: In its current state, the project did not fulfill my initial goals, and some suggestions came to light. What about contrasting the views of the narrator and the main character?

And so, I had more work to do on the script function that I had initially written.

Having two scripts run side by side while keeping everything else functional proved to be a very difficult task for me alone. Thankfully, I had the time and patience of the fellows to help me work through the issue.

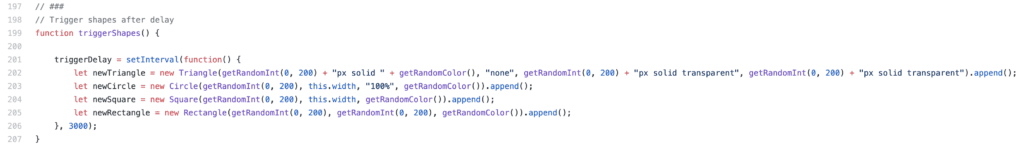

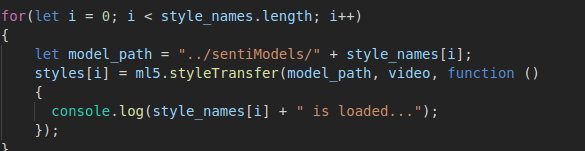

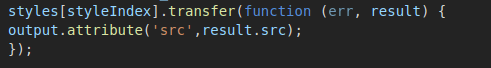

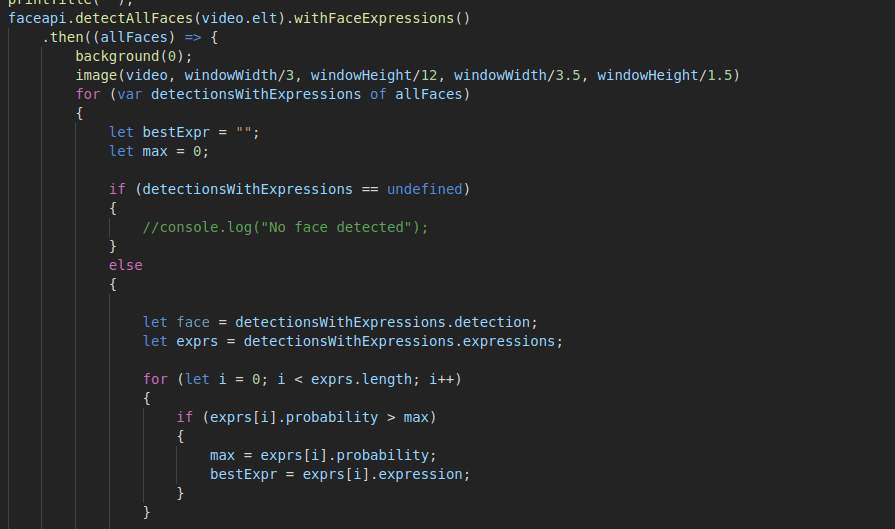

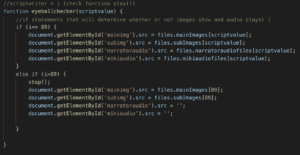

Prints out each script line individually at the same time (this is how the scripts can be swapped):

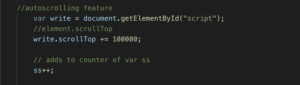
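The idea of typing both scripts at the same time so that they can be swapped might be sketched as follows. This is an illustration under assumptions, not the code in the screenshot: `createReader` and its method names are made up, and the sketch only covers a single shared counter with a flag for which perspective is displayed.

```javascript
// Hypothetical sketch: names and structure are assumptions, not the project's code.
function createReader(narratorLines, mikiLines) {
  const lines = { narrator: narratorLines, miki: mikiLines };
  const state = { line: 0, chars: 0, active: "narrator" };

  return {
    // Reveal one more character of the current line in both scripts at once.
    tick() {
      state.chars += 1;
    },
    // Switch which perspective is displayed without resetting progress.
    swap() {
      state.active = state.active === "narrator" ? "miki" : "narrator";
    },
    // Text of the active perspective typed so far.
    text() {
      return lines[state.active][state.line].slice(0, state.chars);
    },
    // True once both current lines are fully typed.
    lineDone() {
      const longest = Math.max(
        lines.narrator[state.line].length,
        lines.miki[state.line].length
      );
      return state.chars >= longest;
    },
  };
}
```

Because both scripts share one counter, swapping mid-line shows the other perspective at the same point in time rather than restarting it.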

Autoscroll Feature for the text (so that the user does not have to scroll down):

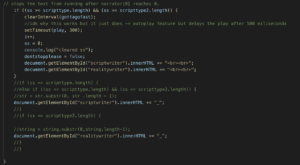
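As a small aside on the autoscroll, an alternative to adding a very large number to `scrollTop` is to set it to the container's `scrollHeight`. The helper name `scrollToBottom` is an assumption made for this sketch; `scrollTop` and `scrollHeight` are standard element properties.

```javascript
// Hypothetical helper: scrolls a container to its last line.
// `box` is any object with scrollTop and scrollHeight, such as the
// element with id "script". In a browser the value is clamped to the
// maximum scroll position, so this always lands at the bottom.
function scrollToBottom(box) {
  box.scrollTop = box.scrollHeight;
  return box.scrollTop;
}
```

In the page this would be called after each character is typed, for example `scrollToBottom(document.getElementById("script"))`.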

Stops function if line printed length is larger than script length:

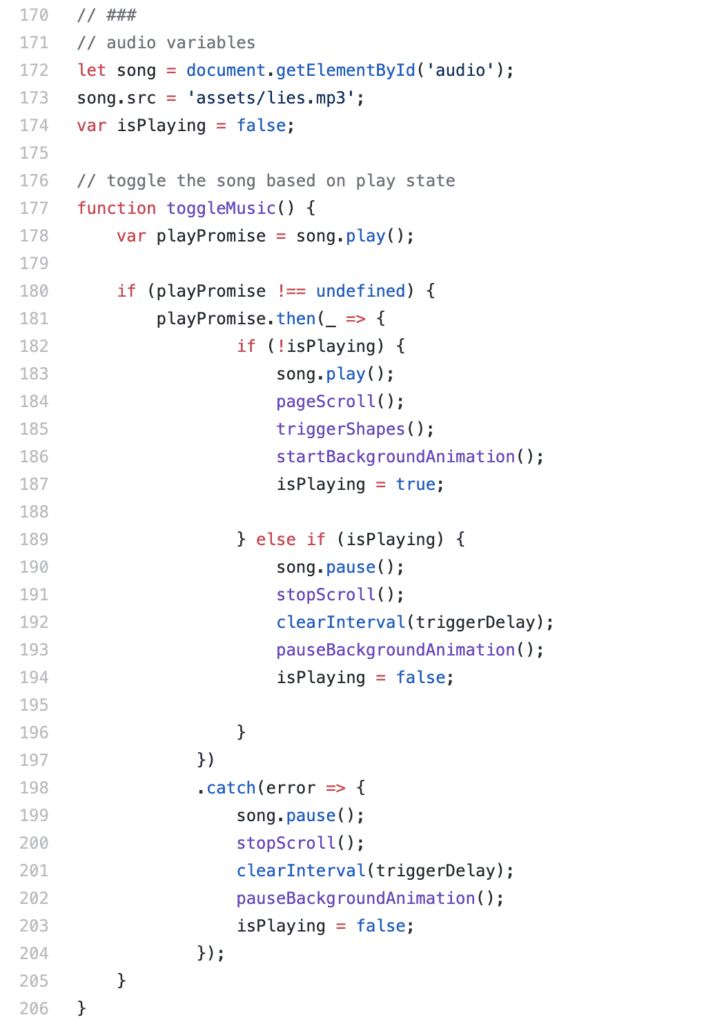

Pause Function:

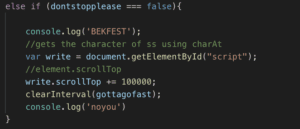

The final code looks something like this, with booleans attached in order to improve functionality when the script is paused and played.

Essentially, the hardest part of this was learning and putting together the built-in functions I was using in JavaScript, which is why I have so many comments in my code.

A huge part of this project was learning how to read my own code, understand it, and reuse terms in order to fix functionality. Unfortunately, I am still lacking in skill, and I need more practice in order to really become comfortable with these functions. However, I think the instruction I received over the course of this project was quite nice.

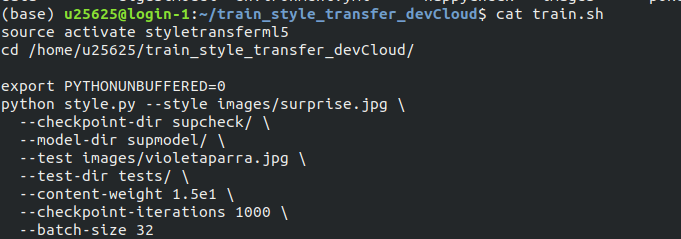

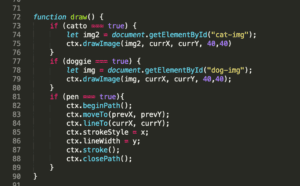

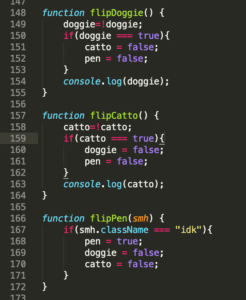

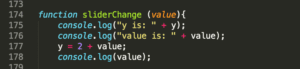

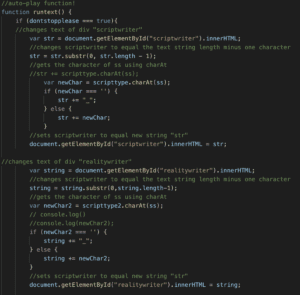
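The role of the booleans in pausing and playing can be illustrated with a minimal sketch. It assumes a single `paused` flag that the timer callback checks on every tick; `createPlayer` and its method names are hypothetical and are not taken from the screenshots above.

```javascript
// Hypothetical sketch of a pause flag; names are assumptions for illustration.
function createPlayer(onTick) {
  let paused = false;
  let ticks = 0;

  return {
    pause() {
      paused = true;
    },
    play() {
      paused = false;
    },
    isPaused() {
      return paused;
    },
    // Intended to be called by setInterval. Does nothing while paused,
    // so the typed text stays where it was and resumes from the same spot.
    step() {
      if (paused) {
        return false;
      }
      ticks += 1;
      onTick(ticks);
      return true;
    },
  };
}
```

Leaving the interval running and skipping work while paused is one simple design. Clearing the interval on pause and creating a new one on play would also work, at the cost of tracking the timer id.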

After this was figured out, the efficiency of my code was considered.

Because I was calling three separate files to play/display during different lines, I had to have something more efficient than writing out ‘document.getElementById("").src = "";’ for each one.

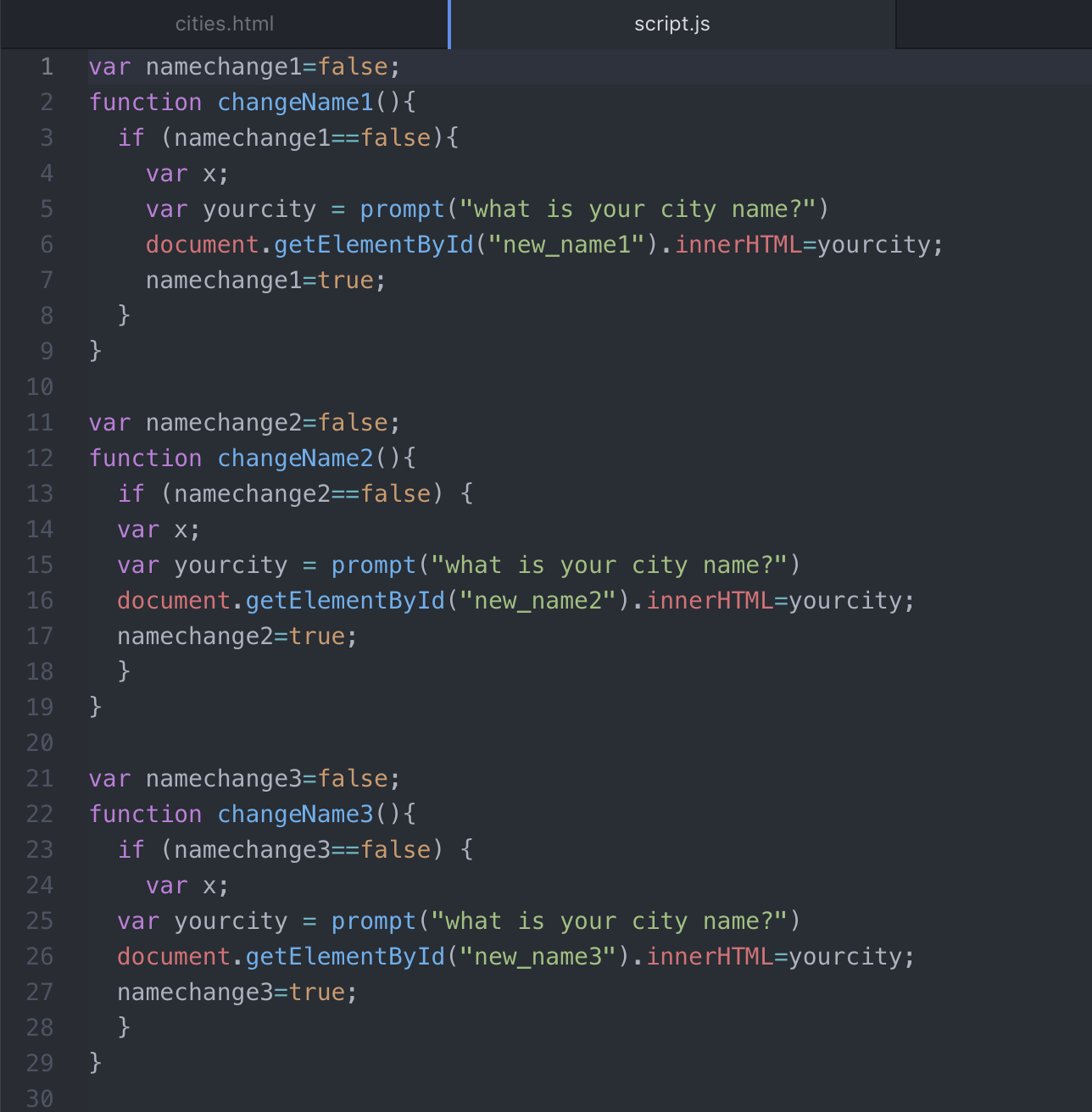

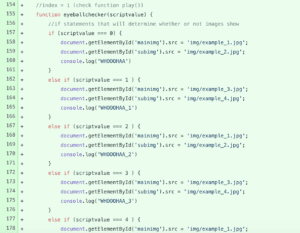

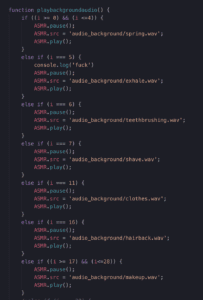

Initially, the “if/else if” method I was using looked something a little like this, for a few hundred lines of code:

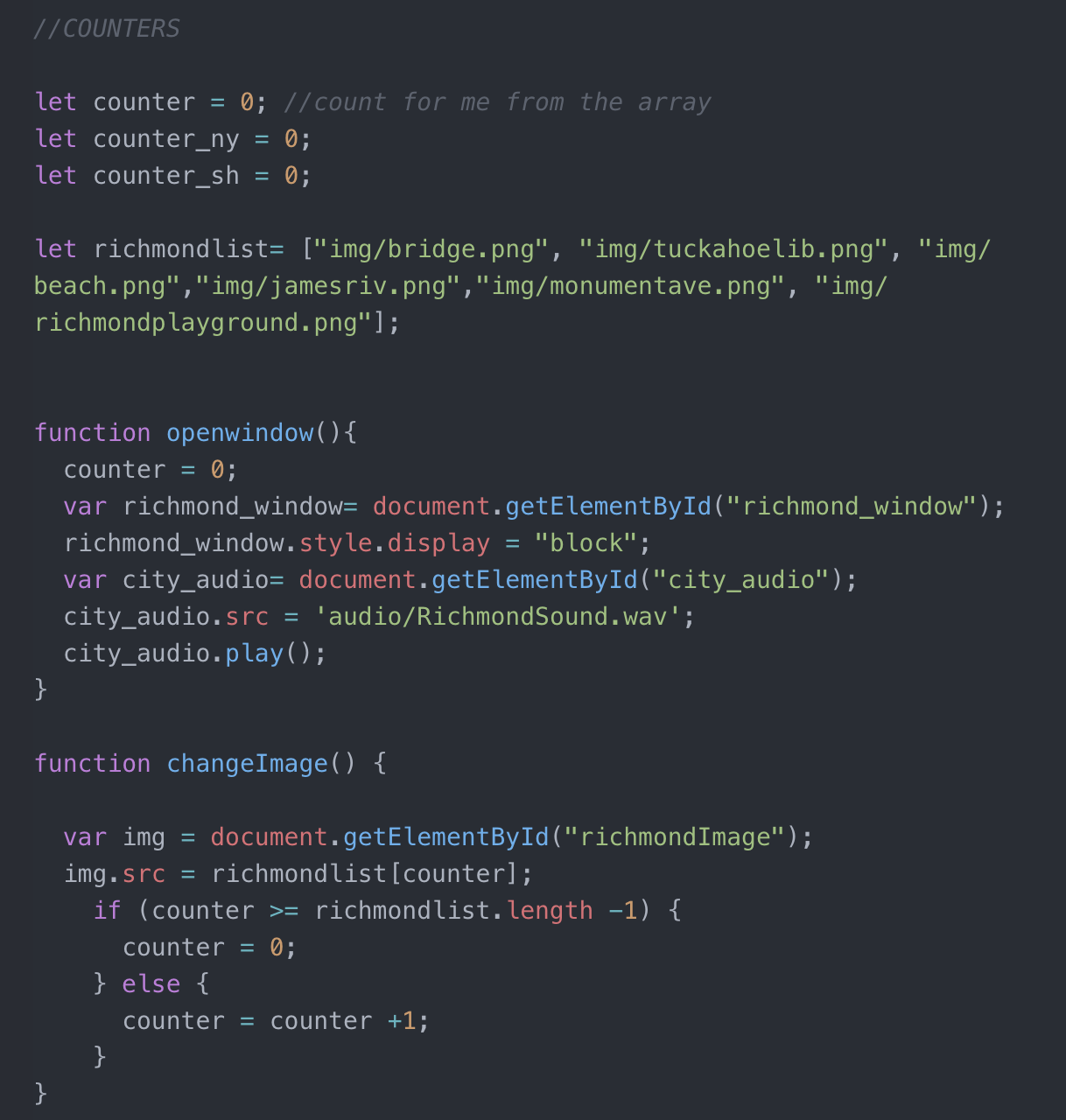

Therefore, I was recommended a ‘dictionary’, which simplified this method a thousandfold.

Essentially, the few hundred if/else if statements turned into about 14 lines of code, which makes it very efficient.

I also learned the use of arrays and how to call arrays within arrays, which was very useful.

The structure of the dictionary is like so:

var: files – mainimages – subimages – narratoraudiofiles – mikiaudiofiles .

Two separate arrays were used to maintain consistency, which are called story and story 2 for the different scripts that they were to type out.

I cannot post an image of the dictionary because of the number of files that it holds: it runs from line 484 to line 1404.

An incredibly vast dictionary indeed, for the 90 audio files and 53 image files that I created.
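Since the dictionary itself is too long to show, here is a tiny sketch of the general technique. The key names follow the structure listed above (`files`, `mainimages`, `subimages`, `narratoraudiofiles`, `mikiaudiofiles`), but every file path, the two-line length, and the `assetsForLine` helper are invented for illustration.

```javascript
// Hypothetical two-line version of the lookup table; all paths are made up.
const files = {
  mainimages: ["images/main0.png", "images/main1.png"],
  subimages: ["images/sub0.png", "images/sub1.png"],
  narratoraudiofiles: ["audio/narrator0.mp3", "audio/narrator1.mp3"],
  mikiaudiofiles: ["audio/miki0.mp3", "audio/miki1.mp3"],
};

// Looks up everything needed for script line `i` in one place,
// instead of one if/else if branch per line.
function assetsForLine(table, i, perspective) {
  const audioKey =
    perspective === "miki" ? "mikiaudiofiles" : "narratoraudiofiles";
  return {
    mainImage: table.mainimages[i],
    subImage: table.subimages[i],
    audio: table[audioKey][i],
  };
}
```

With a table like this, adding a new line to the story means adding entries to the arrays rather than writing another branch.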

I think the majority of my time was spent on making the dictionary, as I needed to hard code in all of the images, audio files, and text and make sure that they were all working. There were often times where I needed to go back and recreate assets because of this, as there was much confusion surrounding it.

Additionally, even after finishing all of that, I found that I was not done with my project.

On Monday, two days before my final presentation, it was suggested that I add some audio besides the voice-overs, because of the awkward silence when no one was talking. While juggling studying for finals and 2 essays that I had to write, I eventually came up with a way to incorporate more audio, which included (at Dave’s suggestion) an edited version of a vinyl record playing, where I pitched down the audio, applied distortion, and lowered the volume.

Source: https://www.youtube.com/watch?v=41lrxMvhBdc&t=67s

Additionally, other audio files were added in order to ‘set the scene’.

Another if/else statement was constructed in order to play these sounds overlaid with the narration, which I found to have positive results on my project.

Additionally, if I may add, adding CSS to this website and changing the values from pixels to vh, vw and various %s took me a day to fix up. Most of the time I was simply scrambling to figure out why many of my divs were displaced and all over the place, but eventually (after like 6 hours of sitting on my ass in the IMA Lab), I was able to figure it out.

Also, managing 150 different files and critiquing them so that they all fit was a pain to figure out. This was even harder than the video project because I didn’t have software to mediate the interaction between files and the play function like in the video project. Therefore, I had to write down everything that happened and when it happened, and which image and audio files it correlated to, on a separate sheet of paper, look at that while constructing the audio files, and then listen to them while they played out on the website, critiquing and adjusting them further. This is what I did for the final 3-4 days before the presentation, while also fixing the various coding issues that I encountered. That was a lot of time spent working alone. This is sort of why I wished that I had a partner, although I know that not many people in the class would actually sit down and do what I did, since people had a lot of other work in other classes that they were probably rushing to do. Such is the undergraduate career. It really be like this sometimes.

Issues:

It was especially hard to create the audio for this project, as it required certain pacing in order to keep in line with the text printing. A user might notice that the audio is not entirely synced, and I would have corrected it if I had more time, but it would have meant more hard coding, adding on to the 1407 lines of code that I already had.

Additionally, I wished that I could have incorporated some assets of p5 to paint a picture of the scenery that I wanted. However, it also would not have aligned with the story, which was the main focus of the project.

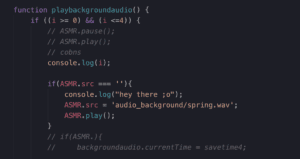

Unfortunately, there is one bug that I was unable to solve, at all.

Within my background audio function, there are several source file changes that occur, and these correlate with various line numbers that increment in separate functions (i.e. the variable i).

Within the if statements that check line ranges, the function sets a new source file but then sets it again on every line within the range, so the file starts over every time a line change occurs. I was unable to pinpoint how this happened, and it is a bug that I am currently unable to fix.

The idea was that, within a range, the source file would not change and the audio would not pause and restart on each line change.
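One possible cause, which cannot be confirmed without seeing the code, is that assigning an audio element's `src` reloads the file even when the new value is the same as the old one. Under that assumption, a guard that remembers the current track and only touches the element when the track actually changes might look like the sketch below. `createBackgroundPlayer`, the range objects, and the file names are all hypothetical.

```javascript
// Hypothetical sketch: only change the source when the wanted track changes.
// `audio` is anything with src, play() and pause(), such as an <audio> element.
// `ranges` is a list like [{ from: 0, to: 4, src: "audio/vinyl.mp3" }].
function createBackgroundPlayer(audio, ranges) {
  let current = null; // track we believe is loaded, or null for silence

  // Call on every line change with the current line number.
  return function update(line) {
    const match = ranges.find((r) => line >= r.from && line <= r.to);
    const wanted = match ? match.src : null;

    if (wanted === current) {
      return false; // same track (or still silent): leave playback alone
    }

    current = wanted;
    if (wanted === null) {
      audio.pause();
    } else {
      audio.src = wanted;
      audio.play();
    }
    return true;
  };
}
```

The current track is kept in its own variable rather than read back from `audio.src`, because browsers report `src` as a full URL, which would not match a relative path in a comparison.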

However, this is a minor consideration that could have been seen as intentional.

Project Reflection:

This project took an excessive amount of work to create and manage, but I felt as though it was worth it in the end. I am glad that this project, unlike other projects before it, is actually completed and I adhered to the time schedule that I created for myself.

If I were to add onto it, I would most likely try to paint and spend more time making a more animated project, as there is not that much movement within the piece which takes away from the ‘slice of life’ style of writing.

Fortunately, I suppose this could turn into a summer project that I would go back to and fix up the assets a bit. Also if I had more time, I would probably include more text files, and add a skip and return button function so that the reader could go back and hear the text and audio play out again.

I did not include this function initially because I thought that it would clutter the space, and I would have to reformat the CSS in order to make sure that these things aligned.

Essentially, I learned a lot and had a lot of practice with JavaScript functionality and with many of the ideas that were covered in Communications Lab, while also applying various skills in other software that I would not have been given the chance to work on otherwise. Even if this project was incredibly taxing, I would say that I enjoyed it. However, I still hunger to make something better. Perhaps the best way to describe how I feel about this project is how I presented it, which was with my feet propped up on the table, confident that it worked, and knowingly displaying my project as it was. I could work on this more if I wanted, but I am perfectly fine with not.

I think, however, if anything else was to be added, I would try to add like a color gradient in the background of the image files, so that there would be some sort of simple aesthetic that backgrounded the images. Perhaps this would come to be like a light transition from like pink to purple to blue to red to green or something, something very subtle that would set the mood of the story to be more emotional perhaps. This was something that I scrapped due to the issue of not having much script to work with (because of asset production) but essentially this would probably be the next thing that I would work on.

It does make me sad though, that I do not find my project very interesting anymore. Maybe I’ve just been looking at it too much. Perhaps I’ll go sleep and forget about the fact that I’ve been working on this so long.

TLDR: I guess a satisfactory personal project that adheres to the project guidelines, but I am incredibly sick of working on this project.