from Joyce Zheng

Title

Wild 失控

Project Description

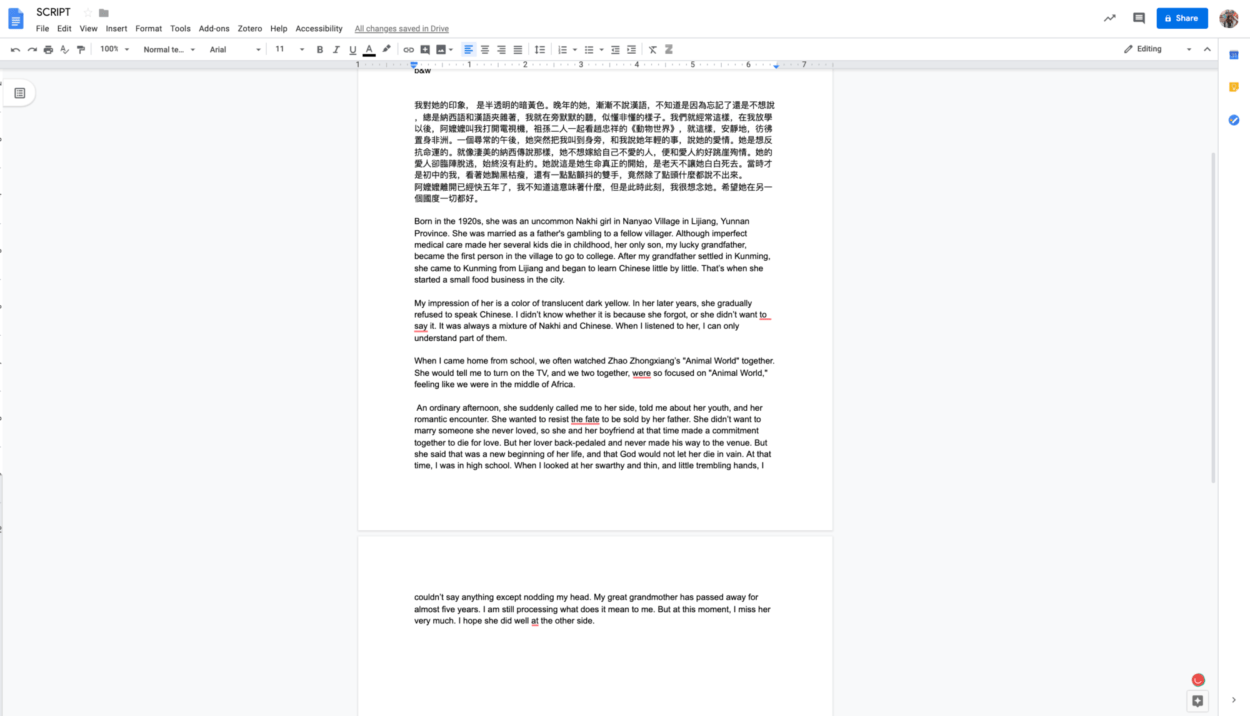

Our project is set in a contemporary world where people rely heavily on modern technology. We moved away from our initial cyberpunk concept, but we still want to express the loss of control over these technologies and the disasters that follow. The Kinect-controlled section, together with the sound of breaking glass over a flickering white screen, works as the transition: before it comes the prosperity of a modern society that relies on technology, and after it comes the crash of that society.

Our performance sits somewhere between live cinema and VJing: we are trying to tell a story rather than just create patterns. The videos we added make our real-time performance feel more like a realistic story than fiction. By connecting reality with fiction, we want to raise people’s awareness of how addicted we are to our phones and how our lives are changed, and even controlled, by modern technologies.

Our first inspirations came from the videos we watched in class. The raining window video gave us our first idea: shooting our own footage in the real world. “Real-time VJ” and “Real-time motion graphics to redefine the aesthetics of juggling” were our biggest inspirations. From them, we decided that the project should include something whose movement on the screen we could control in real time.

Perspective and Context

One of the most important parts of our project is the transition, where the sound fits the visual effect best. Although our music was made separately from our visuals, we tried our best to make them echo each other and to keep the whole performance coherent. Looking at the experience of Belson and Whitney, Belson’s work tries to “break down the boundaries between painting and cinema, fictive space and real space, entertainment and art”. Belson and Jacobs were moving visual music from the screen out into “three-dimensional space”. While combining the audio and the video, we are also trying to interpret music in a more three-dimensional way.

Jordan Belson’s work also gives us a significant framework for our performance. Film historian William Moritz comments that “Belson creates lush vibrant experiences of exquisite color and dynamic abstract phenomena evoking sacred celestial experiences”. His mimesis of celestial bodies inspired us to place something at the center of the screen. It doesn’t have to be anything specific, but it should represent something: perhaps the expansion of technology, or technology itself.

According to Live Cinema by Gabriel Menotti and Live Audiovisual Performance by Ana Carvalho, Chris Allen states that live cinema is “deconstructed, exploded kind of filmmaking that involves narrative and storytelling” (Carvalho 9). Our idea for the live performance corresponds to this concept: we break our idea into a few fragmented scenes and jump from one to another. Moreover, going back to Otolab’s Punto Zero, we can see that they set up strong interactions between their artwork and the audience. Initially, we wanted to invite an audience member to come and interact with the Kinect, and to see how the visual effects changed with that person’s movement. Due to practical limitations, Tina performed that part herself, but if possible we would have an actual audience member do it.

Development & Technical Implementation

Gist: https://gist.github.com/Joyceyyzheng/b37b51ccdd9981e507905ddcbbda988d

For our project, Tina was responsible for the Processing sketch, including the flying green numbers and how they interact with the Kinect, and therefore with her. She also worked on connecting the Max patch with Processing. I was responsible for generating the audio, building the Max patch, and processing the videos we shot together.
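The following is not the code from the gist. It is a minimal Python sketch, under assumed names, of one way falling digits could react to a tracked hand position such as the one a Kinect reports. `Digit`, `step`, `radius`, and `push` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Digit:
    """One falling number on the screen (hypothetical structure)."""
    x: float
    y: float
    speed: float
    char: str


def step(digits, hand, height, radius=80.0, push=6.0):
    """Advance one frame.

    Every digit falls by its own speed and wraps to the top when it
    leaves the screen. Digits closer than `radius` to the tracked hand
    are pushed sideways, away from the hand.
    """
    hand_x, hand_y = hand
    for d in digits:
        d.y += d.speed
        if d.y > height:
            d.y = 0.0  # wrap back to the top of the screen

        dx = d.x - hand_x
        dy = d.y - hand_y
        distance = (dx * dx + dy * dy) ** 0.5
        if distance < radius:
            # Push horizontally away from the hand position.
            d.x += push if dx >= 0 else -push
```

In a real sketch, `hand` would come from the depth camera each frame, and each digit would be drawn at its `(x, y)` position after calling `step`.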

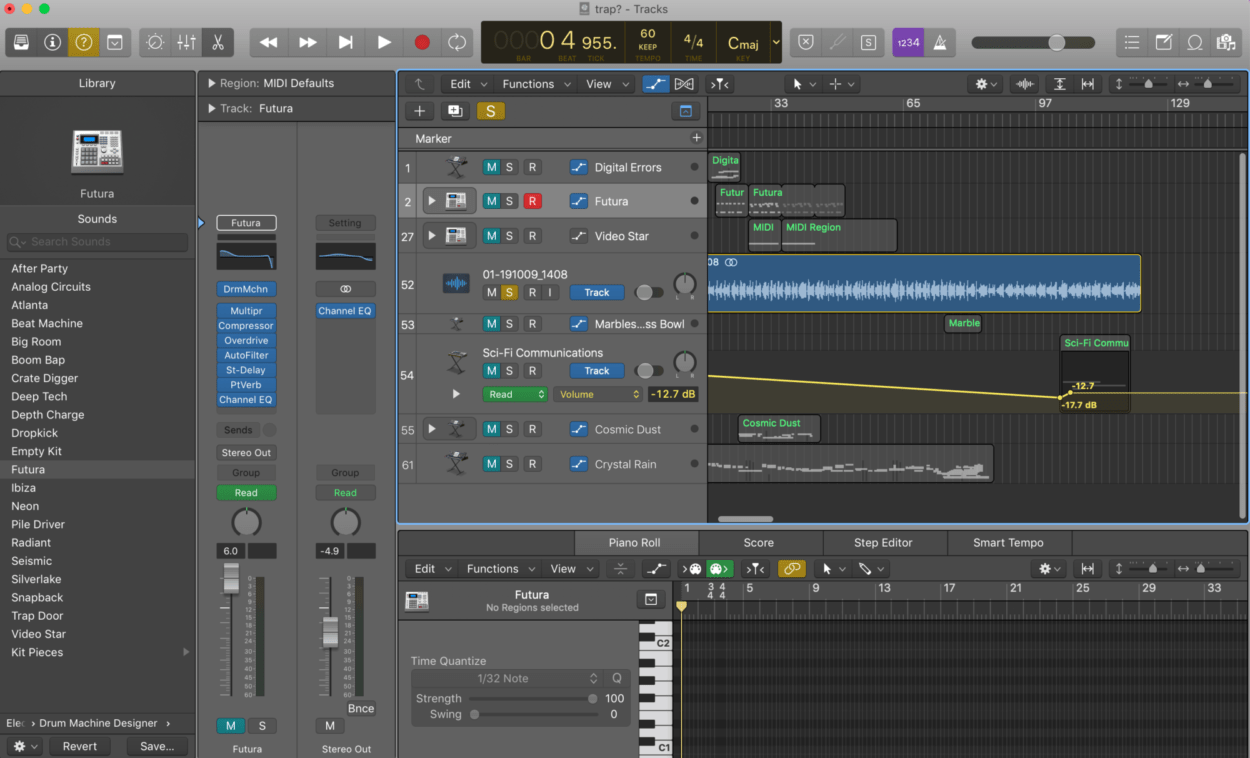

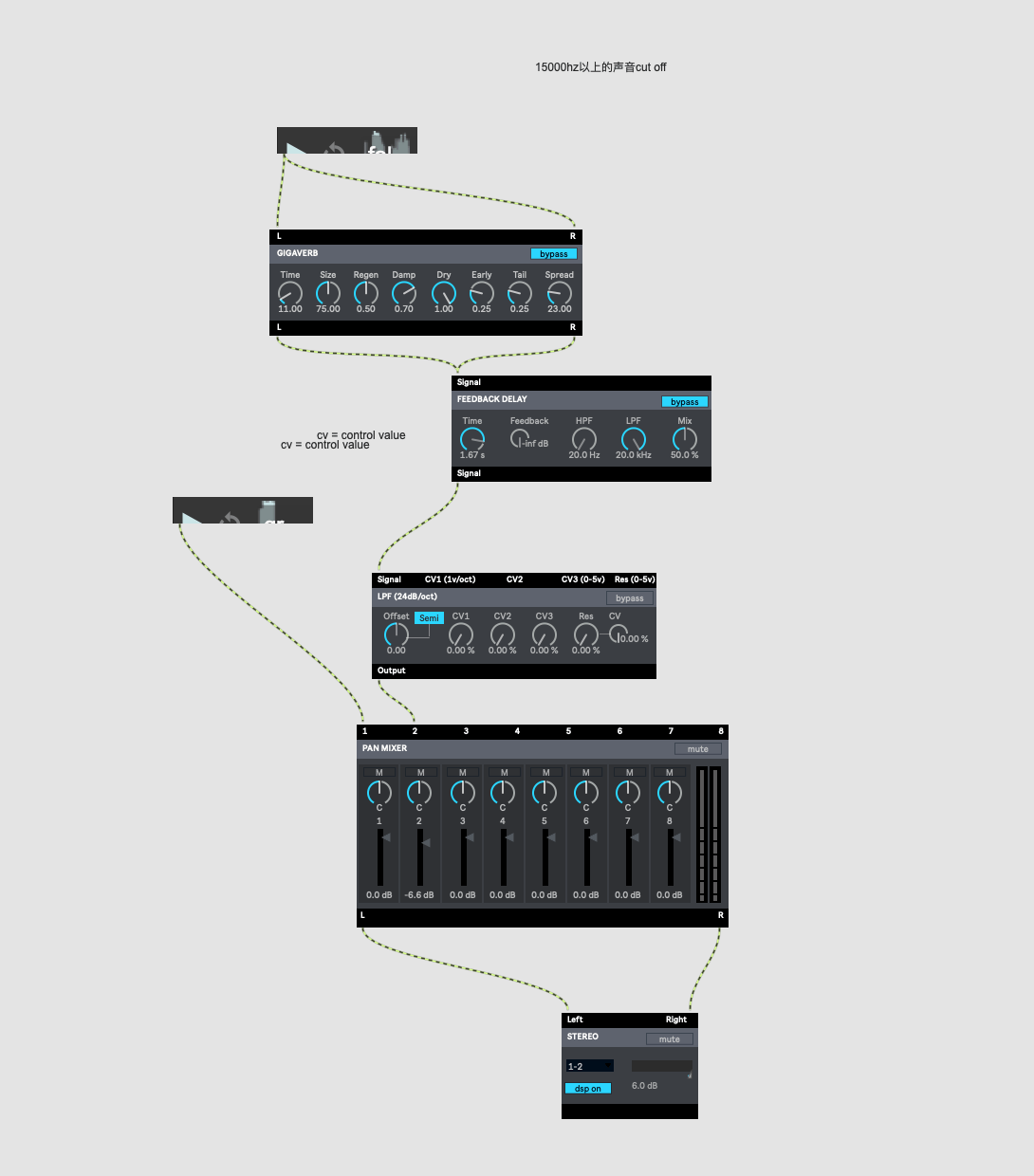

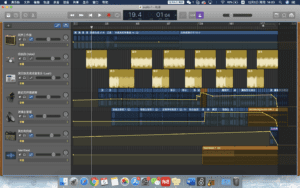

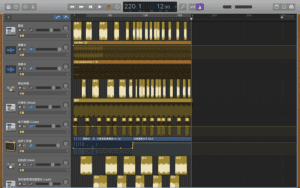

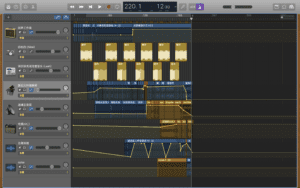

As I mentioned previously, we developed our audio and video separately, which is something I think we can improve a lot. I mainly developed the audio in GarageBand, mixing different tracks that contain audio samples from freesound.org along with various beats and bass lines. I tried to use an app called Breaking Machine to generate sound, but later found that, given the time we had, GarageBand would be the best choice. I listened to a few real-time performances (e.g. 1, 2) and some cyberpunk-style music and decided on the style I wanted. My first version of the audio looked like this:

Tina’s and our friend’s reaction to this was that it was too plain: it was not strong, and so it did not express enough of our emotions. Eric suggested that we add some more intense sounds as well, like the sound of breaking glass. With those suggestions and some later small adjustments, I selected audio samples that I thought would suit the piece and came up with the final audio. I decided to use a lot of choirs, since they sound really powerful, and different types of bass intersecting with each other on the same track. The process of creating the video was likewise generally about making it stronger and more intense. At first, we wanted to put the audio into Max so that we could control it together with the video. Later we found that inside Max we could not see the track’s progress while it played, and it was not feasible for us to control two patches at the same time (one would not have been enough), so we let the audio stay in GarageBand.

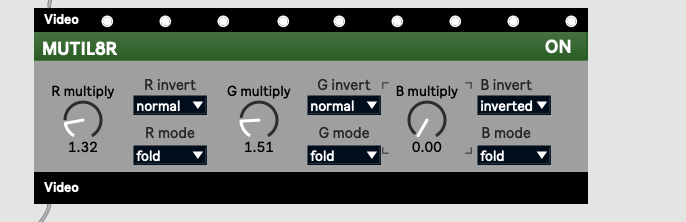

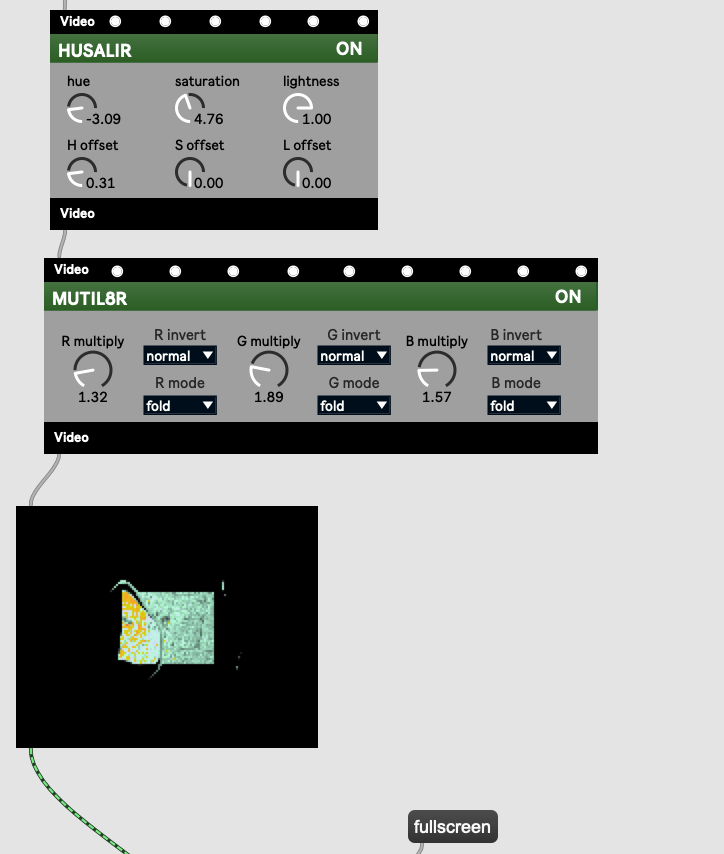

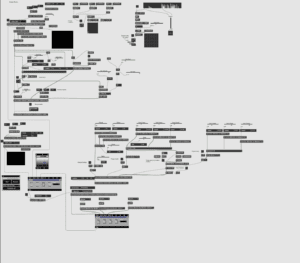

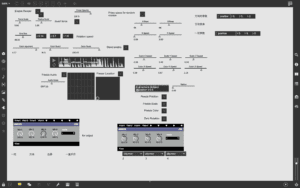

For the Max patch, we turned to Jitter (jit.) objects instead of filters like Easemapper. I searched for a few tutorials online and built the patch. The tutorials were really helpful, and the final video result produced by Max was something we wanted to use. I combined the two objects that Max creates, and with Eric’s help they could be shown on the same screen simultaneously, which contributes to our final output. After the combination, we started to add videos and put all the outputs on one screen. One problem we ran into was that we tried to use the AVPlayer to play more than 8 videos, which made our computer crash. Following Eric’s suggestion, we used a umenu instead, which was much better and allowed us to run the project smoothly.
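To picture why switching clips through a menu can be lighter than keeping many players open, here is a minimal Python sketch, not the Max patch itself. It assumes the menu feeds a single shared player, which is a guess at the setup rather than something documented here. `ClipSwitcher` and the clip file names are hypothetical.

```python
class ClipSwitcher:
    """Keeps at most one clip open, chosen by its menu index."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.open_clips = []  # clips currently held open (never more than one)

    def select(self, index):
        """Release the previous clip, then open the one at `index`."""
        if not 0 <= index < len(self.paths):
            raise IndexError(f"no clip at menu index {index}")
        self.open_clips.clear()
        self.open_clips.append(self.paths[index])
        return self.paths[index]


# Hypothetical file names: ten clips, but only one is open at any time.
switcher = ClipSwitcher([f"clip{i}.mov" for i in range(10)])
for i in range(10):
    switcher.select(i)
```

However many clips are listed, the number held open stays at one, which is the property that matters when more than 8 simultaneous players is too much for the machine.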

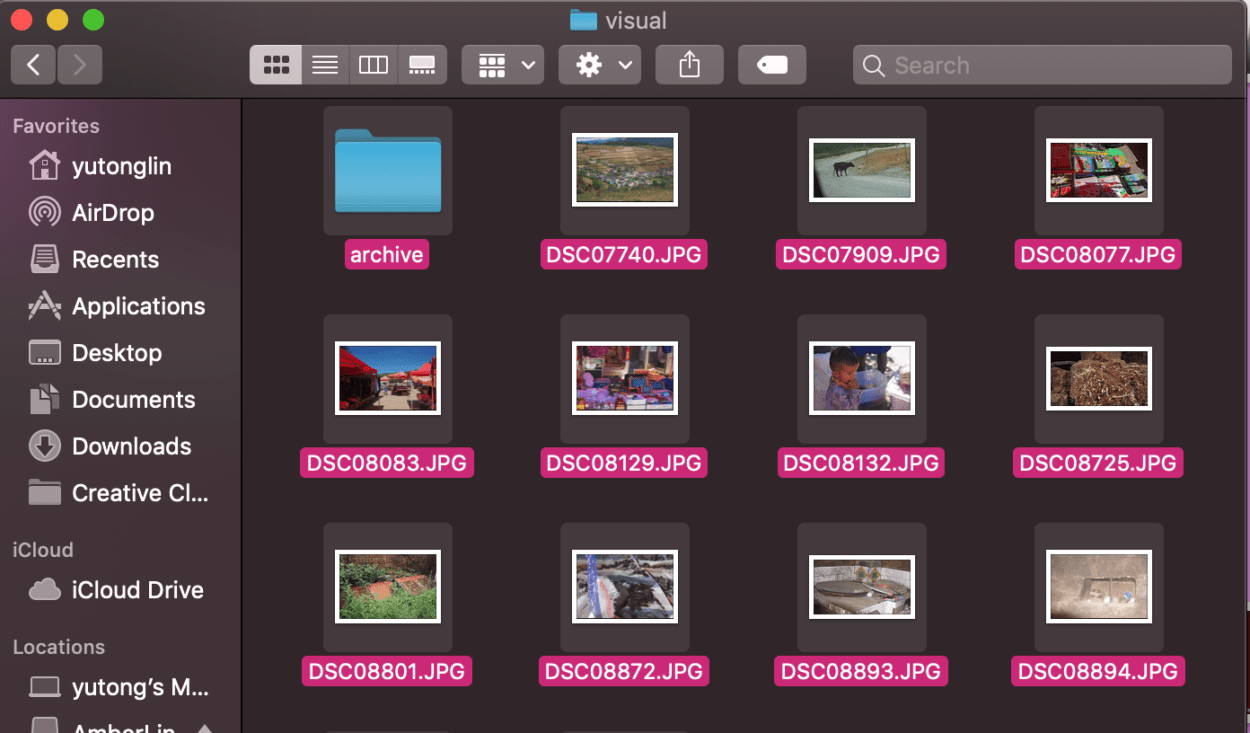

We shot the videos together, and I processed them with different filters. Here are screenshots of the videos we used. All of them are our own original footage. Through the videos of the city, hands, emails, and WeChat messages, we mainly want to express the prosperity of the city and how heavily people rely on technology; through the other videos, we show how the world crashes down after the explosion. In this sense, our Max patch works more like a video generator: it generates all the input sources, including the Kinect input, the videos, and jit.world.

Performance

The performance at Elevator was an amazing experience. We were nervous, but in general everything went well. During the show, I was mainly responsible for controlling the videos and audio, while Tina was responsible for controlling the cube and the constantly changing 3D object. While she was performing with the Kinect, I controlled the whole patch. There were a few problems during the performance. The first was that the sound was too loud, especially in the second half, and I could feel the club shaking even on the stage. The second was a series of mistakes caused by our setup: the Kinect doesn’t work unless it is plugged directly into the computer, and Tina’s computer had only two USB-C ports, so we needed to unplug the MIDIMIX, plug in the Kinect, and start Processing during the performance. After the part where Tina interacts with the Kinect ended, we had to plug the MIDIMIX back in. Though we rehearsed several times, I still forgot to reload the videos, so while the white screen was flickering you could still see the static video from Processing, which looked rather bad. Something surprising also happened: at the end, our cubes suddenly became a single cube (we still don’t know why). It looked pretty good and echoed the concept of the “core” of technology as well.

When Tina was performing in front of the Kinect, I was hiding under the desk so that I would not be captured by the camera. As a result, Tina could not hear me when I reminded her to lift her arms up so that the audience would know that she was the one performing in real time. Sadly, she didn’t understand me, and I think this part could have gone much better. What’s more, I think the effect would be much better if we combined the audio into the Max patch. But our performance has to line up precisely with the breaking point in the music, so we left the audio in the GarageBand interface and focused mainly on the real-time video during the performance.

Here is the video of the selected performance.

Conclusion

To be honest, my concept of RAPS became clearer after watching everyone’s performances. If I could do this again, I think I would not use any video elements, and would instead explore Max further, or the combination of other apps with Max. The initial idea of the project came from an idea we shared: something mixing reality and fiction. From research to implementation, we worked separately on different parts and combined them at the end, so the performance might not be fully coherent either. We wanted a human on the screen to be able to control the 3D object, or at least something not limited to the numbers, but Tina said that was only possible on Windows, so we had to give it up.

However, we did try hard with limited time and skills. I want to say thank you to Tina, who worked with an app that we had never learned before and created one of the best moments of our performance, and to Eric for his help throughout the whole project. We explored another world in Max, and learned how to shoot video so that it feels more like “RAPS” and how to modify the audio so that it is no longer just pieces of different music or ordinary songs.

For future improvements, I would work more on the audio and the Max patch. I am not sure whether it was influenced by the environment, but when I talked with a woman from another university, she thought that most of our performances and our music were not like club music: people could not dance or move their bodies to it, and she felt this was because “it is still a final presentation for a class”. For this reason, I would generate more intense visuals, such as granular video, through Max, and make more strongly rhythmic music with better beats and audio samples.

Sources:

https://freesound.org/people/julius_galla/sounds/206176/

https://freesound.org/people/tosha73/sounds/495302/

https://freesound.org/people/InspectorJ/sounds/398159/

https://freesound.org/people/caboose3146/sounds/345010/

https://freesound.org/people/knufds/sounds/345950/

https://freesound.org/people/skyklan47/sounds/193475/

https://freesound.org/people/waveplay_old/sounds/344612/

https://freesound.org/people/wikbeats/sounds/211869/

https://freesound.org/people/family1st/sounds/44782/

https://freesound.org/people/InspectorJ/sounds/344265/

https://freesound.org/people/tullio/sounds/332486/

https://freesound.org/people/pcruzn/sounds/205814/

https://freesound.org/people/InspectorJ/sounds/415873/

https://freesound.org/people/InspectorJ/sounds/415924/

https://freesound.org/people/OGsoundFX/sounds/423120/

https://freesound.org/people/reznik_Krkovicka/sounds/320789/

https://freesound.org/people/davidthomascairns/sounds/343532/

https://freesound.org/people/deleted_user_3544904/sounds/192468/

https://www.youtube.com/watch?v=Klh9Hw-rJ1M&list=PLD45EDA6F67827497&index=83