For my midterm project, I decided to create an interactive game focused on sound and pitch recognition, using the ml5 CREPE pitch-detection model.

Inspiration:

There are already several games on the market that use audio input instead of the usual mouse/keyboard interaction.

Don’t Stop Eighth Note

Uses the amplitude of the sound to control a platformer game.

Yousician/Rocksmith

Uses pitch recognition to teach players a musical instrument.

Twitch Sings

Uses pitch recognition to score singing in real time for streaming.

Application of this Project/Why I chose this Topic:

Perfect Pitch/Ear Training

Interactive games are an effective way to train skills while having fun: they keep the user engaged and give real-time feedback on performance. The visual side of the game also gives the player more information and helps with the learning process.

Entertainment

A game that does not rely on traditional mouse/keyboard interaction can be more fun to play, because those interactions feel unnatural and take away from the experience. Using an actual instrument as the controller, or even your voice, removes the barrier of clumsy controls and gets the player into the mood of the game.

I decided to give this game a slightly spooky feel because it fits well with the discord-versus-harmony element of music. An intense mood also makes the overall experience more immersive, and Halloween was coming up around the project's deadline.

Final Product Demo Video:

Development Process:

Setting up the pitch recognition system:

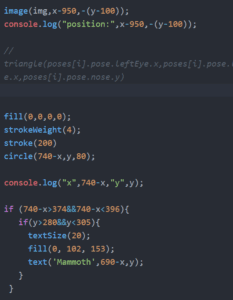

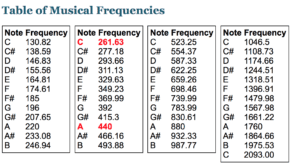

The first part of the process is setting up the pitch recognition system, starting with importing the ml5 CREPE model. Once CREPE is set up, it returns the frequency of the microphone input quite accurately. Since it only returns a frequency value, I have to convert that frequency into a note. I did this with the modulus operator (%): I take the frequency modulo each note's base frequency, and if the remainder is very small, the frequency is very close to a multiple of that base frequency.

For example, middle C (C4) is 261.63 Hz, and the C one octave higher is 261.63 × 2 = 523.26 Hz; every further octave doubles the frequency again. Using the modulus, we can find the remainder of the division and decide whether the frequency corresponds to a given note.
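Here is a minimal TypeScript sketch of the modulus idea (TypeScript is close to the JavaScript that ml5 runs in). The note table, which lists only the octave starting at middle C, and the names `matchNote` and `distanceToMultiple` are hypothetical, so this is not the project's actual code. Two details are worth noting. First, a frequency just below a multiple leaves a remainder close to the base frequency rather than close to 0 (436 % 440 = 436), so the sketch measures distance as the smaller of r and base - r. Second, every whole multiple matches, not only octaves: 3 × 261.63 = 784.89 Hz is within 1 Hz of G5 (783.99 Hz), yet it still matches C.

```typescript
// Hypothetical note table: octave-4 frequencies in Hz (equal temperament,
// A4 = 440 Hz). The project's own table is not shown in this post.
const NOTE_TABLE: Array<[string, number]> = [
  ["C", 261.63], ["D", 293.66], ["E", 329.63], ["F", 349.23],
  ["G", 392.0], ["A", 440.0], ["B", 493.88],
];

// Distance in Hz from `freq` to the nearest whole multiple of `base`.
// A remainder near 0 means just above a multiple; a remainder near `base`
// means just below the next one, so both sides are checked.
function distanceToMultiple(freq: number, base: number): number {
  const r = freq % base;
  return Math.min(r, base - r);
}

// Returns the note with a multiple closest to `freq`, or null if no note
// is within `toleranceHz`.
function matchNote(freq: number, toleranceHz: number): string | null {
  let best: string | null = null;
  let bestDist = Infinity;
  for (let i = 0; i < NOTE_TABLE.length; i++) {
    const [noteName, base] = NOTE_TABLE[i];
    // Skip notes whose base is more than the tolerance above `freq`;
    // otherwise 0 would count as a "multiple" of every note.
    if (freq < base - toleranceHz) continue;
    const dist = distanceToMultiple(freq, base);
    if (dist <= toleranceHz && dist < bestDist) {
      best = noteName;
      bestDist = dist;
    }
  }
  return best;
}

console.log(matchNote(523.26, 10)); // -> "C" (C5 = 2 x 261.63)
console.log(matchNote(436, 10));    // -> "A" (4 Hz below 440)
console.log(matchNote(784.89, 10)); // -> "C", although 784.89 Hz is about G5
```

Because of the skip, notes below the table's octave (for example C3 at 130.81 Hz) are not recognized at all. Their frequency is smaller than every base value in the table.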

Filtering the ML output to increase accuracy and stability:

Pitch detection is complicated by harmonic overtones. When you play one note, higher overtones that line up with other notes are also present in the sound. The pitch detection model sometimes gets confused by them, so I set up filters to make the recognition more reliable.

First Filter: accepting a frequency range of ±10 Hz

First, I added a filter that only accepts a pitch within 10 Hz of the expected note. For example, if frequency % noteExpectedFrequency <= 10, the frequency is accepted as that note. This makes detection in the game more reliable, though it is less forgiving of out-of-tune instruments or singing.
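This is a sketch of a variant of the ±10 Hz filter. It checks the distance to the nearest octave copy of the expected note (expected × 2^k) rather than to any multiple, so 784.89 Hz is no longer accepted as C. The function names and the cents helper are hypothetical additions for illustration. The cents helper shows why a fixed Hz window behaves differently across the range: 10 Hz is roughly 65 cents (about two-thirds of a semitone) above C4 at 261.63 Hz, but only about 16 cents above C6 at 1046.5 Hz. The filter is therefore much stricter on high notes than on low ones.

```typescript
// Hypothetical variant of the +-10 Hz filter: accept `freq` only if it is
// within `toleranceHz` of an octave copy (expected * 2^k) of the expected note.
function acceptsAsNote(freq: number, expected: number, toleranceHz: number = 10): boolean {
  if (freq <= 0 || expected <= 0) return false;
  // Nearest octave index k on a log2 scale (Math.log(x) / Math.LN2 = log2 x).
  const k = Math.round(Math.log(freq / expected) / Math.LN2);
  const target = expected * Math.pow(2, k);
  return Math.abs(freq - target) <= toleranceHz;
}

// How far `freq` is from `target`, in cents (100 cents = 1 semitone).
function centsOff(freq: number, target: number): number {
  return (1200 * Math.log(freq / target)) / Math.LN2;
}

console.log(acceptsAsNote(523.26, 261.63)); // true: C5 is an octave of C4
console.log(acceptsAsNote(784.89, 261.63)); // false: 3 x C4 is not an octave
console.log(centsOff(271.63, 261.63).toFixed(1)); // 10 Hz above C4, in cents
```

Expressing the tolerance in cents instead of Hz (for example, accepting anything within ±50 cents) would make the window the same musical width in every octave.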

Second Filter: only registering notes that persist for a certain number of frames

The second safeguard is to register a note as played only when the player holds it for more than a few frames. This way, brief flickers in the detected frequency do not disrupt the sequence of detected notes.
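Below is a minimal sketch of this frame-count filter, written as a small TypeScript class. Several parts are assumptions for illustration: the class name, the `minFrames` parameter, and the rule that a few silent frames are needed before the same note can be registered again. The post does not say how many frames the game actually uses.

```typescript
// Hypothetical frame-count filter: a note counts as "played" only after the
// same detected note has been seen for at least `minFrames` frames in a row.
class NoteDebouncer {
  private readonly minFrames: number;
  private candidate: string | null = null;  // note seen in the current run
  private runLength = 0;                    // consecutive frames of `candidate`
  private registered: string | null = null; // last note reported as played

  constructor(minFrames: number) {
    this.minFrames = minFrames;
  }

  // Call once per frame with that frame's detected note (null = no note).
  // Returns the note on the frame it becomes stable, otherwise null.
  update(detected: string | null): string | null {
    if (detected === this.candidate) {
      this.runLength++;
    } else {
      this.candidate = detected;
      this.runLength = 1;
    }
    if (this.candidate === null) {
      // Sustained silence lets the same note be registered again later;
      // a one-frame dropout does not.
      if (this.runLength >= this.minFrames) this.registered = null;
      return null;
    }
    if (this.runLength >= this.minFrames && this.candidate !== this.registered) {
      this.registered = this.candidate;
      return this.candidate;
    }
    return null;
  }
}
```

In a p5.js sketch, `update()` would be called once per `draw()` frame with whatever note the pitch filters produced. A one-frame "D" inside a run of "C" frames then only restarts the count and never reaches the game logic.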

Third Filter: triggering only when the detected amplitude exceeds a threshold

The volume level can be measured without the machine learning library and used alongside pitch detection. By setting a minimum volume threshold for registering sound, we can tell whether a sound was meant to be played or is just background noise picked up from the surroundings.
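Here is a sketch of the amplitude gate. In p5.js the level is typically read from a `p5.AudioIn` with `getLevel()`. To keep the example self-contained, the sketch instead computes an RMS level directly from a buffer of samples. The threshold value and the function names are hypothetical; a real threshold has to be tuned for the microphone and room.

```typescript
// Root-mean-square level of one buffer of audio samples in [-1, 1].
function rmsLevel(samples: number[]): number {
  if (samples.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    sumSquares += samples[i] * samples[i];
  }
  return Math.sqrt(sumSquares / samples.length);
}

// Pass the detected note through only when the input is loud enough;
// quieter input is treated as background noise.
function gatePitch(level: number, threshold: number, detectedNote: string | null): string | null {
  return level >= threshold ? detectedNote : null;
}

// Hypothetical threshold; tune it for the actual microphone and room.
const LEVEL_THRESHOLD = 0.05;

const quiet = [0.01, -0.02, 0.015, -0.01];
const loud = [0.4, -0.5, 0.45, -0.3];
console.log(gatePitch(rmsLevel(quiet), LEVEL_THRESHOLD, "C")); // null
console.log(gatePitch(rmsLevel(loud), LEVEL_THRESHOLD, "C"));  // C
```

Gating before the frame-count filter means room noise never even starts a candidate run.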

Sound Designing/Ambient Sound/Background Music:

When creating the sounds for this project, I paid a lot of attention to the feeling of the game. I named the project “Discord”, and I really wanted to highlight the emotions that sound can bring to it.

Lead melody sound:

I created this flute-like sound by layering three different flute-like sounds at different octaves, then processed them with an equalizer and reverb to shape the texture of the sound.

Ambient sound and sound effects:

I used an eerie ambient sound in the background to add to the mood of the game. I also included several sound effects that trigger on certain in-game actions. Breathing and percussive sounds get louder as the player's HP goes down, which adds tension and pressures the player to play correctly.
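One simple way to make the breathing and percussion louder as HP drops is a linear map from missing HP to volume. The gain range (0.1 to 1.0) and the function name are hypothetical. In p5.sound, the result could be passed to a sound file's `setVolume()`.

```typescript
// Hypothetical mapping from player HP to the volume of the tension sounds:
// full HP -> minGain, zero HP -> maxGain, linear in between.
function tensionGain(hp: number, maxHp: number, minGain: number = 0.1, maxGain: number = 1.0): number {
  const clamped = Math.max(0, Math.min(hp, maxHp));
  const missing = 1 - clamped / maxHp; // 0 at full health, 1 at zero HP
  return minGain + missing * (maxGain - minGain);
}

console.log(tensionGain(100, 100).toFixed(2)); // 0.10
console.log(tensionGain(50, 100).toFixed(2));  // 0.55
console.log(tensionGain(0, 100).toFixed(2));   // 1.00
```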

I also experimented a lot with harmony and discord: the winning music and the losing music share the same melody, but one is played with harmony and the other with discord.

Harmony:

Discord:

Game Structure:

I designed the game with multiple levels that scale in difficulty. An HP system makes the player nervous about playing wrong notes, and clearing a level restores a small amount of health to keep the gameplay smoother and more sustainable.
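Here is a minimal sketch of the HP rules described above. It assumes a hypothetical `PlayerHealth` class and placeholder damage and heal amounts, since the post does not give the actual numbers.

```typescript
// Hypothetical HP model: wrong notes cost HP, clearing a level restores a
// little HP (never above the maximum). Damage/heal amounts are placeholders.
class PlayerHealth {
  readonly maxHp: number;
  hp: number;

  constructor(maxHp: number) {
    this.maxHp = maxHp;
    this.hp = maxHp;
  }

  wrongNote(damage: number): void {
    this.hp = Math.max(0, this.hp - damage);
  }

  clearLevel(heal: number): void {
    this.hp = Math.min(this.maxHp, this.hp + heal);
  }

  isDefeated(): boolean {
    return this.hp <= 0;
  }
}

const health = new PlayerHealth(100);
health.wrongNote(30);
health.wrongNote(30);  // hp = 40
health.clearLevel(15); // hp = 55
console.log(health.hp, health.isDefeated()); // 55 false
```

Clamping in both directions keeps the heal from pushing HP past the maximum and prevents HP from going negative, so the lose check stays a simple `hp <= 0`.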

I also implemented a menu page and win/lose pages, each with its own background music that fits the overall theme of the project.
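The menu and win/lose pages can be modelled as a small state machine in which each screen has its own background track. This sketch is an assumption about structure, not the project's code. The screen names, event names, and track names are all placeholders.

```typescript
// Hypothetical screen flow: menu -> playing -> win/lose -> menu.
type GameScreen = "menu" | "playing" | "win" | "lose";
type GameEvent = "start" | "hpDepleted" | "lastLevelCleared" | "returnToMenu";

// Placeholder track names; each screen gets its own background music.
const SCREEN_MUSIC: Record<GameScreen, string> = {
  menu: "menu-theme",
  playing: "ambient",
  win: "win-theme",
  lose: "lose-theme",
};

function transition(screen: GameScreen, event: GameEvent): GameScreen {
  if (screen === "menu" && event === "start") return "playing";
  if (screen === "playing" && event === "hpDepleted") return "lose";
  if (screen === "playing" && event === "lastLevelCleared") return "win";
  if ((screen === "win" || screen === "lose") && event === "returnToMenu") return "menu";
  return screen; // events that don't apply to the current screen are ignored
}

let current: GameScreen = "menu";
const events: GameEvent[] = ["start", "hpDepleted", "returnToMenu"];
for (const e of events) {
  current = transition(current, e);
  console.log(current, SCREEN_MUSIC[current]);
}
```

Switching the background track then only has to happen when `transition` returns a different screen than before.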

Attribution:

Background music:

Menu – Hellgirl Futakomori OST – Shoujo no Uta

Win/lose page – Jinkan Jigoku – Anti General