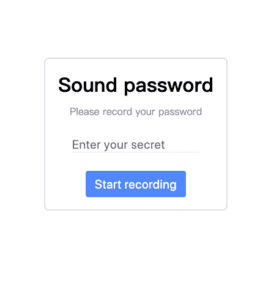

Task:

Create an artistic or practical interface that utilizes KNN Classification. Be as experimental as possible with the real-time training feature. Avoid having random inputs trigger random effects.

Final Product:

Process:

I started by experimenting with the in-class KNN code. I realized that, aside from the cool effect it creates, it can be a great tool for recognizing objects.

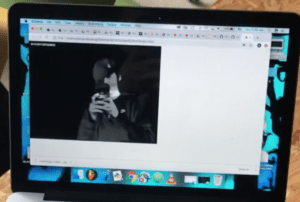

By taking a snapshot of a still background, we can trace any moving figure, because its pixels differ from the RGB values stored for the same pixels in the snapshot.

A clear human figure can be seen as I walk around the canvas.
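The per-pixel comparison described above can be sketched roughly as follows. This is a minimal illustration in plain Python, not the in-class code: the function name `pixel_difference`, the flat list-of-tuples frame layout, and the choice of averaging the three channel differences are all assumptions made for the example.

```python
def pixel_difference(background, frame):
    """Return one difference value (0-255) per pixel.

    Both inputs are flat lists of (r, g, b) tuples of equal length.
    (Hypothetical layout chosen for this sketch.)
    """
    if len(background) != len(frame):
        raise ValueError("frames must have the same number of pixels")
    diffs = []
    for (br, bg, bb), (fr, fg, fb) in zip(background, frame):
        # Mean absolute difference across the three color channels.
        diffs.append((abs(fr - br) + abs(fg - bg) + abs(fb - bb)) // 3)
    return diffs


# Snapshot of the still background, and a later frame where
# the second pixel is covered by a moving figure.
background = [(10, 10, 10), (200, 200, 200)]
frame = [(10, 10, 10), (20, 50, 80)]
print(pixel_difference(background, frame))  # [0, 150]
```

Pixels that still match the snapshot come out near 0, while pixels covered by the moving figure come out high.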

I decided that this would be a great application for generating a green screen effect.

Improvements from the in-class code:

I added a training phase in place of the snapshot, which captures only one frame. The code now reads real-time footage until the average difference falls below a threshold value, meaning most pixel values are holding still and constant. At that point training finishes and the trained model is stored until the user presses the space bar to train again.
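One way such a training loop could look is sketched below. It is an assumption-laden illustration rather than the project's actual code: here the "average difference" is taken between each incoming frame and the previous one, frames are flat lists of channel values, and the name `train_background` and the default threshold of `2.0` are made up for the example.

```python
def train_background(frames, threshold=2.0):
    """Consume frames until the scene stops changing.

    frames: iterable of non-empty flat lists of channel values (0-255).
    Returns (model, frames_used); model is None if the scene never settled.
    """
    model = None
    used = 0
    for frame in frames:
        used += 1
        if model is not None:
            # Average absolute difference against the previous frame.
            avg_diff = sum(abs(a - b) for a, b in zip(frame, model)) / len(frame)
            if avg_diff < threshold:
                # The scene is still: keep this frame as the trained model.
                return list(frame), used
        # Still moving (or first frame): remember it and keep watching.
        model = list(frame)
    return None, used


footage = [
    [0, 0, 0, 0],          # someone is still walking out of view
    [100, 100, 100, 100],  # large change, keep training
    [101, 100, 100, 100],  # almost identical, training finishes here
]
model, used = train_background(footage)
print(model, used)  # [101, 100, 100, 100] 3
```

Pressing the space bar to retrain would correspond to calling the function again on fresh footage.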

Then I filtered the difference values, pulling values close to 0 down to 0 and high values up to 255.
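A rough sketch of this filtering step is shown below. The cutoffs `low=30` and `high=100` are hypothetical, as is the choice to leave in-between values unchanged; the write-up does not state the actual numbers used.

```python
def snap_difference(diff, low=30, high=100):
    """Push a 0-255 difference value toward the extremes.

    low and high are assumed cutoffs chosen for illustration.
    """
    if diff <= low:
        return 0      # small differences are treated as background noise
    if diff >= high:
        return 255    # large differences are treated as a definite change
    return diff       # in-between values are left as they are


print([snap_difference(d) for d in [5, 60, 180]])  # [0, 60, 255]
```

Snapping to the extremes removes flicker from camera noise and gives the moving figure a cleaner edge.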

Using this filtered difference value, I adjusted the opacity of the output pixels. Pixels that are very different from the trained model become completely transparent, revealing the real-time camera footage underneath, while pixels that match the trained model are changed to green.
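The opacity mapping can be illustrated with a small blend function. This is only a sketch under assumptions: the green overlay is taken to be pure `(0, 255, 0)`, its opacity is assumed to be `255 - difference`, and `composite_pixel` is a name invented for the example.

```python
GREEN = (0, 255, 0)  # assumed overlay color


def composite_pixel(camera_rgb, filtered_diff):
    """Blend a green overlay over one camera pixel.

    filtered_diff is the 0-255 difference from the trained model:
    0 gives solid green, 255 lets the camera pixel show through.
    """
    alpha = (255 - filtered_diff) / 255  # overlay opacity, 0.0 to 1.0
    return tuple(
        round(alpha * g + (1 - alpha) * c) for g, c in zip(GREEN, camera_rgb)
    )


print(composite_pixel((120, 80, 40), 0))    # (0, 255, 0): matches the model
print(composite_pixel((120, 80, 40), 255))  # (120, 80, 40): moving figure
```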

The one disadvantage of this approach is that if the color of the moving object is close to that of the still background, extra green will appear on the moving object.

If this program is run in a properly lit room with clear differences in color between objects, it will work great.