Overview

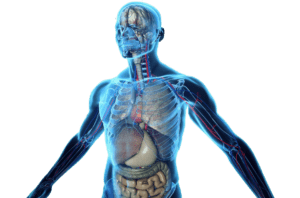

For my final project, I plan to build an educational game called “Dr. Manhattan” that tests players' knowledge of human anatomy by asking them to reassemble their own body from its parts.

Inspiration

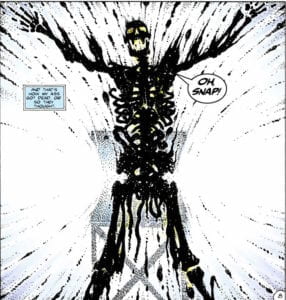

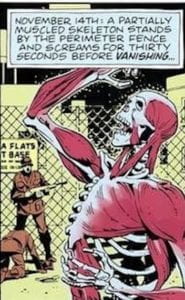

The project is largely inspired by the superhero Dr. Manhattan from Watchmen. Jonathan Osterman, a.k.a. Dr. Manhattan, was a researcher at a physics lab. One day an experiment went wrong and the lab machine tore his body to pieces. In the following months, however, a series of strange events occurred in the lab. It turned out that his consciousness had survived the accident and was progressively re-forming his body: first as a disembodied nervous system, including the brain and eyes; then as a circulatory system; then as a partially muscled skeleton; until he finally rebuilt himself as a whole person. In my project, the user becomes the consciousness of Dr. Manhattan that survived the accident and has to rebuild his body from its parts.


How it works

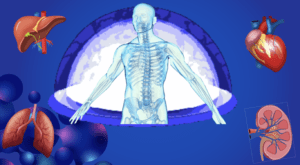

At the beginning of the game, the player sees all of the body organs in their correct positions on their own body on the screen. The player can then press a key to trigger an explosion that tears apart the on-screen body and scatters the body parts. I will train a style transfer model and apply it here to make the image look less disturbing and cooler. Players then use their hands to retrieve the scattered body parts and organs and assemble them correctly to rebuild their body. I will use PoseNet to track the position of the player's body and “consciousness” and to calculate the correct position where each organ should be placed.
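The grab-and-place step described above could be sketched as a small state update that runs once per frame. This is only an illustration under assumptions: the `Organ` shape, the `updateOrgan` helper, and the pixel radii are hypothetical, and the hand position is assumed to come from a tracked wrist keypoint.

```typescript
interface Point { x: number; y: number; }

// Hypothetical per-organ state for the assembly phase.
interface Organ {
  name: string;
  position: Point; // where the organ is currently drawn
  target: Point;   // where it belongs on the player's body
  held: boolean;   // true while the player's hand is carrying it
  placed: boolean; // true once it has snapped into place
}

function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Advances one organ by one frame, given the tracked hand position.
// grabRadius and snapRadius are in pixels; the defaults are illustrative guesses.
function updateOrgan(
  organ: Organ,
  hand: Point,
  grabRadius: number = 40,
  snapRadius: number = 30
): Organ {
  // A placed organ stays where it is.
  if (organ.placed) return organ;

  // The organ is picked up once the hand gets close enough to it.
  const held = organ.held || distance(hand, organ.position) <= grabRadius;
  if (!held) return organ;

  // A held organ snaps into place when the hand reaches its target.
  if (distance(hand, organ.target) <= snapRadius) {
    return {
      name: organ.name,
      position: { x: organ.target.x, y: organ.target.y },
      target: organ.target,
      held: false,
      placed: true,
    };
  }

  // Otherwise the held organ follows the hand.
  return {
    name: organ.name,
    position: { x: hand.x, y: hand.y },
    target: organ.target,
    held: true,
    placed: false,
  };
}
```

Snapping within a tolerance rather than requiring an exact match keeps the game forgiving of jitter in the tracked hand position; the radii would need tuning against the actual camera resolution.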

Machine Learning techniques used:

- PoseNet: to track the position of the player and calculate where each organ needs to be placed

- Style Transfer: to make the image of the exploded body look less disturbing
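As a rough illustration of the PoseNet item above, the correct position of an organ could be derived by interpolating between the torso keypoints. The sketch below assumes keypoints shaped like PoseNet's output (a `part` name, a pixel `position`, and a confidence `score`); the `organOffsets` table, its fractions, and the `organTarget` helper are hypothetical and would need tuning.

```typescript
// One keypoint in the shape PoseNet reports: part name, pixel position, confidence.
interface Keypoint {
  part: string;
  position: { x: number; y: number };
  score: number;
}

interface Point { x: number; y: number; }

// Hypothetical placement table: where each organ sits inside the torso,
// as fractions from the right shoulder to the left shoulder (u) and from
// the shoulder line down to the hip line (v).
// These numbers are illustrative guesses, not anatomical measurements.
const organOffsets: Record<string, { u: number; v: number }> = {
  heart: { u: 0.6, v: 0.25 },
  liver: { u: 0.35, v: 0.5 },
  stomach: { u: 0.55, v: 0.55 },
};

// Returns the position of a named part, or null if it is missing or unreliable.
function findPart(keypoints: Keypoint[], part: string, minScore: number): Point | null {
  for (const k of keypoints) {
    if (k.part === part && k.score >= minScore) return k.position;
  }
  return null;
}

// Linear interpolation between two points (t = 0 gives a, t = 1 gives b).
function lerp(a: Point, b: Point, t: number): Point {
  return { x: a.x + (b.x - a.x) * t, y: a.y + (b.y - a.y) * t };
}

// Returns the on-screen target for an organ, or null when the organ is
// unknown or the torso is not tracked confidently enough.
function organTarget(keypoints: Keypoint[], organ: string, minScore: number = 0.5): Point | null {
  const offset = organOffsets[organ];
  const ls = findPart(keypoints, "leftShoulder", minScore);
  const rs = findPart(keypoints, "rightShoulder", minScore);
  const lh = findPart(keypoints, "leftHip", minScore);
  const rh = findPart(keypoints, "rightHip", minScore);
  if (!offset || !ls || !rs || !lh || !rh) return null;

  const top = lerp(rs, ls, offset.u);    // point along the shoulder line
  const bottom = lerp(rh, lh, offset.u); // matching point along the hip line
  return lerp(top, bottom, offset.v);    // slide down from shoulders toward hips
}
```

Expressing positions as fractions of the torso, rather than fixed pixel offsets, means the targets scale and move with the player as they step closer to or farther from the camera.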