Inspiration:

Still working off of my midterm “cancer project”, I wanted to take the idea in a new and very different direction. I was HAUNTED by the suggestion that the project was a Snapchat filter. I wanted to do serious work, and I realized that if I wanted to convey serious topics I had to frame and present the work in a way that conveyed my intentions.

Instead of focusing on beauty and growth I wanted to reframe the project as a visualization of the dehumanization of cancer patients. I wanted to have a very delicate floral design on top of the user, covering them up completely. Somehow I wanted to show how their life was still separate from the cancer and treatments.

The main question was… how. (I wasn’t even sure when I proposed this project… so the journey to the final result was long.)

Starting with the Fun Part: p5!

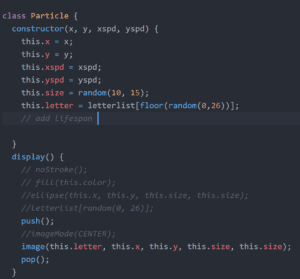

The only salvageable part of my midterm was the idea of a p5 overlay of graphics. The question was, if not henna-style flowers, then how was I going to make something super delicate looking? Very randomly, I went to a workshop led by Konrad (I think it was for another class entirely). It was there that we worked on creating intricate sin/cos drawings that were far more advanced than my dotted style.

This was going to be the graphic I spring-boarded from. It would ultimately be re-colored and pixelated… but that is a step I’ll get to a little later.
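A minimal sketch of this kind of sin/cos drawing, assuming a simple rose curve (r = R·cos(kθ)) rather than the exact formulas from the workshop. The function name `rosePoints` and its parameters are hypothetical, chosen only for illustration:

```javascript
// Hypothetical helper: sample points along a rose curve r = radius * cos(k * theta).
// Changing k changes how many petals the curve traces out.
function rosePoints(k, radius, steps) {
  const points = [];
  for (let i = 0; i < steps; i++) {
    const theta = (i / steps) * Math.PI * 2; // angle around the full circle
    const r = radius * Math.cos(k * theta);  // distance from the center at this angle
    points.push({ x: r * Math.cos(theta), y: r * Math.sin(theta) });
  }
  return points;
}

// In a p5 sketch, the points could be drawn inside draw(), for example:
//   translate(width / 2, height / 2);
//   for (const p of rosePoints(4, 150, 720)) point(p.x, p.y);
```

Drawing the curve as separate points instead of a connected line gives a dotted, delicate look, and more `steps` makes the dots denser.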

KNN – The Struggle is Real

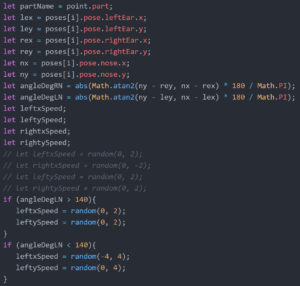

KNN and I… we don’t get along. I think that is the simplest way to put it. I had previously done a homework based on Southern Chinese Sign Language. It didn’t “suck”, but basically I realized how limited KNN and PoseNet were. PoseNet doesn’t really detect your fingers. In my case, I realized this was once again going to be the issue if I wanted to do ALL of the cancers. There was clearly overlap if you are just taking wrist position into account. An example is colon and stomach. Nonetheless, I selected about half of the cancers from the sample list I had in my proposal.

I will say, just because I am so painfully awkward, I found myself saying “well, I have… ____ cancer, I have _______ cancer”, when what I was trying to say was that I was training the set on a given form of cancer… not that I actually have cancer. (No seriously, I am totally fine, I just couldn’t English for a little bit… sleep deprivation is at fault I promise.)

KNN in my case was a modification of the “rock, paper, scissors” example, like last time. I added about 200-ish data points for the version I presented at the IMA show, which was modified to work when the user was sitting down. (I didn’t save that particular version… I mean I did, but it was on IMA Computer 20, and I didn’t think to save it until I began writing this post.) I added an empty example set as well to help with accuracy, but once again that was only for the show-specific version.
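To make the idea concrete, here is a minimal k-nearest-neighbors sketch in plain JavaScript. It is not the “rock, paper, scissors” example or any library’s API; the labels, the wrist coordinates, and the function names are all made up for illustration. Each training example is a pose reduced to wrist positions, and the “empty” label stands in for the empty example set:

```javascript
// Hypothetical training data: [leftWristX, leftWristY, rightWristX, rightWristY]
const examples = [
  { label: "lung",  features: [200, 150, 280, 150] },
  { label: "lung",  features: [205, 155, 275, 148] },
  { label: "brain", features: [210, 40, 270, 40] },
  { label: "brain", features: [215, 45, 265, 42] },
  { label: "empty", features: [0, 0, 0, 0] },
  { label: "empty", features: [5, 5, 5, 5] },
];

// Euclidean distance between two feature arrays of equal length.
function distance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += (a[i] - b[i]) ** 2;
  return Math.sqrt(sum);
}

// Return the label with the most votes among the k closest examples.
function classify(trainingSet, features, k) {
  const nearest = trainingSet
    .map((e) => ({ label: e.label, d: distance(e.features, features) }))
    .sort((a, b) => a.d - b.d)
    .slice(0, k);
  const votes = {};
  for (const n of nearest) votes[n.label] = (votes[n.label] || 0) + 1;
  // On a tie, the label seen first (the closest one) wins.
  return Object.keys(votes).reduce((best, l) => (votes[l] > votes[best] ? l : best));
}
```

With only wrist positions as features, two poses whose wrists land in nearly the same place (like colon and stomach) end up almost the same distance from a new pose, which is why they get confused.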

Pixel Manipulation – Moon is a Literal Wizard

Now, I had this idea in my head that I wanted the user’s final image to Peter Parker out of existence. (I am including a GIF of that because that scene from Infinity War is just that good.) The reasoning: they, the user, don’t have cancer, so they don’t have to live with the consequences of having cancer.

I had never done anything close to this. Basically we applied things I had learned with BodyPix, like using a grid size to slice the image into pixel squares.
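A rough sketch of the grid step, assuming the image is simply cut into squares of a fixed size. The helper name `gridCells` is hypothetical, not something from BodyPix or p5:

```javascript
// Hypothetical helper: split a width x height image into square cells.
// Cells on the right and bottom edges are clipped so they stay inside the image.
function gridCells(width, height, gridSize) {
  const cells = [];
  for (let y = 0; y < height; y += gridSize) {
    for (let x = 0; x < width; x += gridSize) {
      cells.push({
        x,
        y,
        w: Math.min(gridSize, width - x),
        h: Math.min(gridSize, height - y),
      });
    }
  }
  return cells;
}
```

In a p5 sketch, each cell could then be copied out of the source image (for example with `img.get(x, y, w, h)`) and drawn at its own position, so every square can move independently.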

Then it was a matter of understanding logarithmic functions to make them move. This took… one and a half office hours to understand on my part.
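One way a logarithmic function can drive that movement, sketched under assumptions: the offset grows with log(1 + frame), so a square jumps away quickly at first and then drifts more and more slowly. The function names, the `strength` parameter, and the drift direction are hypothetical and not taken from the actual project code:

```javascript
// Hypothetical easing: distance travelled after `frame` frames.
// Math.log(1 + frame) is 0 at frame 0 and keeps growing, but ever more slowly.
function logOffset(frame, strength) {
  return strength * Math.log(1 + frame);
}

// Move one grid cell along an assumed direction (right and up) by the log offset.
function moveCell(cell, frame, strength) {
  const d = logOffset(frame, strength);
  return { ...cell, x: cell.x + d, y: cell.y - d };
}
```

Giving each square its own `strength` (for instance a random one) would make the squares spread apart instead of sliding together as one block.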

What the Flower Means – Deep Thoughts

The dotted white line represents the treatment cancer patients have to go through… so doctors’ appointments, checkups, hospital stays, etc. The flower is colored based on the “diagnosed cancer” and represents the life of the patient. The patient is blurred out because they, as a person, cease to exist when all anyone wants to talk about is the cancer.

Bringing it All Together – Intervals and Frame Count

Using the frameCount approach from the midterm, I was able to effectively stop the flower from drawing more than I wanted it to. Then, by adding an arbitrary number of frames (250 in my case), I was able to stop the pixel manipulation sequence from going on forever. Using intervals, I was able to give a secondary threshold to the KNN, so in the case of the final the user had to hold a pose for 200 frames to cause the floral image to draw. In the case of the “normal demo” copy, it is 60 (that’s mainly because my personal laptop is just WAY slower than an IMA laptop, sorry).
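The two timing ideas can be sketched roughly like this. The helper names are hypothetical and this is a simplified stand-in, not the project’s actual code: one counter tracks how many frames in a row the KNN has returned the same label, and one check cuts a sequence off after a fixed number of frames.

```javascript
// Hypothetical helper: returns an update function that reports true once the
// same label has been seen for `threshold` frames in a row.
function createPoseHold(threshold) {
  let current = null;
  let held = 0;
  return function update(label) {
    if (label === current) {
      held += 1;
    } else {
      current = label; // a new pose restarts the count
      held = 1;
    }
    return held >= threshold;
  };
}

// Hypothetical helper: is a sequence that began at startFrame still within
// its allowed length (for example 250 frames)?
function sequenceRunning(startFrame, frameNow, length) {
  return frameNow - startFrame < length;
}
```

In a p5 sketch, `update` would be called once per `draw()` with the current KNN label, using a threshold of 200 on a fast machine or 60 on a slower one, and `frameNow` would be p5’s `frameCount`.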

Saving the Results – Space Bar for Flower, End of the Sequence for Entire Image

I implemented two saving mechanisms: pressing the space bar saves the flower, and the end of the sequence saves the entire image. They are meant to allow printing of the user’s experience so they have a lasting impression afterward. It’s a last touch added after the presentation on Wednesday.

Conclusions – Next Steps

I like my project. If I had another month to just add data points to the KNN, I would. Ideally the project is presented standing up, but sitting works as well, just so long as the data set is modified. It would be cool if I could use the same dataset for both sitting and standing sequences.

Ideally, I want this work to be published or shown in a big setting once I have more of the bugs worked out. The message is important. It is something that resonates with millions of families around the world.

Special Thanks:

Professor Moon, for your help. Your presence in the lab during the finals crunch is much appreciated.

LA Jessica, for teaching me about empty examples.

My roommate Sarah, who served as the “user” during the IMA show to make the entry display always active so people had something to explore and talk about the second they entered the room.