Reading reflection:

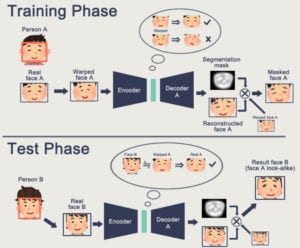

Computer vision is a game-changing technology that has already entered our daily lives. Examples include the scanning of QR codes and the recognition of car number plates, which replaces human labour at parking lots. Just recently, our campus and dorms also started using facial recognition as an alternative to scanning student ID cards. Aside from these practical impacts on our daily lives, computer vision also has many applications in the creation of art. The ability to recognize objects lets the computer perform specific operations based on its understanding of the image, as opposed to traditional image manipulation, where the computer only reads in pixel values without understanding what is being processed. I think the most obvious example is applications that manipulate the human face: after recognizing that a region is a human face, they alter it while leaving the rest of the image alone.
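To make the contrast concrete, here is a minimal sketch in Python. It assumes a tiny grayscale image stored as nested lists and a hypothetical `face_box` that some face detector would have produced; neither the box nor the function names come from a real library. The first function treats every pixel the same, while the second only touches the recognized region.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale rows, values 0-255
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def invert_everywhere(img: Image) -> Image:
    """Traditional manipulation: the same operation on every pixel."""
    return [[255 - p for p in row] for row in img]


def invert_region(img: Image, box: Box) -> Image:
    """Recognition-aware manipulation: only pixels inside the box change."""
    top, left, bottom, right = box
    out = [row[:] for row in img]  # copy so the input image is left untouched
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = 255 - out[y][x]
    return out


# A 4x4 toy image; the box below is a made-up stand-in for a detector result.
image = [[10 * (x + y) for x in range(4)] for y in range(4)]
face_box = (1, 1, 3, 3)  # hypothetical: rows 1-2, columns 1-2 contain the "face"

edited = invert_region(image, face_box)
```

In a real application the box would come from a face detection model, and the operation inside it could be anything from a blur to a filter; the point is only that the edit is guided by what the computer recognized.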

Zero UI project research:

After some research on recent developments in Zero UI projects, I came across a project by Google's Advanced Technology and Projects (ATAP) team named Project Soli. Project Soli is a chip that uses miniature radar to sense hand gestures, and it is exactly what I would consider the future of Zero UI. Users can control their devices without physical contact, and all of the control gestures are natural, as if they were controlling a physical device.

Technology:

I believe that the chip collects radar information about hand movements, then uses software to interpret what the gesture means. I believe this could definitely benefit from computer vision and machine learning, since the interpretation component of this technology requires the computer to predict which gesture the user is trying to input.
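As a rough illustration of what "predicting the gesture" could look like, here is a minimal nearest-centroid classifier in Python. This is only a sketch under my own assumptions, not how Soli actually works: the two features (average hand speed and motion energy), the gesture labels, and all of the numbers are made up for the example.

```python
import math
from typing import Dict, List, Sequence


def centroid(samples: List[Sequence[float]]) -> List[float]:
    """Average each feature over all samples of one gesture."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def train(labelled: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Build one centroid per gesture label."""
    return {label: centroid(samples) for label, samples in labelled.items()}


def predict(model: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the label whose centroid is closest to the new reading."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical training data: (average hand speed, motion energy) per recording.
training_data = {
    "swipe": [(0.9, 0.2), (1.1, 0.3)],
    "tap": [(0.1, 0.8), (0.2, 1.0)],
}

model = train(training_data)
guess = predict(model, (1.0, 0.25))  # a new, unlabelled reading
```

A real system would work on much richer radar signals and most likely a trained neural network, but the basic idea is the same: compare a new reading against what each known gesture usually looks like and pick the closest match.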

Current application:

Project Soli started around 2014. Just recently, Google has been planning to release its newest phone model, the “Google Pixel 4”. This phone is one of the most anticipated phones of 2019, as it is planned to incorporate the Soli chip. There are many leaks and rumors online, building up a lot of anticipation before the release of the phone, which is expected in September or October 2019, really just around the corner.

Connection to Zero UI and potential future applications:

I think this technology could be very interesting and useful, as it provides a very natural way to interact with machines, in line with the ideas of Zero UI. It could also be used in creative ways, such as new controls for gaming or new tools for art creation. This new way of interacting opens the door to endless possible applications.

Sources:

https://atap.google.com/soli/

https://www.techradar.com/news/google-pixel-4

https://www.xda-developers.com/google-pixel-4-motion-sense-gestures-leak/

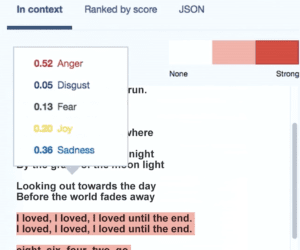

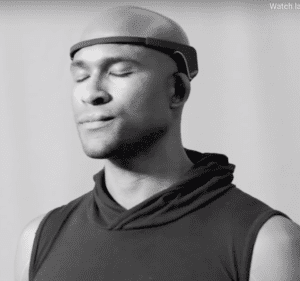

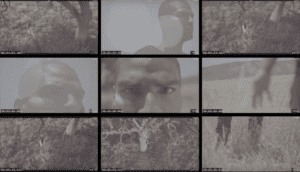

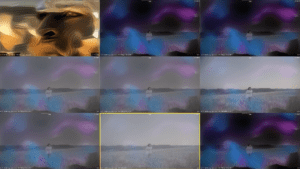

The project put together a different kind of “film crew” comprising A.I. programs including IBM’s Watson, Microsoft’s Ms_Rinna, Affectiva facial recognition software, custom neural art technology and EEG data. Together, they produced the film “Eclipse,” a striking, ethereal music video that looks like a combination of special effects, photography and live action. The movie was conceived, directed, and edited entirely by machine. In the behind-the-scenes video, we can hear the team members explain how they taught the machine to tell a story.