Partner: Tiger Li

Inspiration:

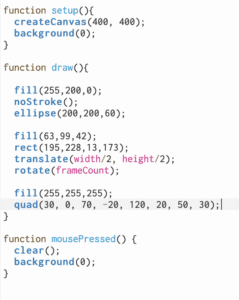

This project is an AI-powered music generator created by codeParade. It uses two techniques: an autoencoder and PCA (Principal Component Analysis).

An autoencoder is a type of artificial neural network used to learn efficient data codings in an unsupervised manner. PCA transforms a set of observations of possibly correlated variables into a set of linearly uncorrelated variables called principal components. PCA is often used in exploratory data analysis and for making predictive models.
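To make the PCA description concrete, here is a minimal sketch using NumPy. It is not the code from the original music generator; the `pca` helper and the toy two-variable dataset are assumptions made up for illustration. It shows that two strongly correlated variables come out as uncorrelated principal components.

```python
import numpy as np

def pca(X, n_components):
    """Project the rows of X onto the top principal components (hypothetical helper)."""
    mean = X.mean(axis=0)
    Xc = X - mean  # center each variable
    # SVD of the centered data: the rows of Vt are the principal directions
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    scores = Xc @ components.T  # data expressed in the new coordinates
    explained_variance = (S[:n_components] ** 2) / (X.shape[0] - 1)
    return scores, components, explained_variance

# Toy data (assumption): the second variable is roughly twice the first
rng = np.random.default_rng(0)
x = rng.normal(size=500)
X = np.column_stack([x, 2.0 * x + rng.normal(scale=0.1, size=500)])

scores, components, var = pca(X, 2)
cov = np.cov(scores, rowvar=False)  # covariance of the principal components
print(np.round(cov, 6))
```

The off-diagonal entries of `cov` are zero up to floating-point error, which is what "linearly uncorrelated" means, and almost all of the variance lands in the first component.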

Our first idea:

What?

We often hear the phrase “dance to the music,” and traditionally a choreographer designs dance moves based on the melody. But how about “music to the dance”? What if we used only the movements to generate the composition?

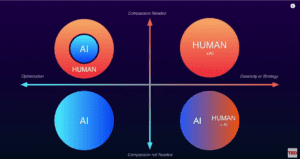

After discussing it with my partner, we came up with the idea of using this model to generate music and melodies based on the artist’s movements.

How?

First, we train the model on data from a variety of choreography. Then we use a camera to capture the artist’s movement, and music is generated based on that movement.
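As a rough illustration of the last step, here is a minimal sketch of turning captured movement into notes. It does not use a trained model: a hand-written mapping stands in for it. The keypoint array layout, the `motion_energy` and `energy_to_notes` helpers, and the choice of a pentatonic scale are all assumptions for illustration, not part of our design.

```python
import numpy as np

# MIDI note numbers for a C major pentatonic scale (assumed choice of scale)
C_MAJOR_PENTATONIC = [60, 62, 64, 67, 69, 72]

def motion_energy(keypoints):
    """Mean joint displacement between consecutive frames.

    keypoints: array of shape (frames, joints, 2) holding x, y positions,
    e.g. from a pose estimator (assumed input format).
    Returns an array of length frames - 1.
    """
    diffs = np.diff(keypoints, axis=0)
    return np.linalg.norm(diffs, axis=2).mean(axis=1)

def energy_to_notes(energy, scale=C_MAJOR_PENTATONIC):
    """Map each energy value to a note: larger movement, higher pitch."""
    energy = np.asarray(energy, dtype=float)
    span = energy.max() - energy.min()
    if span == 0:
        return [scale[0]] * len(energy)  # no variation: stay on the lowest note
    norm = (energy - energy.min()) / span
    idx = np.round(norm * (len(scale) - 1)).astype(int)
    return [scale[i] for i in idx]

# One joint moving along x: a small step, a pause, then a big step
keypoints = np.array([[[0.0, 0.0]], [[1.0, 0.0]], [[1.0, 0.0]], [[4.0, 0.0]]])
notes = energy_to_notes(motion_energy(keypoints))
print(notes)
```

In the actual idea, the trained model would replace `energy_to_notes`; the sketch only shows the shape of the pipeline from camera frames to a note sequence.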

Why?

1. An embodiment of Zero UI

2. Makes a single dancer visually command more space

3. A better, more immersive experience for the audience

Our second idea:

What?

The second idea is to use this technology to generate new music in the style of a specific artist, especially an artist who has died.

How?

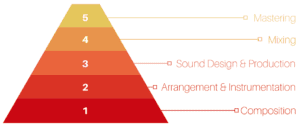

1. Use the artist’s original music as data input to train the model.

2. Use the model to generate new music in the style of dead artists.

3. Coordinate lighting with the music, because the music is predictable in a synchronized system.

Why?

First of all, some dead artists still have a huge fan base, and the technology already exists. So why not use it, with the help of artificial creativity, to better emulate the human aspect of a live performance?