Body Flow

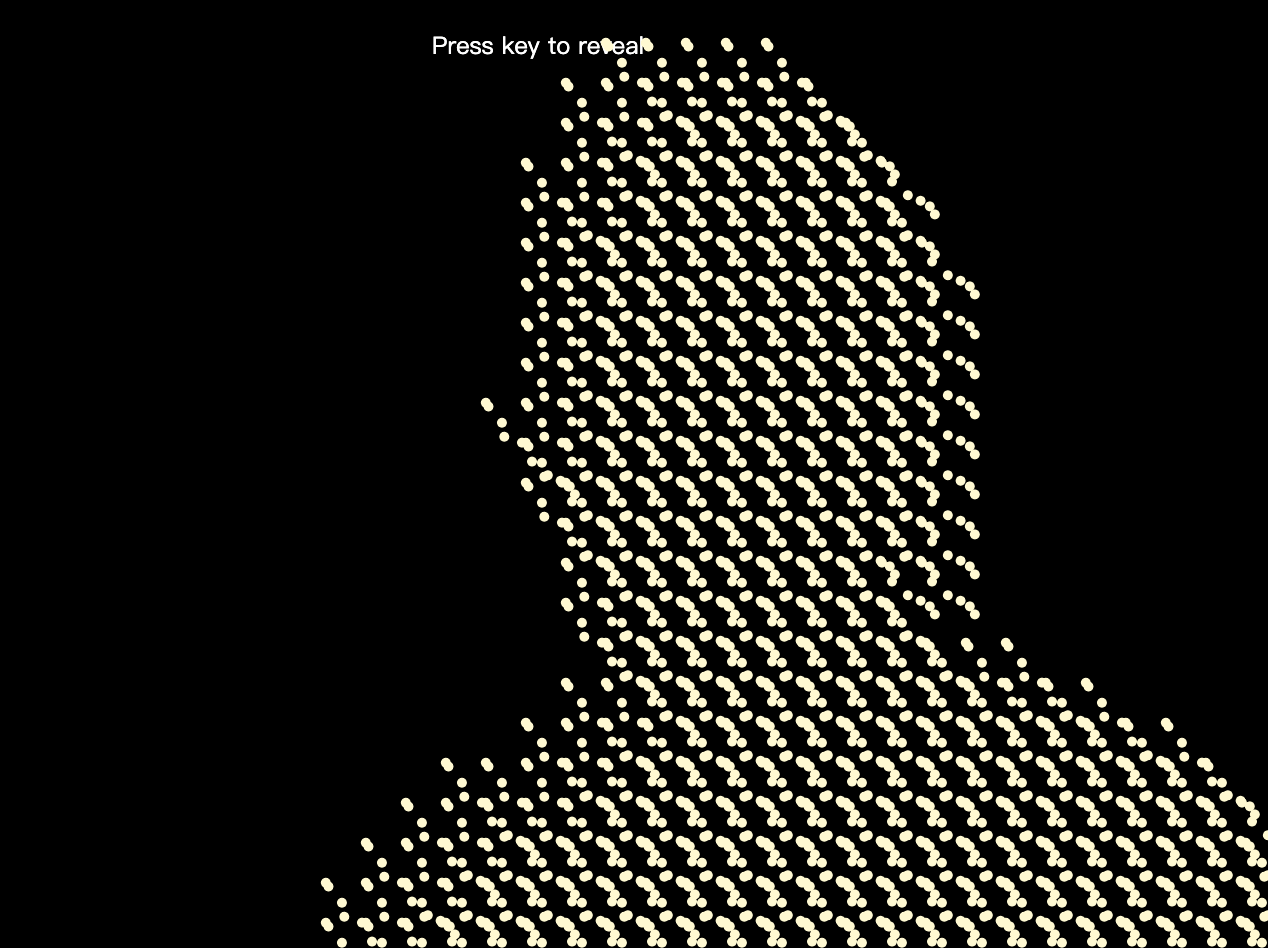

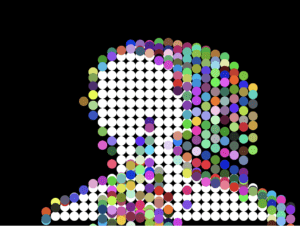

This week I developed a generative animation program utilizing BodyPix in ml5.js and p5.js.

The program reads the segmentation data from BodyPix and stores which points are covered by the body. To improve performance, I sample the image on a grid of 20-pixel cells instead of checking every pixel. A white circle is drawn in each cell covered by the detected body. If a cell was covered by the body in the previous frame but not in the current frame, a falling circle of random color is generated. In short, if you move fast in front of the camera, lots of colorful circles appear on the screen. Also, if the area covered by the body is large enough, the canvas is magnified.
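The grid sampling step can be sketched as below. This is a minimal illustration, not the code used in the project: it assumes the BodyPix result has already been turned into a flat, row-major mask array (`width * height` entries, nonzero where a pixel belongs to the body), it checks only the center pixel of each 20-pixel cell, and the name `sampleGrid` is hypothetical.

```javascript
// Hypothetical helper: sample a binary body mask on a coarse grid.
// Assumes `mask` is a flat, row-major array of length width * height,
// where a nonzero value means the pixel belongs to the detected body.
function sampleGrid(mask, width, height, gridSize = 20) {
  const cols = Math.floor(width / gridSize);
  const rows = Math.floor(height / gridSize);
  const covered = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      // Check only the center pixel of each cell instead of every pixel.
      const x = col * gridSize + Math.floor(gridSize / 2);
      const y = row * gridSize + Math.floor(gridSize / 2);
      covered.push(mask[y * width + x] !== 0);
    }
  }
  return { cols, rows, covered };
}

// Example: a 40x40 mask where only the left half is "body".
const w = 40;
const h = 40;
const mask = new Uint8Array(w * h);
for (let y = 0; y < h; y++) {
  for (let x = 0; x < w / 2; x++) mask[y * w + x] = 1;
}
const grid = sampleGrid(mask, w, h, 20);
console.log(grid.cols, grid.rows); // 2 2
```

Each boolean in `grid.covered` then decides whether a white circle is drawn for that cell.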

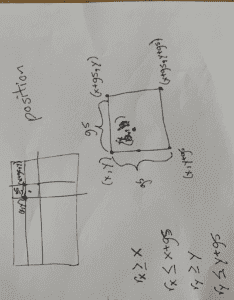

Store BodyPix data

I created a few classes to store the results. Instead of using a global variable that constantly tracks the model's output, I store the data directly in an instance of my own class.
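A minimal sketch of that idea, assuming the model delivers each result through an error-first `(error, result)` callback: the callback is a bound instance method, so results land in the object rather than in a global. `ResultStore`, its fields, and `fakeModel` (a stand-in for the real BodyPix call) are hypothetical names.

```javascript
// Hypothetical store: results go straight into an instance, not a global.
class ResultStore {
  constructor() {
    this.latest = null; // most recent segmentation result
    this.updates = 0;   // how many results have been received
    // Bind once so the method can be passed around as a plain callback.
    this.handleResult = this.handleResult.bind(this);
  }

  handleResult(error, result) {
    if (error) {
      console.error(error);
      return;
    }
    this.latest = result;
    this.updates += 1;
  }
}

// Stand-in for the model: calls back with an error-first signature.
function fakeModel(callback) {
  callback(null, { data: [0, 1, 1, 0] });
}

const store = new ResultStore();
fakeModel(store.handleResult);
fakeModel(store.handleResult);
console.log(store.updates); // 2
```

Binding in the constructor matters because a method passed as a bare callback would otherwise lose its `this`.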

Here the class BodyMap is a singleton, playing much the same role as the global variable in the class example. It draws white circles representing the body and, at the same time, keeps track of how the body changes. Every time it finishes updating the data, it computes the ratio between the area covered by the body and the uncovered area. If this ratio is large, a CSS `transform: scale(n);` style is applied to the canvas.
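One way to express the singleton and the coverage check, as a sketch rather than the actual BodyMap: it assumes the input is an array of booleans (one per grid cell, `true` where the body is), and the threshold `0.5`, the scale factor `1.5`, and the method names `getInstance`, `coverageRatio`, and `transformFor` are all made up for illustration.

```javascript
// Module-level slot that holds the one shared BodyMap.
let bodyMapInstance = null;

class BodyMap {
  static getInstance() {
    if (bodyMapInstance === null) bodyMapInstance = new BodyMap();
    return bodyMapInstance;
  }

  constructor() {
    this.covered = []; // one boolean per grid cell (true = covered by body)
  }

  // Store the new frame's coverage and return the CSS transform to apply.
  update(covered) {
    this.covered = covered;
    return this.transformFor(this.coverageRatio());
  }

  // Ratio of covered cells to uncovered cells (0 when there is no data).
  coverageRatio() {
    if (this.covered.length === 0) return 0;
    const on = this.covered.filter(Boolean).length;
    const off = this.covered.length - on;
    return off === 0 ? Infinity : on / off;
  }

  // Hypothetical threshold and scale factor.
  transformFor(ratio, threshold = 0.5, scale = 1.5) {
    return ratio > threshold ? `scale(${scale})` : "none";
  }
}

const bodyMap = BodyMap.getInstance();
console.log(bodyMap === BodyMap.getInstance()); // true
console.log(bodyMap.update([true, false, false, false])); // none (ratio 1/3)
console.log(bodyMap.update([true, true, false, false])); // scale(1.5) (ratio 1)
```

In the browser, the returned string could be assigned to the canvas element's `style.transform` property.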

Difference between sequential frames

Each grid cell has a boolean attribute `active`. If `active` was true in the previous frame and is false in the current frame, a colorful particle is generated. The cell also draws a white circle to represent the area covered by the body.
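The true-to-false check can be sketched as follows. The `Cell` class and its `setActive` method are hypothetical, particles are plain objects with a random hue, and drawing is left out so the logic runs without p5.js.

```javascript
// Hypothetical grid cell that remembers whether it was active last frame.
class Cell {
  constructor(x, y) {
    this.x = x;
    this.y = y;
    this.active = false; // covered by the body in the current frame
    this.particles = [];
  }

  // Update with this frame's coverage; spawn a particle on a true -> false edge.
  setActive(nowActive) {
    const wasActive = this.active;
    this.active = nowActive;
    if (wasActive && !nowActive) {
      const hue = Math.floor(Math.random() * 360); // random color
      this.particles.push({ x: this.x, y: this.y, vy: 0, hue });
    }
  }
}

const cell = new Cell(10, 10);
const frames = [true, true, false, false, true, false];
for (const covered of frames) cell.setActive(covered);
console.log(cell.particles.length); // 2
```

Only the two frames where the body leaves the cell produce particles; staying covered or staying empty produces nothing.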

Each grid object stores an array of particles. Cleaning up particles is also simple: a particle is removed once its y-position is larger than the canvas height.
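The cleanup rule fits in one filter. A minimal sketch, with a hypothetical function name and a 480-pixel canvas height chosen only for the example:

```javascript
// Keep only particles that have not fallen past the bottom of the canvas.
function removeOffscreen(particles, canvasHeight) {
  return particles.filter((p) => p.y <= canvasHeight);
}

const particles = [{ y: 100 }, { y: 481 }, { y: 480 }, { y: 600 }];
const alive = removeOffscreen(particles, 480);
console.log(alive.length); // 2
```

Building a new array with `filter` avoids the skipped-element bug that comes from calling `splice` while looping forward over the same array.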

Colorful particles

The particle class is very simple. I used a constant to simulate gravity.
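A sketch of a particle falling under constant gravity. The value `0.5` (pixels per frame, per frame) is a made-up constant, and the update is a simple per-frame Euler step: velocity grows by the constant, position grows by the velocity.

```javascript
const GRAVITY = 0.5; // hypothetical constant acceleration, pixels/frame^2

class Particle {
  constructor(x, y) {
    this.x = x;
    this.y = y;
    this.vy = 0; // vertical velocity, pixels per frame
    this.hue = Math.floor(Math.random() * 360); // random color
  }

  // Called once per frame: accelerate, then move.
  update() {
    this.vy += GRAVITY;
    this.y += this.vy;
  }
}

const p = new Particle(0, 0);
for (let i = 0; i < 3; i++) p.update();
console.log(p.y); // 3
```

In a p5.js sketch, `update()` would run once per `draw()` call, followed by something like `circle(p.x, p.y, 20)` to render it.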

In practice, because I draw circles instead of modifying the image's pixels directly, rendering is a little slow. That is why I chose a grid size of 20 pixels.
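To see why a coarse grid helps, assume a 640×480 canvas (the post does not state the actual size). With 20-pixel cells, each frame handles one circle per cell instead of one check per pixel:

$$
\frac{640}{20} \times \frac{480}{20} = 32 \times 24 = 768 \text{ cells}
\qquad \text{vs.} \qquad
640 \times 480 = 307{,}200 \text{ pixels}
$$

That is $20^2 = 400$ times fewer items per frame, independent of the canvas size.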

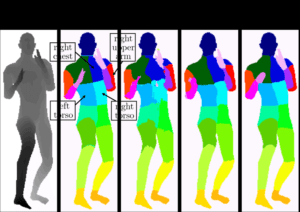

Conclusion

BodyPix is a very powerful tool. I found that, apart from segmenting the whole body, it can also recognize different body parts. I think this feature could be useful for developing something interesting, so I will learn more about it and may use it in my midterm project.