Overview

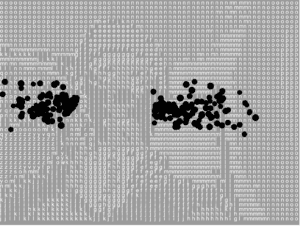

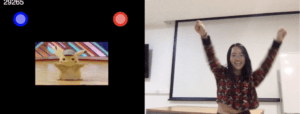

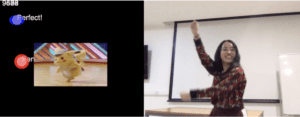

For the midterm project, I used PoseNet and p5.js to develop an interactive dance game in which players move their whole body according to the signs that appear on screen. Following the signs makes the player dance to the rhythm of the music while imitating the moves of the Pikachu on screen. I named this project “Dance Dance Pikachu”.

Process of Development

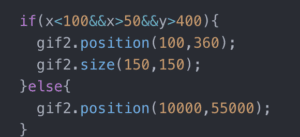

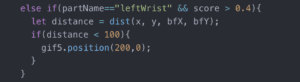

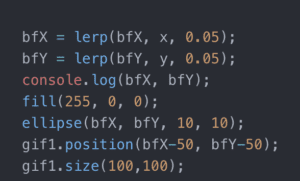

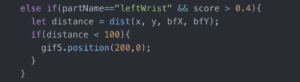

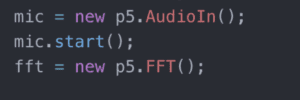

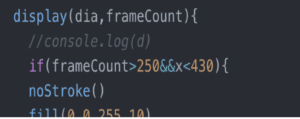

Different signs have different meanings in the game: red means left wrist, blue means right wrist, and a solid circle requires the player to clap hands. For players to dance to the rhythm of the music, the signs must always appear on the beat. To accomplish this, I created a new p5.AudioIn() (named “dia”) to read the volume of the music playing and used this value as the condition for showing signs: a sign appears only when the volume is higher than a certain threshold. The signs therefore show up at different times but always follow the beats, so a dancer who follows the signs correctly is always dancing to the rhythm. I also used the frameCount value when arranging the coordinates of the signs. For instance, in the first 10 seconds of the song, during which frameCount is always under 650, the signs appear at different coordinates than those shown when frameCount is 700. Varying the coordinates in this way lets me diversify the dance moves in the game.
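
The logic described above can be sketched roughly as follows. This is only a minimal illustration, not the project's actual code: the threshold, the phase boundaries other than 650, the sign positions, and the function names `pickPhase` and `signsForFrame` are all assumptions made for the example.

```javascript
// Hypothetical threshold: signs appear only when the volume exceeds it.
const VOLUME_THRESHOLD = 0.3;

// Hypothetical phases: each one applies while frameCount is below `untilFrame`.
// The positions are placeholder canvas coordinates.
const PHASES = [
  { untilFrame: 650, positions: [{ x: 100, y: 150 }, { x: 540, y: 150 }] },
  { untilFrame: Infinity, positions: [{ x: 100, y: 350 }, { x: 540, y: 350 }] },
];

// Return the first phase whose frame limit has not been reached yet.
function pickPhase(frameCount) {
  return PHASES.find((phase) => frameCount < phase.untilFrame);
}

// Return the sign positions to draw this frame, or an empty list when
// the music is too quiet to count as a beat.
function signsForFrame(level, frameCount) {
  if (level <= VOLUME_THRESHOLD) return [];
  return pickPhase(frameCount).positions;
}
```

In a p5.js sketch, this could be called inside `draw()` as `signsForFrame(dia.getLevel(), frameCount)`, since `getLevel()` on a started `p5.AudioIn` returns an amplitude between 0 and 1.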

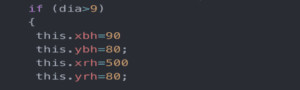

I used PoseNet to get the coordinates of the player’s wrists and built a score-counting system. The more closely the player’s movements match the signs, the higher the score. I also rely on the AudioIn() value to check whether the player claps when supposed to. The player also gets a “Perfect” signal when performing a move correctly. I also tried my best to make the dancer’s moves match those of the Pikachu on screen. I did this by adjusting both the condition for the signs to show up (the frameCount) and the positions of the signs so that they match the posture of the Pikachu dancing in the middle of the screen. As a result, the player is able to dance like the dancing Pikachu.
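
A scoring check of this kind might look like the sketch below. It is an assumption-laden example rather than the game's real implementation: the hit radius, the `color` field on each sign, and the names `isHit` and `scoreFrame` are hypothetical, and the pose object is assumed to expose `leftWrist` and `rightWrist` with `x` and `y` fields.

```javascript
// Hypothetical tolerance, in pixels, for counting a wrist as "on" a sign.
const HIT_RADIUS = 60;

// True when the wrist lies within `radius` pixels of the sign's center.
function isHit(wrist, sign, radius = HIT_RADIUS) {
  return Math.hypot(wrist.x - sign.x, wrist.y - sign.y) <= radius;
}

// Return the new score after checking every visible sign.
// Red signs are matched against the left wrist, blue signs against the right.
function scoreFrame(score, pose, signs) {
  let next = score;
  for (const sign of signs) {
    const wrist = sign.color === "red" ? pose.leftWrist : pose.rightWrist;
    if (wrist && isHit(wrist, sign)) next += 1;
  }
  return next;
}
```

For example, with the left wrist at (100, 100), a red sign at (110, 110) counts as a hit, while a blue sign at the same spot does not if the right wrist is far away.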

(dancer: Ziying Wang)

Technical Issues & Reflection

As the guest critics pointed out during my project presentation, the AudioIn() mic value sometimes throws off the timing of the signs. I should indeed try to detect the beats of the music with another value so that the signs are more stable and match the music. The other main technical issues I met when developing this project are all due to the instability of PoseNet. The coordinates it provides are sometimes erratic, which makes it difficult for the game to respond in time. Sometimes, even if the player’s hands are in the correct position, the model fails to recognize the wrist (sometimes because of the player’s quick movement), and the game still shows that the player missed the sign. In addition, the model takes a variable amount of time to load. I added a countdown before the game because I don’t want the game to start before the model has loaded. Originally, I wanted to use uNet to show the player’s figure behind the Pikachu image, so that players could see whether their movements match the signs. However, when I used both PoseNet and uNet, the models took even longer to load, the game got stuck more often, and the audio in uNet also interfered with the AudioIn() value, so the game could not work properly.
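
One possible way to soften the missed detections described above is to ignore low-confidence wrist readings and briefly reuse the last reliable position. The sketch below is not what the project does; it is a suggestion under stated assumptions: the confidence cutoff, the number of tolerated stale frames, and the name `createWristTracker` are hypothetical, and each keypoint is assumed to carry `x`, `y`, and `confidence` fields.

```javascript
// Hypothetical cutoff below which a keypoint is treated as unreliable.
const MIN_CONFIDENCE = 0.5;
// Hypothetical number of frames the last good position may be reused.
const MAX_STALE_FRAMES = 10;

// Create a tracker that remembers the last reliable wrist position.
function createWristTracker() {
  let last = null;
  let staleFrames = 0;

  // Feed one keypoint per frame; returns the position to use, or null.
  return function update(keypoint) {
    if (keypoint && keypoint.confidence >= MIN_CONFIDENCE) {
      last = { x: keypoint.x, y: keypoint.y };
      staleFrames = 0;
    } else if (last !== null) {
      staleFrames += 1;
      // Forget the position once it has been stale for too long.
      if (staleFrames > MAX_STALE_FRAMES) last = null;
    }
    return last;
  };
}
```

A separate tracker would be created for each wrist, and its output used for the hit check instead of the raw keypoint, so that a single dropped frame during a fast movement does not count as a miss.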

If given more time, I will try to solve the problems mentioned above. I still think the game would be more fun and easier if players could see their figure behind the Pikachu image and know where their hands are on the screen; uNet would also create a sense of mystery by hiding the players’ true identity. In addition, I will try to make the interface look better and to diversify the dance moves so that more dancing postures are available.