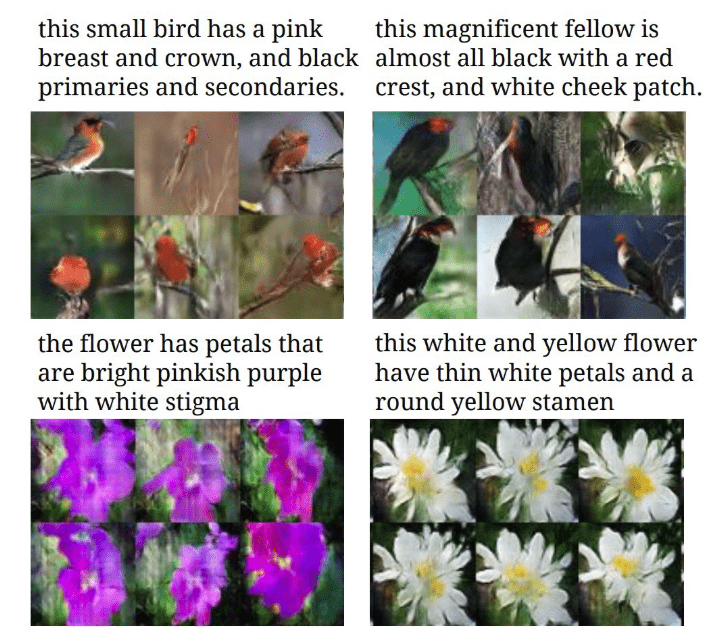

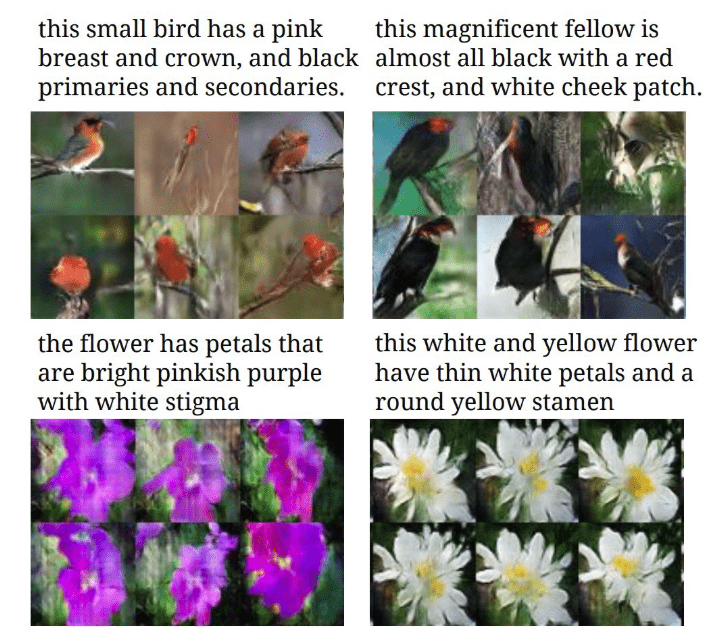

For my final project, my initial idea was to use a GAN to improve my first project, pokebuddy. After doing some research online, I came across a project that used a bird dataset with a GAN to get results like the ones shown above. This pushed my project ideas in the direction of text-to-image synthesis.

Idea 1: Pokecreator

Inspired by this, I really wanted to explore the possibility of generating new Pokemon from text descriptions. However, the concept itself was baffling, let alone how I could go about achieving it. The next significant hurdle with this idea would be the dataset. I searched online for datasets with multiple images of the same Pokemon that I could use to train a model. I also explored expanding my current dataset of Pokemon descriptions to include more descriptions for each Pokemon. Another hurdle would be that the image quality of GANarated Pokemon would likely be very poor.

The obvious changes here would be to remove the front-end webcam interface for giving buddies and the PoseNet portion, and replace them with a very simple interface that just gets the description from the user. The same Flask architecture would be used for the entire web app: the image would be generated on the backend and then sent to the front end for the user to admire.
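As a rough illustration of that backend flow, here is a minimal sketch of a Flask endpoint that accepts a description and returns an image. The route name `/generate`, the JSON field `description`, and the `generate_image` function are all hypothetical; in particular, `generate_image` is only a stand-in that returns a solid-colour PNG where a trained GAN would eventually be called.

```python
import hashlib
import struct
import zlib

from flask import Flask, Response, jsonify, request

app = Flask(__name__)


def _png_chunk(tag: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, tag, data, CRC."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def generate_image(description: str, size: int = 64) -> bytes:
    """Hypothetical placeholder for the GAN.

    Returns a solid-colour RGB PNG whose colour is derived from a hash of
    the description, so different descriptions give different images.
    """
    digest = hashlib.sha256(description.encode("utf-8")).digest()
    pixel = bytes(digest[:3])                 # one RGB pixel
    row = b"\x00" + pixel * size              # filter byte 0 + one row of pixels
    raw = row * size
    header = struct.pack(">IIBBBBB", size, size, 8, 2, 0, 0, 0)  # 8-bit RGB
    return (
        b"\x89PNG\r\n\x1a\n"
        + _png_chunk(b"IHDR", header)
        + _png_chunk(b"IDAT", zlib.compress(raw))
        + _png_chunk(b"IEND", b"")
    )


@app.route("/generate", methods=["POST"])
def generate():
    """Read the description from the request and return the generated image."""
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        payload = {}
    description = str(payload.get("description", "")).strip()
    if not description:
        return jsonify(error="description is required"), 400
    return Response(generate_image(description), mimetype="image/png")
```

The front end would then only need a text box and a request to this endpoint, displaying the returned PNG to the user.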

Idea 2: Repictionary

Mostly because I was unsure whether the idea above would work, I thought of using a GAN to create a reverse-Pictionary style of game. The inspiration came from two sources: the game of Pictionary itself, and Revictionary, a reverse dictionary where users can type in a description and get the word matching it. The idea then would be to combine these two, using text-to-image synthesis. The game would be two-player. Each player would enter a description, which would then be used to generate an image using a GAN. The other player would have to guess the description of the GANarated image and would receive a score based on how close they were to the answer.

An additional idea regarding Repictionary was to have a Human vs Bot implementation. The Bot would consist of an AI able to guess a description based on the given image. This ‘AI’ would also have a list of descriptions that it would provide to the game for the player to guess.

This entire project idea has many layers to it. The first is the GAN, which would generate images from text descriptions. The second is an NLP tool that would compare two descriptions and give them a similarity score. If the AI bot works out, an added layer of image-to-text classification would be involved.
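To make the similarity-score layer more concrete, here is a minimal sketch that compares two descriptions using bag-of-words cosine similarity. This is a stand-in built on the Python standard library only, not the NLTK/Gensim approach I plan to try; the function names, the small stopword list, and the 100-point scale are all assumptions made for illustration.

```python
import math
import re
from collections import Counter

# Assumption: a tiny hand-picked stopword list, just for this sketch.
STOPWORDS = frozenset({"a", "an", "the", "of", "on", "in", "with", "and", "is"})


def tokenize(text: str) -> list[str]:
    """Lowercase the text, split it into words, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def similarity(first: str, second: str) -> float:
    """Cosine similarity between the word-count vectors of two descriptions."""
    counts_a, counts_b = Counter(tokenize(first)), Counter(tokenize(second))
    if not counts_a or not counts_b:
        return 0.0
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a.keys() & counts_b.keys())
    norm = math.sqrt(
        sum(c * c for c in counts_a.values()) * sum(c * c for c in counts_b.values())
    )
    return min(1.0, dot / norm)


def score_guess(target: str, guess: str, max_points: int = 100) -> int:
    """Turn the similarity into a game score (hypothetical 0 to max_points scale)."""
    return round(max_points * similarity(target, guess))
```

A guess that shares some words with the original description gets partial credit, while a guess with no words in common scores zero. Word overlap ignores synonyms, which is one reason to look at word-vector models later.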

Direction

It seems like Repictionary is the one I will be going for, possibly using AttnGAN. This PyTorch implementation of AttnGAN was the one used for the bird project described at the beginning. The plan would be to train it on the COCO dataset and other image datasets to see what kinds of outputs I am able to achieve. Just as a fun side project, I would even try to run it on the Pokemon image dataset and see what results I get. I plan on using NLTK and Gensim for the natural language part, and maybe adding spaCy if necessary as well. The AI bot would only be touched upon if time permits, which I’m not sure it will.