This week’s CycleGAN homework had some interesting results in store, especially in the realm of hideous beasts. Wait and see.

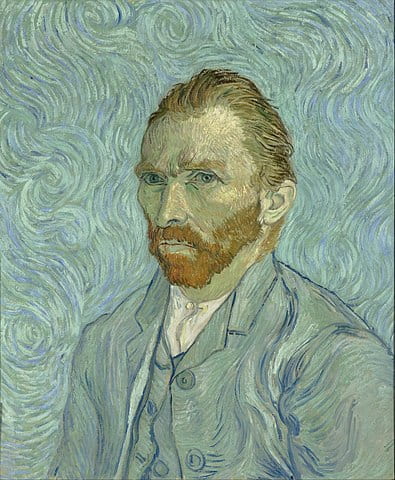

The training was done on Colfax, but not by me. I ran into a string of problems on my machine, and after many failed attempts I finally got training to work, only to realise that I would never finish in time. I checked with other people, found that Abdullah was training on the same dataset (Van Gogh), and borrowed his checkpoints.

After getting the checkpoints, I followed the steps to actually get the style transfer working. To convert the model, we had to run `generate_model.sh`. After a bit of peeking, I found that all the parameters were hard-coded for monet2photo rather than for whatever dataset sits in the checkpoints folder. So I replaced the line `python3 test_save_pb.py` with `python3 test_save_pb.py --dataset vangogh2photo` and hoped that fixed the issue.
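If you would rather not edit the file by hand, the same one-line change can be made with `sed`. This is a minimal sketch, assuming the call appears alone on its own line exactly as `python3 test_save_pb.py` in `generate_model.sh`; the helper name `set_dataset` is hypothetical, and `--dataset` is the flag used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point the export step in generate_model.sh at another dataset.
# Assumption: the call sits alone on a line as "python3 test_save_pb.py".
set_dataset() {
  local dataset="${1:?usage: set_dataset DATASET [SCRIPT]}"
  local script="${2:-generate_model.sh}"
  # -i.bak edits in place and keeps the original as SCRIPT.bak (GNU and BSD sed)
  sed -i.bak "s|^python3 test_save_pb\.py\$|python3 test_save_pb.py --dataset ${dataset}|" "$script"
}

# Usage: set_dataset vangogh2photo
```

Keeping the `.bak` copy makes it easy to diff against the original if the export still looks wrong.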

However, the results I got from feeding an image into it looked almost identical to the monet2photo ones. I compared them, confirmed that they were indeed the same, and had to go through every file individually, including `freeze_graph.sh` and `convert_model.sh`. Both were also hard-coded for monet2photo, so they had to be modified as well!
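A quicker way than reading each file to catch every leftover reference is to grep for the old dataset name. This is a minimal sketch, assuming the export scripts sit together in one directory; the function name `find_stale_refs` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list every line in the directory's .sh files that still
# mentions the old dataset (monet2photo by default), as file:line:text.
find_stale_refs() {
  local dir="${1:-.}" old="${2:-monet2photo}"
  # grep exits 0 if anything matched and 1 if the scripts are clean
  grep -rn --include='*.sh' -- "$old" "$dir"
}

# Usage: find_stale_refs . || echo "no monet2photo left"
```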

Here’s what the files look like after modification, in case you had dummy outputs as well:

`freeze_graph.sh`:

```bash
mkdir outputs
freeze_graph \
  --input_graph=../../train/outputs/checkpoints/vangogh2photo/cyclegan_tf_vangogh2photo.pb \
  --input_binary=true \
  --input_checkpoint=../../train/outputs/checkpoints/vangogh2photo/Epoch_199_400of400.ckpt \
  --output_node_names="a2b_generator/Tanh" \
  --output_graph=./outputs/frozen_cyclegan_tf_vangogh2photo.pb
```

`convert_model.sh`:

```bash
tensorflowjs_converter \
  --input_format=tf_frozen_model \
  --output_format=tensorflowjs \
  --output_node_names='a2b_generator/Tanh' \
  ./outputs/frozen_cyclegan_tf_vangogh2photo.pb \
  ../tfjs/models
```
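Because the dataset name is repeated in several paths across both scripts, one way to avoid another monet2photo mix-up is to derive every path from a single variable. This is a minimal sketch of a hypothetical `export_model` function wrapping the freeze and convert commands, assuming the same directory layout and defaulting to the `Epoch_199_400of400.ckpt` checkpoint. Setting `RUN=echo` only prints the commands, which is a cheap way to check the paths before a long freeze.

```bash
#!/usr/bin/env bash
# Hypothetical export_model: freeze + convert driven by one dataset name,
# so no path can silently stay on monet2photo.
# Assumes the directory layout used by freeze_graph.sh and convert_model.sh.
set -euo pipefail

export_model() {
  local dataset="${1:?usage: export_model DATASET [CKPT]}"
  local ckpt="${2:-Epoch_199_400of400.ckpt}"
  local ckpt_dir="../../train/outputs/checkpoints/${dataset}"
  local frozen="./outputs/frozen_cyclegan_tf_${dataset}.pb"
  # RUN=echo turns this into a dry run that only prints the commands
  local run="${RUN:-}"

  $run mkdir -p outputs
  $run freeze_graph \
    --input_graph="${ckpt_dir}/cyclegan_tf_${dataset}.pb" \
    --input_binary=true \
    --input_checkpoint="${ckpt_dir}/${ckpt}" \
    --output_node_names="a2b_generator/Tanh" \
    --output_graph="${frozen}"

  $run tensorflowjs_converter \
    --input_format=tf_frozen_model \
    --output_format=tensorflowjs \
    --output_node_names='a2b_generator/Tanh' \
    "${frozen}" \
    ../tfjs/models
}

# Usage: RUN=echo export_model vangogh2photo   # check the paths first
#        export_model vangogh2photo            # then run the real export
```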

Results

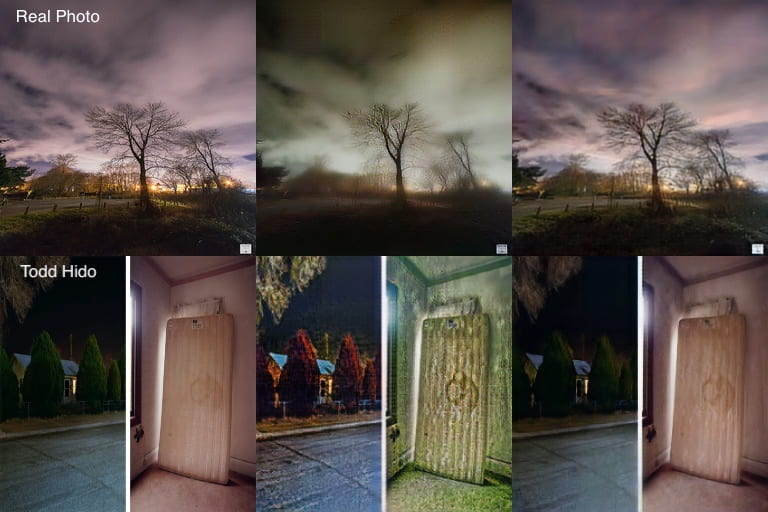

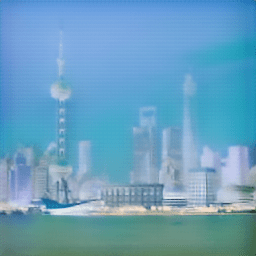

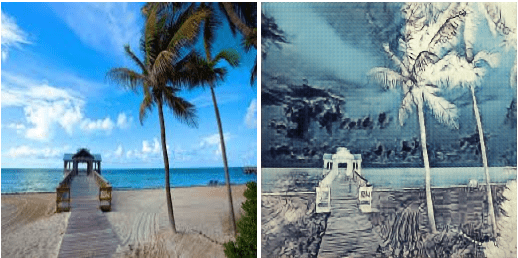

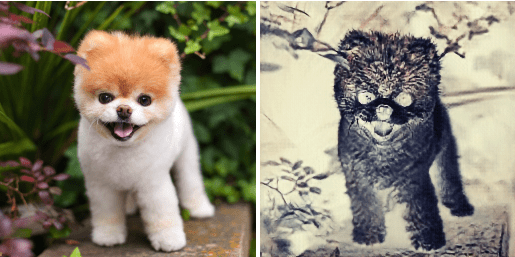

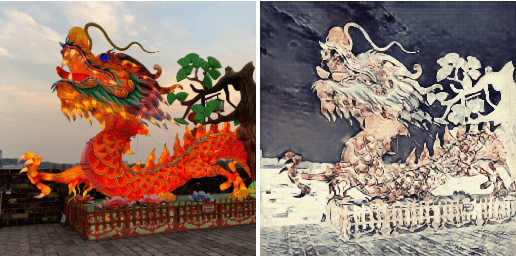

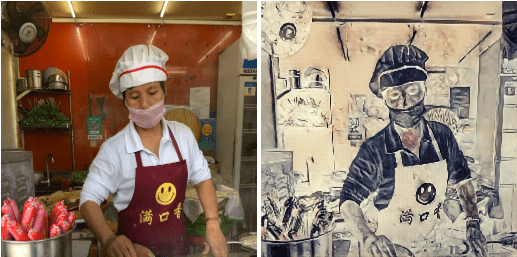

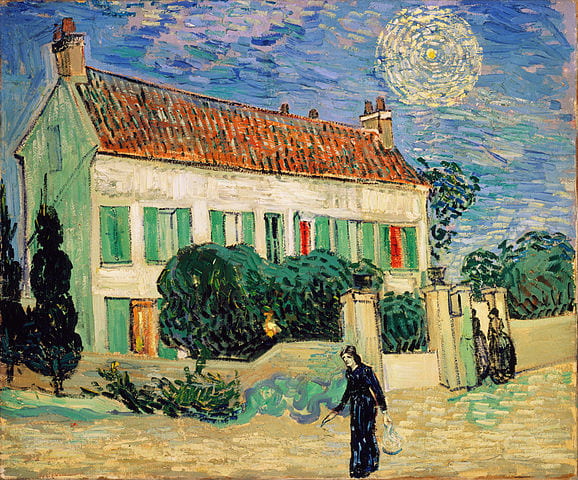

After modifying the files and using the sample code to run inference on a few different kinds of images, here’s the effect you actually get (not much Van Gogh in it, though):

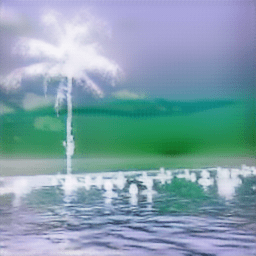

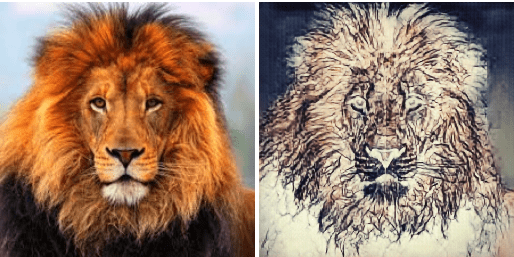

And just to get the goblin-ness off myself, I ran it several times, feeding each output back in as the next input. Here’s the series:

Turns out this CycleGAN was a negative filter after all.