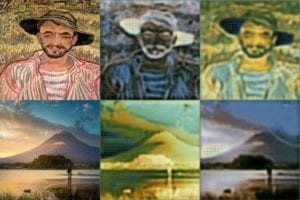

For this week’s CycleGAN project, I ended up using a pre-trained model because of several issues I ran into during training, as well as time constraints. I wanted to incorporate a few photos of Picasso’s cubist paintings and use them to stylize images of childhood cartoons, as I thought the result would be quite interesting. I expected the characters to retain some similarity to the original image (in color, form, etc.) while showing obvious changes in structure caused by the sharp edges of Picasso’s cubist style.

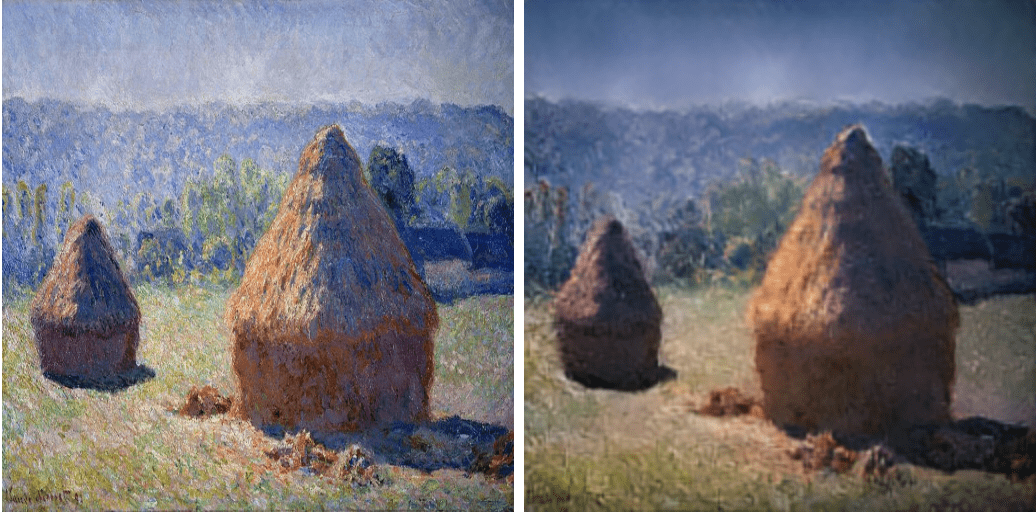

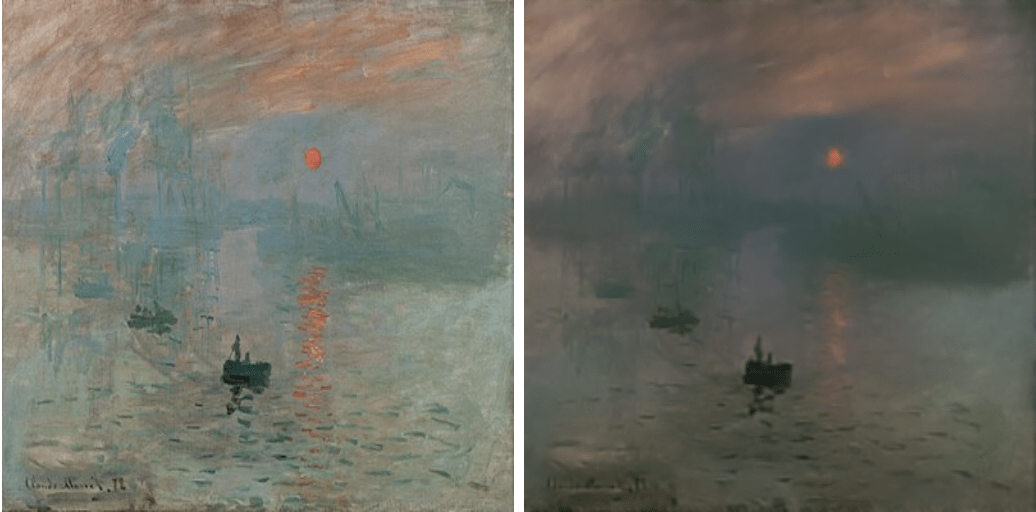

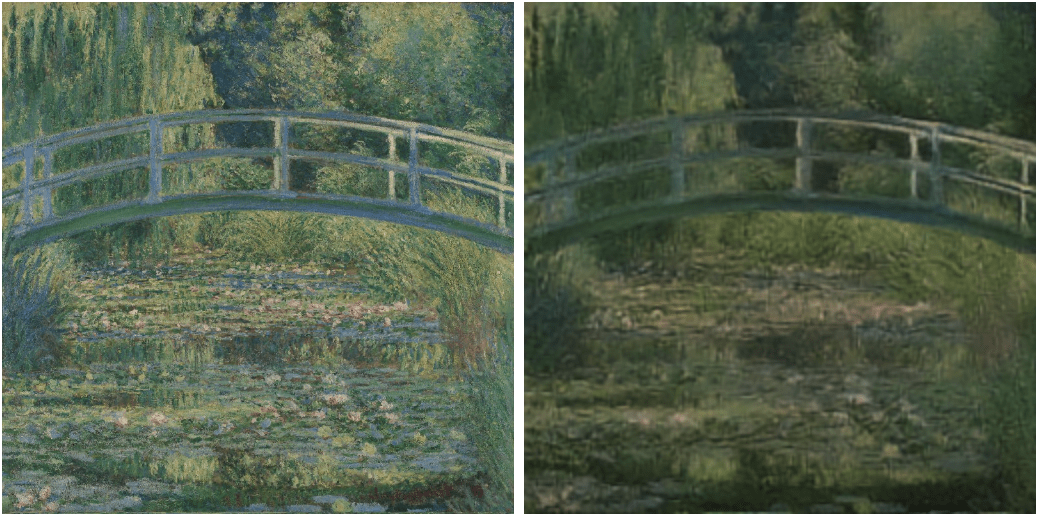

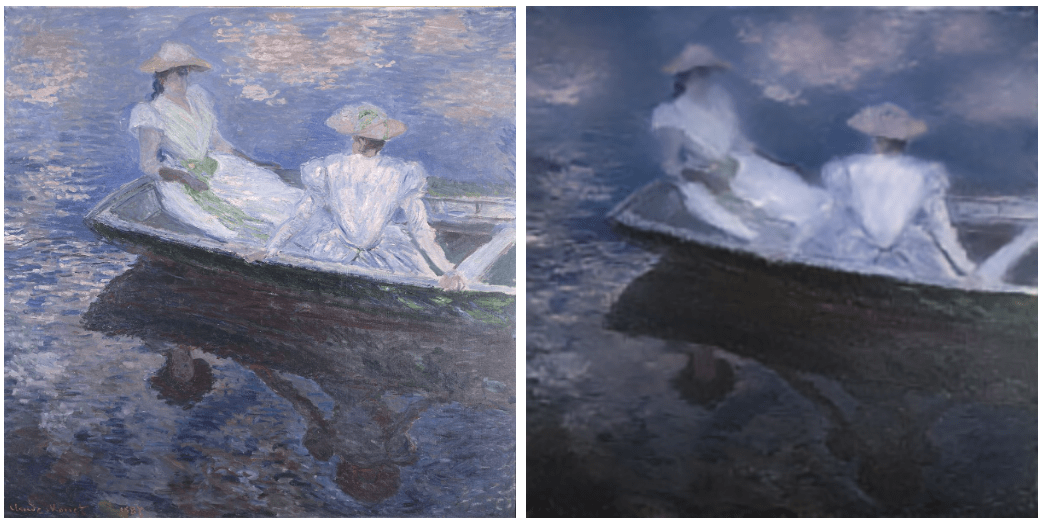

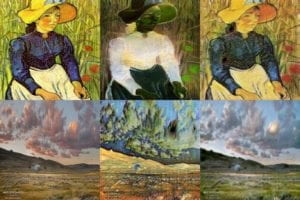

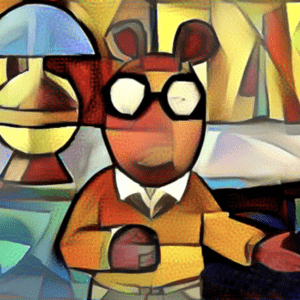

Here are the results:

I think the results turned out very clean-cut because the input images are cartoons and have well-defined edges. The Picasso cubist style is quite noticeable, although I suspect the results would be somewhat worse with photos of real-life scenes.