The chAIr project is a series of four chairs co-designed by human designers and an artificial intelligence program. The two artists, Philipp Schmitt and Steffen Weiss, wanted to reproduce the classic furniture style of the 20th century. They started by training a generative adversarial network (GAN) on 562 chair designs collected from Pinterest. The model focused only on the aesthetics of each chair and did not take any functional requirements into account.

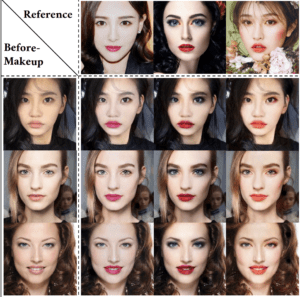

After training the model, they ran it to generate new sketches. These sketches look abstract and cannot be used directly as design drawings. Even so, the neural network has already captured features common to the chairs in its training set. By learning from the design classics of the past, the AI helps us generate new ones.

This is where human designers get involved. They can use the machine-generated sketches as a starting point for their own designs. They can also use their imagination and creativity to redesign the blurry parts of the AI-generated sketches and fix design concepts that do not make sense.

Finally, the designers finalize their designs and build prototypes. In this project, Schmitt and Weiss 3D-printed four chairs developed from their program's output. The whole design process starts with the machine, which then passes a basic design framework to the human designers. In the usual design process today, designers go first. This project reverses both the roles that human designers and the machine play and the order in which they act.

This collaborative approach simplifies the design process by using a trained AI to help stimulate our imagination. This kind of collaboration can save time for all kinds of designers, and it may sometimes even produce surprising results. Schmitt and Weiss believe that human-AI co-creativity could extend our imaginative capacities in the near future.

I really love this project because it is relevant to our daily lives and could be applied in many industries. I look forward to seeing more collaborative design like this in the future.

Designer: Philipp Schmitt & Steffen Weiss

Open Source: DCGAN, arXiv:1511.06434 [cs.LG], Torch Implementation

Project: The Chair Project – Philipp Schmitt

The chAIr project – generating a design classic – Steffen Weiss