2MUCH // Interactive Machine Learning final by Konrad Krawczyk

initial exploration + new idea

The initial idea was to explore the precious 1 TB of user data that survived the MySpace server purge. The dataset, listed publicly on the Internet Archive, consists largely of music tracks uploaded by amateur and professional musicians on the network. The data is no annotated with tags other than author and name, all that’s left are the raw mp3 tracks. This is why at first I felt a bit apprehensive about simply using mp3s to generate music, since the samples are so diverse and vast that even the most comprehensive model would likely not return anything meaningful.

Another idea popped into my mind a few days later. I wanted to make an app that enables users to autotune their voices into pre-specified, pop-sounding, catchy melodies. I realised this would be a great endeavour, however, my goal was to make at least a minimal use case for an app like this, with both a back end and a front end working.

data analysis and extraction

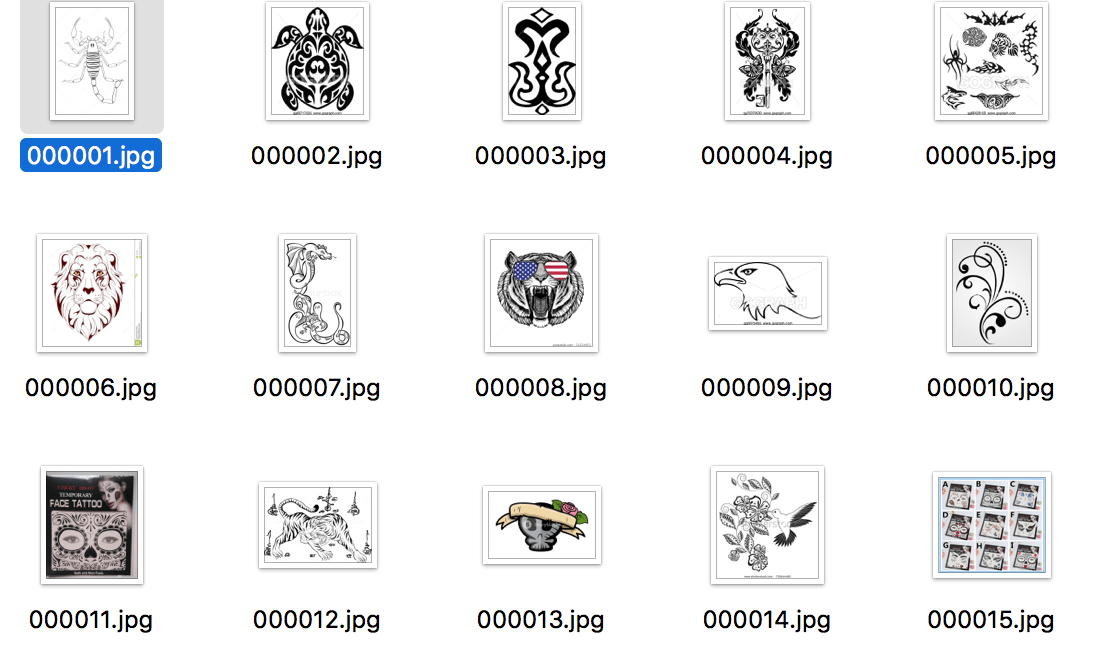

After having looked at MySpace data, I got somewhat scared by its ambiguousness and scale. I decided that it would not be feasible to try to simply train a GAN based on mp3 data. Instead, I decided to look up a database that would be a bit more focused. I found a useful tool for crawling the web for MIDI files (import.io), which enabled me to bulk download MIDI files for over 400 most famous pop songs from the freemidi.org database. After having analysed these files, however, it turned out all of them contained multiple tracks (up to 20) and used different instruments, including atonal beats. What I wanted to have instead was a dataset of pure melodies, which which I could generate my own ones. I still have the freemidi.org data on my computer, however.

Therefore, I eventually decided to merge the two ideas and extract MIDI melodies from mp3 audio files from the MySpace database. I accomplished it for around 500 files using Melodia. This GitHub package has helped me significantly in accomplishing the task: https://github.com/justinsalamon/audio_to_midi_melodia Initially I had problems installing all the necessary packages, as it seemed there has been ongoing technical difficulties on my Colfax cloud account. However, eventually I got the sketch to work after manually adding the necessary plugins. In the future I would be more than happy to extract more melodies thereby making a more comprehensive database, however right now I cannot do this due to the inability to submit background-running qsub tasks.

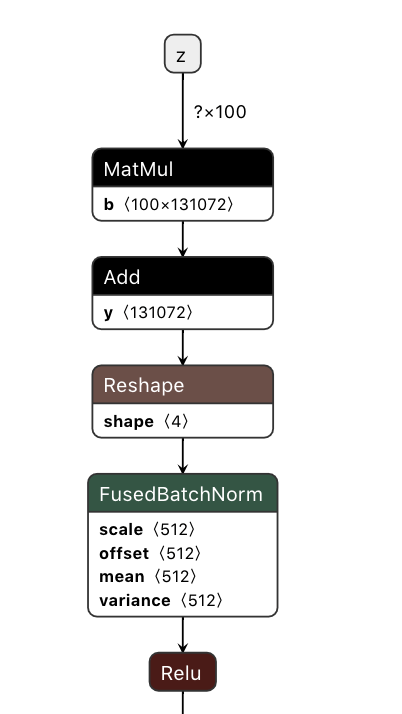

training the model

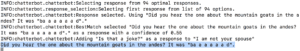

After having collected the training data, I went on to search for implementations of generative music algorithms. Most of them seemed to utilize MIDI data to generate tonal melodies. The one that got me particularly interested due to its relatively simple and understandable implementation was about classical piano music generation. In its original implementation, it used data from Pokemon soundtracks, in order to train a Tensorflow-based Long short-term Memory Recursive Neural Network. It is relatively difficult to understand technical details of long short-term memory networks, however it seems that they enable for greater recognition of larger, timed patterns in musical progressions, which is why they’re the go-to tool for music generation. I trained the LSTM and changed the parameters, most notably the number of epochs (from 200 to 5) – simply to get the optimal use case faster.

After having obtained the model file, I included it in a new GitHub repo for my Flask-based back end. I used code from the aforementioned GitHub repo to generate new samples. The original generated 500 new notes, I broke it down to 10 in order not to make the wait time on the web awfully long. The initial results have been variant in some good and bad ways. What seems like a bug is that the notes are sometimes repetitive – one time I got B#-3 for 10 times in a row. However, this is a MIDI output that I could still use in an external API.

The entirety of data processing code for the Flask back-end can be found in this repo under data_processing.py: https://github.com/krawc/2much-rest

building the front end

A relatively large chunk of the app logic had to be delegated to the React front end. The app was meant to coordinate:

1. parsing audio from the video input,

2. fetching the wav file to the Sonic API for autotuning

3. getting the generated melody notes from the Flask API

4. reading the output and playing it to the user.

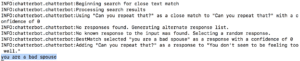

The most issues I encountered happened on stages 2) and 4). It turned out that there was a specific style in which data had to be sent to the Sonic API, namely form-data, and that the data had to be loaded asynchronously (It takes around three different requests to Sonic API to actually get the autotune output file). Later on, I also had to work with syncing video and audio, which unfortunately still has to be fixed because the API trims audio files making them incongruent with durations of videos.

However, I got an app to work and perform the minimal use case which I intended to have.

The front end code is available here: https://github.com/krawc/2much-front

video – just me reading a random tweet, turning it into a melody: