After this week’s class, I continued to explore style transfer by training my own model and running inference with ml5. I followed the instructions for training on Intel DevCloud and ran into several difficulties before I could successfully start the training. Since my model is still training, I completed the inference part with pre-trained models in the meantime. It was quite informative to look at the differences between the outputs of the different models, and once my own model is finished, I would like to compare its results as well.

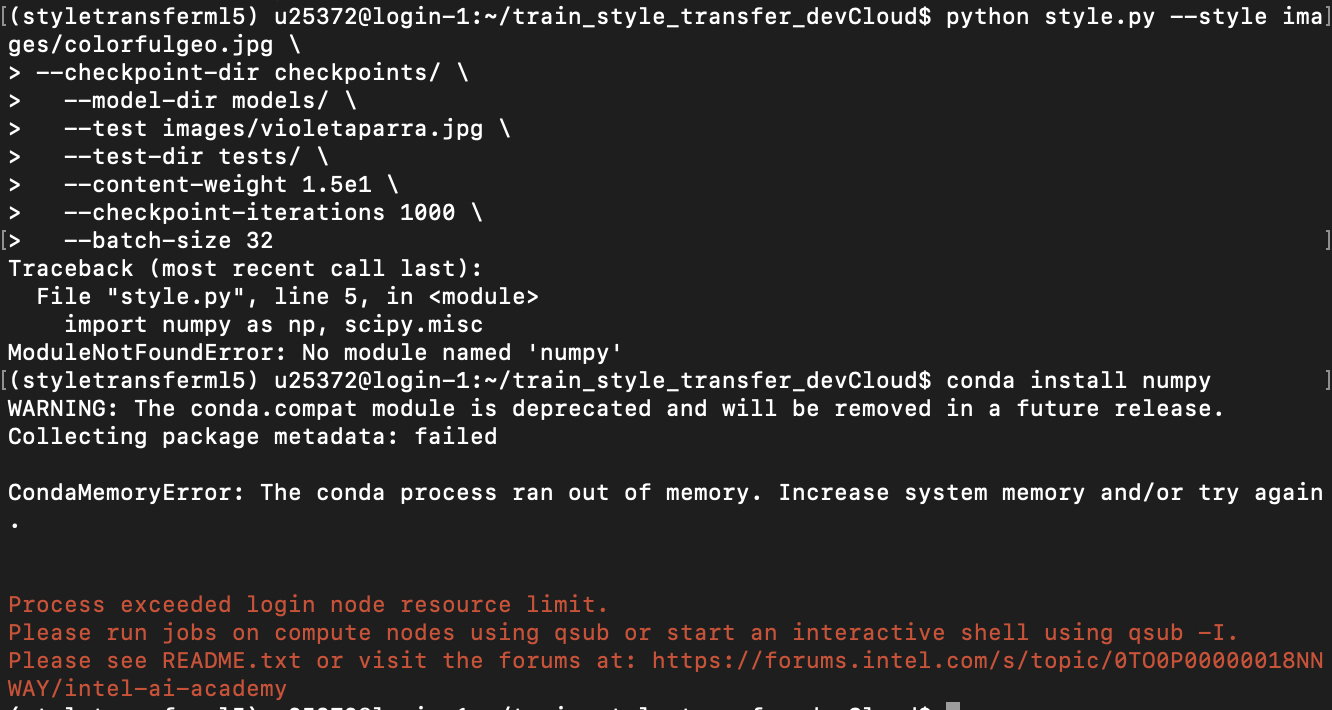

Training:

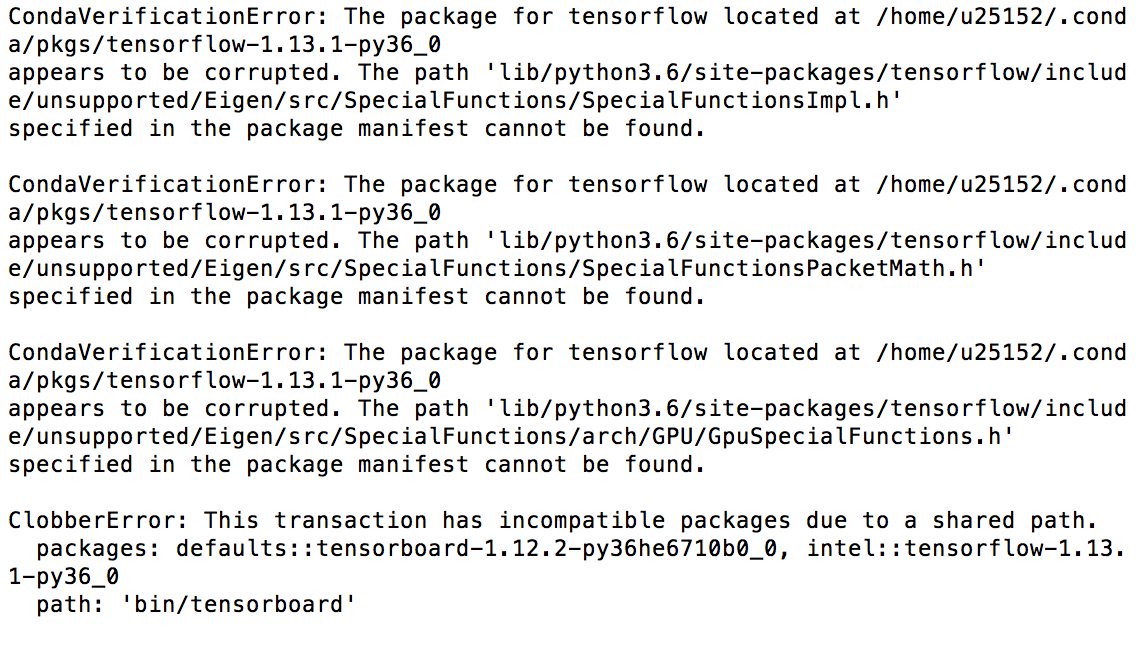

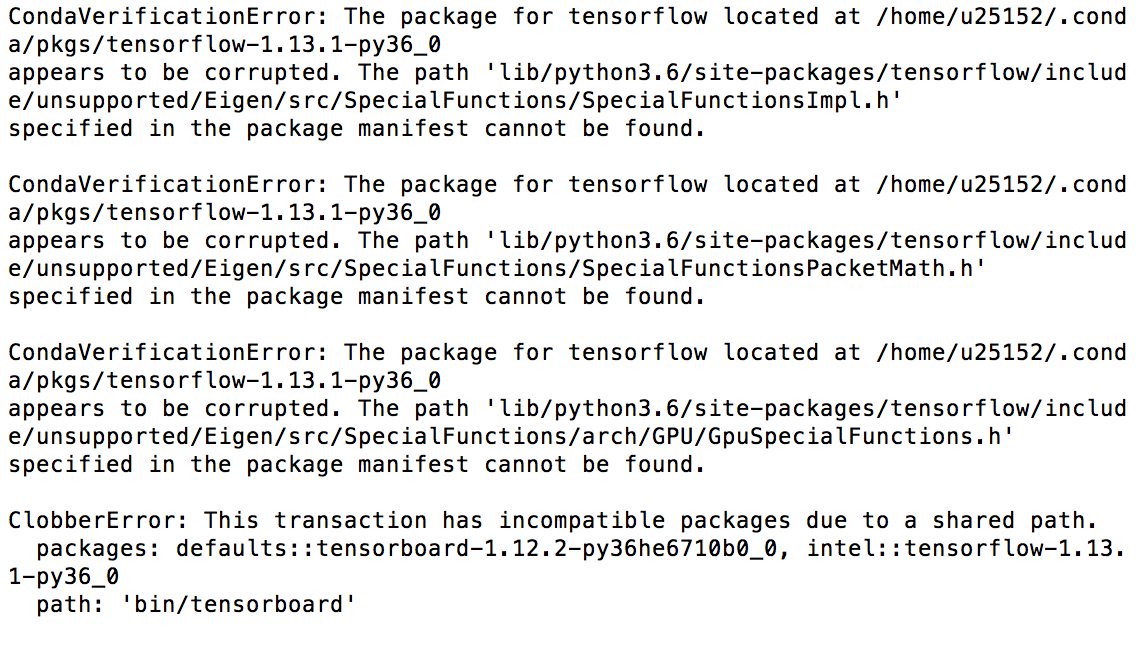

At the very beginning, I had trouble just setting up the environment. I had already installed other TensorFlow packages while working on the midterm project, and the packages that environment.yml specifies seemed to conflict with the existing ones. I suspect this was caused by having both old and new versions of the same packages installed.

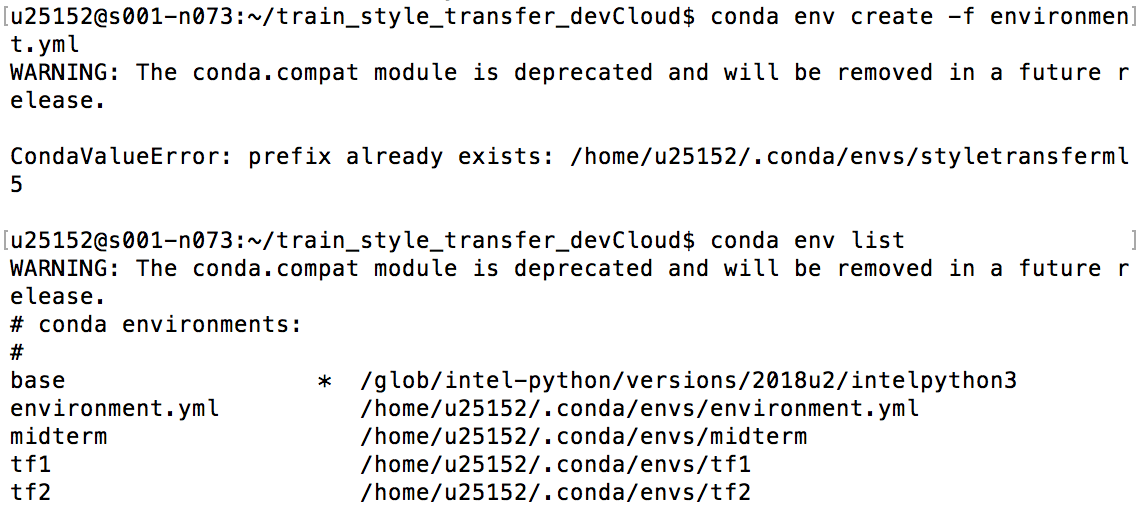

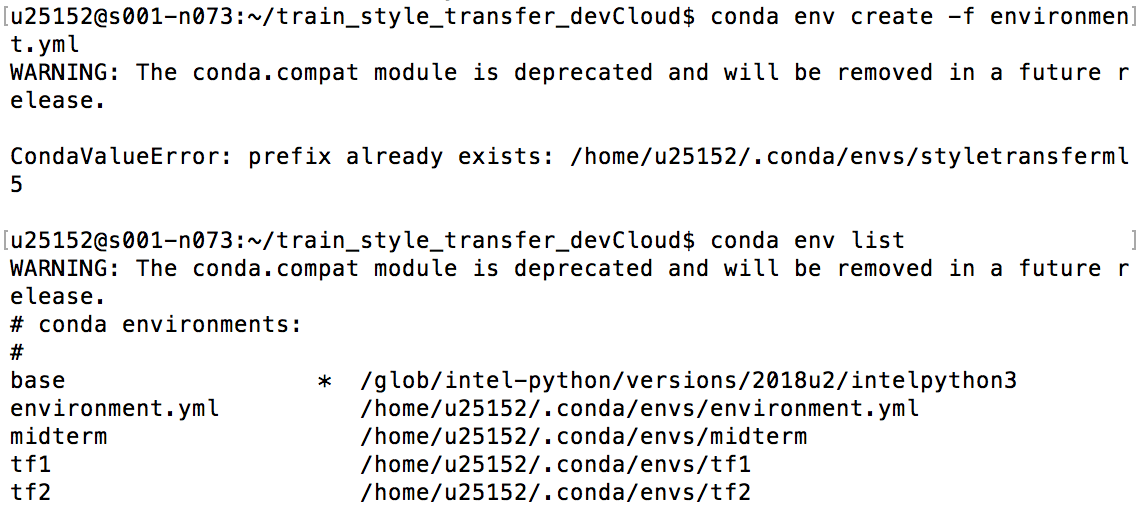

In addition, I kept getting an error saying that the prefix for the conda environment already existed, even though the environment did not appear in `conda env list`. The error persisted no matter how many times I ran `conda remove` on the environment and tried again. In the end, I edited the environment.yml file to change the path.

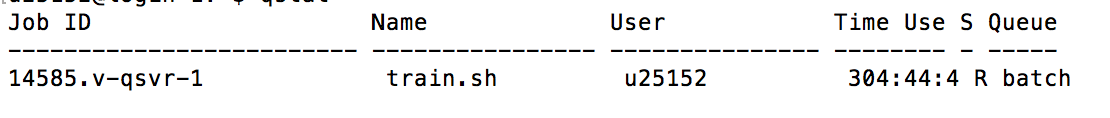

I was stuck on this for a while until Aven pointed me to the `conda clean` command. I removed unused packages and caches and also re-cloned the GitHub repository. After solving a few other issues, I was finally able to create a new virtual environment for training this style transfer model.

Downloading the dataset was also an unpleasant experience. Five or six times, the download stopped partway through and I had to restart it from the beginning. Eventually, the download ran without interruption, and it took about 3 hours to complete.
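Since every failed download started over from zero, resuming from the partial file instead of restarting could save a lot of time. The following is a minimal Python sketch of that idea, not something I used for the download above. It assumes the dataset's server honors HTTP `Range` requests, and `range_header_for` and `resume_download` are hypothetical helper names of my own, not part of the training repository.

```python
import os
import urllib.error
import urllib.request


def range_header_for(dest: str) -> dict:
    """Return a Range header asking only for the bytes not yet on disk."""
    if os.path.exists(dest):
        have = os.path.getsize(dest)
        if have > 0:
            return {"Range": f"bytes={have}-"}
    return {}


def resume_download(url: str, dest: str, chunk_size: int = 1 << 20) -> None:
    """Download url to dest, continuing from a partial file if one exists.

    Assumption: the server supports HTTP Range requests. If it ignores the
    header and answers 200 instead of 206, the file is rewritten from scratch.
    """
    request = urllib.request.Request(url, headers=range_header_for(dest))
    try:
        response = urllib.request.urlopen(request)
    except urllib.error.HTTPError as err:
        if err.code == 416:
            # 416 Range Not Satisfiable: the local file is already complete.
            return
        raise
    with response:
        # 206 Partial Content means the server honored the Range header,
        # so new bytes are appended; otherwise the file is overwritten.
        mode = "ab" if response.status == 206 else "wb"
        with open(dest, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

If the connection drops, calling `resume_download(url, "dataset.zip")` again, with `url` being whatever link the training instructions use, should continue from the end of the partial file rather than from zero.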

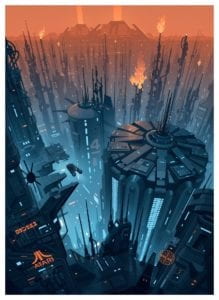

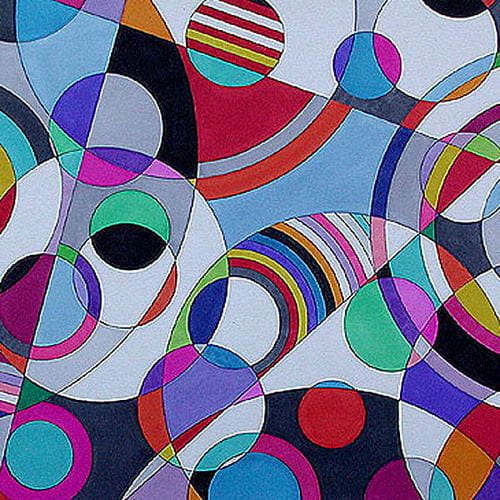

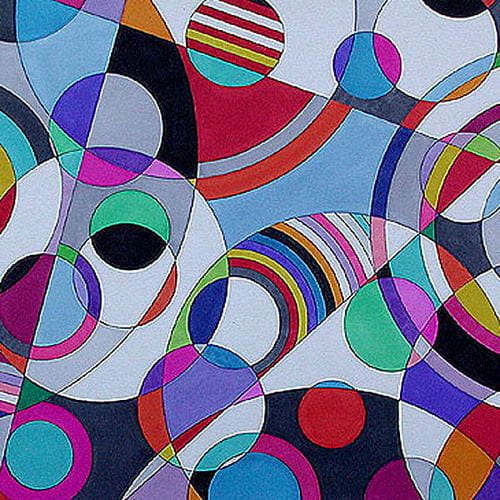

After solving all these problems, I was able to start training. I deliberately chose a style image with clear geometric shapes and contrasting colors, hoping to get a strong style transfer effect. The job is still running and will take several more hours to complete.

Inference:

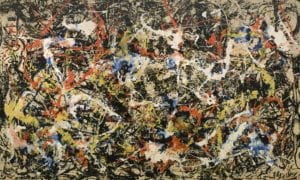

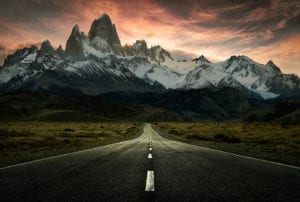

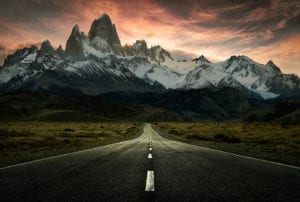

I performed inference with several pre-trained models. The input image is here:

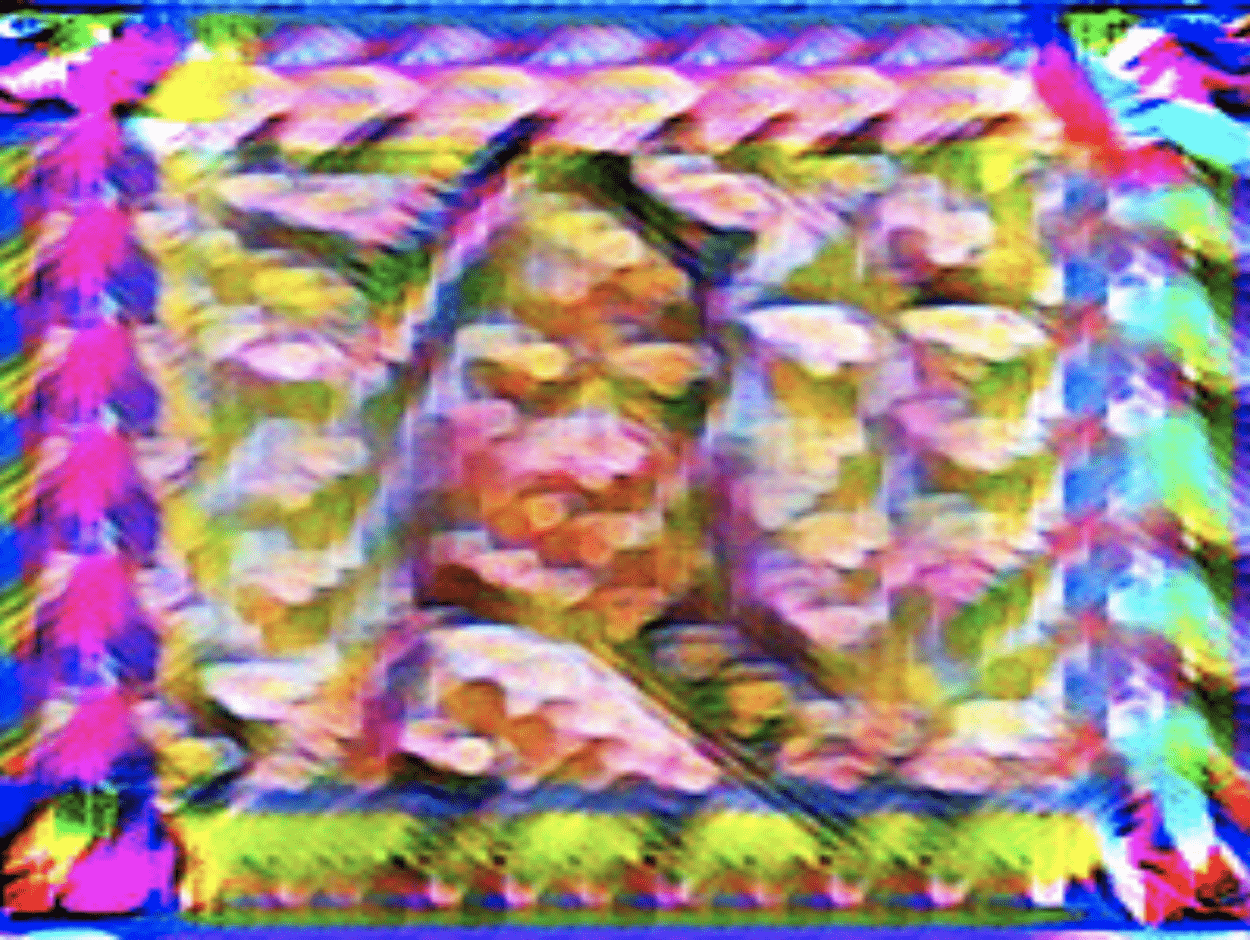

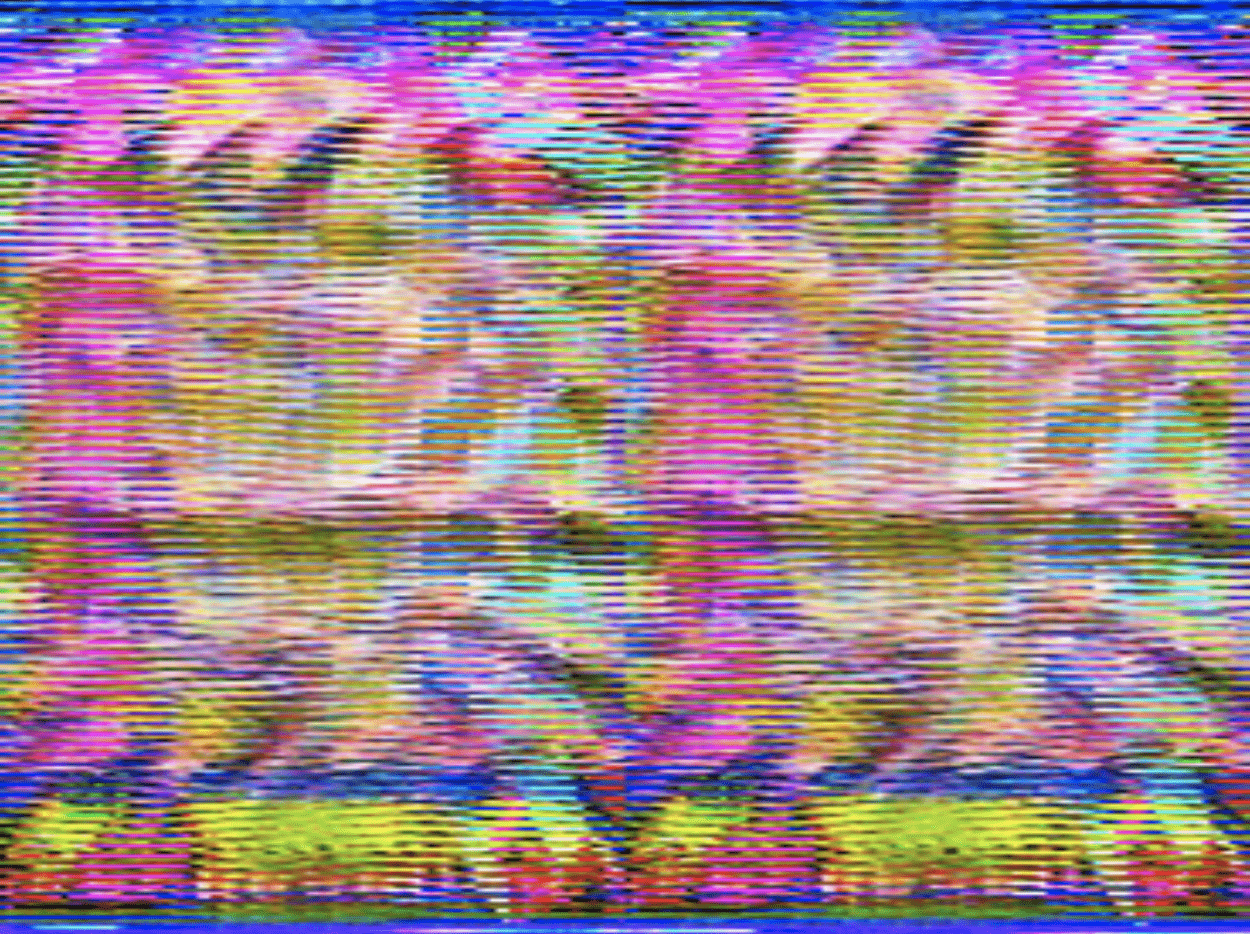

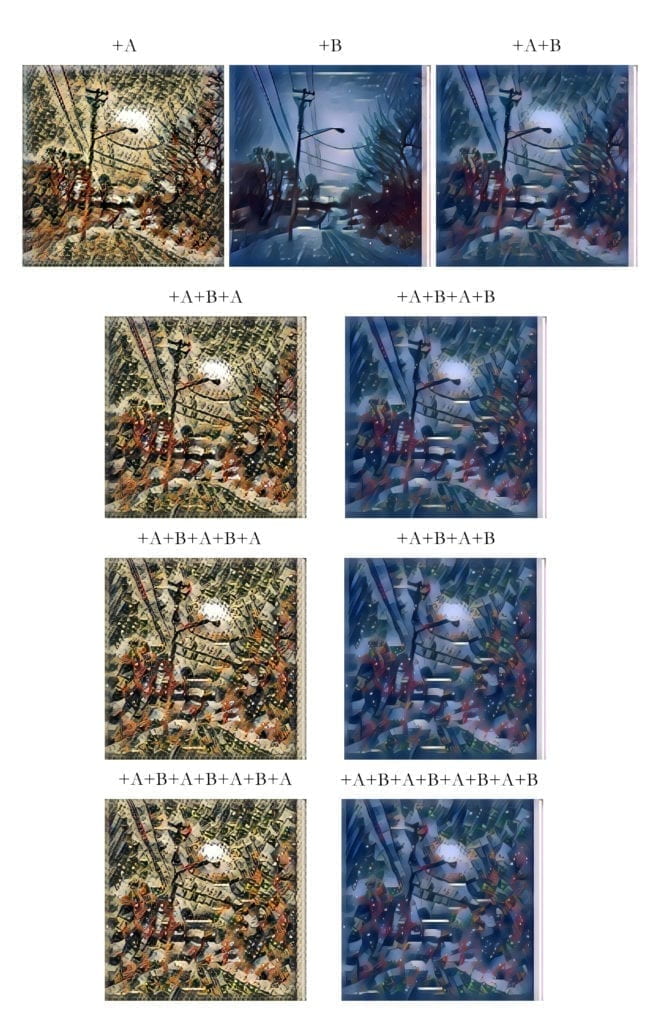

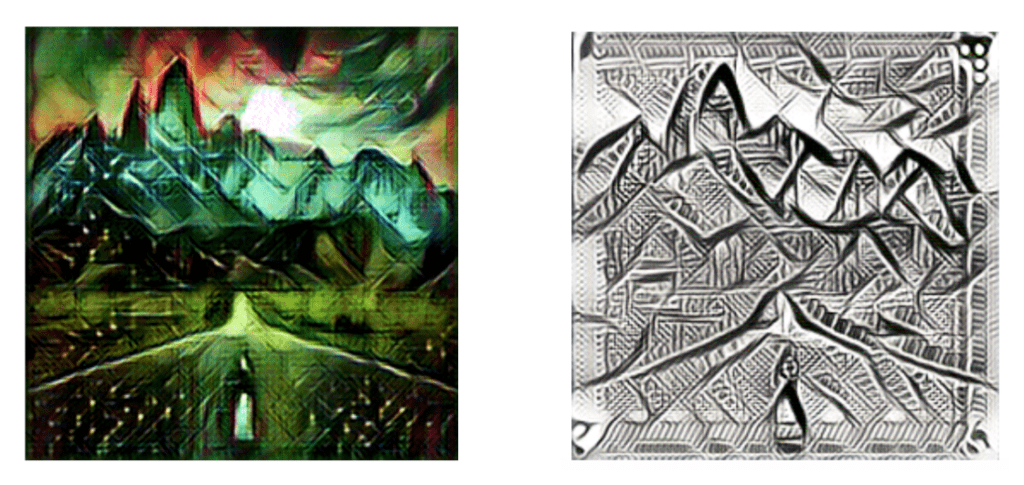

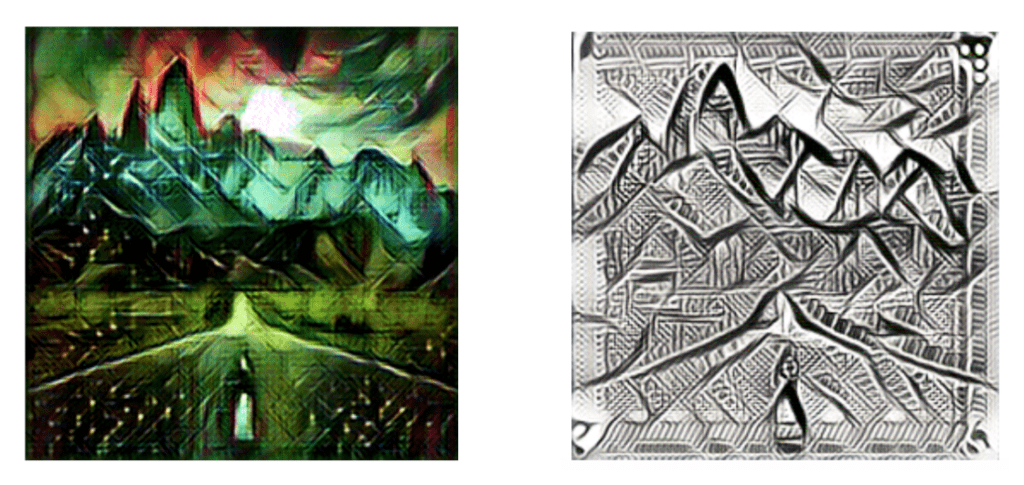

The outputs are shown above.

The models used were waves, zaha, matta and mathura. I thought the results turned out very well, and each style transferred convincingly onto the input image. In all four output images, you can also see that the texture of the input has been transformed. I look forward to applying my own style once my model has finished training.
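One way to see why texture carries over so strongly is the Gram-matrix style representation commonly used in neural style transfer, assuming (without having checked its source) that the ml5 training code uses this kind of style loss. The plain-Python sketch below, with hypothetical function names, computes a Gram matrix from a feature map stored as channels × positions and measures the distance between two of them. Because every entry sums over all positions, rearranging the positions leaves the Gram matrix unchanged, so this representation captures texture statistics rather than where things sit in the image.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Gram matrix of a feature map given as C channels x N spatial positions.

    Entry (i, j) is the product of channels i and j summed over all
    positions, divided by C * N (one common normalization choice).
    """
    c = len(features)
    n = len(features[0])
    return [
        [sum(features[i][k] * features[j][k] for k in range(n)) / (c * n) for j in range(c)]
        for i in range(c)
    ]


def style_distance(a: Matrix, b: Matrix) -> float:
    """Squared Frobenius distance between the Gram matrices of two feature maps."""
    ga, gb = gram_matrix(a), gram_matrix(b)
    return sum((x - y) ** 2 for row_a, row_b in zip(ga, gb) for x, y in zip(row_a, row_b))


if __name__ == "__main__":
    f = [[1, 0, 2], [0, 1, 1]]
    # Same per-position values, with the positions reordered.
    shuffled = [[2, 1, 0], [1, 0, 1]]
    print(style_distance(f, shuffled))  # prints 0.0
    # Different per-position values give a nonzero distance.
    print(style_distance(f, [[1, 1, 1], [0, 0, 0]]))
```

In a real model the feature maps would come from a pretrained convolutional network rather than hand-written lists, but the same property holds: matching Gram matrices pushes the output toward the style image's textures and color correlations while the content loss keeps the layout of the input.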

Overall, style transfer creates a unique artistic effect. It would be interesting to explore the subject further and potentially create new styles that stem from this type of machine learning approach.