Inference on Pre-Trained Models:

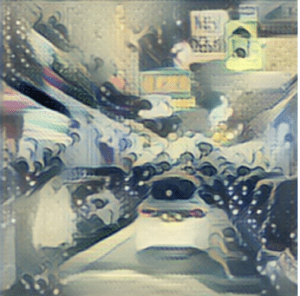

To start off, I found a simple image of a street in Hong Kong and experimented with the pre-trained style transfer models in ml5.js.

Input:

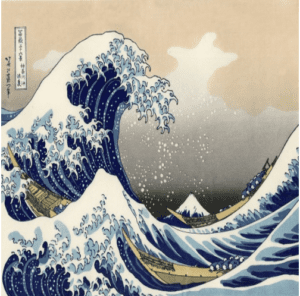

Style:

Output:

As you can see from the output, the style transfer is quite amazing: the wave texture from the style image is carried over to the input image, while the overall structure (the cars, signs, and roadway) stays intact. Personally, I am still not sure how this pre-trained model takes the curling wave texture, which appears only on specific waves in the style image rather than throughout it, and applies it so evenly across the input image.
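As far as I understand it, part of the answer lies in how "style" is measured during training. Fast style transfer networks (the approach ml5.js's style transfer models are, to my knowledge, trained with) use a style loss that compares Gram matrices of convolutional feature maps. A Gram matrix records which features occur together, summed over every position in the image, and discards where they occur, so the network is rewarded for reproducing the wave texture's statistics everywhere rather than copying where the waves sit. The minimal sketch below illustrates this with tiny made-up feature maps (hypothetical values, not real network activations): moving the same texture to a different location leaves the Gram matrix unchanged.

```python
# Minimal sketch of the Gram-matrix "style" statistic used in neural style transfer.
# The feature values below are made-up toy numbers, not real VGG activations.
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """features[c][p] = activation of channel c at spatial position p.

    Returns G[i][j] = (sum over positions p of features[i][p] * features[j][p]) / num_positions.
    Summing over positions discards *where* a pattern occurs and keeps only
    *which* channels fire together.
    """
    num_channels = len(features)
    num_positions = len(features[0])
    return [
        [
            sum(features[i][p] * features[j][p] for p in range(num_positions)) / num_positions
            for j in range(num_channels)
        ]
        for i in range(num_channels)
    ]


def style_distance(a: Matrix, b: Matrix) -> float:
    """Sum of squared differences between two Gram matrices (a simple style loss)."""
    ga, gb = gram_matrix(a), gram_matrix(b)
    return sum((x - y) ** 2 for row_a, row_b in zip(ga, gb) for x, y in zip(row_a, row_b))


# Two toy channels ("curve edge", "foam") over 4 spatial positions.
# Here the texture is concentrated at positions 0 and 1 ...
waves_on_left = [
    [1.0, 1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0],
]
# ... and here the same activations appear at positions 2 and 3 instead.
waves_on_right = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.5, 0.5],
]
# No texture at all, for contrast.
no_texture = [[0.0] * 4, [0.0] * 4]

print(gram_matrix(waves_on_left))                  # [[0.5, 0.25], [0.25, 0.125]]
print(style_distance(waves_on_left, waves_on_right))  # 0.0: same "style", different location
print(style_distance(waves_on_left, no_texture))      # 0.390625: genuinely different style
```

Because the style loss only sees these position-free statistics, a texture that appears on just a few waves can still be matched by painting it wherever the content image has suitable edges.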

Training My Model:

First, I followed the instructions in the PowerPoint and downloaded the dataset. The process wasn’t too bad; it took only about 2 hours:

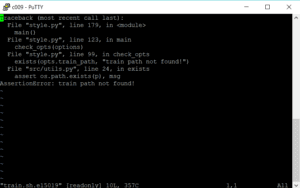

However, after acquiring the dataset, I ran into a few errors during the training step.

It turned out there were some issues with my directory and image paths, so I had to spend some time fixing them.
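Path mistakes like these can be caught before a long training run starts with a quick pre-flight check. This is a minimal sketch, not part of the course's training code: the function name `check_training_paths` and the paths `data/train` and `images/car_front.jpg` are hypothetical placeholders. One common culprit is that relative paths are resolved against the directory the script is launched from, not the directory the script lives in, so the check reports absolute paths to make that visible.

```python
# Hypothetical pre-flight check for a style transfer training run.
# The paths used below are placeholders; substitute whatever the
# training instructions actually ask for.
from pathlib import Path
from typing import List

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def check_training_paths(train_dir: str, style_image: str) -> List[str]:
    """Return human-readable problems; an empty list means the paths look OK."""
    problems: List[str] = []

    train_path = Path(train_dir).expanduser()
    if not train_path.is_dir():
        # resolve() shows the absolute path, exposing working-directory mix-ups.
        problems.append(f"training directory not found: {train_path.resolve()}")
    else:
        # Count image files directly inside the folder (subfolders are not searched).
        num_images = sum(
            1 for f in train_path.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
        if num_images == 0:
            problems.append(f"no .jpg/.jpeg/.png files directly inside {train_path.resolve()}")

    style_path = Path(style_image).expanduser()
    if not style_path.is_file():
        problems.append(f"style image not found: {style_path.resolve()}")
    elif style_path.suffix.lower() not in IMAGE_SUFFIXES:
        problems.append(f"style image has an unexpected extension: {style_path.name}")

    return problems


if __name__ == "__main__":
    # Placeholder paths, relative to the folder you launch training from.
    for problem in check_training_paths("data/train", "images/car_front.jpg"):
        print("PROBLEM:", problem)
```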

Idea:

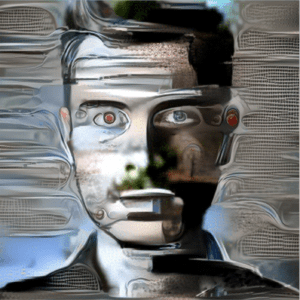

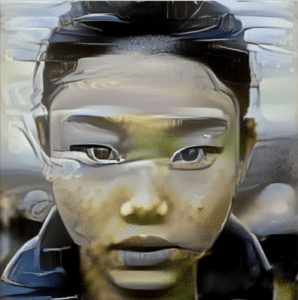

I wanted to experiment with style transfer using faces as the input image, since human faces are among the most prominent and recognizable features to us. Interestingly, I came across an article about the strange tendency humans have to see ‘faces’ in inanimate objects, especially cars. Personally, I’ve always thought that the fronts of cars have a certain personality to them (e.g., some cars look happy, others sleepy). The article describes a study published in the Proceedings of the National Academy of Sciences in which researchers had auto experts look at the fronts of cars and found that the brain region involved in facial recognition, the fusiform face area, was activated. I found this especially interesting, since it means car ‘faces’ were triggering essentially the same response in the brain as human faces.

Therefore, for my style transfer project, I wanted to use human faces as the input images and a car front as the style image. I thought it would be fascinating to see how the model would blend the perceived style of the car’s ‘face’ into the human face. Would the output simply be a photo with the machine-like texture of the car spread across the person? Or would the output face look more like a cyborg, with certain features of the car fused into the face, making it seem part human, part machine?

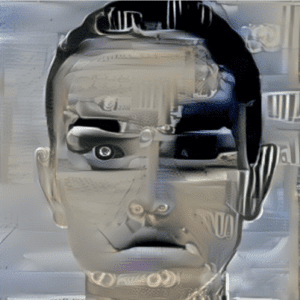

Results:


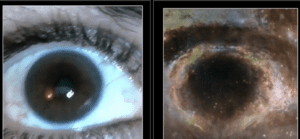

Judging from these results, I would say my first run, with the male test subject, turned out the best. I think this may be because the prominent circles on the car translated over to the man’s pupils, giving him the ‘cyborg’-like feel I had hoped for. Interestingly, the headlights of each car ended up in the eyes of the human faces, which I thought was quite fitting. The model also took the grid-like patterns on the fronts of the cars and spread them across the backgrounds of the output images (most noticeably with the male subject and the third female subject). Overall, I found the output images quite striking; the subjects really did look like ‘cyborgs’. It would also be interesting to see human faces styled with other face-like objects.

Sources:

https://www.smithsonianmag.com/smart-news/for-experts-cars-really-do-have-faces-57005307/