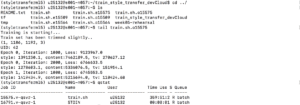

This week I played with Deep Dream. I focused on tuning four parameters (step, num_octave, octave_scale, and the number of iterations) to see how each one affects the output. I also tested it on several different images.

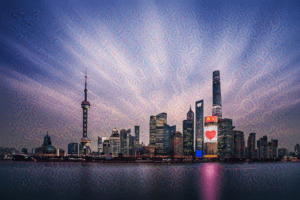

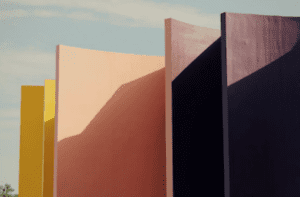

I started by testing with this image:

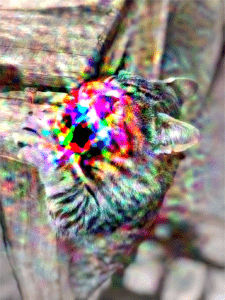

Here are the results I got with step = 0.01, num_octave = 3, octave_scale = 1.4, and max_loss = 10. The following images were generated with 40, 80, 120, 160, 200, and 240 iterations.

After the first 40 iterations, some detailed structures had already appeared in the background. On a closer look, these structures have a round center and colors such as green, red, and blue. As the number of iterations increases, the structures become clearer. The dark areas of the image are more likely to be turned into round shapes, and the texture and outlines of the original image change slightly. After 200 iterations, the image no longer changes much. The colors and structures in the background have been reinforced, and in some parts of the image very vague shapes of animal faces, such as dogs and birds, can be observed.

Then I changed the step from 0.01 to 0.06. The structures created by the neural network became more detailed, but it also took longer to generate any meaningful shape.
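To make the roles of step, max_loss, and the iteration count more concrete, here is a minimal sketch of a gradient ascent loop. It assumes an implementation along the lines of the common Keras Deep Dream example, which uses the same parameter names; I am not claiming this is exactly the code behind the images above. The function `toy_loss_and_grad` is a hypothetical stand-in for the network (it just uses the mean squared pixel value as the loss), so the sketch runs with NumPy alone.

```python
import numpy as np


def toy_loss_and_grad(x):
    """Hypothetical stand-in for the network: loss is the mean squared pixel value."""
    loss = float(np.mean(x ** 2))
    grad = 2.0 * x / x.size
    # Normalize the gradient so that `step` has a comparable effect on any image.
    grad = grad / max(float(np.mean(np.abs(grad))), 1e-7)
    return loss, grad


def gradient_ascent(x, iterations, step, max_loss=None):
    """Take up to `iterations` ascent steps; stop early once the loss exceeds max_loss."""
    x = x.copy()
    steps_taken = 0
    for _ in range(iterations):
        loss, grad = toy_loss_and_grad(x)
        if max_loss is not None and loss > max_loss:
            break  # the image is already "dreamed" enough
        x += step * grad  # move the pixels in the direction that raises the loss
        steps_taken += 1
    return x, steps_taken


rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))  # tiny random "image", for illustration only
dreamed, steps_taken = gradient_ascent(image, iterations=40, step=0.01, max_loss=10)
```

In this sketch, step scales how far the pixels move on each iteration, the iteration count caps how many updates are applied, and max_loss ends the loop early once the loss is high enough. With a real network the loss would come from layer activations instead of raw pixel values.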

Changing the number of octaves from 3 to 6 yielded some very interesting results. It seems that this change enlarged the structures created by the neural network and spread the color to other parts of the image. Here is what happens when the number of octaves is changed to 10:

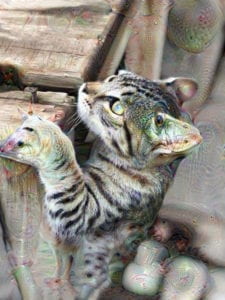
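One possible reason more octaves lead to larger structures is the way the image sizes are scheduled. The sketch below assumes the schedule used in the common Keras Deep Dream example: each additional octave shrinks the image by another factor of octave_scale, and processing starts at the smallest size. The 600 x 800 input size and the function name `octave_shapes` are assumptions for illustration only.

```python
def octave_shapes(original_shape, num_octave, octave_scale):
    """Return the image shapes from the smallest octave up to the original size."""
    shapes = [tuple(original_shape)]
    for i in range(1, num_octave):
        # Octave i is the original size divided by octave_scale ** i.
        shapes.append(tuple(int(dim / (octave_scale ** i)) for dim in original_shape))
    return shapes[::-1]  # process the smallest image first


# Compare the smallest octave for the three settings tried in this post.
for n in (3, 6, 10):
    smallest = octave_shapes((600, 800), n, 1.4)[0]
    print(n, "octaves -> smallest shape", smallest)
```

Under these assumptions, 10 octaves at a scale of 1.4 start from an image roughly 20 times smaller on each side than the original. Patterns formed at that size are upscaled many times before the final pass, which would be consistent with the enlarged structures seen above.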

I also found a website called Deep Dream Generator. It lets users upload their images and generates output images based on Deep Dream. Here are the results I got using this website.

It really gave me a clearer understanding of how Deep Dream tries to recognize image patterns. It always tries to occupy a part of the image and generates a figure it already knows based on the texture and shape of that part. In the first three pictures, it recognized shapes such as a snake and a zebra. For the last one, I switched to another layer. As a result, the patterns recognized by the neural network became totally different.

I keep wondering why Deep Dream always tries to add eye-like figures to the image. My guess is that the training dataset contains a lot of animal images, especially faces, and animal faces usually contain eyes.

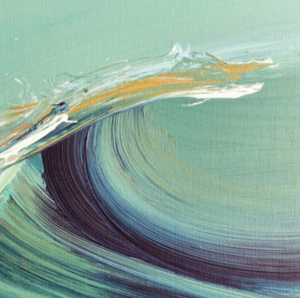

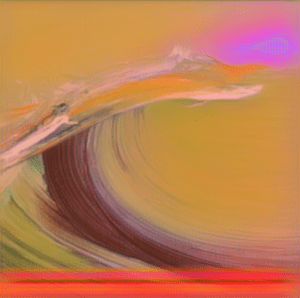

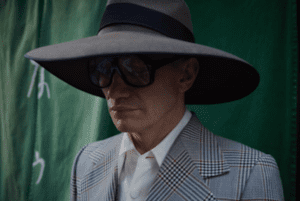

Here are the results I got by applying Deep Dream to other pictures:
