To begin my experiment with Deep Dream, I worked with the images we used in class. I started by changing three values: max loss, iterations, and step. Max loss and step had the most visible effect on the output, so I focused on adjusting those two. Because my CPU is limited, I kept the max loss value low. Here are some results:

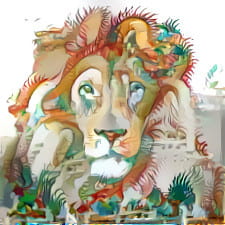

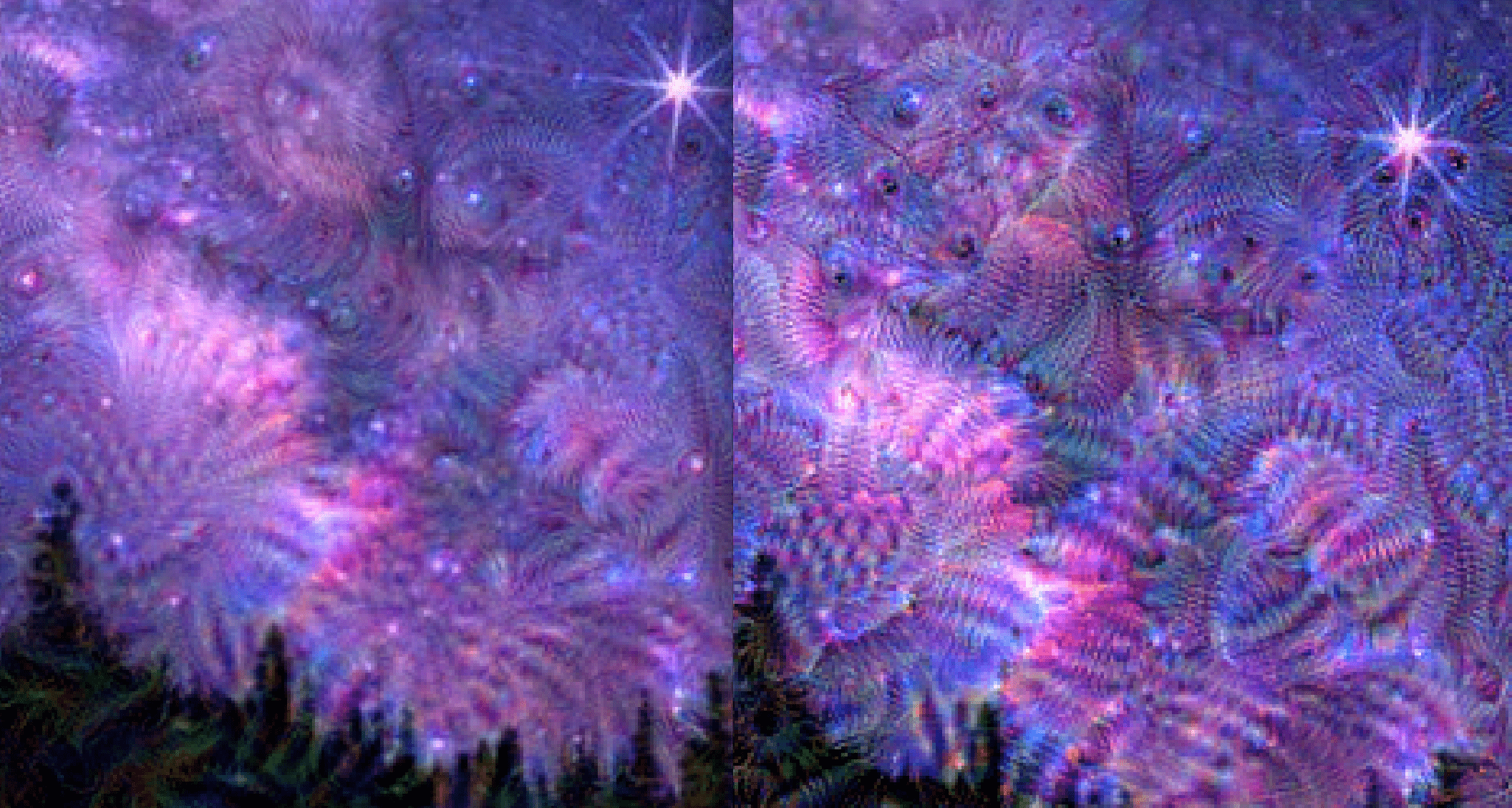

(max loss: 20):

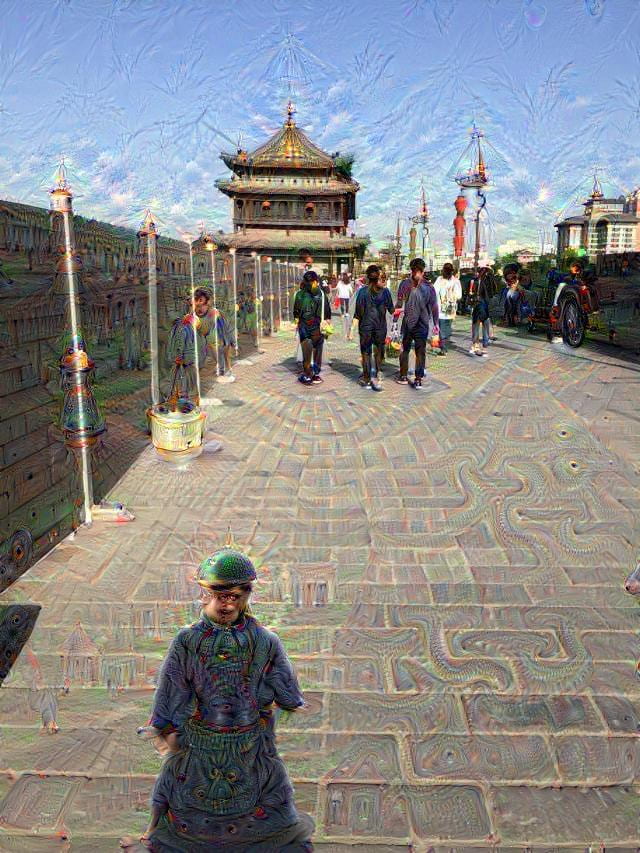

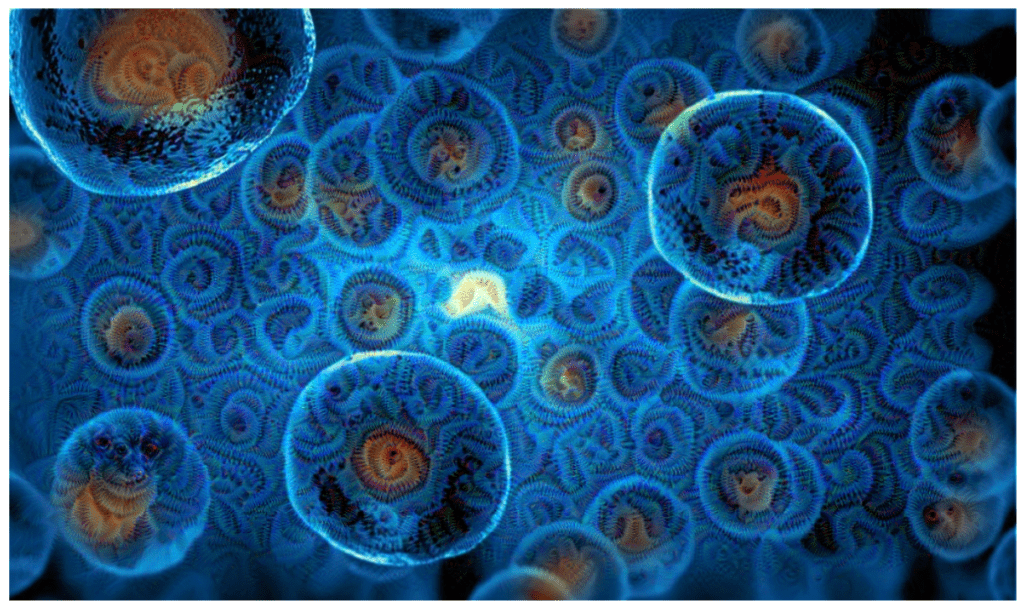

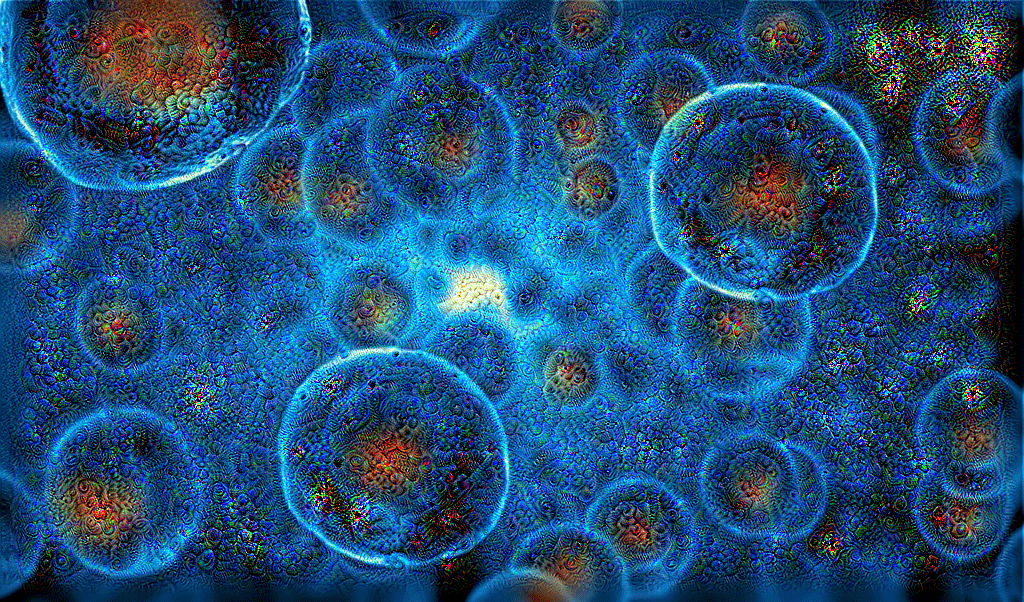

(max loss: 5, step: 0.7):

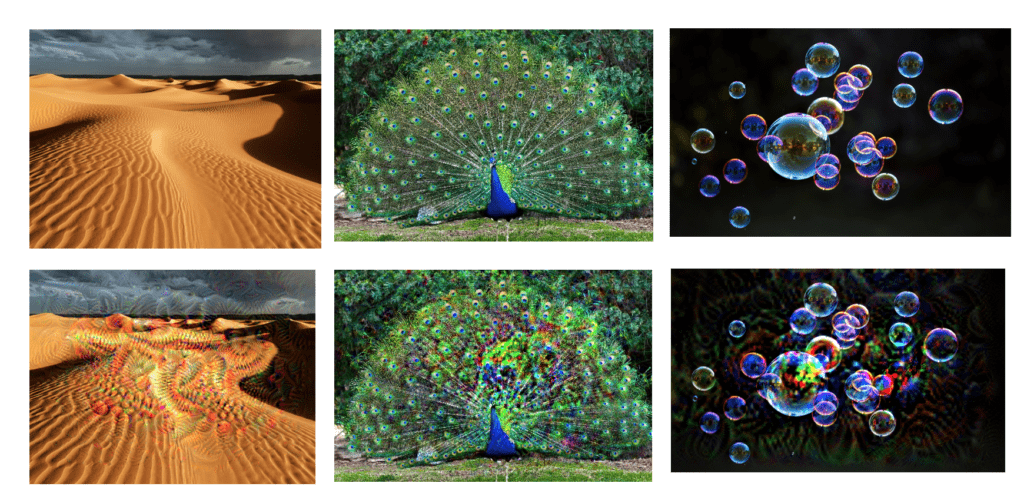

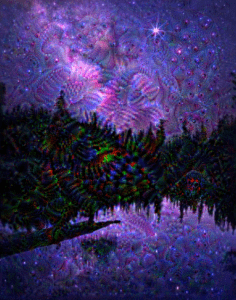
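
The way max loss and step interact can be shown with a small gradient-ascent loop. This is a minimal sketch that assumes the script follows the Keras Deep Dream example: each step adds `step` times the gradient to the image, and the loop stops early once the loss exceeds `max_loss`. The function `toy_loss_and_grad` is a hypothetical stand-in for the real activation-based loss, and a two-number list stands in for the image.

```python
def gradient_ascent(x, loss_and_grad, iterations, step, max_loss=None):
    """Push x uphill on the loss; stop early once the loss passes max_loss."""
    steps_taken = 0
    for _ in range(iterations):
        loss, grad = loss_and_grad(x)
        if max_loss is not None and loss > max_loss:
            break  # cap reached: stop before amplifying the pattern further
        # A larger step moves x further along the gradient on each iteration.
        x = [xi + step * gi for xi, gi in zip(x, grad)]
        steps_taken += 1
    return x, steps_taken


def toy_loss_and_grad(x):
    """Hypothetical stand-in loss L = sum(x_i^2) / 2, whose gradient is x itself."""
    loss = sum(xi * xi for xi in x) / 2
    return loss, list(x)


if __name__ == "__main__":
    for cap in (5, 20, None):
        _, steps = gradient_ascent([1.0, 1.0], toy_loss_and_grad,
                                   iterations=20, step=0.7, max_loss=cap)
        print(f"max_loss={cap}: {steps} steps")
```

With these toy numbers, a max loss of 5 stops after 2 steps and a max loss of 20 after 3, while no cap runs all 20 iterations. In this sketch, a lower max loss means fewer gradient computations, which is lighter on a limited CPU.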

Next, I looked at the individual layers to see how each one affected the output. Some layers produced different shapes and shifted colors in different ways. Finally, I worked with my own image (a photo of myself), inspired by Memo Akten’s “Journey Through The Layers of the Mind.” After each run of the model, I took the output image and fed it back in as the next input. Here are my results after repeating this process 6 times:

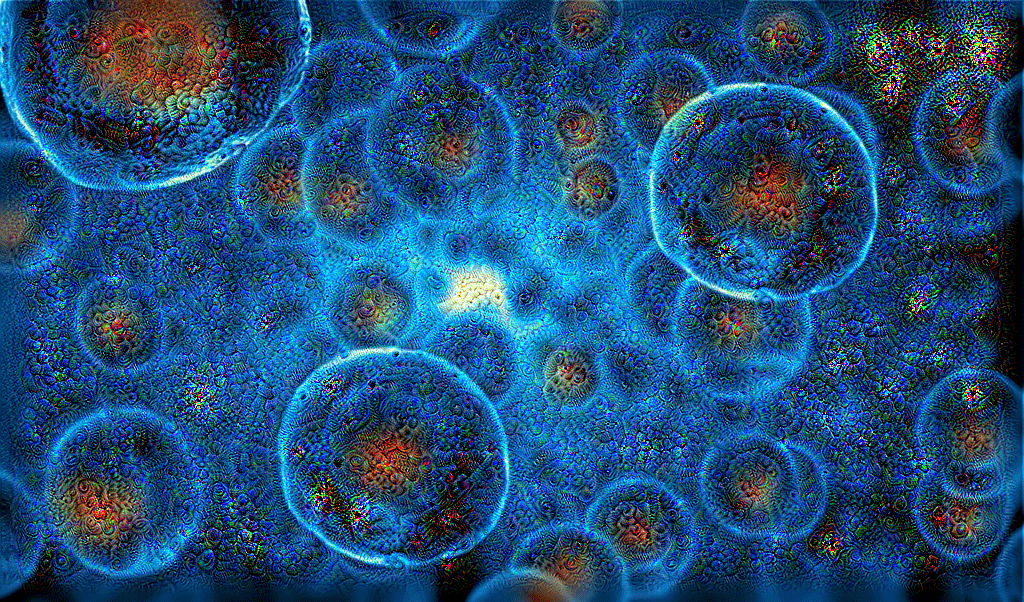

(max loss: 5, step: 0.7, num-octave: 5, octave-scale: 1, iterations: 20)

Layer weights: (mixed01: 2.5, mixed02: 1.5, mixed03: 0.5, mixed04: 0.5)
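
The feedback process above, combined with the octave settings, can be sketched as follows. This is a minimal sketch that assumes the script uses the octave schedule from the Keras Deep Dream example, where each octave is the original size divided by `octave_scale ** i`; `dream_once` is a hypothetical placeholder for one full Deep Dream run. Under that assumption, `octave-scale: 1` means every octave is processed at full size, so `num-octave: 5` amounts to five rounds of 20 iterations at the same resolution.

```python
def octave_shapes(original_shape, num_octave, octave_scale):
    """Image sizes processed per octave, smallest first, ending at full size."""
    shapes = [tuple(original_shape)]
    for i in range(1, num_octave):
        shapes.append(tuple(int(dim / (octave_scale ** i)) for dim in original_shape))
    return shapes[::-1]


def feedback_dream(image, dream_once, passes):
    """Run dream_once repeatedly, feeding each output back in as the next input."""
    outputs = []
    for _ in range(passes):
        image = dream_once(image)
        outputs.append(image)
    return outputs


if __name__ == "__main__":
    # octave_scale = 1: all five octaves share the original (height, width).
    print(octave_shapes((400, 300), num_octave=5, octave_scale=1))
    # For comparison, a scale of 1.4 shrinks the earlier octaves.
    print(octave_shapes((400, 300), num_octave=3, octave_scale=1.4))

    # Hypothetical stand-in for one Deep Dream run: raise every "pixel" by 1.
    toy_dream = lambda img: [p + 1 for p in img]
    print(feedback_dream([0, 0], toy_dream, passes=6)[-1])  # -> [6, 6]

    # Upper bound on gradient steps across all passes (max loss may stop rounds early).
    passes, num_octave, iterations = 6, 5, 20
    print(passes * num_octave * iterations)  # -> 600
```

Under these settings, the six passes allow up to 6 × 5 × 20 = 600 gradient steps in total, and fewer if max loss cuts a round short.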

You can see that the model picks out structural elements in the picture and begins to build its patterns around them. Overall, I found this model fun to work with: it’s easy to use and has many creative applications.
