I used the Deep Dream algorithm provided in class to transform the images from the previous style transfer exercise. This was the input:

This was the output:

Interactive Media Arts @ NYUSH

IML // Style Transfer

In this style transfer exercise, I wanted to try creating a model that would turn natural landscapes, such as mountains, into synthetic and artificial ones. The image I decided to use for my style transfer was this:

Content:

Style:

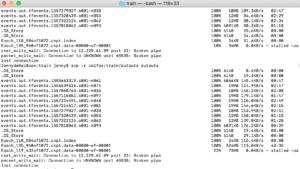

I tried to train the model by following the tutorial from the class slides. I prepared all the files, wrote the training shell script, and tried to submit it with qsub. However, every time I added it to the queue, I got a message along the lines of:

XXXX-qsub-07-ai-builder

Nothing happened afterwards, and I was immediately returned to the bash prompt. No training logs or error files were produced.
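As a side note, `qsub` normally prints a job identifier and returns to the prompt right away, and on PBS/Torque-style schedulers the log files usually appear only once the job has finished. The sketch below is one way to look for them afterwards. It assumes the scheduler's default naming (`<job name>.o<number>` for standard output and `<job name>.e<number>` for errors, written to the directory the job was submitted from); the helper names and the job name `train.sh` are hypothetical and not taken from the class tutorial.

```python
import glob
import os


def find_job_logs(directory, job_name):
    """Return PBS-style stdout/stderr log files for job_name, sorted by path.

    Assumption: default naming of <job_name>.o<number> (stdout) and
    <job_name>.e<number> (stderr) in the submission directory.
    """
    logs = []
    for stream in ("o", "e"):
        # Escape both parts so special characters are matched literally.
        pattern = os.path.join(
            glob.escape(directory),
            glob.escape(job_name) + "." + stream + "[0-9]*",
        )
        logs.extend(glob.glob(pattern))
    return sorted(logs)


def summarize_logs(paths):
    """Map each log file name to its size in bytes (0 means nothing was written)."""
    return {os.path.basename(p): os.path.getsize(p) for p in paths}


if __name__ == "__main__":
    # Hypothetical job name: the training script submitted with qsub.
    found = find_job_logs(".", "train.sh")
    if not found:
        print("No log files yet; the job may still be queued or running.")
    for name, size in summarize_logs(found).items():
        print(f"{name}: {size} bytes")
```

An empty result does not by itself mean the submission failed; it may only mean the job has not finished yet.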

After at least 10 attempts to train the network, I concluded that there was some other technical issue with my neural network setup.

I decided to follow a different tutorial instead. I found a style transfer algorithm in an IBM Watson neural network set that lets users train a neural network to transfer styles. The tutorial is available here:

https://www.youtube.com/watch?v=29S0F6GbcxU

These are the results:

Style Filter is an interactive installation powered by Arduino, p5.js and ml5.js. It provides a physical interface for applying neural style transfer filters to images, much as one would slide a square filter into a holder on a camera lens. Because multiple filter holders are provided, users are free to explore stacking filters on top of each other in various orders.

Continue reading “IML | Style Filter: Final Project Documentation – Yufeng”

Process

I decided to train CycleGAN with the monet2photo dataset for this week’s assignment. I followed all the steps, and it took three days to get the trained model. But when I started to download the model, I ran into a lot of difficulties. Perhaps because the checkpoint files are too large, my download stopped every time. I tried about 10 times and still failed to download the complete checkpoints. I then decided to switch back to our in-class example and see what it would produce with different input images.

Result

I imported some images by a photographer called David Ablehams, and the results, shown below, are satisfactory.

In this week’s assignment, I tried CycleGAN with the monet2photo model. The model trained for around 3.5 days. But downloading it from Intel Cloud was very frustrating. I attempted the download around 15 times over almost 2 days. Every time, either the connection broke or the download stalled because of the network.


Finally, the download succeeded. Following the instructions, I converted the model and froze the model graph. I then used this model on different pictures as an experiment. I first tried several of Monet’s paintings. Some of them worked pretty well, but others gave poor results. I also tried paintings by other Impressionist artists. Finally, I tried paintings by artists who are not Impressionists.