Overview

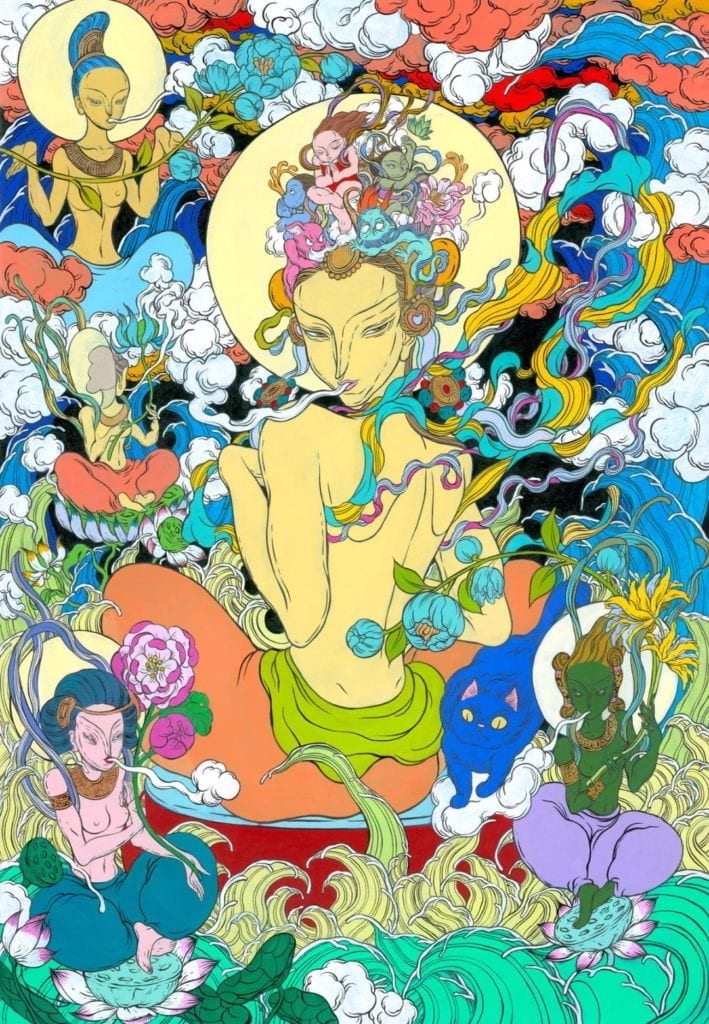

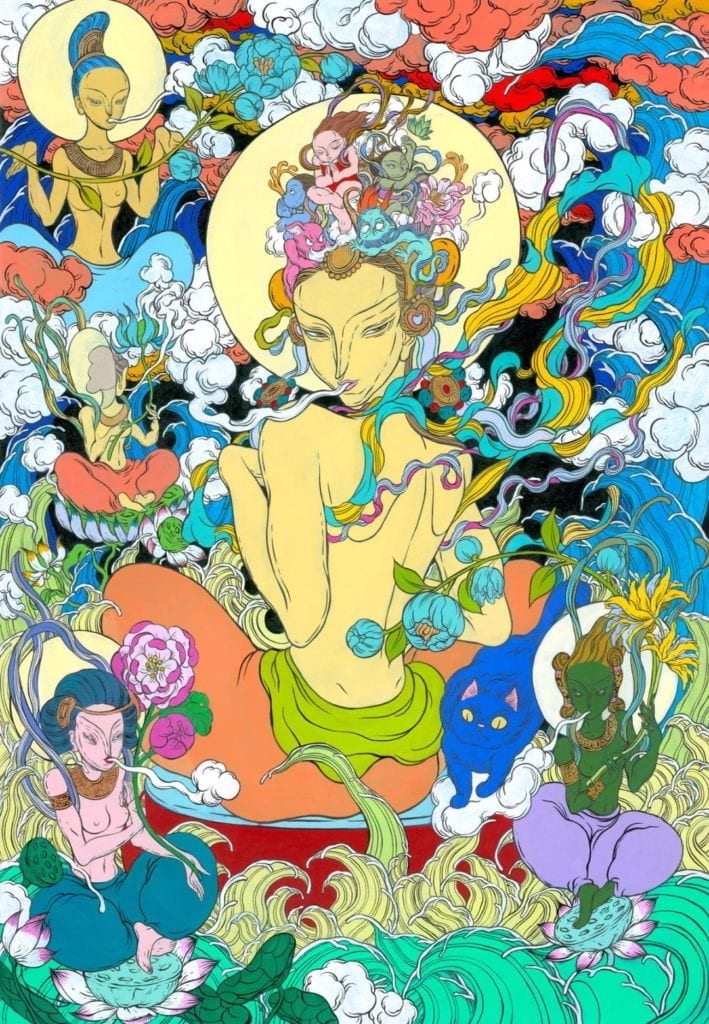

This week, I trained an ml5js style transfer model with devCloud services. I chose the engraving artwork “Thangka” by the artist Wenna as the target style for this model.


Interactive Media Arts @ NYUSH


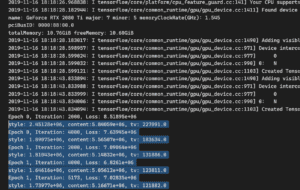

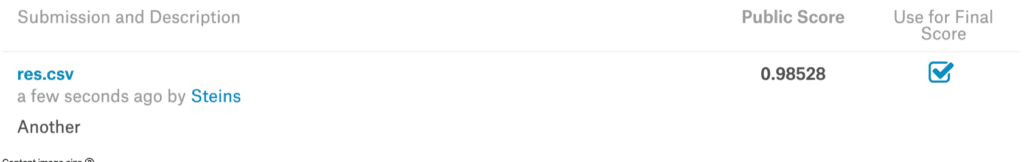

The training script for StyleTransfer depends on a fairly old TensorFlow version, which makes building the environment more difficult. Moreover, the training process uses the COCO dataset, which contains 14 GB of images, so training also takes a long time.

In my case, I trained on an RTX 2080 Ti. With two epochs and a batch size of 8, the training phase took about 3 hours. In general, that is very fast considering how many images COCO contains.
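To put the 3 hours in perspective, here is a rough back-of-the-envelope sketch of how many optimizer steps such a run involves. The image count of 80,000 is an assumption for illustration only (the post gives the dataset size as 14 GB, not the number of images), and `estimateTraining` is a hypothetical helper name.

```javascript
// Rough estimate of training steps and throughput.
// ASSUMPTION: imageCount is a placeholder value; the post does not state it.
function estimateTraining({ imageCount, epochs, batchSize, hours }) {
  const stepsPerEpoch = Math.ceil(imageCount / batchSize);
  const totalSteps = stepsPerEpoch * epochs;
  // Images processed per second, averaged over the whole run.
  const imagesPerSecond = (imageCount * epochs) / (hours * 3600);
  return { stepsPerEpoch, totalSteps, imagesPerSecond };
}

const est = estimateTraining({
  imageCount: 80000, // assumed, not from the post
  epochs: 2,
  batchSize: 8,
  hours: 3,
});
console.log(est.totalSteps);
console.log(est.imagesPerSecond.toFixed(1));
```

Under that assumption, the run amounts to 20,000 steps at roughly 15 images per second.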

Since this is an old script, I could not directly convert the result into a browser-compatible model. Instead, I used the ml5 script to do the conversion.

Below are some images I converted:

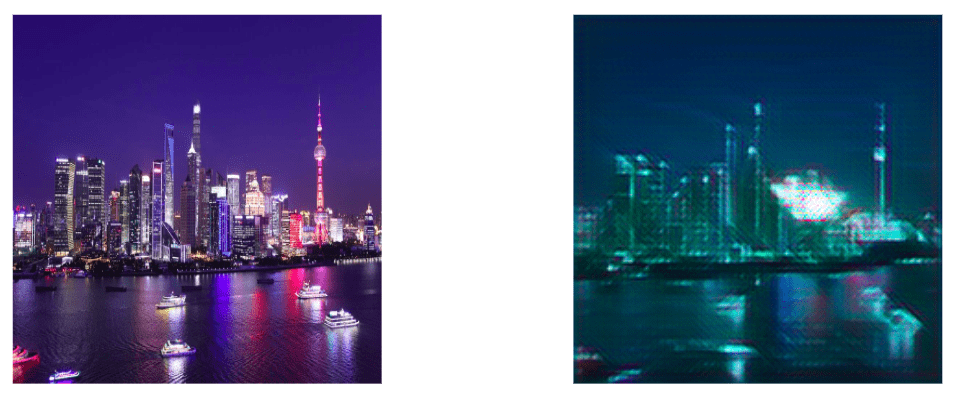

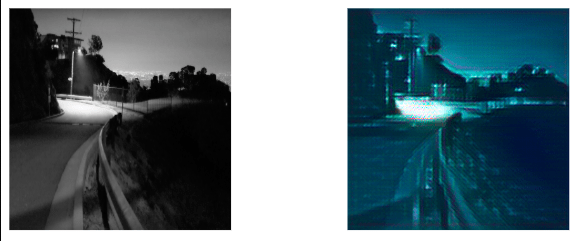

For this week’s assignment, I trained a style transfer model using a cyberpunk style image as my base. I personally fell in love with this genre thanks to movies such as Tron, Beyond the Aquila Rift and others. I wanted to create a model that would translate normal landscapes into something out of one of these movies.

When I began the project, I didn’t realize that it would take me such a long time to complete. Being on a Windows computer didn’t help either. I followed along with the slides provided to us; however, some of the lines of code did not work well in the Windows command terminal. Therefore, I resorted to moving my work onto a Mac.

From there, I downloaded the finished model and uploaded it into the folder that was provided to us. After starting the localhost server, I tested the finished work.

Here is some of my finished work:

Personally, I’m very satisfied with the results. It manages to change normal everyday landscapes into actual Tron images. However, I think that the result created a very digitized version of the landscapes. Understandably, there is a lot of loss and the accuracy might not be quite high, but it is safe to say that the model was able to perform better than expected.

The effect doesn’t really work with portraits as seen in this example. If given pictures of landscapes it works very well. I think that this model has potential and if given enough time to develop, it can potentially create even better examples of said images.

Overall, I think that I was able to recreate the cyberpunk aesthetic with the model. It went really well; however, there is a lot of room to improve. A larger sample size would give me a better result and translate the landscapes into a more accurate depiction of cyberpunk/Tron-esque landscapes. In my opinion, this idea is very feasible and definitely worth looking into.

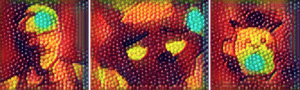

In this week’s assignment, I am going to train a styleTransfer model for ml5 on devCloud. I selected an image from Insider of portraits of people drawn using Skittles. This work of art binds people’s mentality with materials and soul, which creates a huge visual and social impact.

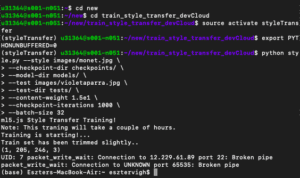

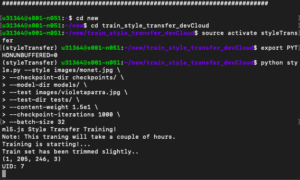

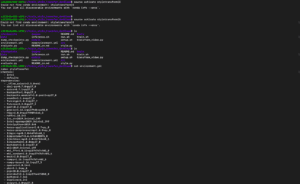

In this training assignment, I am going to train a style transfer network on one of the portraits so that it can transfer camera-roll photos into this artistic style. I followed the instructions in the slides and set up the environment on devCloud:

$ # Create SSH connection with devCloud

$ ssh devCloud

$ # Clone the training code from GitHub

$ git clone https://github.com/aaaven/train_style_transfer_devCloud.git

$ cd train_style_transfer_devCloud

$ # Set up virtual environment and training image

$ conda env create -f environment.yml

$ sed -i 's/u24235/u31325/g' train.sh

$ sed -i 's/styletransferml5/styleTransfer/g' train.sh

$ sed -i 's/zaha_hadid.jpg/train.jpeg/g' train.sh

$ curl -L 'https://image.insider.com/5cb7888866ae8f04915dccc8?width=2500&format=jpeg&auto=webp' -o images/train.jpeg

$ # Start training

$ qsub -l walltime=24:00:00 -k oe train.sh

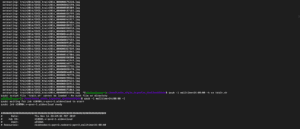

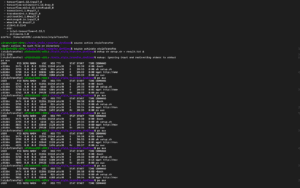

The commands above are the essential ones, extracted from the original slides to simplify the set-up process. After submitting the job, we can run qstat to check on the training progress. The output usually looks like this:

Job ID Name User Time Use S Queue

------------------------- ---------------- --------------- -------- - -----

410873.v-qsvr-1 train.sh u31325 07:16:30 R batch

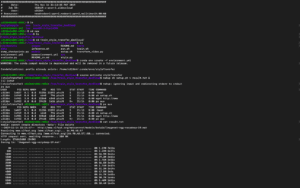

This indicates that the training is still in progress. If the command prints nothing after hours of waiting, the training process is done, and the model can be copied from devCloud:

$ # On my own laptop

$ scp -r devcloud:/home/u31325/train_style_transfer_devCloud/models myModels

The model is then saved locally in the myModels folder.
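The qstat check described above can also be automated. The following is a minimal sketch that inspects qstat's text output and reports whether the job has left the queue. It assumes the column layout shown in the sample output (Job ID, Name, User, Time Use, S, Queue); `parseQstat` and `isTrainingDone` are hypothetical helper names, not part of any devCloud tooling.

```javascript
// Decide whether a job still appears in qstat's plain-text output.
// ASSUMPTION: the output uses the column layout shown earlier
// (Job ID, Name, User, Time Use, S, Queue); other layouts are not handled.
function parseQstat(output) {
  return output
    .split("\n")
    .map((line) => line.trim())
    // Skip blank lines, the header row, and the dashed separator row.
    .filter((line) => line && !line.startsWith("Job ID") && !line.startsWith("-"))
    .map((line) => {
      const [jobId, name, user, timeUse, state, queue] = line.split(/\s+/);
      return { jobId, name, user, timeUse, state, queue };
    });
}

function isTrainingDone(output, jobName = "train.sh") {
  // An empty listing (or one without the job) means the job has left the queue.
  return !parseQstat(output).some((job) => job.name === jobName);
}

const sample = [
  "Job ID                    Name             User            Time Use S Queue",
  "------------------------- ---------------- --------------- -------- - -----",
  "410873.v-qsvr-1           train.sh         u31325          07:16:30 R batch",
].join("\n");

console.log(isTrainingDone(sample)); // false
console.log(isTrainingDone(""));     // true
```

Note that a job leaving the queue only means it is no longer running. It may have failed rather than finished, so it is still worth checking that the models folder exists before copying it.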

The next step is to load the model in ml5js and let it transfer the style of images. I followed the instructions here.


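// Grab the image element once so it serves as both input and output

const img = document.getElementById("img");

const style = ml5.styleTransfer("myModels/", modelLoaded);

function modelLoaded() {

style.transfer(img, function(err, resultImg) {

if (err) { console.error(err); return; }

img.src = resultImg.src;

});

}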

The JavaScript code will load models from the previously copied folder and run styleTransfer after the model is completely loaded.
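When several images need to be stylized one after another, nested callbacks get awkward. One option is to wrap the callback-style call in a Promise, as sketched below. This assumes only that the style object exposes `transfer(input, callback)` with an `(err, result)` callback, as in the snippet above. `transferAsync` and `fakeStyle` are hypothetical names, and `fakeStyle` is a stand-in so the sketch runs outside a browser.

```javascript
// Wrap the callback-style transfer call in a Promise.
// ASSUMPTION: `style` exposes transfer(input, callback) with an
// (err, result) callback, as used in the snippet above.
function transferAsync(style, input) {
  return new Promise((resolve, reject) => {
    style.transfer(input, (err, result) => {
      if (err) reject(err);
      else resolve(result);
    });
  });
}

// Hypothetical stand-in for the ml5 object so this runs without a browser.
const fakeStyle = {
  transfer(input, callback) {
    callback(null, { src: "stylized:" + input.src });
  },
};

transferAsync(fakeStyle, { src: "photo.jpg" }).then((result) => {
  console.log(result.src);
});
```

In the page itself, the same helper would be called with the real style object inside `modelLoaded`, for example `transferAsync(style, img).then((result) => { img.src = result.src; })`.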

I transferred some pictures and got the following results:

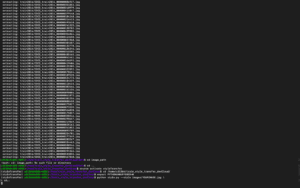

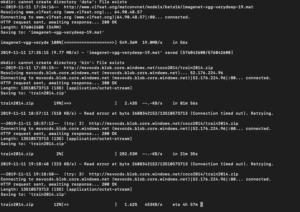

Training this project was difficult and resulted in several failed download attempts of the data set. The ultimate solution to this problem was manually downloading the two files and then manually unzipping both.

I have thoroughly taken screenshots of my failed attempts.

As you can see, despite my best efforts and several different time tests, there was no way to successfully download this assignment without hacking it (the computer science way, special thanks to Kevin!)

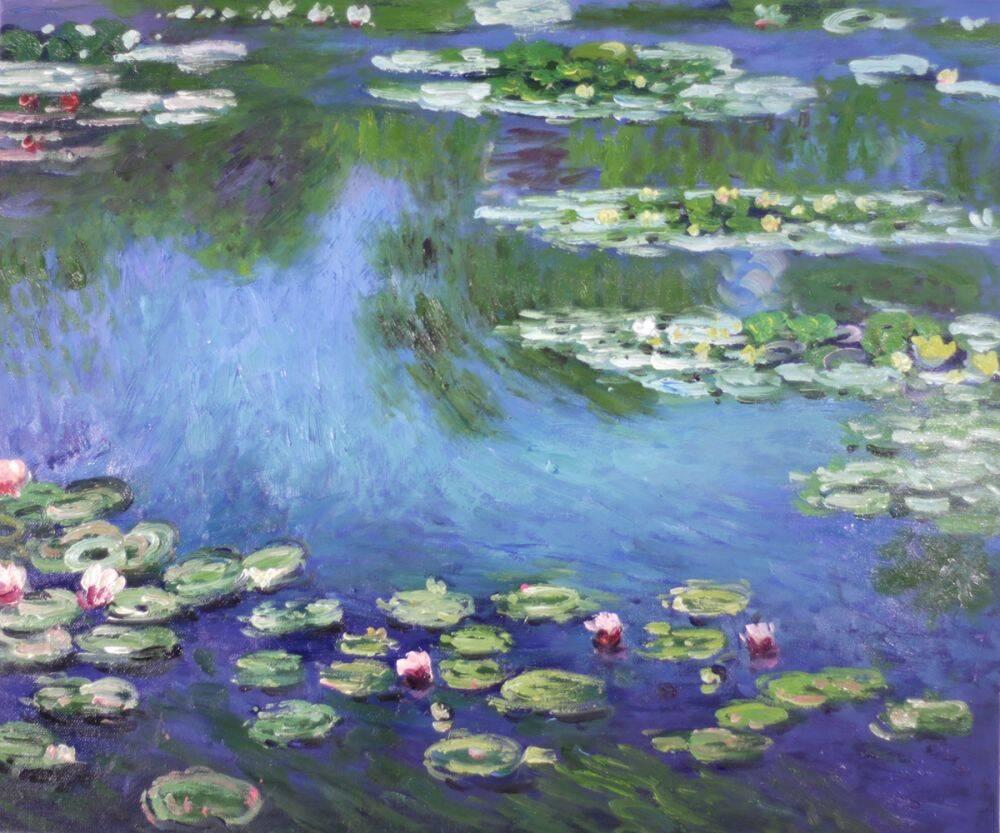

When it came to the image I wanted to use, I selected Monet’s Water Lilies. I thought it would work well since it has strong stylistic elements. This, too, failed: I couldn’t actually get an output. I am going to continue working on this issue next week to see if I can get an actual result.

This is my actual transfer failure! ^

I was hopeful, but honestly, I can’t restart this again… after spending an entire week just trying to get the model downloaded.

You can see the manual unzipping!

These are all samples of the manual code I used to try to make this happen! ^

This alone took half the day! ^

Finally some success! ^

My constant checking to make sure this way didn’t quit! ^

These were my attempts using Aven’s method! ^

I think there is potential to make it work. I am going to retry until I get some results. When I have those results I will add them here.