For this week’s assignment I experimented with BigGAN. In class we looked at how truncation affects single images, but I wondered how it would affect a video that morphs one object into another.

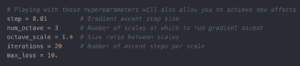

I wanted to morph an object into another of similar shape, so I first chose guacamole and ice cream with a truncation of 0.1. The result was…really disgusting.

Video: https://drive.google.com/file/d/1mAewM63SA8vT1rez3u7co2fDE3eP7d0C/view?usp=sharing

For some reason the guacamole barely changed at the beginning, and when it did start to morph into ice cream it just looked like moldy food. The final frame didn’t really look like ice cream either.

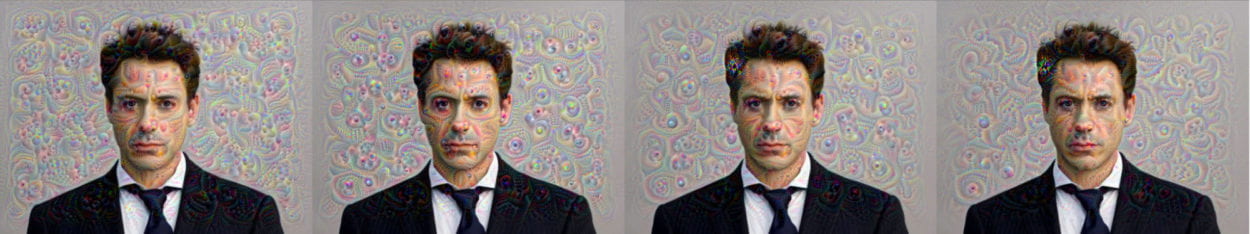

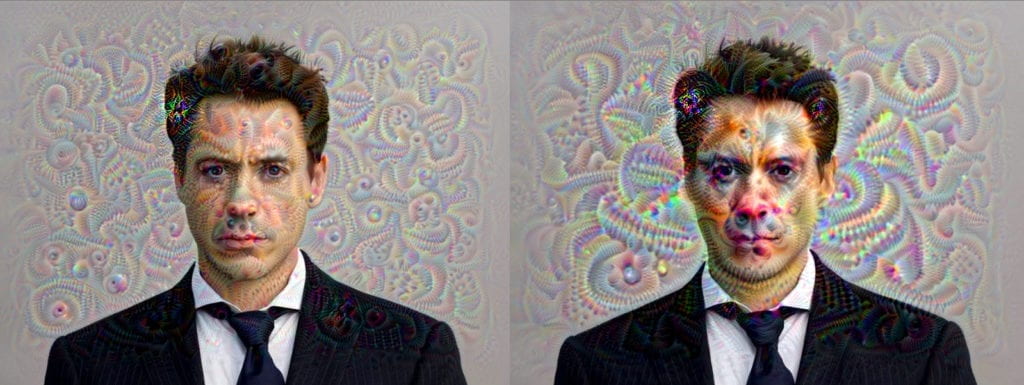

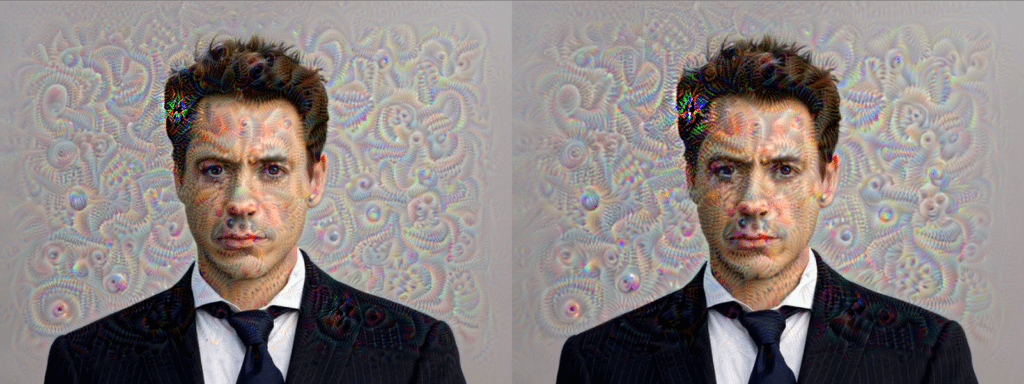

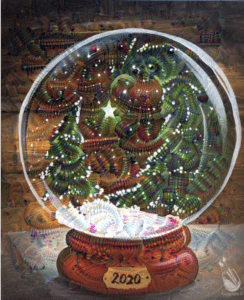

So…I switched the objects to a cucumber and a burrito, which worked a lot better. I created four videos with truncation values of 0.2, 0.3, 0.4, and 0.5, then arranged them in a collage so you could compare them side by side.

Though it’s subtle, you can definitely see differences between the four videos. In theory, the top left is 0.2, the top right is 0.3, the bottom left is 0.4, and the bottom right is 0.5; however, I’m not very well-versed in video editing, and when I assembled the collage in iMovie it was hard to tell which was which.
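For anyone curious how a morph like this can be set up, here is a rough sketch (not the exact code used for these videos). It assumes a BigGAN-style generator that takes a latent vector `z`, a 1000-way one-hot ImageNet class vector `y`, and a truncation value. Each endpoint `z` is drawn from a normal distribution clipped to [-2, 2] and scaled by the truncation, and both `z` and `y` are linearly interpolated between the start and end objects. The helper names and `dim_z=128` are assumptions, and the generator call itself is left out.

```python
import numpy as np


def truncated_z(dim_z, truncation, rng, bound=2.0):
    """Sample a standard-normal vector truncated to [-bound, bound], scaled by `truncation`."""
    z = rng.standard_normal(dim_z)
    out_of_range = np.abs(z) > bound
    while out_of_range.any():
        # Resample only the entries that fell outside the bound.
        z[out_of_range] = rng.standard_normal(out_of_range.sum())
        out_of_range = np.abs(z) > bound
    return truncation * z


def one_hot(class_index, num_classes=1000):
    """One-hot class vector (assumes 1000 ImageNet classes)."""
    y = np.zeros(num_classes)
    y[class_index] = 1.0
    return y


def interpolate(a, b, num_steps):
    """Linear interpolation from a to b; rows include both endpoints."""
    alphas = np.linspace(0.0, 1.0, num_steps)[:, None]
    return (1.0 - alphas) * a + alphas * b


def morph_inputs(class_a, class_b, truncation, num_frames=30, dim_z=128, seed=0):
    """Hypothetical helper: per-frame (z, y) inputs for morphing class_a into class_b."""
    rng = np.random.default_rng(seed)
    z_a = truncated_z(dim_z, truncation, rng)
    z_b = truncated_z(dim_z, truncation, rng)
    zs = interpolate(z_a, z_b, num_frames)
    ys = interpolate(one_hot(class_a), one_hot(class_b), num_frames)
    return zs, ys
```

Each row of `zs` and `ys`, passed to the generator together with the same truncation value, would give one frame of the video. A lower truncation shrinks `z` toward zero, which tends to produce more typical but less varied images.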