Background

Dancing With Strangers is a development of my midterm project, Dancing With a Stranger. In that project, I used the PoseNet model to mirror people's movements on the screen and exchange control of their legs. With my final project, Dancing With Strangers, I hope to create a communal dancing platform where every user logs on from their own terminal and everyone's movements are mirrored on the same shared platform. For the figure displayed on the screen, I plan to build an abstract figure based on the coordinates provided by PoseNet. The figure will illustrate the movements of the human body but will not look like a skeleton or a contour.

Motivation

My motivation for this final project is similar to that of my midterm project: interaction with electronic devices can pull us closer, but it can also make us drift apart, so using these devices to strengthen the connections between people becomes necessary. Dancing, in every culture, is the best way to bring different people together, and a communal dancing platform can achieve this goal. In my midterm project, the stick figure I created was too specific, and in a way, being specific means assimilation. Yet people are very different, and they move differently. Therefore, I don't want to use the conventional stick figure to illustrate body movement. Abstraction provides us with diversity: without the boundary of the human torso, people can express themselves more freely.

Reference

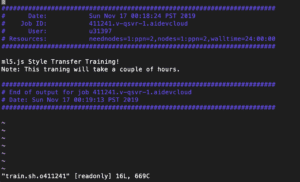

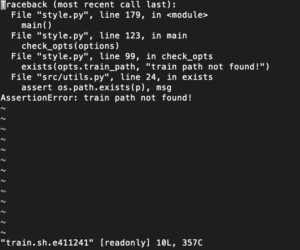

To build the communal dancing platform, I'm using a Firebase server as a data collector that records the live data sent by different users from their own terminals.
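To make the data-collector idea concrete, here is a minimal sketch of the kind of record each terminal might send and how the latest pose per user could be kept. It does not use the Firebase API: `PoseRoom` is an in-memory stand-in, and the names `Keypoint`, `PoseUpdate`, `publish`, and `snapshot` are hypothetical, with a simplified keypoint shape rather than PoseNet's exact output.

```typescript
// A simplified, hypothetical keypoint: a named body part with screen coordinates.
interface Keypoint { part: string; x: number; y: number; }

// What one terminal might send on each frame (assumed shape, not a Firebase schema).
interface PoseUpdate { userId: string; timestamp: number; keypoints: Keypoint[]; }

// In-memory stand-in for the shared store: it keeps the latest pose per user.
class PoseRoom {
  private latest = new Map<string, PoseUpdate>();

  // Store an update only if it is newer than the one already held for that user.
  publish(update: PoseUpdate): void {
    const current = this.latest.get(update.userId);
    if (!current || update.timestamp > current.timestamp) {
      this.latest.set(update.userId, update);
    }
  }

  // Everything a client needs to draw all dancers on one screen.
  snapshot(): PoseUpdate[] {
    return Array.from(this.latest.values());
  }
}
```

In a real deployment, the shared database would play the role of `PoseRoom`, and each client would redraw whenever the stored data changes.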

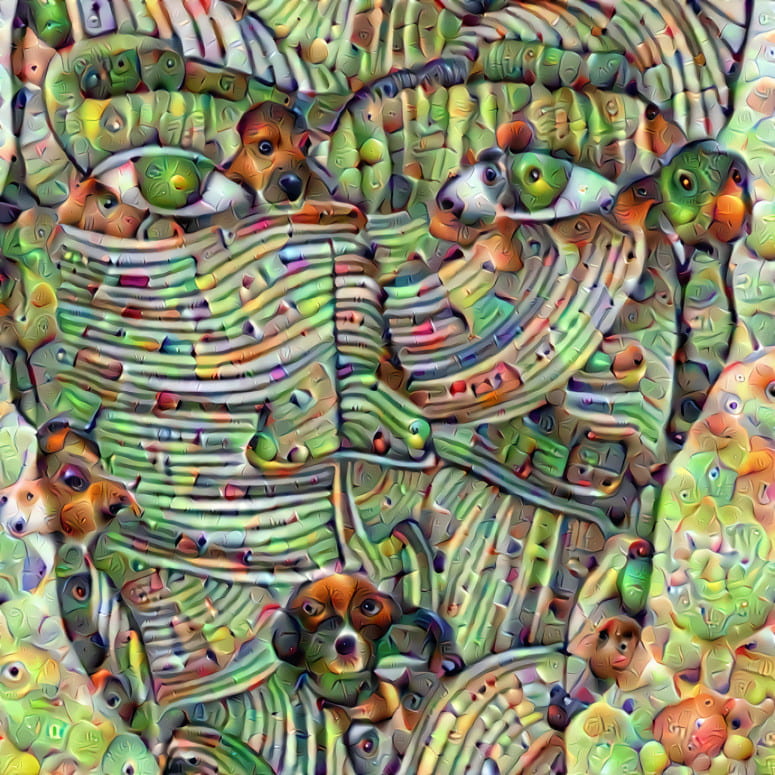

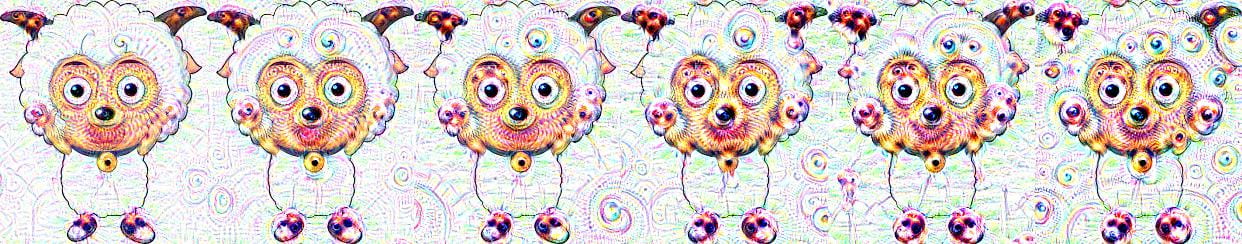

The inspiration for my abstract figure comes from the artist and designer Zach Lieberman. One of his series depicts human body movement in a very abstract way: it tracks the speed of the movements, and the patterns illustrate the changes in speed by changing size. With simple lines, Bézier curves, and patterns, he creates various dancing shapes that are aesthetically pleasing. I plan to achieve similar results in my final project.
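The speed-to-size idea can be sketched in a few lines. This is only an illustration of the mapping described above, not Lieberman's actual method: the function names and the default values for `maxSpeed`, `minSize`, and `maxSize` are assumptions chosen for the example.

```typescript
// One tracked point (for example, a wrist) in screen pixels.
interface Point { x: number; y: number; }

// Speed in pixels per second between two frames that are dtSeconds apart.
function speed(prev: Point, curr: Point, dtSeconds: number): number {
  if (dtSeconds <= 0) return 0; // guard against a zero or negative time step
  return Math.hypot(curr.x - prev.x, curr.y - prev.y) / dtSeconds;
}

// Linearly map a speed onto a shape size, clamped to [minSize, maxSize].
// The default limits are arbitrary illustration values.
function sizeForSpeed(s: number, maxSpeed = 1000, minSize = 10, maxSize = 80): number {
  const t = Math.min(Math.max(s / maxSpeed, 0), 1);
  return minSize + t * (maxSize - minSize);
}
```

Each frame, the size of a pattern attached to a body part could be set to `sizeForSpeed(speed(prev, curr, dt))`, so that faster movements draw larger shapes.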

Some works by Zach Lieberman