Slides for the presentation: https://drive.google.com/open?id=1kigOl5IQ15UO5NGDD3uHTJ4GrR2BLlJ-OUPsYl3Zp-A

Background:

I have always been a fan of the sci-fi genre. As a child, I would daydream about flying cars and neon lights in the city. However, as I grew up, I began to look past the aesthetic of the genre and at the implications of a cyber-heavy society. Movies such as Blade Runner and Tron show a dystopian society in which the world is heavily shaped by the lack of human-to-human interaction. This is partly due to the effect of technology. The more unsupervised technological breakthroughs occur, the higher the chances that they will affect our day-to-day lives for the worse.

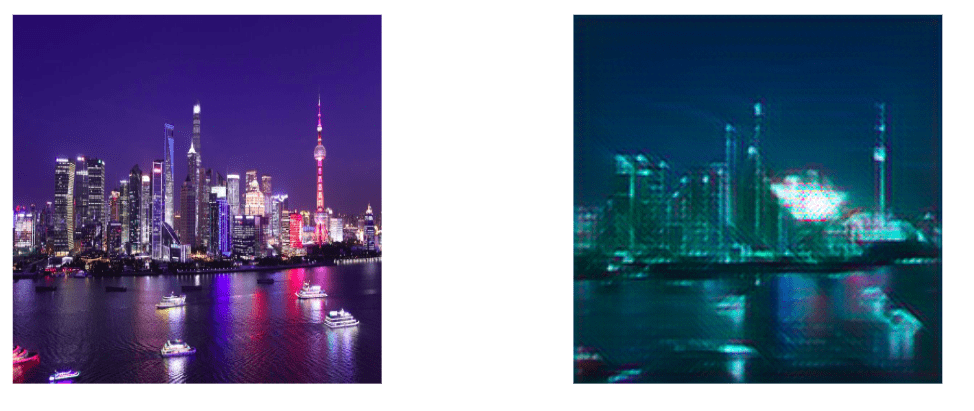

The cyberpunk sub-genre immediately reflects the disparity between humans and machines within our society. The dystopian aspect of the genre can be seen in a few aesthetic themes common to the sub-genre: dark skies, unnatural neon lights, near-empty streets, and so on.

These aesthetic choices reflect the dystopian society by pairing a naturally dark and gloomy setting with unnatural, man-made neon lights. This showcases how humans have drifted away from the natural world and attempted to replicate it with their own creations.

Motivation:

I want to show the eerie beauty of the genre to everyone else. I want people to see what I see when I walk around a megacity like Shanghai. The future depicted in these media is truly breathtaking; however, a glimpse past the veil of technology shows a terrifying future.

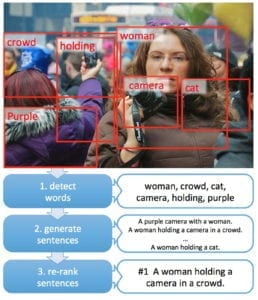

I want to use what I know about machine learning and AI to showcase my vision to others around me. Sometimes words fail me and I can’t clearly explain what I see. But thanks to what we have been learning in class, I can finally show what I mean.

Reference:

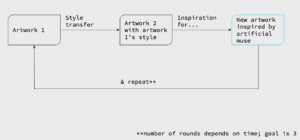

I will use StyleTransfer to train models that generate the city skylines I want.

I also want to use BodyPix to separate the human bodies from the backgrounds through segmentation. By doing so, I will be able to apply two different StyleTransfer models, one to the people and one to the background, which will help my vision come true. However, to showcase this, I might need to take a video of the city in order to actually show what the model can do.
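
To make the idea concrete, here is a rough sketch of the final compositing step only. It assumes that each video frame has already been stylized twice (once per StyleTransfer model) and that the segmentation step has produced a per-pixel mask where 1 marks a person and 0 marks the background. The function name `composite_by_mask` and the nested-list image format are hypothetical and chosen purely for illustration; they are not part of BodyPix or StyleTransfer.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B)
Image = List[List[Pixel]]      # rows of pixels
Mask = List[List[int]]         # assumed format: 1 = person, 0 = background


def composite_by_mask(person_styled: Image,
                      background_styled: Image,
                      mask: Mask) -> Image:
    """Combine two stylized versions of the same frame.

    Where the mask is 1 the pixel comes from `person_styled`;
    everywhere else it comes from `background_styled`.
    """
    height = len(mask)
    if len(person_styled) != height or len(background_styled) != height:
        raise ValueError("images and mask must have the same height")

    output: Image = []
    for y in range(height):
        width = len(mask[y])
        if len(person_styled[y]) != width or len(background_styled[y]) != width:
            raise ValueError("images and mask must have the same width")
        # Choose the source image pixel by pixel according to the mask.
        row = [
            person_styled[y][x] if mask[y][x] else background_styled[y][x]
            for x in range(width)
        ]
        output.append(row)
    return output


# Tiny 2x2 example: a "neon" style for people, a "dark" style for the city.
neon = [[(255, 0, 255), (255, 0, 255)], [(255, 0, 255), (255, 0, 255)]]
dark = [[(10, 10, 30), (10, 10, 30)], [(10, 10, 30), (10, 10, 30)]]
person_mask = [[1, 0], [0, 1]]
frame = composite_by_mask(neon, dark, person_mask)
```

For a video, the same step would run once per frame, with a fresh mask computed for each frame.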